What is data integration? Unveiling the process and its impact

What is data integration?

Data integration refers to the process of bringing together and consolidating data from multiple disparate sources across an organization into a unified, consistent view that can be reliably used for analytical, operational, and other business purposes. At its core, data integration focuses on eliminating fragmented data silos and providing users with seamless access to complete, accurate, and timely data sets for business intelligence, reporting, analytics, and more.

Specifically, it involves establishing connectivity to relevant systems housing valuable business data, extracting that data through batch or real-time methods, transforming it to match business needs, and loading it into target repositories such as data warehouses, lakes, and marts that power analytics. Robust data integration forms the crucial foundation that breaks down data silos to fuel data-driven processes and decisions.

Importance and benefits of data integration

With data now recognized as one of any enterprise’s most vital assets, the ability to reliably consolidate and deliver high-quality integrated data conveys tremendous advantages. Data integration essentially provides business users with a comprehensive 360-degree view for tracking KPIs, uncovering insights, optimizing workflows, personalizing customer experience, and enabling more informed data-driven decision making.

By unifying data from across departments, systems, and external sources, data integration lays the groundwork for analytics tools to generate tremendous value. It brings together customer, product, financial, and operational data domains into trusted single sources of truth that both technical and business users can leverage for multiple objectives. Specific benefits provided by capable data integration platforms and governance procedures include:

Higher quality business information: Consolidating data from multiple systems while handling redundancy, duplication, and consistency issues results in clean master data sets that accurately reflect key performance indicators and the state of the business. This ensures stakeholders can trust analytics reporting.

Accelerated analytics and business processes: Directly delivering integrated data to data analysts, data scientists, and business users allows them to bypass lengthy manual data entry, sourcing, and preparation steps, enabling faster time-to-insight and decision making.

Reduced data silos and duplication: Bringing reconciled data together reduces fragmented silos locked within departments. It also minimizes redundant copies of the same data held in separate storage systems that drift out of sync. This promotes collaboration around a unified view of data.

Greater operational efficiency: With integrated data readily available, less time is wasted trying to manually gather, combine, and cleanse data required for different analytics, reporting, and operational tasks.

Advanced analytics enablement: Integrated big data, IoT sensor data, customer engagement logs, etc. fuel advanced analytics around predictive modeling, machine learning, and AI to drive innovation.

With these crucial advantages spanning quality, access, productivity, and cutting-edge functionality, a well-designed data integration system is indispensable for competing as a modern data-centric organization.

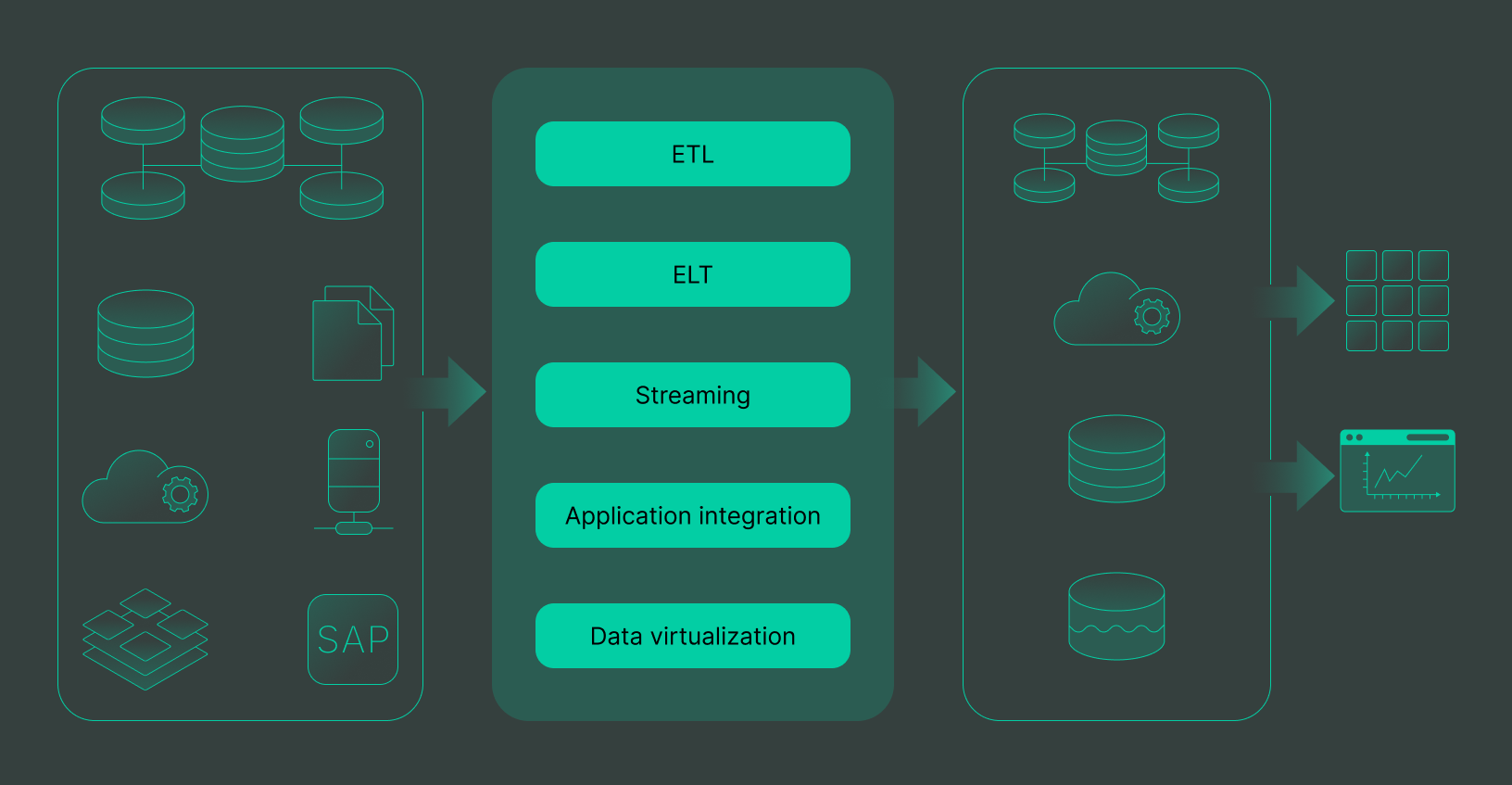

How data integration works

While methods and technical implementations to integrate data may vary across tools, the overarching data integration process comprises several key steps:

Identifying data sources: The first step catalogs transactional systems, databases, SaaS applications, and other sources with potentially valuable data distributed across business units. Critical details around update frequency, data structures, access methods, security constraints, etc., get gathered at this stage.

Extracting data: Specialized connectors, scripts, or APIs then facilitate batch or real-time data extraction from designated sources via approaches including ETL, ELT and streaming pipelines. Properly understanding source systems greatly aids this process.

Transforming data: Upon extraction, automation tools cleanse, filter, aggregate, encrypt, and otherwise conform and transform datasets to match the schema required by target systems and for downstream business use. Many data quality issues, such as incompleteness, inconsistency, and duplication, get handled here.

Loading data: Now the transformed datasets flow and get loaded into target repositories and systems like cloud data warehouses, data lakes, marts, or operational databases for consumption.

Synchronizing data: To ensure accuracy amidst ongoing upstream data changes, efficient routines propagate inserts, updates, and deletes to incrementally sync the integrated data in downstream platforms at suitable frequencies ranging from near real time to daily.

Governing data: Holistic data governance spans setting policies, maintaining metadata, applying security rules, tracing data lineage and properly managing the data integration tool and process from development through operation. This brings structure and oversight.

At their core, these key steps center on using the right tools and techniques to connect multiple source systems housing valuable data with target systems requiring that data, moving, transforming or virtually providing access to unified, standardized data between the two. Integration platforms and languages like Informatica, Snowflake Streams, and SQL aid automation.
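The extract, transform, and load steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the sample rows, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all hypothetical, and an in-memory SQLite database stands in for the target repository.

```python
import sqlite3

# Hypothetical source rows, standing in for data pulled from a source system.
SOURCE_ROWS = [
    {"order_id": 1, "customer": " Alice ", "amount": "19.99"},
    {"order_id": 2, "customer": "bob", "amount": "5.00"},
    {"order_id": 2, "customer": "bob", "amount": "5.00"},  # duplicate record
    {"order_id": 3, "customer": "Carol", "amount": None},  # incomplete record
]


def extract():
    """Extract: return raw rows from the (simulated) source."""
    return list(SOURCE_ROWS)


def transform(rows):
    """Transform: drop incomplete rows, deduplicate, and conform types."""
    seen = set()
    clean = []
    for row in rows:
        if row["amount"] is None:
            continue  # completeness check
        if row["order_id"] in seen:
            continue  # duplication check
        seen.add(row["order_id"])
        clean.append(
            (row["order_id"], row["customer"].strip().title(), float(row["amount"]))
        )
    return clean


def load(conn, rows):
    """Load: write conformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline():
    """Run extract, transform, and load, then read back the target table."""
    conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
    load(conn, transform(extract()))
    return conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
```

Calling `run_pipeline()` leaves only the two complete, unique orders in the target table. A real pipeline would replace `extract` with a connector to the actual source and add the synchronization and governance steps described above.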

Data integration approaches

From batch ETL pipelines to real-time streaming buses to cloud data platforms, several core approaches enable moving data:

Extract, Transform, Load (ETL)

The extract, transform and load (ETL) approach provides flexible batched movement of relational data sets using customized business logic. It extracts data from sources, applies complex transformations like deduplication and cleansing in a specialized environment, and then loads the resulting dataset into target data warehouses, marts, or lakes. ETL works well for small to moderately sized data volumes requiring substantial manipulation. Cloud data integration services increasingly host and manage ETL processes.

Extract, Load, Transform (ELT)

Extract, load, transform (ELT) is an emerging technique that follows a more linear flow suited for big data. It extracts large data sets, and then directly loads them into a high performance target system such as a cloud data warehouse or lake, leveraging their inherent scale-out processing capacity to transform the data. By postponing transformations, ELT better fits bigger and faster changing data volumes where some level of raw data access enables advanced analysis. It reduces resource overhead.
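To make the difference from ETL concrete, here is a small ELT sketch. It assumes an in-memory SQLite database as a stand-in for a cloud data warehouse, and the table names `raw_events` and `daily_revenue` and the sample rows are invented for illustration. The raw data is loaded untouched, and the transformation runs afterwards as SQL inside the target engine.

```python
import sqlite3

# Hypothetical extracted rows; amounts arrive as raw text from the source.
RAW_EVENTS = [
    ("2024-01-01", "alice", "12.50"),
    ("2024-01-01", "bob", "7.25"),
    ("2024-01-02", "alice", "3.00"),
]


def load_raw(conn, rows):
    """Load step: land the extracted rows unchanged in a raw table."""
    conn.execute("CREATE TABLE raw_events (day TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_events VALUES (?, ?, ?)", rows)


def transform_in_target(conn):
    """Transform step: runs inside the target engine, after loading."""
    conn.execute(
        "CREATE TABLE daily_revenue AS "
        "SELECT day, SUM(CAST(amount AS REAL)) AS revenue "
        "FROM raw_events GROUP BY day"
    )


def run_elt():
    """Load raw data first, then derive an analytics table from it."""
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
    load_raw(conn, RAW_EVENTS)
    transform_in_target(conn)
    return conn.execute(
        "SELECT day, revenue FROM daily_revenue ORDER BY day"
    ).fetchall()
```

Because `raw_events` is kept, analysts can still query the untransformed data or define further derived tables later without re-extracting from the source.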

Streaming data integration

For use cases involving internet-scale device or log data that fluctuate rapidly and require real-time analytics, streaming data integration consumes messages from Kafka, MQTT and other messaging buses to propagate data changes continuously between systems instead of standard interval batch jobs. Distributed engines help handle the load.
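The following sketch illustrates the continuous, message-at-a-time pattern described above. It does not use a real Kafka or MQTT client: a standard-library `queue.Queue` stands in for the messaging bus, a plain dictionary stands in for the downstream system, and the names `producer`, `consumer`, `run_stream`, and `SENTINEL` are hypothetical.

```python
import queue
import threading

SENTINEL = None  # hypothetical end-of-stream marker, for this demo only


def producer(bus, readings):
    """Publish each sensor reading to the bus as soon as it is available."""
    for reading in readings:
        bus.put(reading)
    bus.put(SENTINEL)


def consumer(bus, target):
    """Consume messages one at a time and update the target immediately."""
    while True:
        message = bus.get()
        if message is SENTINEL:
            break
        sensor_id, value = message
        target[sensor_id] = value  # keep the latest value per sensor


def run_stream(readings):
    """Wire a producer and a consumer together through the simulated bus."""
    bus = queue.Queue()  # stand-in for a Kafka topic or MQTT broker
    target = {}          # stand-in for the downstream system
    worker = threading.Thread(target=consumer, args=(bus, target))
    worker.start()
    producer(bus, readings)
    worker.join()
    return target
```

For example, `run_stream([("s1", 20.5), ("s2", 18.0), ("s1", 21.0)])` leaves the target holding the most recent reading for each sensor. In a real deployment the bus would be durable and partitioned, and the consumer would run indefinitely rather than stop at a sentinel.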

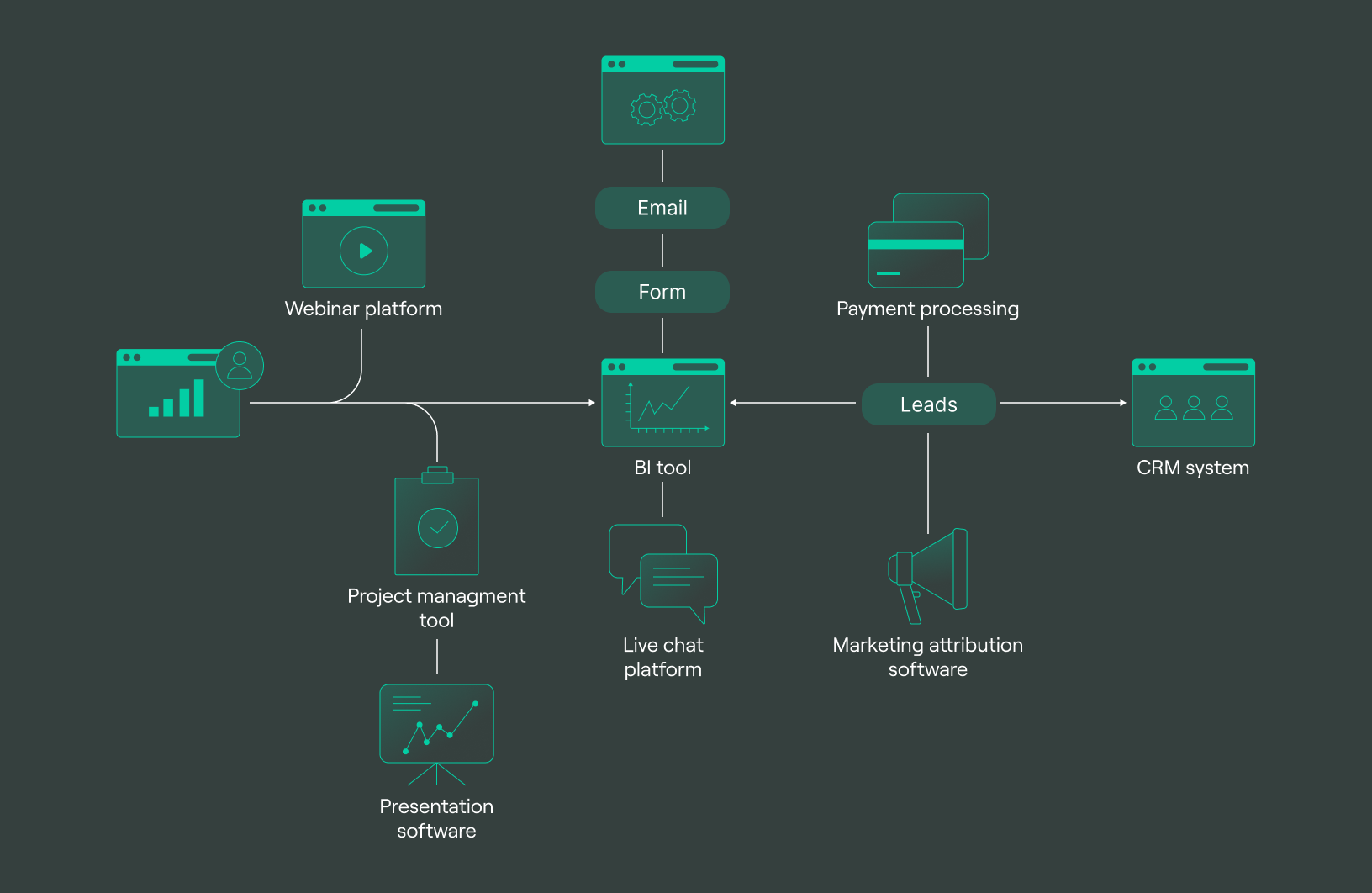

Application integration

Connecting critical software systems directly via application programming interfaces (APIs) allows point-to-point data synchronization used primarily for operational consistency rather than analytics (e.g. HR system to payroll system). Specialized integration middleware helps develop, secure, manage, and monitor APIs and handles exception conditions via administrative UIs.

Data virtualization

When moving large data sets is unnecessary or infeasible, data virtualization provides a consolidated 360-degree view by abstracting and logically integrating data across siloed sources. Users and applications access data through this unified set of views, tables, and APIs without requiring the underlying data to be physically replicated or transformed first. The virtual layer maps each query onto the underlying sources at request time.
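As a rough illustration of the idea, the sketch below uses SQLite's `ATTACH DATABASE` to treat two separate in-memory databases as siloed sources and defines a temporary view over both. The schema names `crm` and `billing`, the tables, and the view `customer_360` are assumptions made up for this example. Real virtualization platforms federate queries across heterogeneous systems, which SQLite does not do.

```python
import sqlite3


def build_virtual_layer():
    """Create two simulated source systems and a view that spans both."""
    conn = sqlite3.connect(":memory:")
    # Two separate in-memory databases stand in for siloed source systems.
    conn.execute("ATTACH DATABASE ':memory:' AS crm")
    conn.execute("ATTACH DATABASE ':memory:' AS billing")

    conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
    conn.executemany(
        "INSERT INTO crm.customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")]
    )
    conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, total REAL)")
    conn.executemany(
        "INSERT INTO billing.invoices VALUES (?, ?)",
        [(1, 40.0), (1, 10.0), (2, 5.0)],
    )

    # The view stores no data; each query is resolved against the sources.
    conn.execute(
        "CREATE TEMP VIEW customer_360 AS "
        "SELECT c.id, c.name, SUM(i.total) AS billed "
        "FROM crm.customers AS c "
        "JOIN billing.invoices AS i ON i.customer_id = c.id "
        "GROUP BY c.id, c.name"
    )
    return conn
```

Querying `customer_360` always reflects the current contents of both sources, so a new invoice inserted into `billing.invoices` shows up in the next query with no copy or reload step.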

Other approaches

Data replication propagates synchronized copies of defined data sets across systems to provide redundancy for backup, disaster recovery, and cross-region availability use cases, rather than providing data integration on its own. Replication tools still play an important role in data integration by keeping physically duplicated data sets consistent.

Data integration use cases

Top scenarios with popular and established needs for capable data integration systems include:

Central analytics repositories

Populating centralized enterprise data warehouses, marts, and lakes enables building trustworthy single sources of truth for business intelligence and advanced analytics, such as machine learning, to guide decisions. This requires automating complex batch pipelines (ETL/ELT) and routine incremental updates.

Customer 360° views

Fully understanding customers by consolidating CRM records, sales transactions, contact center logs, website interactions, and other data sources provides complete visibility of engagement across departments. This helps sales, service, marketing etc. better personalize interactions.
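A simplified way to picture this consolidation is a merge keyed on a shared customer identifier. The sketch below assumes every source already carries a matching `customer_id`, which is often the hard part in practice. The sample records and the function name `build_customer_360` are hypothetical.

```python
from collections import defaultdict

# Hypothetical records from three departmental systems.
CRM = [{"customer_id": 1, "name": "Alice"}, {"customer_id": 2, "name": "Bob"}]
SALES = [{"customer_id": 1, "amount": 30.0}, {"customer_id": 1, "amount": 12.0}]
SUPPORT = [{"customer_id": 2, "ticket": "Login issue"}]


def build_customer_360(crm, sales, support):
    """Merge per-system records into one profile per customer."""
    profiles = defaultdict(
        lambda: {"name": None, "total_spend": 0.0, "tickets": []}
    )
    for rec in crm:
        profiles[rec["customer_id"]]["name"] = rec["name"]
    for rec in sales:
        profiles[rec["customer_id"]]["total_spend"] += rec["amount"]
    for rec in support:
        profiles[rec["customer_id"]]["tickets"].append(rec["ticket"])
    return dict(profiles)
```

With the sample data, customer 1 ends up with a combined spend across both sales records and no tickets, while customer 2 has one support ticket and no spend.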

Data lifecycle automation

Given the ever-increasing size of data, solutions that accelerate the creation of analytics-ready data, such as enterprise-grade cloud data warehouses, provide self-service automation around key lifecycle steps. This includes automated ETL pipeline configuration once relevant data sources are identified, change data capture, propagation of updates to facts and dimensions, etc. This boosts productivity by codifying recurring tasks.
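Change data capture can be pictured as replaying an ordered log of inserts, updates, and deletes against the target instead of reloading everything. The sketch below is one possible shape for that replay. The event format `(operation, key, row)` and the function name `apply_changes` are assumptions for illustration, not the format of any particular tool.

```python
def apply_changes(target, changes):
    """Apply an ordered list of change events to a target keyed by primary key.

    Each event is a hypothetical (operation, key, row) tuple.
    """
    for op, key, row in changes:
        if op in ("insert", "update"):
            target[key] = row          # upsert the latest version of the row
        elif op == "delete":
            target.pop(key, None)      # tolerate deletes of missing keys
        else:
            raise ValueError(f"unknown operation: {op}")
    return target
```

For instance, applying an insert of key 3, an update of key 1, and a delete of key 2 to `{1: "old", 2: "stale"}` leaves only keys 1 and 3, with key 1 holding its updated row. Order matters: the same events replayed in a different sequence can produce a different final state.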

Big data management

Deriving value from big data, with its characteristic variety, volume, and velocity, means combining data from multiple sources spanning IoT sensors, web and social media activity, etc. This depends on cost-effective data lakes built purposefully to land, store, and refine previously trapped data. Data integration tames complex semi-structured data.

Data integration challenges

While able to deliver tremendous value around analytics-fueled decision making, compliant operations, optimized interactions and innovation, smooth end-to-end data integration efforts face multiple pragmatic challenges:

Overwhelming volume and growth: Relentlessly accumulating raw structured, semi-structured, and unstructured data of variable quality flooding in from countless customer engagement systems, IoT sensors, operational logs, and third party signals threatens to bottleneck legacy batch data integration tools. Important signals risk getting drowned out, unless the data repository is filtered on a regular basis.

Siloed systems and inconsistent data: Mergers, uneven data management maturity across decentralized teams, and isolated vendor selections over time create disconnected architectural complexity across business units. Ingesting data of inconsistent quality stored in formats such as relational tables, document stores, specialized analytical engines, etc., becomes cumbersome.

Blending streaming and batch data: For a range of analytics spanning real-time anomaly detection to backend reporting, buffering interim states using Apache Kafka helps withstand surges while ensuring orderly handoff to systems like Snowflake that are designed to process very large batches in parallel. However, configuring durable streaming pipelines that tie in neatly with batch processing requires new expertise.

Proliferating multi-cloud sources: As adoption of hybrid and multi-cloud foundations allows organizations to leverage niche capabilities across platforms both on-premises and in the cloud, making sense of increasingly spread-out data becomes tedious. This sprawl stresses ETL tools, heightens data governance needs, and aggravates challenges related to properly refreshing materialized views.

Technical debt accumulation: The short shelf life of source data, combined with demands to refine existing pipelines, steepens maintenance costs over time. This debt accumulation gradually degrades performance. Modern metadata lineage tracking helps measure drift.
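One common way to approach the streaming-and-batch blending challenge listed above is micro-batching: buffer incoming events and hand them to the batch system in groups. The sketch below shows the idea in isolation. The class name `MicroBatcher`, its parameters, and the `sink` callback are hypothetical, and a real pipeline would also flush on a timer and persist the buffer for durability.

```python
class MicroBatcher:
    """Buffer streamed events and hand them to a sink in fixed-size batches."""

    def __init__(self, batch_size, sink):
        self.batch_size = batch_size
        self.sink = sink      # callable that receives a list of events
        self.buffer = []

    def add(self, event):
        """Accept one streamed event and flush when the batch is full."""
        self.buffer.append(event)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Send any buffered events to the sink as one batch."""
        if self.buffer:
            self.sink(list(self.buffer))
            self.buffer.clear()
```

A caller feeds events through `add` as they arrive and calls `flush` once at shutdown so that a final partial batch is not lost.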

Data integration tools landscape

The landscape of data integration tools is diverse, offering solutions to challenges related to batch processing, streaming, and data access. These tools are essential for businesses looking to consolidate and make sense of their data from various sources. Here’s an overview of the key types of data integration tools and how such services as DoubleCloud can enhance their capabilities:

1. ETL/ELT tools: Such tools as Matillion, Hevo, Fivetran, and Segment are pivotal for batch data integration, especially for analytics. They offer flexible workflows and automation recipes that facilitate the loading of data into centralized data warehouses. DoubleCloud can complement these tools by providing a seamless integration layer that enhances data flow efficiency and reduces the time to insights.

2. Data virtualization tools: Such platforms as Denodo and AtScale offer logical data unification, abstraction, and transformation. They help bridge multiple data sources and simplify backend complexities. DoubleCloud stands out by offering advanced data caching and query optimization capabilities, making data access faster and more reliable compared to traditional virtualization tools.

3. Cloud iPaaS: Integration platforms-as-a-service, such as Azure Data Factory, manage and scale complex integrations across cloud data stores. DoubleCloud enhances iPaaS solutions by offering a more intuitive interface and simplified workflow design, making it easier for users to create and manage their data integration processes without deep technical expertise.

4. Streaming / messaging data buses: Platforms like Apache Kafka are crucial for real-time data changes, such as IoT sensor feeds. DoubleCloud can augment these platforms by providing advanced analytics and monitoring tools, ensuring data integrity and timely delivery of critical information.

5. Data lakes: These are distributed repositories designed to store vast amounts of raw data. DoubleCloud can significantly improve data lake functionality by offering enhanced data cataloging and search capabilities, making it easier for data science teams to find and utilize the data they need.

6. Data quality tools: Addressing issues like duplicated records and data consistency is crucial. DoubleCloud adds value by integrating machine learning algorithms to improve data quality automatically, reducing the need for manual data cleaning.

7. API / App integration platforms: Tools that specialize in syncing contexts across apps, like Mulesoft, are vital for system interoperability. DoubleCloud provides a more flexible and scalable approach to API management, supporting a wider range of protocols and data formats.

8. SQL IDEs and notebooks: Scripting data integration jobs in languages like Python allows for custom transformations. DoubleCloud supports this by offering a cloud-based development environment that enhances collaboration among data teams and simplifies the deployment of data integration scripts.

In summary, DoubleCloud stands out in the data integration landscape by offering a comprehensive suite of features that enhance the capabilities of existing tools. Its advantages include improved efficiency, enhanced data quality, simplified user experience, and advanced analytic capabilities. By integrating DoubleCloud into their data management strategy, businesses can achieve faster insights, better data governance, and a more scalable data infrastructure.

Data integration best practices

To optimally configure data integration mechanisms, data integration architects, developers, and data leaders must holistically design and govern solutions:

Thoroughly map existing infrastructure: Maintain complete documentation encompassing structure and meaning across current business systems holding valuable data using metadata catalogs like Atlan before piping data elsewhere.

Ensure business relevance via collaboration: Data engineers must collaborate closely and early with business leadership and analytics teams on use cases, precise data needs, tolerable latency, quality and consistency expectations, target dashboards, and overall ROI goals.

Standardize via master data and governance: Establish centralized data store semantics, data domains like customer, product and financial concepts, as well as usage policies and oversight through organizational master data management and governance initiatives early.

Architect end-to-end governance upfront: Holistically address facets like security, metadata capture, lineage tracking, pipeline monitoring, reference data sets, and self-service access controls under the purview of Chief Data Officers from the outset, rather than tackling them piecemeal later.

Institute integration partner ecosystems: Strategic partnerships with specialized technology, system integration, and MSP partners during early evaluation stages of modernizing analytics stacks aid accelerated success without undue lock-in.

Following these and other leading guidelines will help futureproof data integration efforts so they continue to sustainably fuel business-critical outcomes at scale even as upstream source systems and business priorities evolve. By investing in talented teams supported by streamlined solutions, organizations can fully unleash the power of big data integration.

Frequently asked questions (FAQ)

What is data integration?

Data integration means combining data from different sources to provide a unified view.

Can you give examples of data integration?

What distinguishes data integration from ETL?

Why is data integration important?

What challenges does data integration address?

What technologies are used in data integration?
