How Kafka Streams works and its key benefits

Kafka Streams is a client library for building real-time stream processing applications on top of Apache Kafka. Because it is a library rather than a separate processing cluster, it can be embedded in any Java application and deployed like one, while reading its input from and writing its output to Kafka topics. It gives developers the ability to process, analyze, and respond to data streams as they arrive. Its fault tolerance and scalability let it handle large-scale data processing efficiently and remain resilient against system failures.

Whether you’re processing real-time analytics, implementing event-driven architectures, or building real-time data pipelines, read on to learn how Kafka Streams offers a robust and flexible solution that can be tailored to your streaming data needs.

Kafka basics

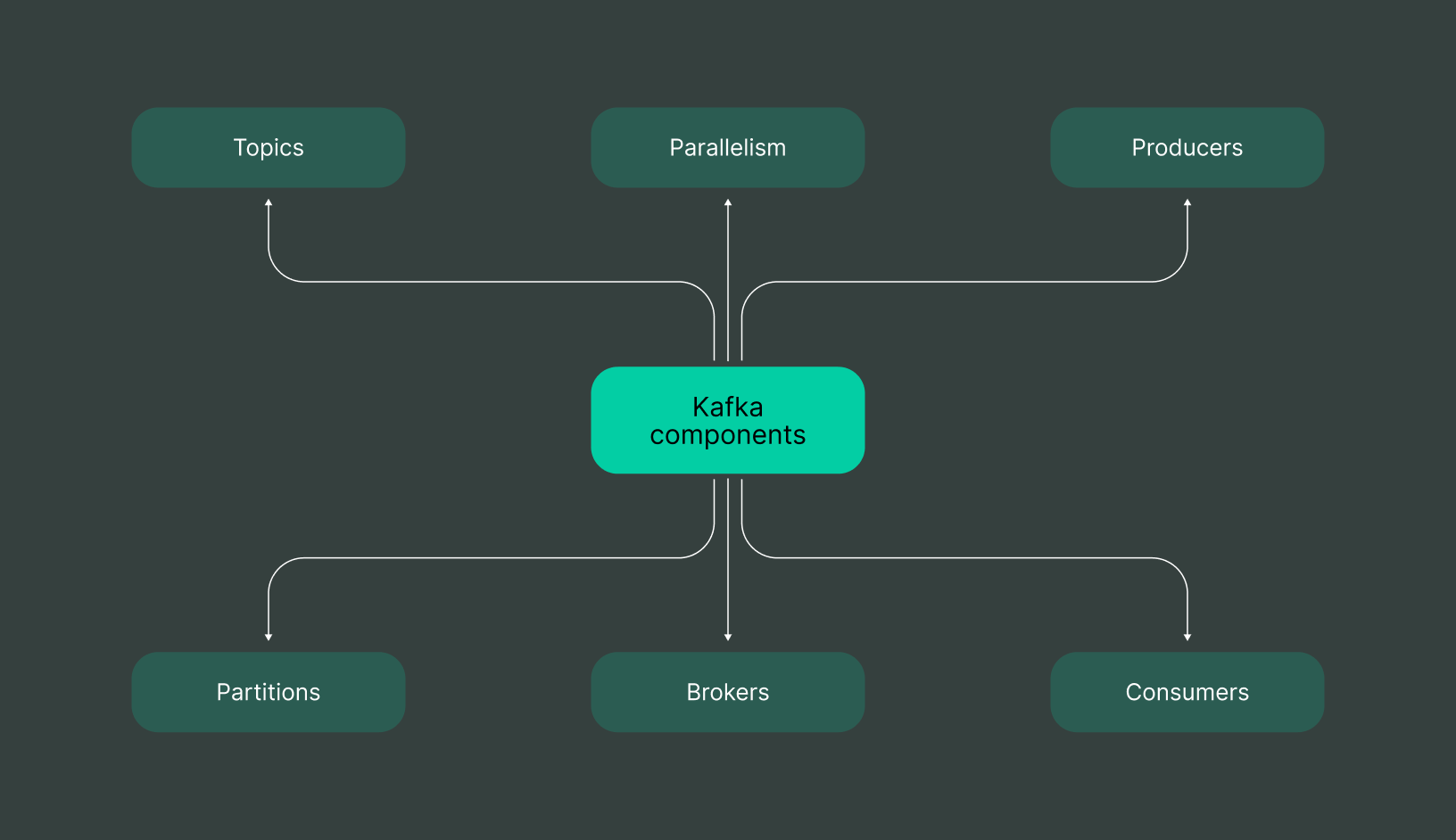

Understanding Kafka Streams requires a grasp of several key Kafka concepts that underpin its architecture. Kafka operates with a range of components, each serving a specific role in processing data streams. Here’s an overview of these critical components:

- Kafka topics. These are the fundamental building blocks where data is stored and organized. Topics act as named channels through which messages flow.
- Producers and consumers. Producers send data to Kafka topics, while consumers retrieve it for processing. This pattern allows for a decoupled data flow, enabling flexibility in processing.
- Brokers. Kafka clusters comprise several brokers that manage the storage and distribution of data within topics. Brokers ensure the system’s resilience and scalability.
- Partitions and parallelism. Kafka topics are divided into partitions, allowing data to be distributed across multiple brokers. This division provides the parallelism necessary for high-throughput data processing.

These components work together to let Kafka Streams process data efficiently. For example, an application instance can read from an input topic and count how often each word occurs. Each record has a key (a string, for instance), and the key determines which partition the record is written to, so records with the same key always land in the same partition.
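The link between a record key and its partition can be sketched in a few lines of plain Java. This is an illustration only: Kafka’s default partitioner hashes the serialized key with murmur2, while the sketch below swaps in Arrays.hashCode so that it needs nothing beyond the JDK. The class name PartitionSketch and the method partitionFor are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Illustrative only: Arrays.hashCode stands in for the murmur2 hash
// that Kafka's default partitioner applies to the serialized key.
class PartitionSketch {

    /** Maps a record key to one of numPartitions partitions. */
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // floorMod keeps the result in [0, numPartitions) even for negative hashes.
        return Math.floorMod(Arrays.hashCode(keyBytes), numPartitions);
    }

    public static void main(String[] args) {
        // The same key is always routed to the same partition.
        for (String key : new String[] {"kafka", "streams", "kafka"}) {
            System.out.println(key + " -> partition " + partitionFor(key, 3));
        }
    }
}
```

Because the partition depends only on the key and the partition count, all records for one key are processed in order by the same task.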

Kafka Streams parallelizes processing by dividing the work into tasks, each responsible for a fixed set of input partitions, and distributing those tasks across the threads and instances of the application. To scale out, you start more instances of the application; the number of input partitions sets the upper limit on parallelism.

Why are Kafka Streams needed?

Traditional stream processing platforms, for example, Apache Storm, often struggle with key challenges like fault tolerance, scalability, and flexible deployment. These platforms tend to require complex setups for achieving fault tolerance and can lack seamless scalability when processing large data volumes. Deployment flexibility is another common limitation, as traditional platforms may not easily adapt to cloud environments or require specialized hardware configurations.

Kafka Streams addresses these limitations with a robust, scalable stream processing solution that integrates seamlessly with the Kafka ecosystem. The following features highlight its strengths and usefulness for managing large amounts of real-time data:

- Fault tolerance. Kafka Streams ensures data integrity even during failures. It relies on Kafka’s replication mechanism to keep data redundant across multiple brokers, minimizing the risk of data loss.
- Scalability. With a distributed architecture, Kafka Streams scales horizontally by running more instances of the application, which share the input partitions among themselves. This allows Kafka Streams applications to handle growing workloads without performance degradation.
- Flexible deployment. Because Kafka Streams is a client library, it is packaged and deployed together with your application, with no dedicated processing cluster to operate. This flexibility supports a range of deployment scenarios, from on-premises to cloud-based setups.

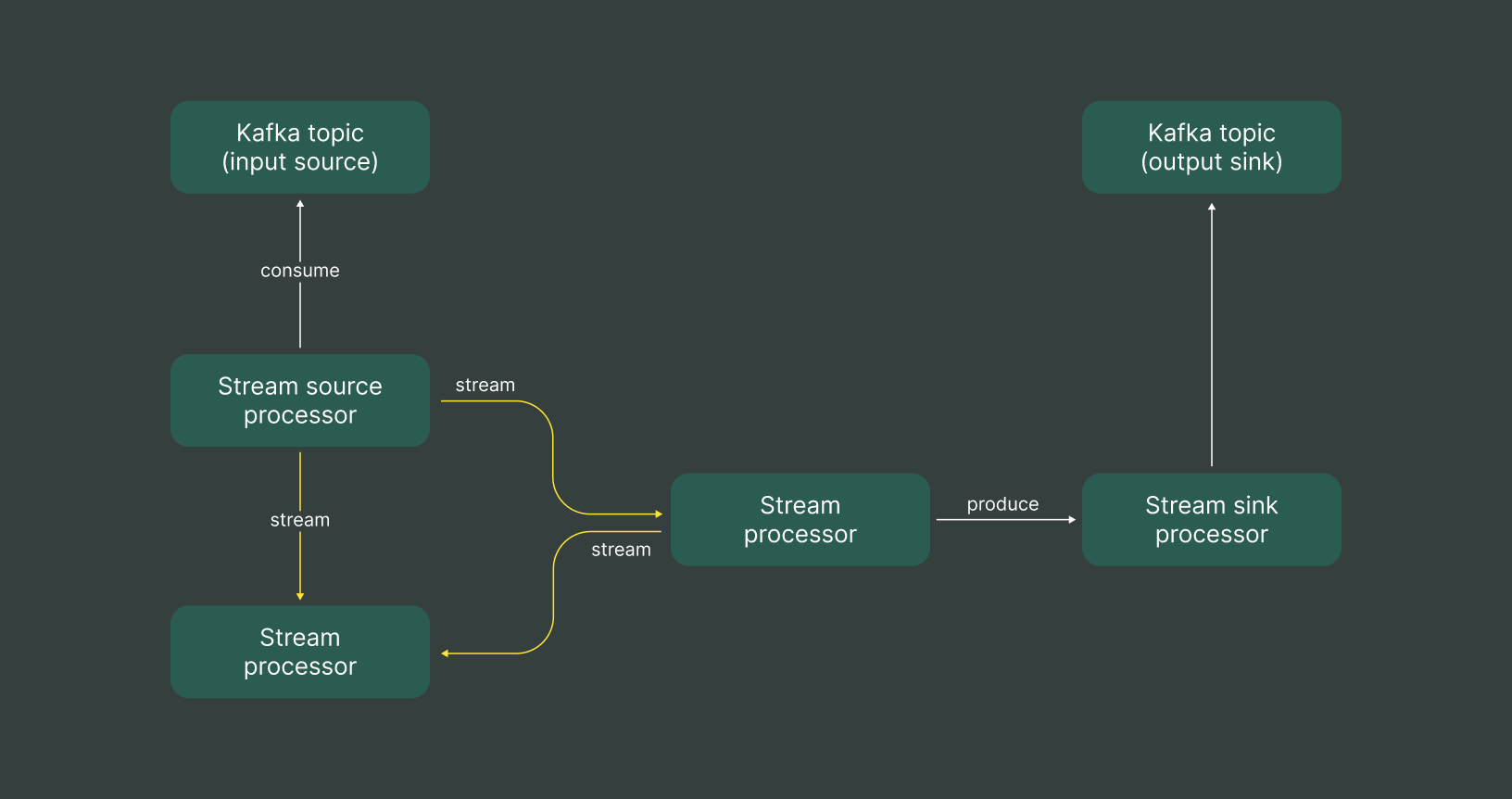

Kafka Streams architecture

In a Kafka Streams application, the architecture revolves around a processor topology. This structure represents the flow of data through a series of processors that transform, filter, or aggregate the data in real time. At the heart of this architecture are two types of special processors: sink processors and source processors.

- The source processors are responsible for consuming data from Apache Kafka topics, initiating the stream processing flow.
- The sink processors handle the output, writing the records they receive from upstream processors to Kafka topics.

Developers can define these processor topologies using the Kafka Streams API. This versatile API offers two approaches for building stream processing applications: the low-level Processor API and the higher-level Kafka Streams DSL.

The Processor API allows for detailed, fine-grained control over the stream processing logic, making it ideal for custom and complex transformations. In contrast, the Kafka Streams DSL provides a more straightforward, declarative way to build stream processing applications, with built-in operations for common tasks like filtering and aggregating.
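To make the idea of a processor topology concrete, here is a minimal sketch in plain Java. It deliberately does not use the Kafka Streams API: the class TopologySketch is hypothetical, in-memory lists stand in for the input and output topics, and each Consumer plays the role of one processor node that forwards records to the next node.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

// Plain-Java stand-in for a topology: source -> filter -> map -> sink.
class TopologySketch {

    /** Runs every input record through the chain and returns what the sink received. */
    static List<String> run(List<String> inputTopic) {
        List<String> outputTopic = new ArrayList<>();

        // Sink processor: the last node, writes to the output "topic".
        Consumer<String> sink = outputTopic::add;
        // Stream processor: transforms each record and forwards it downstream.
        Consumer<String> toUpperCase = value -> sink.accept(value.toUpperCase());
        // Stream processor: drops empty records and forwards the rest.
        Consumer<String> dropEmpty = value -> {
            if (!value.isEmpty()) {
                toUpperCase.accept(value);
            }
        };
        // Source processor: the first node, reads from the input "topic".
        for (String record : inputTopic) {
            dropEmpty.accept(record);
        }
        return outputTopic;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("kafka", "", "streams")));
    }
}
```

In a real application, the DSL would express the two middle nodes as built-in filter and map operations, while the Processor API would let you write each node by hand.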

Streams in Kafka Streams

In Kafka Streams, a stream represents an unbounded, continuously updating data set composed of ordered, replayable, and fault-tolerant data records. Each stream is partitioned, dividing the data into multiple stream partitions. A stream partition is a totally ordered sequence of immutable data records mapping directly to a Kafka topic partition. Each data record within a stream partition is assigned a unique offset and is associated with a timestamp.

By maintaining this partitioned, immutable log of records, Kafka Streams ensures that data remains ordered, replayable, and fault-tolerant.

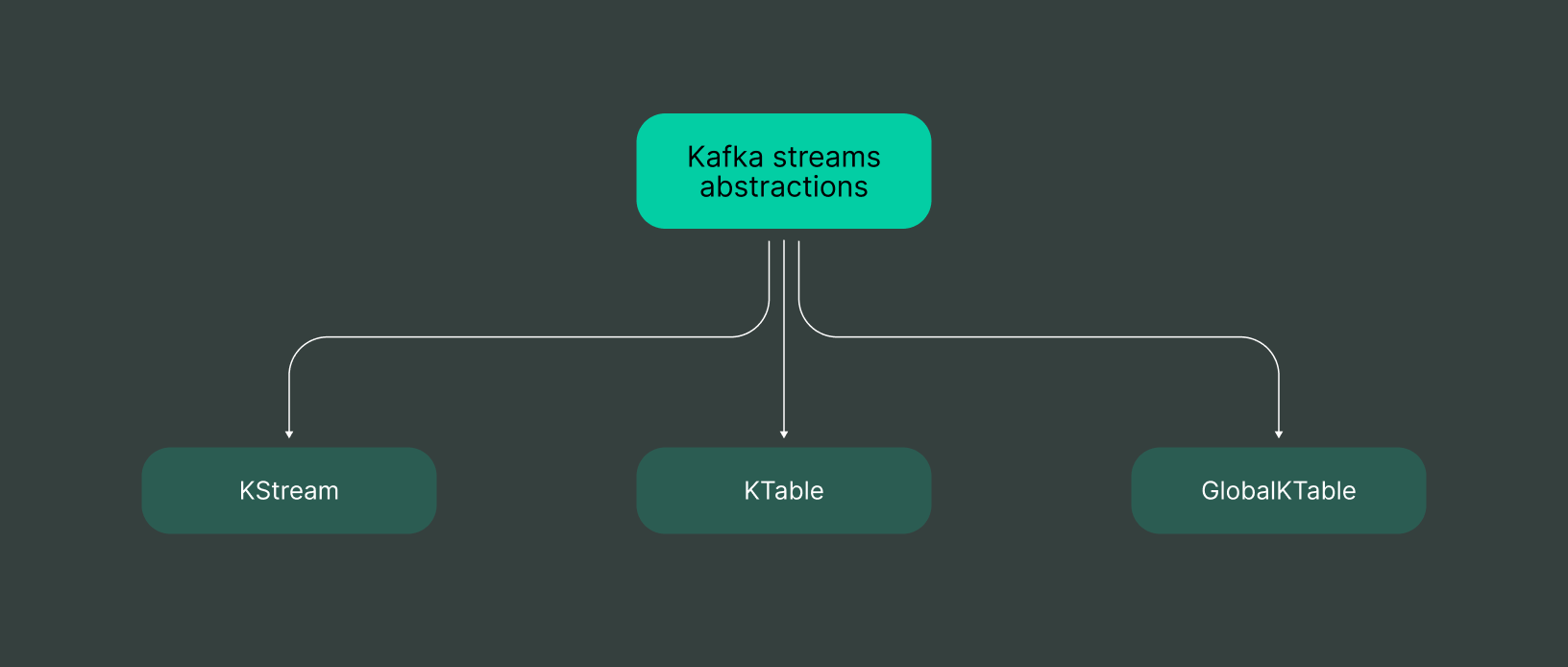

Streams and tables

Kafka Streams offers abstractions for representing and processing data as streams and tables. This section will provide an overview of these abstractions, discussing how they function and their core features.

KStream

KStream is a central abstraction in the Kafka Streams client library for processing continuous streams of records. A KStream interprets each data record as an “INSERT”: a new, independent fact appended to the stream. Records with identical keys do not overwrite one another, which preserves the full historical context.

Moreover, a KStream can group records by key and split them into windows, or time-based segments, for further analysis. This structure allows users to combine data coming from different sources, conduct stateful transformations, and perform advanced analytics. Aggregating a grouped stream yields a table that holds the current result for each key.

KTable

KTable is a critical abstraction in Kafka Streams, encapsulating the concept of a changelog stream where each data record represents an “UPSERT” (INSERT/UPDATE) operation. This implies that new records with the same key can overwrite existing ones, ensuring the data in the KTable stays current. The flexibility in updating records is particularly useful for stream processing applications where data can frequently change.

Within a Kafka Streams application, the KTable plays a central role in the processing topology by holding the most recent state of the data, contributing to the stream-table duality. The duality allows a Kafka Streams application to interact with data as a stream (a sequence of events) and as a table (a collection of key-value pairs representing the latest state). In the topic that backs a KTable, Kafka’s log compaction eventually discards older records and retains at least the most recent record for each key, which reduces the data size while still allowing the table state to be rebuilt on any instance of the application.
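The stream-table duality can be illustrated with a short plain-Java sketch. It is not Kafka Streams code: the class DualitySketch is hypothetical, and each record is modeled as a two-element String array holding a key and a value. Reading the same changelog as a stream keeps every record, while replaying it as a table applies each record as an upsert (with a null value treated as a delete).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Each record is modeled as {key, value}; a null value marks a delete.
class DualitySketch {

    /** Stream view: every record is an independent fact, so all are kept. */
    static List<String[]> asStream(List<String[]> changelog) {
        return new ArrayList<>(changelog);
    }

    /** Table view: replaying the records as upserts leaves the latest value per key. */
    static Map<String, String> asTable(List<String[]> changelog) {
        Map<String, String> table = new LinkedHashMap<>();
        for (String[] record : changelog) {
            String key = record[0];
            String value = record[1];
            if (value == null) {
                table.remove(key);     // delete marker removes the key
            } else {
                table.put(key, value); // insert or overwrite
            }
        }
        return table;
    }
}
```

For a changelog containing (alice, Berlin), (bob, Lima), (alice, Rome), the stream view has three records, while the table view has two entries and maps alice to Rome.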

KTables also support interactive queries, allowing you to retrieve your application’s current state at any point. This is particularly useful in scenarios requiring low-latency access to specific data records. Furthermore, when exactly-once processing is enabled, the output data stays consistent even if the stream processing application fails and restarts.

GlobalKTable

A GlobalKTable differs from a regular KTable in how the data of the underlying Kafka topic is distributed across application instances. With a KTable, each instance holds only the data from the partitions assigned to it. With a GlobalKTable, every instance holds a full copy of the data from all partitions. This distinction has significant implications for stateful operations and data parallelism.

With a GlobalKTable, each instance sees the complete data set, covering every partition in the topic. This is useful when any instance must be able to look up any key, for example when joining a stream against reference data: the stream finds the right table value regardless of which partition holds the record key.

On the other hand, this wide-ranging coverage comes at a cost. Since every instance reads every partition of the topic, a GlobalKTable consumes more memory, storage, and network bandwidth than a KTable whose data is split across instances, especially when the topic is large.

Overall, the GlobalKTable is a powerful abstraction when your application requires a complete view of a topic across all partitions. However, if your Kafka Streams application is designed for high data parallelism and lower resource usage, a standard partitioned KTable might be more appropriate.

Key capabilities of Kafka Streams

Kafka Streams leverages its integration with Apache Kafka to offer scalable and fault-tolerant processing of live data streams, encompassing several vital features. These include stateful operations, processing topology, and interactive queries, all essential for constructing streaming applications capable of handling large data volumes effectively and reliably. Let’s delve deeper into how these capabilities are implemented and their impact on streaming technology.

Stateful operations

Kafka Streams facilitates stateful operations through advanced management of local state stores and changelog topics. Local state stores enable stream processors to maintain and access data locally, minimizing latency and dependency on external storage systems. Changelog topics complement this by logging state changes, providing a resilient mechanism for data recovery, and ensuring consistent state across processor restarts. This setup supports exactly-once processing semantics, crucial for applications where data accuracy and consistency are paramount.
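The interplay between a local state store and its changelog can be sketched in plain Java. This is a simplified model, not the Kafka Streams implementation: the class CountingStoreSketch is hypothetical, a HashMap stands in for the local store, and a shared list stands in for the changelog topic. Every update is written to both, and a new instance rebuilds its state by replaying the changelog.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// A HashMap models the local state store; a List models the changelog topic.
class CountingStoreSketch {
    private final Map<String, Long> store = new HashMap<>();
    private final List<String[]> changelog;

    /** Creates a store and restores its state by replaying the changelog. */
    CountingStoreSketch(List<String[]> changelog) {
        this.changelog = changelog;
        for (String[] entry : changelog) {
            store.put(entry[0], Long.parseLong(entry[1]));
        }
    }

    /** Increments the count for a key and logs the new value to the changelog. */
    long increment(String key) {
        long updated = store.getOrDefault(key, 0L) + 1;
        store.put(key, updated);
        changelog.add(new String[] {key, Long.toString(updated)});
        return updated;
    }

    /** Reads the current count from the local store only. */
    long get(String key) {
        return store.getOrDefault(key, 0L);
    }

    public static void main(String[] args) {
        List<String[]> log = new ArrayList<>();
        CountingStoreSketch first = new CountingStoreSketch(log);
        first.increment("a");
        first.increment("a");
        // A "restarted" instance recovers the count from the changelog.
        CountingStoreSketch restored = new CountingStoreSketch(log);
        System.out.println("count for a after restore: " + restored.get("a"));
    }
}
```

Reads never leave the process, which is where the low latency comes from; the changelog is only needed to rebuild the store after a restart or when a task moves to another instance.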

Processing topology

The architecture of Kafka Streams allows developers to define processing topologies using either the declarative Streams DSL or the imperative Processor API. The Streams DSL is designed for ease of use, providing a high-level approach to defining common processing patterns, such as filtering and aggregation, without deep knowledge of the underlying implementation details. On the other hand, the Processor API offers detailed control over the processing logic, suitable for scenarios requiring unique customizations that go beyond the capabilities of the DSL. Both APIs facilitate the construction of scalable, resilient processing pipelines tailored to the application’s specific needs.

Interactive queries

Interactive queries are a distinctive feature of Kafka Streams that empower developers to query the state held in local state stores directly. This capability provides immediate access to the processed data, enabling applications to perform real-time data analysis and manipulation. Whether it’s powering real-time dashboards or enabling operational decisions based on the latest data insights, interactive queries enhance the flexibility and responsiveness of streaming applications. This feature is integral to deploying advanced analytics that requires live data visibility and instant query capabilities.

Time in Kafka Streams

Kafka Streams offers several notions of time to manage real-time data processing: event time, processing time, ingestion time, and stream time. Let’s examine these critical time concepts for operations in Kafka Streams.

Event-time

This term refers to the specific moment when an event or data instance originally occurred, as generated by its source. To achieve accurate event timestamping, it’s essential to include timestamps within the data as it is being created.

Example: Consider a vehicle’s GPS sensor that records a shift in position. The event timestamp would be the exact moment the GPS detected this change.

Processing-time

This represents the moment when a data processing system actually handles an event or data instance, which could be significantly later than the event originally took place. Depending on the system setup, the delay between the event and processing timestamp can range from milliseconds to hours or sometimes even days.

Example: Consider a system analyzing vehicle GPS data for a fleet management dashboard. The processing timestamp could be nearly instantaneous (in systems using real-time processing like Kafka) or delayed.

Ingestion-time

Ingestion time refers to the specific moment a data record is recorded into a topic partition by a Kafka broker, at which point a timestamp is embedded into the record. This concept is similar to event time, with the primary distinction being that the timestamp is applied not at the moment the data is generated by its source but rather when the Kafka broker appends the record to the destination topic.

Although similar, ingestion time is slightly delayed compared to the original event time. The difference is usually minimal, provided the interval between the data’s generation and its recording by Kafka is short. This short delay means that ingestion time can often serve as an effective proxy for event-time, especially in cases where achieving precise event-time semantics is challenging.

Stream-time

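Stream time is the maximum timestamp seen so far across the processed records, tracked separately for each task within a Kafka Streams application instance. It only advances when new records arrive, and an out-of-order record with an older timestamp never moves it backward. Kafka Streams uses stream time to decide, for example, when a window has closed; in the low-level Processor API, it can also drive scheduled punctuations.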
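A minimal plain-Java sketch of how stream time advances is shown below. The class StreamTimeSketch and the use of -1 as the initial value are assumptions made for this illustration; the sketch models a single task.

```java
// Models the stream time of a single task.
class StreamTimeSketch {
    // -1 means "no record seen yet"; this sentinel is a choice made for this sketch.
    private long streamTime = -1L;

    /** Observes one record timestamp (ms) and returns the resulting stream time. */
    long observe(long recordTimestampMs) {
        // Stream time is the maximum timestamp seen so far, so it never decreases.
        streamTime = Math.max(streamTime, recordTimestampMs);
        return streamTime;
    }

    public static void main(String[] args) {
        StreamTimeSketch task = new StreamTimeSketch();
        for (long ts : new long[] {100L, 250L, 180L}) {
            System.out.println("record ts=" + ts + " -> stream time=" + task.observe(ts));
        }
    }
}
```

The third record (timestamp 180) arrives out of order, so stream time stays at 250.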

Timestamps

In Kafka Streams, timestamps play a vital role in managing how data is processed and synchronized. Each data record is assigned a timestamp, either based on when the event actually happened (“event-time”) or when it is processed (“processing-time”). This distinction allows applications to maintain accuracy in data analysis regardless of processing delays or data arrival orders.

Timestamps are managed through timestamp extractors, which can pull timestamps from the data itself, use the ingestion time, or assign the current processing time. These timestamps then influence all subsequent operations, ensuring data remains consistent and temporally aligned across different streams and processing stages.

For example, in operations like joins and aggregations, timestamps determine how records are combined or summarized, using rules to ensure the correct sequence of events is respected. This mechanism is crucial for applications requiring precise and reliable real-time analytics, providing a framework for developers to build complex, time-sensitive data processing workflows.

Assign Timestamps with Processor API

An output record normally carries the timestamp of the input record it was derived from. You can change this by explicitly setting the timestamp of the record you pass to the “forward()” method.

In the current Processor API (package org.apache.kafka.streams.processor.api), the “forward()” method takes a single Record that carries the key, the value, and the timestamp. Calling “withTimestamp()” on a record returns a copy with the timestamp you specify.

Example:

    import org.apache.kafka.streams.processor.api.Processor;
    import org.apache.kafka.streams.processor.api.ProcessorContext;
    import org.apache.kafka.streams.processor.api.Record;

    public class NewProcessor implements Processor<String, String, String, String> {

        private ProcessorContext<String, String> context;

        @Override
        public void init(ProcessorContext<String, String> context) {
            this.context = context;
        }

        @Override
        public void process(Record<String, String> record) {
            // The timestamp carried by the input record.
            long inputTimestamp = record.timestamp();

            // Process the input record.
            String outputValue = processRecord(record.value());

            // Compute the timestamp for the output record.
            long outputTimestamp = computeTheOutputTimestamp(inputTimestamp);

            // Copy the record with the new value and timestamp, then forward it downstream.
            context.forward(record.withValue(outputValue).withTimestamp(outputTimestamp));
        }

        // Placeholder: replace with the processing logic for your use case.
        private String processRecord(String value) {
            return value;
        }

        // Placeholder: replace with the timestamp logic for your use case.
        private long computeTheOutputTimestamp(long inputTimestamp) {
            return inputTimestamp;
        }

        @Override
        public void close() {}
    }

Assign Timestamps with Kafka Streams API

In Kafka Streams, you control which timestamp is assigned to each input record by implementing the “TimestampExtractor” interface. The extractor is called for every record consumed, and the timestamp it returns determines whether downstream operations follow processing-time or event-time semantics.

The example below illustrates how to implement the TimestampExtractor interface and attach it to an input stream.

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.streams.processor.TimestampExtractor;

    public class CustomizeTimestampExtractor implements TimestampExtractor {

        @Override
        public long extract(ConsumerRecord<Object, Object> record, long previousTimestamp) {
            // Derive the timestamp from the record, for example from a field in its value.
            long timestamp = ...;

            // The timestamp is returned as a long (milliseconds since the epoch).
            return timestamp;
        }
    }

    // Use the custom timestamp extractor when creating a KStream by passing it
    // through the withTimestampExtractor() method on the Consumed object.
    KStream<String, String> stream = builder.stream("input-topic",
        Consumed.with(Serdes.String(), Serdes.String())
            .withTimestampExtractor(new CustomizeTimestampExtractor()));

    // Time-based operations such as groupByKey() followed by windowedBy()
    // now use the extracted timestamps.

Stream processing patterns

Stream processing is a key component of real-time data processing architectures, enabling the continuous transformation and analysis of incoming data streams. By leveraging patterns such as aggregations, joins, and windowing, systems can extract meaningful insights and respond to changes in real time. Kafka Streams, a popular stream processing library, implements these patterns to allow for versatile and robust data processing solutions. Below, we explore three fundamental patterns integral to effective stream processing:

Aggregations

Aggregations are fundamental operations in stream processing that combine multiple data records into a single result. These operations are crucial for computing statistical measures like sums, counts, and averages. In Kafka Streams, aggregations are performed using stateful operations.

The system maintains a local state store that updates the aggregated results as new records arrive. This local store is backed by a changelog topic in Kafka, which provides fault tolerance and the ability to recover from failures. Supported aggregation types include grouped (non-windowed) aggregations, windowed aggregations, and cogrouped aggregations that combine several grouped streams into one result.
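As a rough illustration of a grouped aggregation, the plain-Java sketch below keeps a running average per key. It is not Kafka Streams code: the class AverageAggregationSketch is hypothetical, and a HashMap stands in for the state store. The store keeps a sum and a count for each key, because an average cannot be updated from the previous average alone.

```java
import java.util.HashMap;
import java.util.Map;

// A HashMap models the state store: key -> {sum, count}.
class AverageAggregationSketch {
    private final Map<String, long[]> store = new HashMap<>();

    /** Folds one new value into the aggregate for its key and returns the updated average. */
    double add(String key, long value) {
        long[] aggregate = store.computeIfAbsent(key, k -> new long[2]);
        aggregate[0] += value; // running sum
        aggregate[1] += 1;     // running count
        return (double) aggregate[0] / aggregate[1];
    }

    public static void main(String[] args) {
        AverageAggregationSketch averages = new AverageAggregationSketch();
        averages.add("sensor-1", 10);
        System.out.println("sensor-1 average: " + averages.add("sensor-1", 20));
    }
}
```

Each incoming record updates only the aggregate for its own key, which is why aggregations follow a grouping step.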

Joins

Kafka Streams facilitates complex data integration by allowing different types of joins between streams and tables. Unlike traditional database joins that operate on static data sets, Kafka Streams performs joins on data in real time as it flows through the system.

This capability is crucial for applications that need to combine and react to incoming data from multiple sources simultaneously. The main categories of joins in Kafka Streams include stream-stream joins, where two live data streams are joined; stream-table joins, where a live stream is joined with a look-up table; and table-table joins, which combine two data tables.
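A stream-table join can be sketched in plain Java as a lookup against the table’s latest value at the moment each stream record arrives. The class StreamTableJoinSketch and its method names are hypothetical, and a HashMap stands in for the table. The sketch models an inner join, so a stream record with no matching table entry produces no output (represented here by null).

```java
import java.util.HashMap;
import java.util.Map;

// A HashMap models the look-up table: customer id -> customer name.
class StreamTableJoinSketch {
    private final Map<String, String> customerTable = new HashMap<>();

    /** Table side: an update replaces the current value for the key. */
    void updateTable(String customerId, String name) {
        customerTable.put(customerId, name);
    }

    /**
     * Stream side: enriches an order with the customer name known right now.
     * Returns null when the table has no entry for the key (inner join).
     */
    String join(String customerId, String order) {
        String name = customerTable.get(customerId);
        return name == null ? null : order + " for " + name;
    }

    public static void main(String[] args) {
        StreamTableJoinSketch join = new StreamTableJoinSketch();
        join.updateTable("c1", "Alice");
        System.out.println(join.join("c1", "book"));
    }
}
```

Only stream records trigger output in this model; a later table update changes the result for future stream records but does not re-emit earlier ones.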

Windowing in Kafka Streams

Windowing is a technique that helps manage a continuous flow of data by breaking it into manageable subsets, or windows, based on certain criteria. Kafka Streams supports several types of windows:

- Tumbling windows are non-overlapping, fixed-size windows defined by a specific time interval (like every 5 minutes).
- Hopping windows are also fixed-size, but they overlap: a new window starts at a regular advance interval that is shorter than the window size, so one record can belong to several windows.
- Session windows group records based on periods of activity. A configurable gap of inactivity determines when a new session starts.

For instance, in a retail application, a tumbling window could calculate total sales every hour, whereas hopping windows might calculate moving average prices. Session windows could track shopping sessions, aggregating purchases made by a customer in one visit to the site.

Depending on your specific needs, windowing can be based on different timescales (event time, processing time, or ingestion time). Kafka Streams also offers a grace period feature to accommodate out-of-order records, so that late arrivals are still counted in the appropriate window as long as they arrive before the grace period ends. This is crucial for maintaining data accuracy and completeness in scenarios where delays may occur, such as network latencies or other disruptions.
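The arithmetic behind time windows can be sketched in plain Java. The class WindowSketch and its method names are hypothetical, all times are in milliseconds, and windows are assumed to be aligned to the epoch and to include their start but not their end. The grace check is a simplified model: a window accepts a record while stream time is still before the window end plus the grace period.

```java
import java.util.ArrayList;
import java.util.List;

// Window arithmetic in milliseconds; a window covers [start, start + size).
class WindowSketch {

    /** Start of the single tumbling window that contains the timestamp. */
    static long tumblingWindowStart(long timestamp, long size) {
        return timestamp - (timestamp % size);
    }

    /** Starts of all hopping windows that contain the timestamp, latest first. */
    static List<Long> hoppingWindowStarts(long timestamp, long size, long advance) {
        List<Long> starts = new ArrayList<>();
        long latestStart = timestamp - (timestamp % advance);
        // A window contains the timestamp if start <= timestamp < start + size.
        for (long start = latestStart; start > timestamp - size && start >= 0; start -= advance) {
            starts.add(start);
        }
        return starts;
    }

    /** True while a window still accepts late records, given the current stream time. */
    static boolean acceptsLateRecord(long windowStart, long size, long grace, long streamTime) {
        return streamTime < windowStart + size + grace;
    }

    public static void main(String[] args) {
        System.out.println(tumblingWindowStart(7_500, 5_000));
        System.out.println(hoppingWindowStarts(12, 10, 5));
    }
}
```

With a size of 10 and an advance of 5, a record at time 12 falls into the windows starting at 10 and at 5, which is the overlap that distinguishes hopping from tumbling windows.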

Kafka Streams advantages

Kafka Streams offers several advantages for building robust and scalable real-time data streaming applications.

- Fault-tolerance. Leveraging Kafka’s replication and durability, Kafka Streams ensures fault-tolerant stream processing by automatically recovering from failures and restoring application state.
- Scalability and elasticity. Kafka Streams enables seamless scaling by distributing stream processing tasks across multiple application instances, automatically rebalancing partitions as needed.
- Cloud deployment. Kafka Streams can be deployed in cloud environments, benefiting from the elasticity and managed services offered by cloud providers.
- Security. Kafka Streams integrates with Kafka’s robust security features, including encryption, authentication, and authorization, ensuring secure data processing.
- Open source. As an open-source project, Kafka Streams benefits from an active community, continuous development, and freedom from vendor lock-in.
- Real-time data streaming. Kafka Streams is designed for real-time data streaming, enabling low-latency processing of continuous data streams while preserving the order of records within each partition.

Kafka Streams is used widely across various industries for processing large streams of real-time data. Below are some practical examples of how companies can implement Kafka Streams in their operations:

- Real-time Fraud Detection: A financial institution can use Kafka Streams to analyze transactions in real time, allowing it to detect and prevent fraud as it happens. For example, if the system detects an unusual pattern of transactions, it can automatically flag and block further transactions pending investigation.
- Personalized Recommendations: An e-commerce platform can use Kafka Streams to process user activity data in real time and provide personalized product recommendations. The platform can dynamically suggest relevant products by analyzing a user’s browsing and purchasing history, enhancing the user experience and increasing sales.
- Network Monitoring: A telecommunications company can use Kafka Streams to monitor network traffic and performance metrics continuously. This allows real-time detection of network anomalies or failures and a rapid response to issues before they affect customers.

Conclusion

Apache Kafka is renowned for its high throughput and scalability in handling large volumes of real-time data, which makes it a crucial technology for modern data-driven applications. Kafka Streams, its accompanying stream processing library, builds on Kafka by providing an accessible, developer-friendly way to build real-time streaming applications. It integrates seamlessly into Kafka environments and adds capabilities such as fault-tolerant state and the ability to run streaming applications at scale.

Highlighting the synergy between these technologies, DoubleCloud offers a Managed Kafka solution that simplifies the deployment and management of Kafka ecosystems. This service ensures that organizations can fully capitalize on the real-time processing features of Kafka and Kafka Streams without the complexities related to setup and ongoing maintenance. By utilizing DoubleCloud’s Managed Kafka, companies can focus more on extracting insights and value from their data streams rather than on infrastructure management.

Frequently asked questions (FAQ)

How does Kafka Streams support stateful processing in a distributed environment?

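Kafka Streams builds on Kafka’s inherent fault tolerance and scalability to manage stateful operations across partitions. Each task keeps its state in a local state store, and every update is also written to a changelog topic that Kafka replicates across brokers, so the state can be restored on another instance after a failure.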

What are the strategies for managing Kafka Streams application state stores?

Can Kafka Streams be used for processing time-series data, and if so, how?

What are the security considerations when deploying Kafka Streams applications?

How does Kafka Streams interact with Kafka Schema Registry for data serialization?

What are the implications of using Kafka Streams for global event aggregation?

How do you monitor and troubleshoot Kafka Streams applications in production?
