How to leverage Jupyter with DoubleCloud’s Managed ClickHouse®

Written by: Adam Jennings, Senior Solutions Architect, DoubleCloud

What is Jupyter

In 2011, IPython was formed as an interactive coding environment for Python. Then, in 2014, Project Jupyter spun out of the IPython Project as it evolved to support interactive data science and scientific computing across all programming languages.

Project Jupyter is a non-profit, open-source project. Its name comes from three of the supported languages: Julia, Python, R.

(As an aside, I am one of those weirdos that doesn’t use RStudio for R coding. I prefer to stay in Jupyter so that I can have the same workflow for my exploratory data analysis in both Python and R.)

Why use Jupyter?

Jupyter is open-source, cross-platform, and helps (albeit with some caveats) with reproducible code.

Plug here for Matt Harrison’s amazing book titled, “Effective Pandas” where he goes in-depth on chaining your Python code to ensure cells are run with the correct state.

According to Matt, “[c]haining is also called ‘flow’ programming. Rather than making intermediate variables, just leverage the fact that most operations return a new object and work on that.”

But I digress… Jupyter notebooks help visualize code, markdown text, graphs, and other visualizations in a lab “notebook” interface embedded in the browser.

Notebooks are excellent tools for exploratory data analysis and for collaboration amongst peers. By leaving in-line markdown text, one can highlight thoughts and provide explanations right in the notebook.

Jupyter notebooks make great teaching tools. Especially with remote attendees. Send them the link to the notebook’s .ipynb file and spin it up locally, or one of the cloud managed notebook platforms, and follow along with the instructor as they guide their attendees through recreating the output.

Managed notebook sources

Google Colab notebooks provide a managed Jupyter interface that can be accessed for free. It leverages Google sign-on for accessing files within Drive, GitHub, or by uploading your own file. Notebooks are saved in your Drive for easy access and sharing. One can edit the runtime and enable GPU or TPU for faster algebraic operations.

Kaggle also provides a notebook interface in both Python and R. One can also leverage GPUs on Kaggle.

The next two, DeepNote and Hex have made cloud native notebook environments that add many features to their stacks and are worth a look if you need more customizations or team environments.

DeepNote says it “is a new kind of data notebook that’s built for collaboration — Jupyter compatible, works magically in the cloud, and sharing is as easy as sending a link.”

Hex believes in “no more jumping between tools, struggling with versions, or sharing via screenshot. Hex empowers everyone to ask and answer questions, work together, and build knowledge.”

Connecting to DoubleCloud’s Managed ClickHouse

The first order of business is to ensure you have Jupyter installed on your system. There are plenty of guides for your operating system, most leveraging conda or pip, so I’ll leave you to the official installation documentation: https://jupyter.org/install.

When first creating your notebook environment, you can must install the preferred ClickHouse driver, clickhouse-driver or clickhouse-sqlalchemy and ensure the IPython kernel for sql is installed for the SQL magic commands with %sql.

By leveraging sys, we ensure the packages are installed in the currently running kernel.

Connecting JupyterLab to DoubleCloud hosted ClickHouse.

Ensure clickhouse-driver and/or clickhouse-sqlalchemy, and ipython-sql are installed.

import sys

!{sys.executable} -m pip install clickhouse-driver clickhouse-sqlalchemy ipython-sql

. . .

Load Magic SQL ipython-sql.

%load_ext sql

Next, I install a few libraries that I commonly use and set the Username, Password, and Hostname from the DoubleCloud Console (cell is hidden in my notebook).

Import Common Libraries.

import os

import pandas as pd

Hidden Cell Below with User/Pass/Host.

. . .

SQLAlchemy driver

For leveraging SQL Alchemy, import the package and leverage the magic commands with %sql. If it is the only established connection in your notebook, you can continue to use the simple %sql syntax to query data.

With SQLAlchemy.

import sqlalchemy

connection_string = "clickhouse+native://{user}:{password}@{host}:9440/nyc?secure=true".format(user=user,password=password,host=host)

%sql $connection_string

The generic connection string is as follows. Pay attention to the ? secure=true at the end of the url for connecting to DoubleCloud’s Managed Clickhouse. Your error message will state: “expected Hello or Exception, got Unknown packet” if you leave off the secure argument.

connection_string = "clickhouse+native://{user}:{password}@{host}:9440/nyc?secure=true".format(user=user,password=password,host=host)

I wanted to import the entire dataset into my notebook so I used the %sql to SELECT FROM.

Create a DataFrame with the entire table.

result = %sql SELECT * FROM trips

df = result.DataFrame()

df.tail()

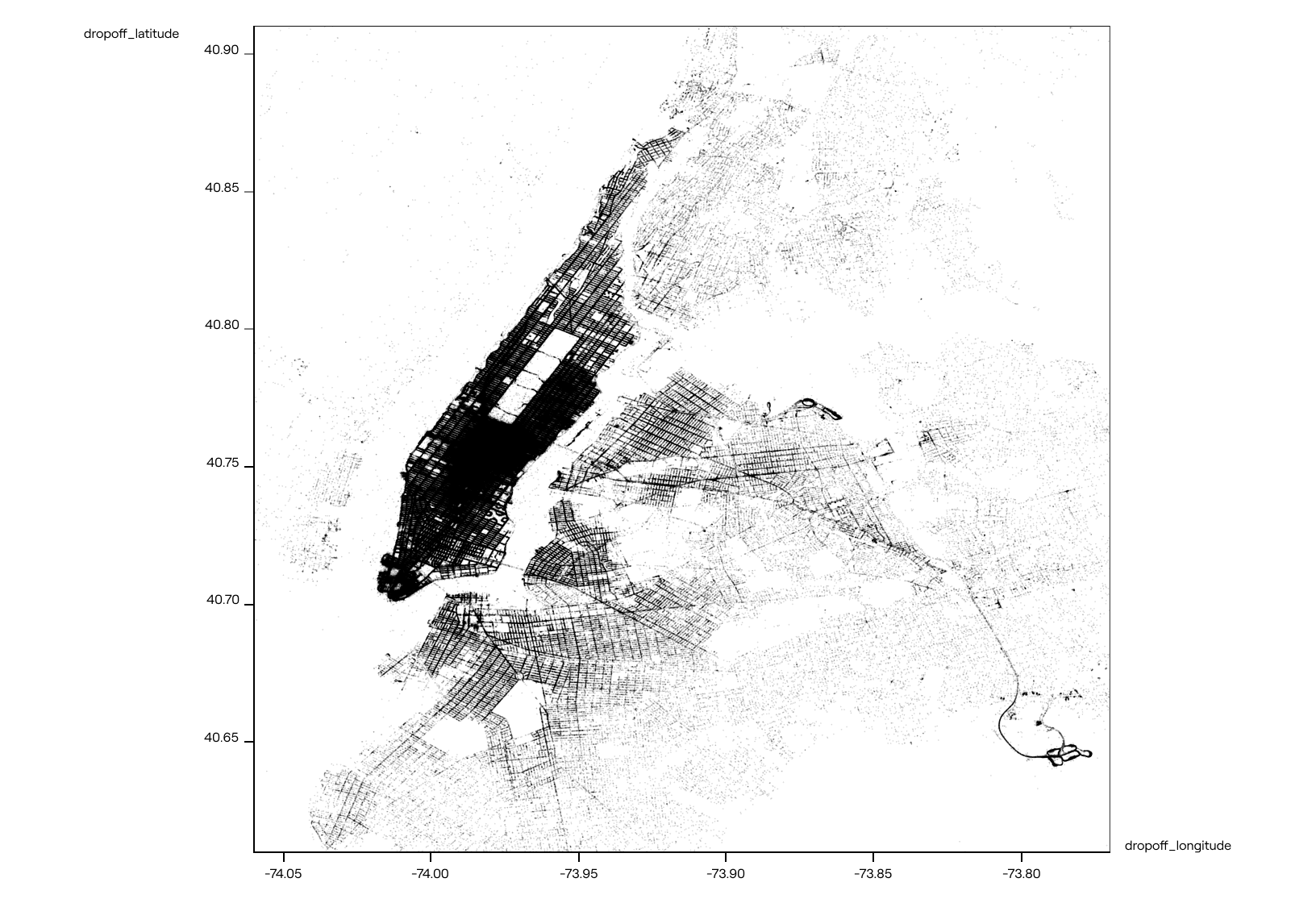

In my example, I wanted to plot the prevalence of dropoff locations, so I mapped it:

new_style = {'grid': False} #Remove grid

matplotlib.rc('axes', **new_style)

from matplotlib import rcParams

rcParams['figure.figsize'] = (10.5, 10) #Size of figure

rcParams['figure.dpi'] = 250

P=df.plot(kind='scatter', x='dropoff_longitude', y='dropoff_latitude',color='black',xlim=(-74.06,-73.77),ylim=(40.61, 40.91),s=.02,alpha=.6)

Clickhouse-driver

It is a similar setup as SQLAlchemy. I chose to use the numpy setting and then leverage query_dataframe to return directly into pandas dataframe format.

Also note the secure=True line in the code for connecting to DoubleCloud.

With ClickHouse driver.

Leverage settings={'use_numpy': True} for NumPy/Pandas compatability

use query_dataframe to return results directly to DF.

Note

secure=True is necessary for DoubleCloud’s secure connection.

from clickhouse_driver import Client

client = Client(

host=host,

user=user,

password=password,

port=9440,

secure=True,

settings={'use_numpy': True}

)

client.execute('SHOW TABLES FROM nyc')

[array(['trips'], dtype='<U5')]

In the next cell, I just hide the enum warnings to keep the cells from expanding across my screen.

%%capture --no-display

#hide enum warnings

df2 = client.query_dataframe('SELECT * FROM nyc.trips')

df2.tail()

Once the data is in a dataframe, plotting is the same as above, so no need to plot again.

Conclusion

Jupyter Notebooks are prevalent in the data science and data analytics fields.

Connecting to DoubleCloud’s Managed Clickhouse leverages either of the open-source drivers that have been developed.

Personally, I like the SQLALchemy driver with the %sql magic commands and thus allowing for a clearer sql interface for accessing the data within DoubleCloud’s managed ClickHouse and delivering to dataframes within my notebook environment.

Give it a try and let us know in Slack if you have any issues.

ClickHouse® is a trademark of ClickHouse, Inc. https://clickhouse.com.

In this article, we’ll talk about:

Start your trial today