Transferring data… Everything you’ll ever need to know

Written by Andrei Tserakhau, DoubleCloud

Data… it’s such a small word isn’t it?

And yet… it causes so many problems. Acquiring it, storing it, moving it, cleaning it… actually doing something with it!

With the right tool (hint… DoubleCloud) the right type of data, employed in the right way, can be invaluable.

So what’s the problem?

Well actually it’s not a problem… it’s problem (s), plural.

The one I’ll be focussing on today is (as you probably guess from the title) transferring data.

You see, The first problem with data is how spread out it can get meaning to do anything with it, it has to be transferred somewhere.

Organizations collect data, their different departments or even individual employees collect data, it gets stored in different places, in different formats… it’s a mess!

So to ‘tidy’ it up it needs moving (or transferring).

How does transferring data work?

Sounds simple right?

You take some data from here, you put it there… Congratulations! You now have data!

Well let me tell you, if it was that simple I, and a lot of people like me, wouldn’t have jobs.

You see, the devils in the details…

First of all we need to define what data ‘is’.

What is data?

As per Wikipedia (as their definition will be a lot better than mine will be):

Data — is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted.

Ok, so maybe I can define it a little better. For me, data is something that stores information and has some kind of structure to it… like a spreadsheet in which every row and column contains a piece of information.

Moving it from one place to another requires two completely separate types of storage… one to initially hold it and one to move it too.

What data will we be moving though?

Well… there’s plenty to choose from!

It could be tables from databases, structured or semi-structured data pulled from an API, raw file or a streaming platform (Like Apache Kafka® for instance) or even completely uncategorised data that looks useful and has some kind of structure to it.

Whatever the type of data however, before we start to move it we need to define the structure we want once it’s moved, how much data we’ll be moving and start to read it so it can be written elsewhere.

Now for tables that process isn’t difficult as almost any kind of table will have schema attached to it. That means to transfer a table from one database to another we just need to be able to define its schema, read the data and then write it into our new, target database… simples!

It gets a bit trickier with unstructured data (take my example of API’s earlier or maybe S3 files or even streaming messages) as we’re going to have to create some schema for it ourselves.

Now if you’re lucky this can just be done once.

With tasks like API’s or S3 buckets, we’ll always know what type of structure will be required so it’s fairly easy to accomplish but for other tasks there’s little choice but to make each decision on a case-by-case basis.

This creates what we call a snapshot of your data.

Relying on Wikipedia again, a snapshot of your data can be defined as…

What’s a snapshot?

Snapshot is the state of a system at a particular point in time.

The term was coined as an analogy to that in photography. It can refer to an actual copy of the state of a system or to a capability provided by certain systems.

It’s even easier with tables as each database you create can be configured so that it can be operated via an API (I like to use SQL for this) so we can select all the data we need from the table to be written.

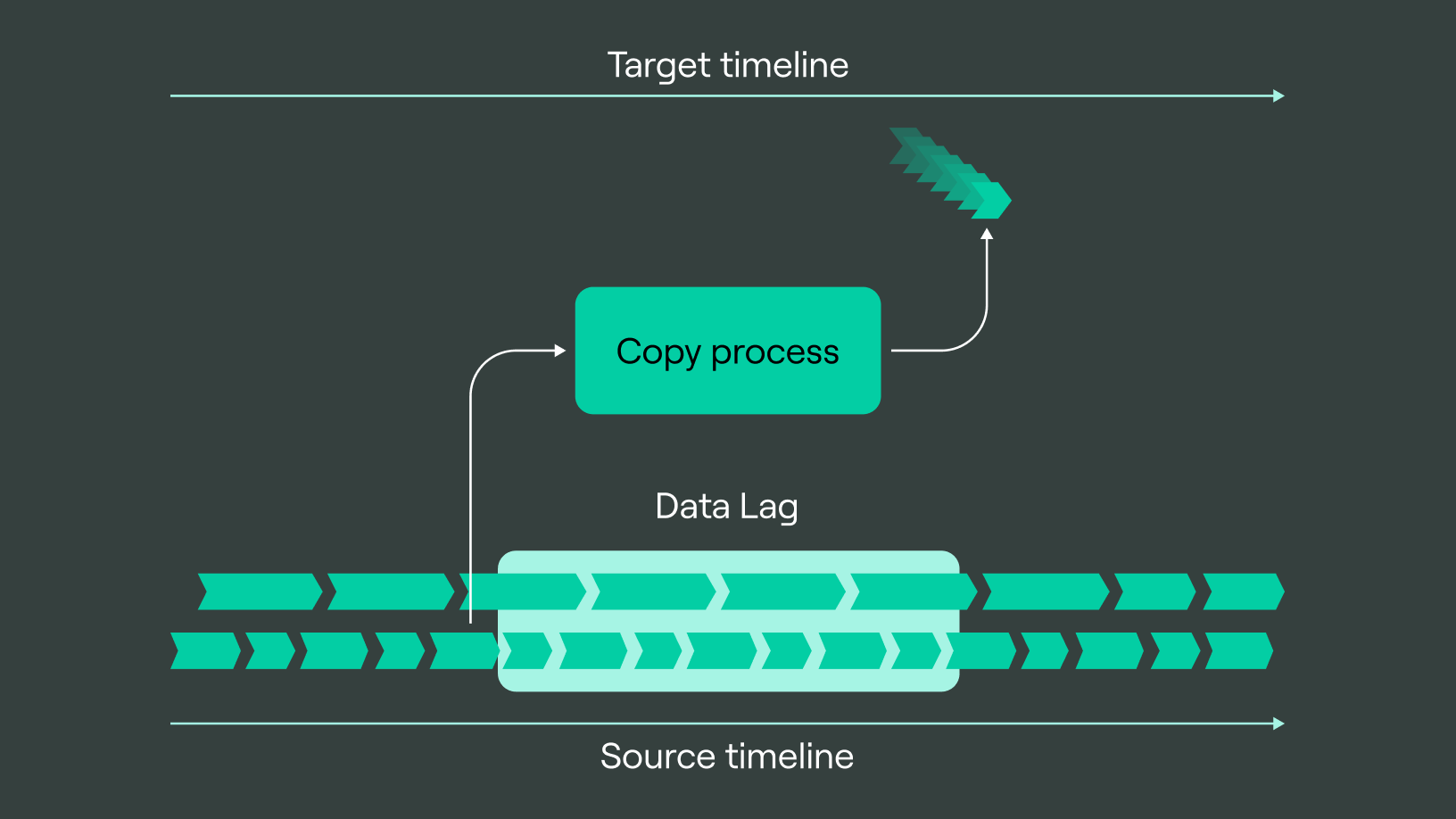

The problem comes when more data comes in.

As data moves from one platform to the next, more comes into the first, so you’re ‘snapshot’ isn’t accurate anymore.

That creates a problem for anyone relying on real-time data (or close to real-time data) and is know as Data Latency.

To once again get a ‘live’ view of your data you need to once more copy it across… which is fine till more data comes in… so you copy it across… and more comes in… so you copy it across…

Now that process is fine but eventually you’re going to have to answer the questions… how much data is coming in, how quickly can you keep transferring it and how important is ‘real-time’.

That’s where optimizing the process comes in…

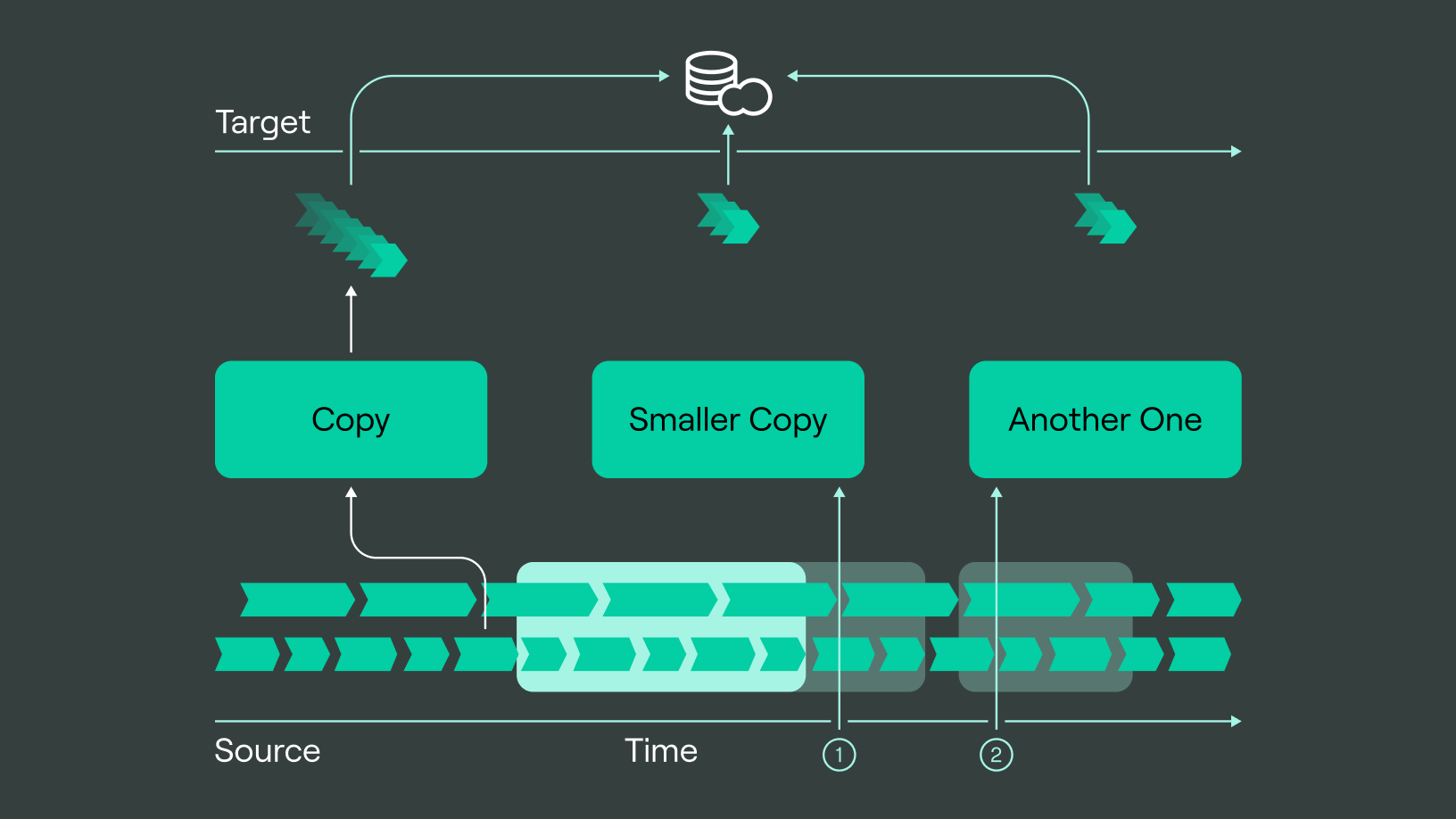

A quick and easy win is to only copy over new data, rather than constantly copying over the entire data set.

To do that, we need to add a marker of what’s already been copied so we know what to copy next time (and what not to copy).

To do that, we filter out already copied over data and store a cursor — in most instances this will be the universal cursor — time.

As in most API’s, there’ a thing usually called start_data or begin_time (or something similar) which will let us filter out any old, already transferred data… and it will work the same in a database too.

You just need to a column created_at or modified_at and the start selecting data with where created_at > today() – 1 SQL predicate.

Although I’ve heard it called different things, this is normally known as an incremental copy process — it’s simple to implement, incredibly scalable on small to mid-sized data sets and provides cursor-like column or data points.

Something to bear in mind with this technique however is that it will store all deleted records in your old data, meaning it won’t be consistent with a source if the source runs deletes.

What do I mean by that?

Let’s say you copy over today’s data but then delete a couple of records out of it (either accidently or because you didn’t need them).

The next time you transfer data, we’ll only see any new data… not the deleted records.

To fix this we’re going to need to take a dive into the murky workings of the inside of a database.

Don’t worry though… it’ll just be a short dive as we cover a concept known as Write Ahead Logging.

How to use write ahead logging

As you probably know… any database is just a log of operations that need to be done.

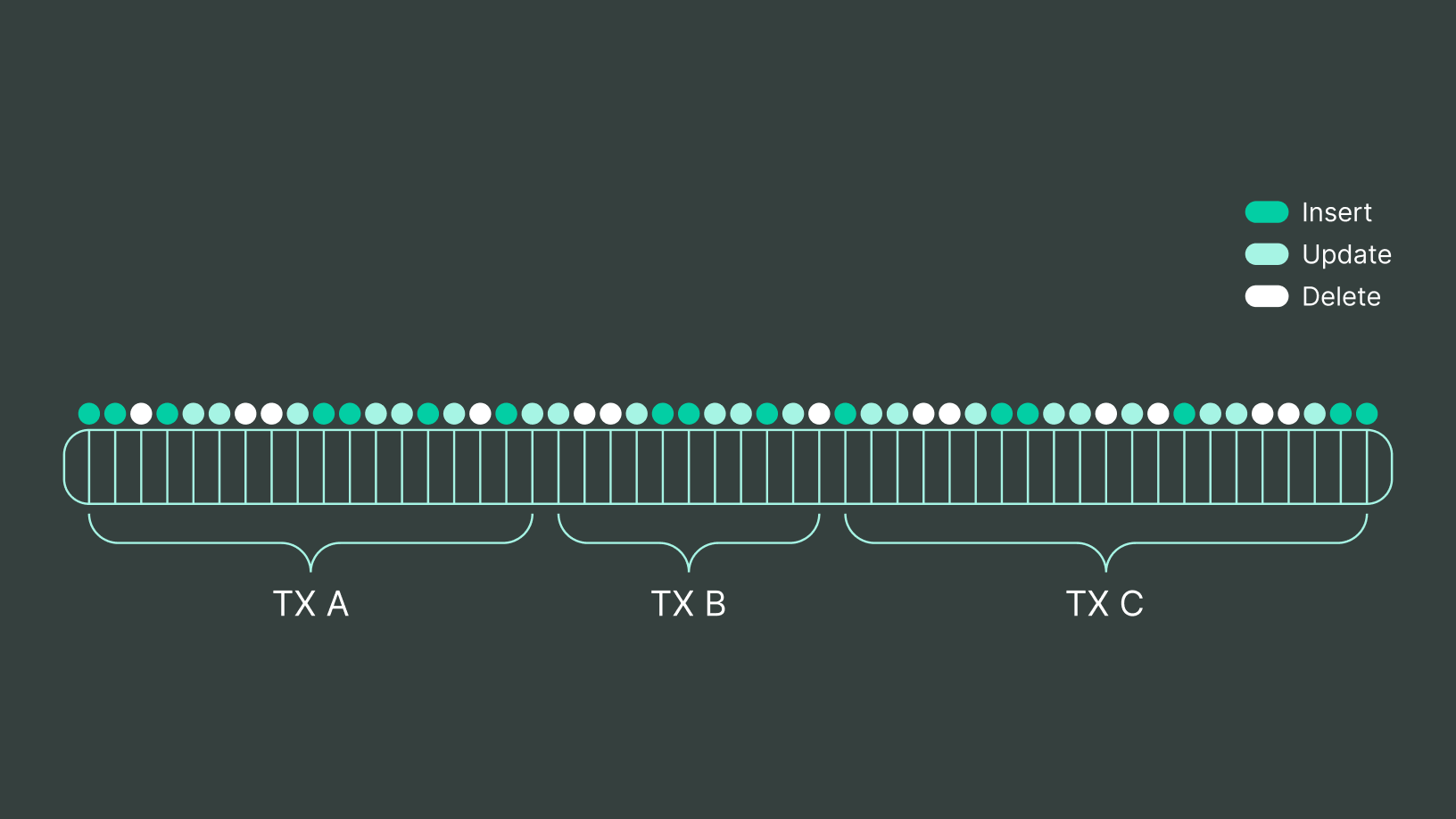

That log is called the write-ahead-log and inside it there’ll be transaction bounded operations that database users can apply to their rows.

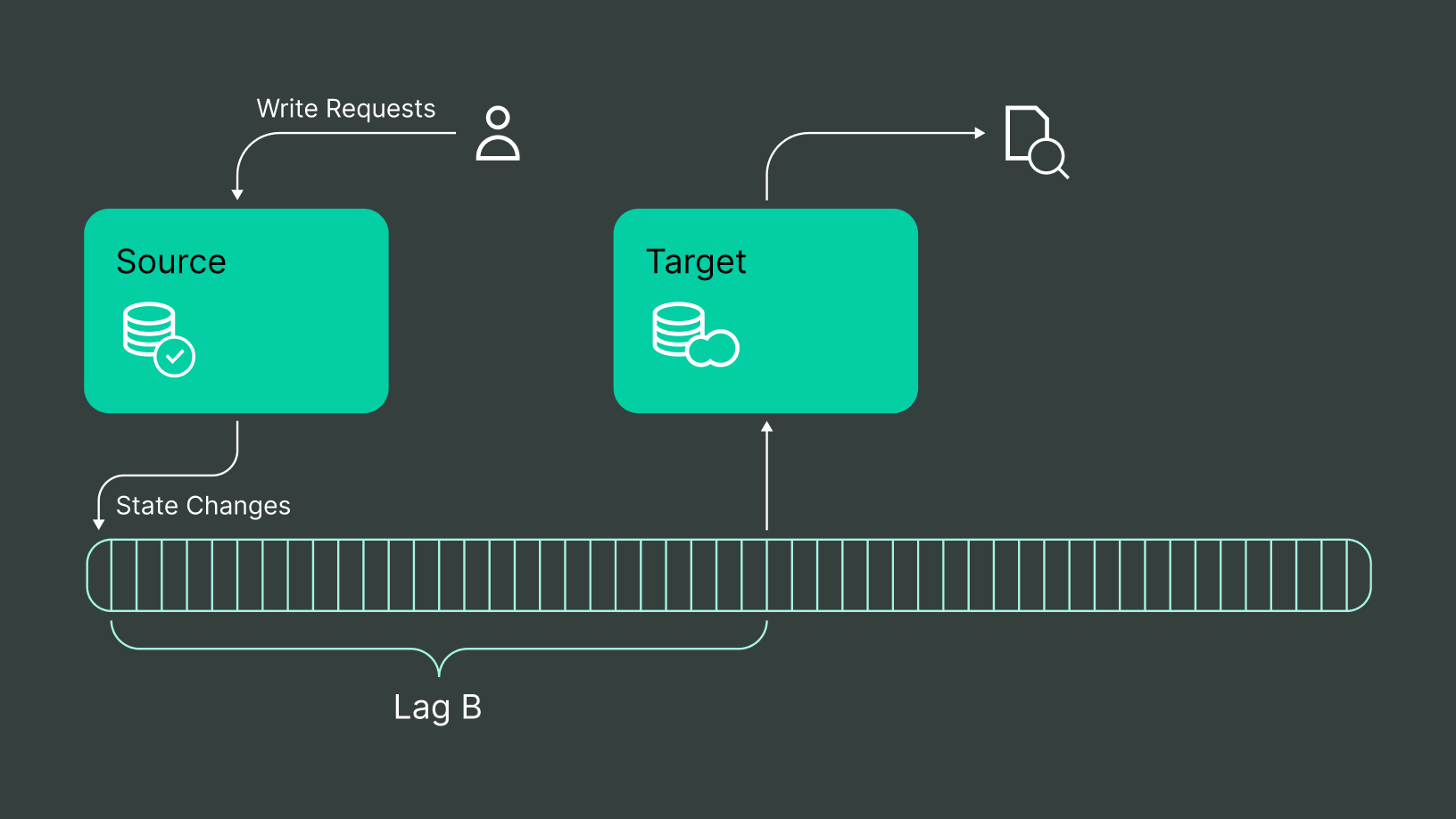

Now with that in mind we can build a process to take our source write ahead log and apply it to the target database in flight.

Doing this solves our data latency issue (as we only need to transfer over a small percentage of the data) and our consistency issues (as we can now apply, delete and update operations too).

That process is normally called a Change Data Capture (sometimes shortened to just CDC).

What’s a change data capture?

Relying on Wikipedia one last time…

In databases, change data capture (CDC) is a set of software design patterns used to determine and track the data that has changed so that action can be taken using the changed data. CDC is an approach to data integration that is based on the identification, capture and delivery of the changes made to enterprise data sources.

CDC (not to be confused with the center for disease control — this is an article about data!) will normally let us replicate data from the source through to the target.

I’ve always found it a really efficient (if a bit complicated sometimes) way to transfer data.

As you can see, transferring data around isn’t always as easy as it looks!

That why we built a platform that already implements all these techniques for you so you don’t have to worry about them (and you can learn more about data transfer here >>)

Apache® and Apache Kafka® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

In this article, we’ll talk about:

Start your trial today