ETL vs ELT: Choosing the right approach for your data integration needs

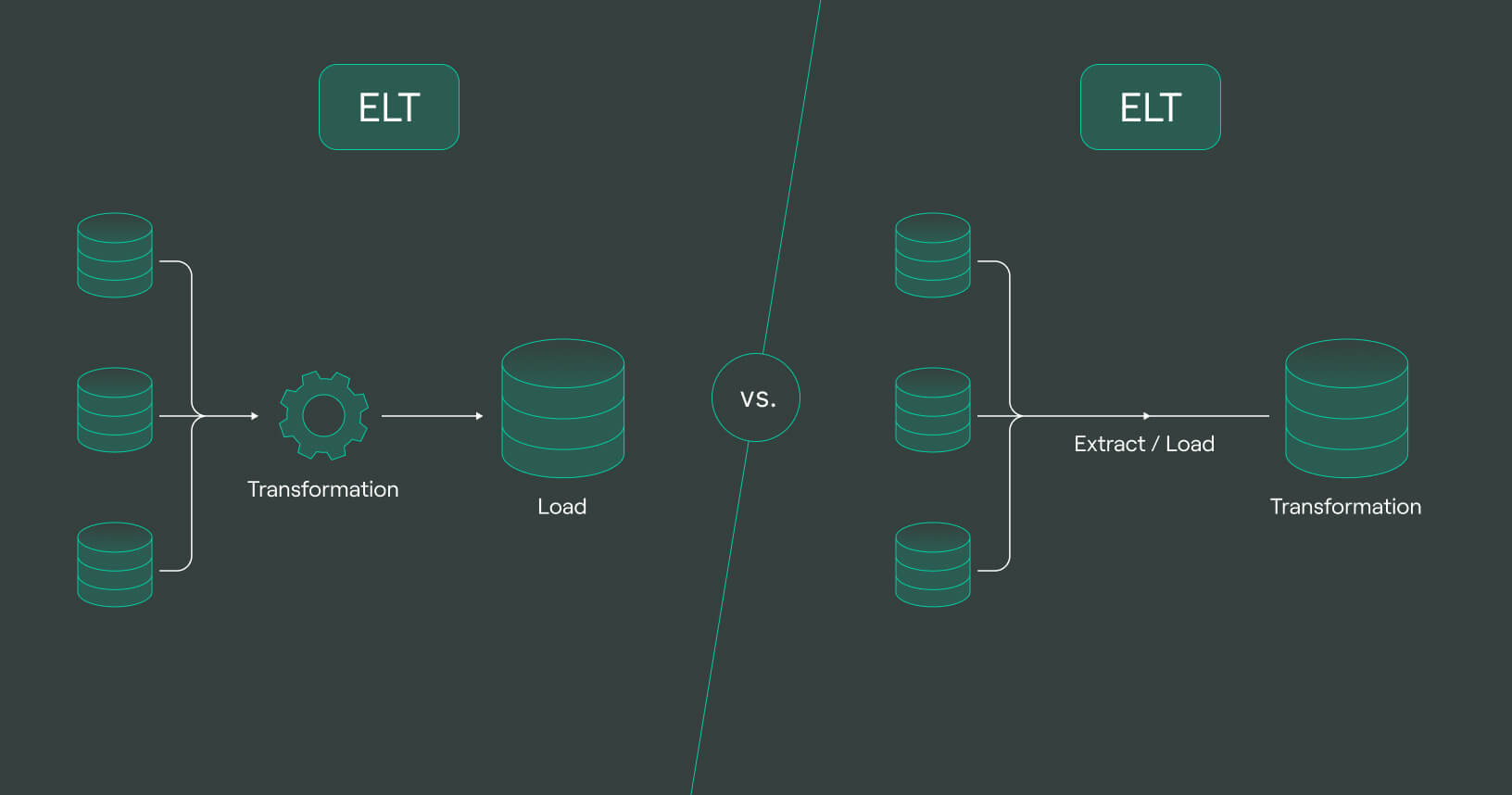

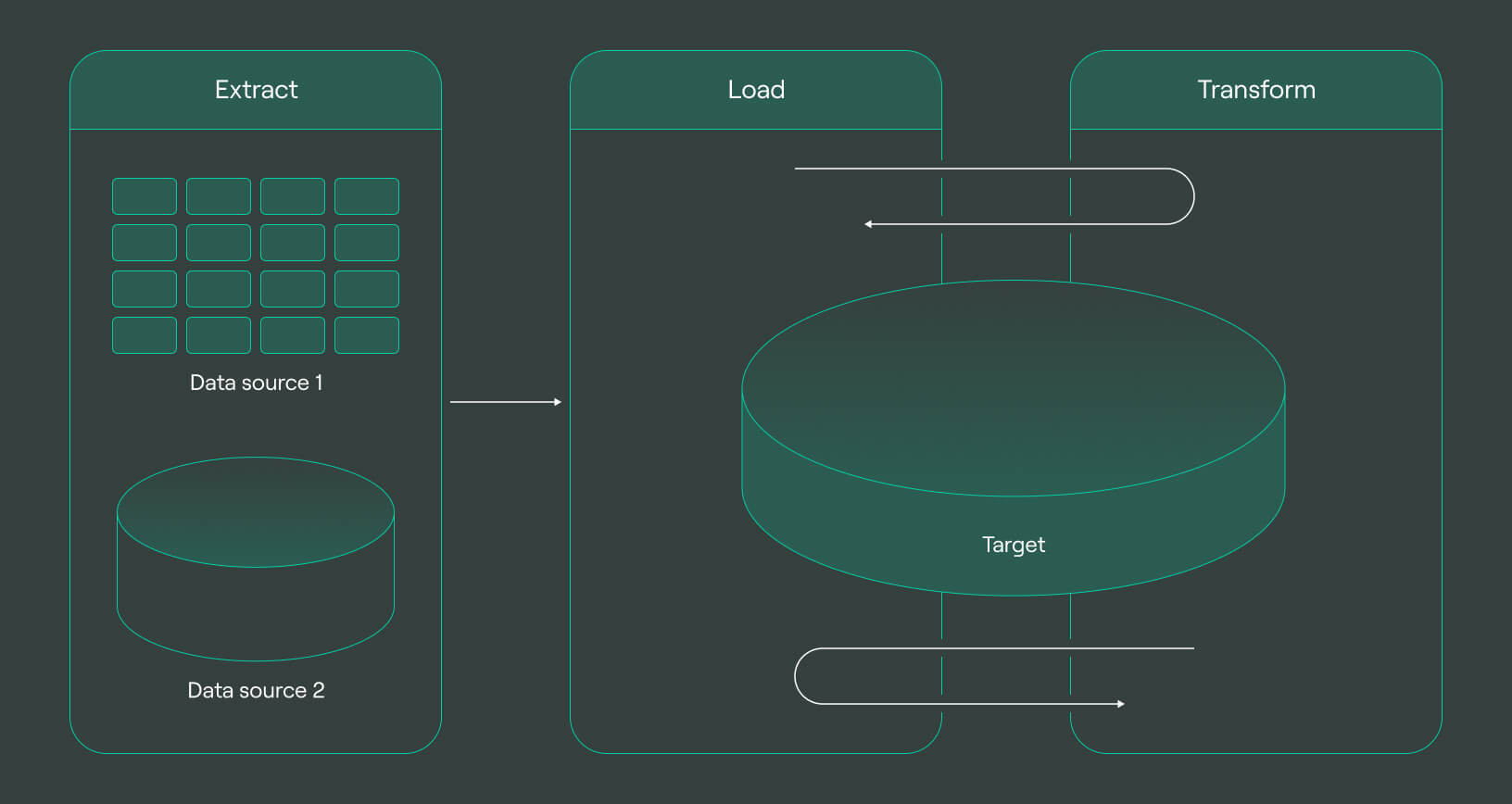

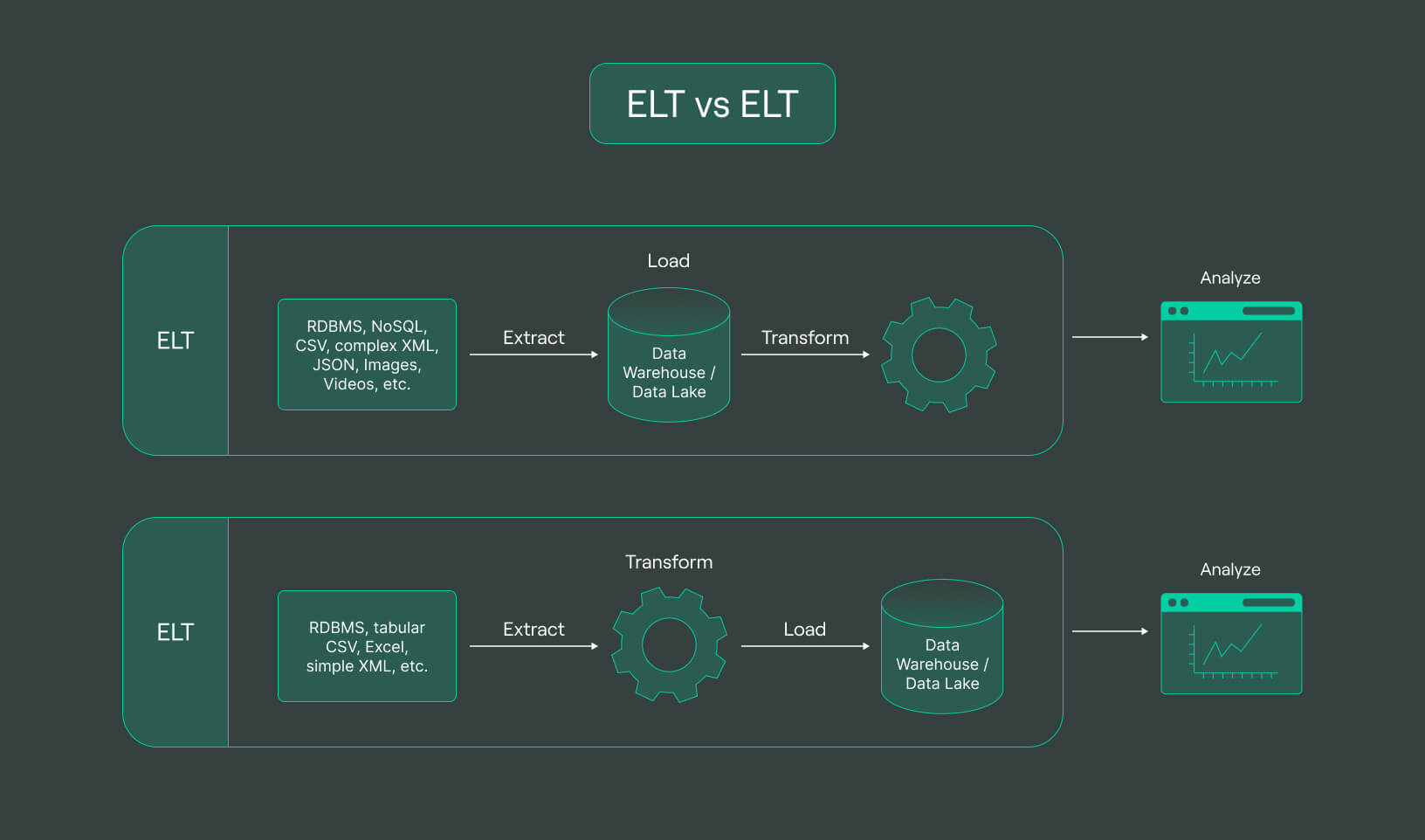

In data integration, ETL and ELT are two popular approaches for moving and transforming data from source systems to a target system. ETL (Extract, Transform, Load) extracts data from source systems, transforms it to fit the target system, and then loads it into the target system. ELT (Extract, Load, Transform) loads the data into the target system first and transforms it there. This article explores the differences between the two approaches and helps you decide which is best suited to your data integration needs.

Why is it important to know the difference between ETL and ELT?

Understanding the difference between ETL and ELT is crucial, because choosing the right data integration method can have a significant impact on the success of your data transformation process. ETL is suitable for processing large volumes of structured or unstructured data and performing complex data transformations. ELT, on the other hand, is better suited for simple data transformations and data warehousing. Which method to use depends on factors such as the size of the data, the complexity of the data pipelines, the processing power required, and the target data system.

Choosing the right data integration method is essential for businesses that want to process raw data collected from multiple data sources, such as data lakes, data warehouses, and other heterogeneous data sources. The transformation process involves extracting data from the source data repositories, transforming the data to match the data models of the target system, and loading the transformed data into the target system.

Businesses utilizing DoubleCloud’s platform for their Cloud ETL tools experience seamless and effective integration of their data, resulting in rapid generation of valuable insights. By leveraging open-source technologies such as ClickHouse® and Apache Kafka®, DoubleCloud empowers its clients to construct data analytical solutions and pipelines that can process information in under a second, with the entire setup process taking no longer than 5 minutes.

What is ETL?

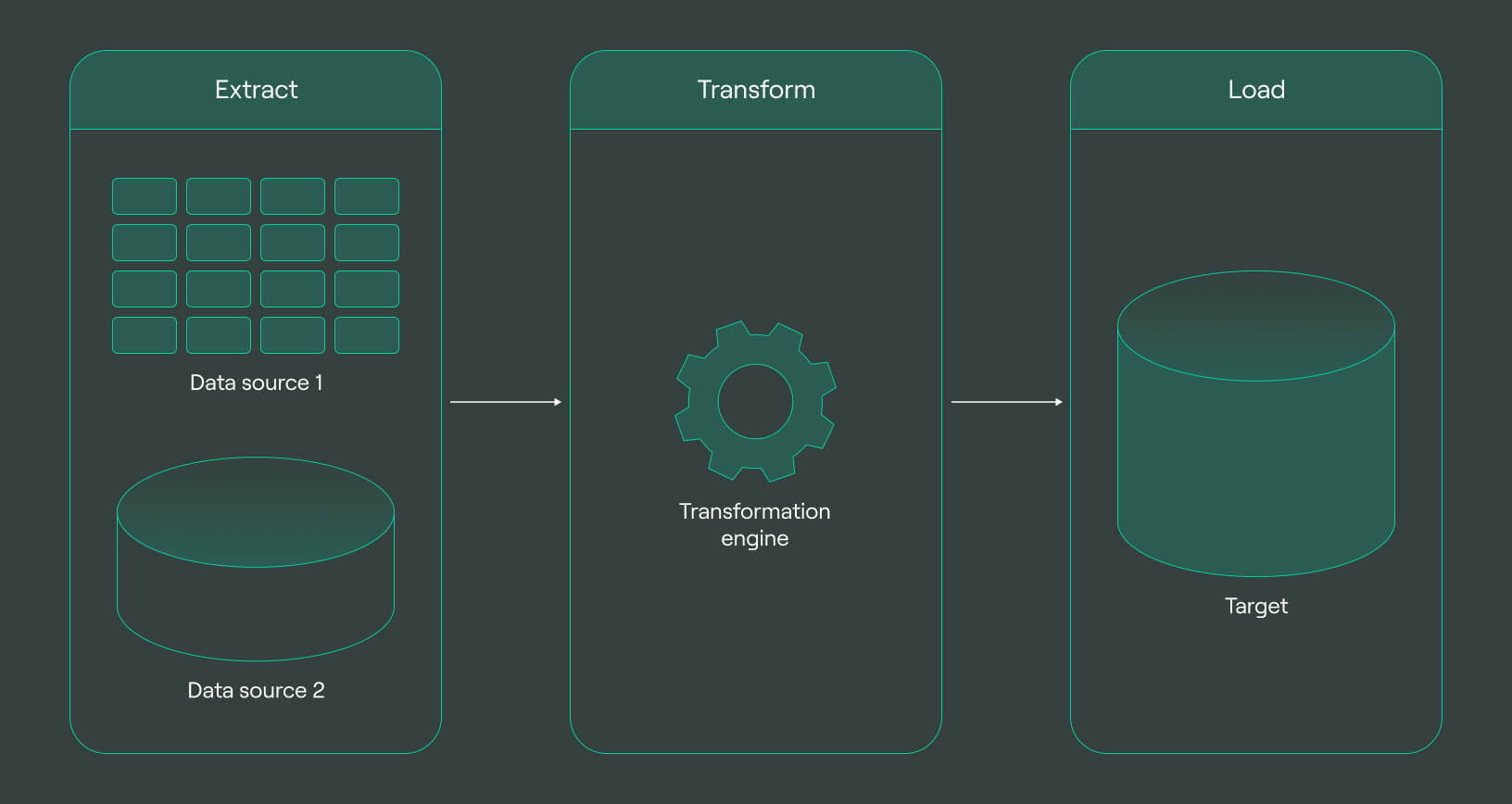

ETL is a data integration method that consolidates raw data from multiple sources by extracting, transforming, and loading it into a target system. The process involves data engineers building pipelines that transfer and transform the data into a consistent format, while also ensuring data security by encrypting sensitive data.

ETL is particularly useful for processing large volumes of structured or unstructured data and performing complex data transformations. It is commonly used to support business intelligence systems. Cloud-based data warehouses are increasingly popular targets for ETL processes due to their scalability and cost-effectiveness.

Process of ETL

The ETL process collects raw data from various data sources, including structured and unstructured data, and transforms it to ensure consistency and quality before consolidating it in a data warehouse or data lake (https://double.cloud/blog/posts/2023/04/data-lake-vs-data-warehouse/). The transformation step can involve complex data pipelines, which require significant processing power to transform and load the data. Data security is crucial during ETL, especially for sensitive data, which may require encryption.

ETL is an essential process for data integration, and choosing the right data integration method is critical for effective business intelligence systems.
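The extract, transform, and load steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the CSV content, the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read files, APIs, or databases.
RAW_CSV = """order_id,customer,amount
1, Alice ,10.50
2,bob,20.00
3,Carol,
"""


def extract(text):
    """Extract: read raw rows from the source (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize names, cast types, and skip rows with no amount."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # incomplete record, dropped before it reaches the target
        clean.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(row["amount"]))
        )
    return clean


def load(rows, conn):
    """Load: write the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(conn):
    """Run the three steps in ETL order and return what landed in the target."""
    load(transform(extract(RAW_CSV)), conn)
    return conn.execute(
        "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` leaves only the cleaned rows in the target; the raw, incomplete record never reaches it, which is the defining property of transforming before loading.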

Advantages of ETL

- Scalability: ETL can handle large volumes of data from multiple sources, making it scalable for enterprise-level data integration.
- Data Quality: ETL can ensure data quality by filtering out duplicate, incomplete, or inaccurate data, resulting in a more accurate and trustworthy data warehouse.
- Data Transformation: ETL can transform raw data, whether structured or unstructured, to fit the target data system’s schema or format. This allows for easier analysis and interpretation of the data.
- Speed: ETL can quickly process large amounts of data using its transformation processes, making it a faster option than manual data integration.
- Cost-effective: ETL can automate data integration processes, reducing the need for manual labor and minimizing costs associated with data integration.
- Data Security: ETL can encrypt sensitive data during the loading process and ensure compliance with data governance regulations.
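As a rough illustration of protecting sensitive fields before they are loaded, the sketch below replaces them with keyed hashes. Note the swap: this is pseudonymization with HMAC-SHA256 from Python's standard library, not encryption, because the standard library has no symmetric cipher. The key, the field names, and the function names are assumptions made for the example.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager, not source code.
SECRET_KEY = b"replace-me"
SENSITIVE_FIELDS = {"email", "phone"}  # assumed field names, for illustration only


def pseudonymize(value, key=SECRET_KEY):
    """Return a keyed SHA-256 digest so the raw value never reaches the target."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record):
    """Replace sensitive fields with their digests; leave other fields untouched."""
    return {
        field: pseudonymize(value) if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }
```

Because the digest is deterministic for a given key, masked values can still be used to join or deduplicate records in the target system, which is one reason this step fits naturally into the transform stage.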

Limitations of ETL

- Time-consuming: The ETL process can be time-consuming, especially when dealing with large amounts of data, and can cause delays in data availability.
- Complex Data Pipelines: ETL pipelines can be complex and difficult to maintain, especially when dealing with multiple or heterogeneous data sources.
- High Processing Power: ETL requires significant processing power to transform and load data, which can be expensive.
- Inability to Handle Real-time Data: ETL is not suitable for handling real-time data, as it relies on batch processing and may not provide timely updates to data warehouses.
- Data Governance: ETL may pose data governance challenges, as it requires careful management of data repositories, source data, and target data systems.

Use cases

- Data Warehousing: ETL plays a significant role in building and maintaining data warehouses. It extracts data from multiple data sources, such as databases, files, and web services, and transforms it into a consistent format, which is loaded into a data warehouse for easy analysis and reporting.
- Data Migration: ETL is used to move data from one system to another during system upgrades or migrations. It extracts data from the source system, transforms it into the required format, and loads it into the target system.
- Data Integration: ETL is used to integrate data from different sources and provide a unified view of the data. It extracts data from various sources, transforms it into a consistent format, and loads it into a target system.
- Data Cleansing: ETL is used to clean and transform data by removing duplicates, correcting errors, and filling missing values. This helps to improve data quality and ensure that the data is accurate and consistent.
- Data Aggregation: ETL is used to aggregate data from multiple sources and transform it into a format that can be analyzed easily. It extracts data from various sources, transforms it, and loads it into a target system for analysis.
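The cleansing and aggregation use cases above can be made concrete with a short sketch. The record layout (`order_id`, `region`, `amount`), the `"unknown"` default, and the function names are hypothetical choices for the example.

```python
from collections import defaultdict


def cleanse(records, default_region="unknown"):
    """Remove duplicates, normalize region casing, and fill missing regions."""
    seen = set()
    cleaned = []
    for rec in records:
        # Fill a missing region, then normalize whitespace and casing.
        region = (rec.get("region") or default_region).strip().lower()
        row = (rec["order_id"], region, float(rec["amount"]))
        if row in seen:
            continue  # duplicate after normalization
        seen.add(row)
        cleaned.append(row)
    return cleaned


def aggregate(rows):
    """Sum amounts per region so the result is ready for analysis."""
    totals = defaultdict(float)
    for _order_id, region, amount in rows:
        totals[region] += amount
    return dict(totals)
```

Normalizing before deduplicating matters here: two records that differ only in casing or stray whitespace are treated as the same row, so they are not counted twice in the aggregate.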

What is ELT?

ELT is a data integration method that involves loading raw data directly into a target data system, then using its processing power to transform and process the data. This method is particularly useful for handling large volumes of unstructured data and complex data pipelines, as well as for cloud-based data warehouses. ELT can also help to reduce the time and complexity of the transformation process by allowing data engineers to work with the transformed data directly in the target system. The key difference between ELT and ETL is the order in which the data is transformed and loaded.

Process of ELT

ELT (Extract, Load, Transform) is a data integration process that extracts raw data from various sources, such as data lakes, data warehouses, and cloud-based data warehouses. The extracted data is then loaded into a target system, such as a data warehouse or data lake, without transforming it. Once the data is loaded into the target system, it is transformed into the desired format using various data transformation processes.

The ELT process is an alternative to ETL (Extract, Transform, Load), which transforms the data before it is loaded into the target system. With ELT, the transformation work shifts from a separate processing stage to the target system, allowing for more complex data pipelines and the ability to process larger amounts of data. ELT also allows for the processing of unstructured and structured data and encrypts sensitive data during the loading process.
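To show the reversed order, here is a minimal ELT sketch in which raw rows are landed first and the target engine performs the transformation in SQL. An in-memory SQLite database stands in for the target data warehouse; the table names (`raw_orders`, `orders`), the sample rows, and the function names are assumptions for illustration.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as extracted (all text, no cleanup).
RAW_ROWS = [
    ("1", " Alice ", "10.50"),
    ("2", "bob", "20.00"),
    ("3", "Carol", ""),
]


def load_raw(conn, rows):
    """Load: land the untransformed rows in a staging table in the target."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def transform_in_target(conn):
    """Transform: the target engine does the work, expressed in SQL."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               TRIM(customer)            AS customer,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders
        WHERE TRIM(amount) <> ''
        """
    )


def run_elt(conn):
    """Run load and transform in ELT order and return the transformed table."""
    load_raw(conn, RAW_ROWS)
    transform_in_target(conn)
    return conn.execute(
        "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Unlike the ETL order, the raw table remains available in the target after the run, so a transformation can be changed and re-run without extracting the data again. The trade-off is that unmasked, incomplete records also live in the target system.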

Advantages of ELT

- Better processing power: ELT can leverage the processing power of the target system, which is typically a data warehouse, to transform the data. This can result in faster processing times compared to ETL.
- Scalability: ELT can handle large and complex data pipelines, making it an ideal solution for enterprise-level data integration needs.
- Cost-effectiveness: ELT can reduce costs by using cloud-based data warehouses, which can be more cost-effective than onsite data warehouses.
- Greater flexibility: ELT offers greater flexibility by allowing data engineers to manipulate data using SQL, making it easier to perform transformations and load data.
- Better data quality: ELT can improve data quality by allowing data engineers to apply quality control processes to the data after it is loaded into the data warehouse.
- Increased data security: ELT can encrypt sensitive data during the loading process, enhancing data security.
- Multiple data sources: ELT can handle multiple data sources, including both structured and unstructured data, making it easier to consolidate and integrate data from various sources.

Limitations of ELT

- Processing power: ELT requires more processing power and resources than ETL because data transformations occur after the data is loaded into the target system.
- Complex data pipelines: As data pipelines become more complex, ELT may not be the best approach because it can slow down the data loading and transformation process.
- Data security: With ELT, sensitive data can be vulnerable during the transformation process, and it’s important to have strong data security measures in place.
- Data quality: Poor data quality in the source data can lead to poor quality transformed data in the target system, so it’s crucial to have good data governance and management processes in place.
- Transformation processes: ELT requires a well-defined transformation process, and it can be challenging to develop and manage a process that is efficient and effective.
- Integration methods: While ELT can work with many data integration methods, it may not be the right method for all data integration scenarios. It’s important to choose the right data integration method for the specific use case.

Use cases

- Data Warehousing: ELT can be used to integrate and consolidate data from multiple sources into a single data warehouse, allowing for centralized data management and analysis.
- Data Lake Integration: ELT can be used to transform and load data into a data lake, allowing for large volumes of data to be stored and analyzed in a flexible and scalable manner.
- Cloud-Based Data Warehouses: ELT is well-suited for cloud-based data warehouses, as it can handle large volumes of data with ease and can easily scale up or down depending on business needs.
- Real-Time Data Integration: ELT can be used to integrate real-time data streams from multiple sources into a single target system, providing organizations with up-to-the-minute insights into their operations.
- Legacy System Integration: ELT can be used to integrate data from legacy systems into modern data platforms, allowing organizations to leverage their existing investments in data infrastructure.

5 key differences between ETL and ELT

- Data Transformation: In ETL, data is transformed before it is loaded into the target system, while in ELT, data is loaded into the target system before being transformed. This means that ELT has greater flexibility when it comes to data transformation, as it can take advantage of the processing power and capabilities of the target system.
- Processing Power: ELT leverages the processing power of the target system for data transformation, while ETL requires a separate processing environment to perform data transformations. This makes ELT better suited for processing large, complex data pipelines.
- Data Size: ELT is better suited for processing large data volumes, as it can take advantage of the processing power of the target system. ETL, on the other hand, may struggle with large data volumes as it requires a separate processing environment.
- Data Security: ELT can encrypt sensitive data at rest in the target system, which can provide better security for sensitive data. In ETL, data may need to be decrypted before it can be transformed, which can pose security risks.
- Data Management: ELT allows for more efficient data management, as data can be consolidated and transformed within the target system. ETL may require more data movement and management, which can be time-consuming and increase the risk of errors.

ETL vs ELT: Key differences and considerations

There are several key differences between the ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes in data integration, summarized in the following table.

| Difference | ETL | ELT |
| --- | --- | --- |
| Data Processing Order | Extract data, transform data, load data | Extract data, load data, transform data |
| Transformation Location | Performed in the ETL tool | Performed in the target data warehouse |
| Data Integration Complexity | High, requires complex ETL tool setup and management | Low, relies on the processing power of the target data warehouse |
| Data Processing Speed | Slower due to the transformation process taking place during ETL | Faster since the data is loaded into the target data warehouse first and then transformed |
| Data Volume Scalability | Limited, as processing large data volumes requires expensive ETL hardware | Highly scalable due to the processing power of modern data warehouses |
| Data Quality Management | Centralized and comprehensive data quality management | Decentralized data quality management |
| Data Warehousing Architecture | On-premise and cloud-based data warehouses | Primarily cloud-based data warehouses |
| Flexibility and Adaptability | Rigid due to the pre-defined ETL process | More flexible, allowing for changes in data sources or target systems |
| Connectivity Options | Limited by the ETL tool’s compatibility with data sources and target systems | More connectivity options available, since ELT relies on the target data warehouse’s connectivity capabilities |
| Data Governance and Compliance | Governed and managed through the ETL tool | Governed and managed through the target data warehouse |
| Data Latency and Real-Time Processing Capabilities | Limited real-time processing capabilities and high data latency | Higher real-time processing capabilities and lower data latency |
| Cost and Resource | High initial investment in hardware and software | Lower initial investment, with costs scaling based on data warehouse usage |
| Use Cases and Application | Best for complex data transformation and integration scenarios | Best for data warehousing and business intelligence use cases |
| Availability and Suitability | Suitable for large enterprises with complex data processing requirements | Suitable for businesses of all sizes, especially those looking for scalable and cost-effective solutions |
| Implementation | Complex due to ETL tool setup and maintenance | Simplified, with no need for complex ETL tool setup and maintenance |
| Complexity | High due to the need for ETL tool management and data transformation processes | Low due to the simplified ELT process, with data transformation handled by the target data warehouse |

Are there any similarities between ETL and ELT?

ETL and ELT have many similarities in terms of their core functionality and purpose. ETL and ELT are both data integration processes used to transform and load data into a data warehouse or other target system. Both processes involve extracting data from various sources, transforming the data into a format that can be easily loaded into the target system, and loading the data into the target system.

ETL and ELT can also handle a variety of data types, including structured and unstructured data. They also support data processing from different sources, including cloud-based and on-site data warehouses. The two data integration processes require careful attention to data quality and data governance to ensure accuracy and compliance.

How to choose between ETL and ELT

Choosing between ETL and ELT requires a thorough understanding of business requirements, data sources and targets, data processing needs, technical constraints, and ROI/TCO. Firstly, identify the business needs and use cases for data integration, such as business intelligence or analytics.

Secondly, evaluate the data sources and targets, including the data types, volume, and location. Analyze data processing needs, including the complexity of data transformation and processing speed required.

Thirdly, identify technical constraints such as the processing power, data latency, and security concerns. Finally, evaluate the ROI and TCO, including costs for licenses, hardware, maintenance, and resource requirements. By considering these factors, you can choose between ETL and ELT based on which method best meets your specific business requirements and technical constraints.
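One way to make this evaluation repeatable is to record the answers in a small structure and apply explicit rules. The sketch below is only illustrative: the criteria names and the rules are assumptions loosely drawn from the comparison in this article, not a definitive decision procedure, and ROI/TCO is deliberately left out because it cannot be reduced to a yes/no flag.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical yes/no answers gathered during the evaluation."""
    target_can_transform: bool     # target warehouse has processing power to spare
    must_mask_before_load: bool    # sensitive data must not land raw in the target
    large_or_growing_volume: bool  # data volume is large or expected to grow
    needs_low_latency: bool        # data must be available soon after extraction


def suggest_approach(req):
    """Return ('ETL' or 'ELT', reasons) using illustrative rules only."""
    reasons = []
    if req.must_mask_before_load:
        reasons.append("sensitive data must be transformed before loading")
        return "ETL", reasons
    if not req.target_can_transform:
        reasons.append("target system cannot run the transformations")
        return "ETL", reasons
    if req.large_or_growing_volume:
        reasons.append("target system scales with data volume")
    if req.needs_low_latency:
        reasons.append("loading first makes data available sooner")
    return "ELT", reasons
```

Returning the reasons alongside the suggestion keeps the outcome reviewable, so the rules can be challenged and adjusted as requirements or constraints change.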

Final words

When it comes to choosing between ETL and ELT for data integration needs, it’s crucial to evaluate the business requirements, data sources and targets, data processing needs, technical constraints, and the ROI and TCO. While ETL and ELT approaches have some key differences, they also share similarities, such as their ability to process structured and unstructured data, and their use in both onsite and cloud-based data warehouses. To determine the right data integration method for a particular task, it’s essential to consider factors like data security, data size, transformation processes, processing power, and complex data pipelines.

Ultimately, choosing the right approach requires a careful assessment of the specific needs and goals of the enterprise data management system.

Frequently asked questions (FAQ)

Which is better ETL or ELT?

There is no one-size-fits-all answer to whether ETL or ELT is better, as it ultimately depends on the specific needs and goals of the organization. Both approaches have their advantages and disadvantages, and the right choice will depend on factors such as data volume and complexity, processing speed, data quality, technical constraints, and ROI and TCO considerations.

What are some technical considerations for implementing ETL or ELT?

Is ELT replacing ETL?

Is ETL outdated?
