The fastest way to ingest data into MySQL is… ClickHouse®

Written By: Stefan Kaeser, DoubleCloud Senior Solution Architect

MySQL.

It’s often the first choice for start-up’s looking for open-source software to store application data.

Now I’ve been working with MySQL myself for over two decades, and although I’ve seen and worked with other databases since then, it will always have a special place in my heart as it was the first one to show me how much fun working with SQL databases is.

Of course MySQL is an OLTP database, its strength is in serving multiple thousands of small queries and transactions, but it’s still one of the most often used DBMS for general workloads as well.

As I mentioned, a lot of companies start with MySQL, building their app (s) around it, watching it grow, and even though MySQL definitely wasn’t intended to be used as an analytical database, there are a lot of companies (and individuals) still out there using it for exactly this purpose.

And whilst there are a lot of good reasons why you shouldn’t use MySQL for big analytical workloads, MySQL is still a lot more capable of analytical processes than a lot of people think it is.

I’ve seen single MySQL instances having multiple Terabytes of data stored within itself, some with tables handling multiple billions of rows without bigger issues.

The concept of allowing different storage engines within the same environment allows MySQL to stretch over its own limits by several orders of magnitude.

I’ve seen quite impressive analytical queries achieved with the use of TokuDB or RocksDB storage engines that I never thought MySQL would be able to fulfill.

Still, MySQL was never meant to be used for big analytical workloads, as it was designed and built back in a time when clouds were meant to be in the sky and formats of data were proprietary instead of being used for big open exchanges.

So one of the biggest problems when doing analytical stuff in MySQL is still… How do you get the data in it?

1 billion rows to ingest — What to do?

Lately I’ve been running benchmarks on different DBMS.

The data set itself contained around 1 billion rows of data, split into yearly chunks of around 10 million rows, formatted in one line of JSON data per row, compressed via gzip, and located in a bucket in Amazon’s S3.

Each row has 11 columns, but for our benchmark we only need to extract 5 of those: date, station_id, tempMax, tempMin, tempAvg

For this blog, I will limit our data set to only one year of data, choosing 2020 at random.

The gzip compressed JSON data is 98MB.

When decompressed the file size grows to 2.7 GB, which is expected as the column names contained within the JSON are of course very compressible.

The total number of rows contained within the file is 12,095,646.

For our benchmark we chose a small Amazon RDS instance with 2 vCPU cores, 8GB of RAM and enough storage space to store the entire billion rows.

As we only want to show ingestion logic within this blog post, we limited the data set to 2020, so the whole data set would fit into ram.

The table definition for our ingestion case is quite simple.

We have a primary key with 2 columns (date, station_id) and a secondary index on station_id for a somewhat realistic scenario:

CREATE TABLE `sensor_data` (

`station_id` char(11) CHARACTER SET ascii COLLATE ascii_bin NOT NULL,

`date` date NOT NULL,

`tempAvg` int DEFAULT NULL,

`tempMax` int DEFAULT NULL,

`tempMin` int DEFAULT NULL,

PRIMARY KEY (`date`,`station_id`),

KEY `station` (`station_id`)

) ENGINE=InnoDB;

I chose InnoDB as the storage engine, as it’s the most commonly used table engine in MySQL.

How to start?

When trying to ingest our test data into MySQL is where we hit our first hurdle.

MySQL can’t read directly from S3 or unzip the data on the fly. Therefore we have to do some manual steps, before we can even start:

Download the files: Depending on if you need these tasks regularly or just one time; this step could be easy or complex and the amount of time needed to implement that logic cannot be estimated in a general blog. Also the download speed depends on multiple factors, so we won’t include it here in our comparison, just mention that it can have an impact.

Decompressing the files: The easiest way to achieve this is by using gzip -d {filename} on the cli.

Decompressing files of that size normally doesn’t take much time, but still, we have to write the decompressed data to the disk. In my tests it took around 7 seconds per file.

Now that we have the files locally and decompressed, we can really start to ingest the data.

We need to read the JSON data, get rid of the unwanted columns, and “only” ingest the 12 million rows of data into MySQL.

Ingesting local files to MySQL

Use scripting language like PHP

A lot of environments which use MySQL as their main DBMS also make use of PHP (remember LAMP stack anyone?).

A simple solution to achieve our goal would be to read the JSON file one line at a time, use PHPs parsing functionality to get the values of the 5 needed columns and insert them directly into MySQL.

Something like this:

$mysqli = new mysqli($mysql_host, $mysql_username, $mysql_password, '', $mysql_port);

$filename = $argv[1];

$handle = @fopen($filename, 'r');

if ($handle)

{

while (($buffer = fgets($handle, 4096)) !== false)

{

$row = json_decode($buffer, true);

$mysqli->query("

INSERT IGNORE INTO test.sensor_data

(station_id, date, tempMax, tempMin, tempAvg)

VALUES

('{$row['station_id']}', '{$row['date']}', " .

($row['tempMax'] ?? 'NULL') . ", " .

($row['tempMin'] ?? 'NULL') . ", " .

($row['tempAvg'] ?? 'NULL') . ")

");

}

fclose($handle);

}

exit(0);

Doing it this way unfortunately has the overhead of executing a single transaction for each row of the dataset, hence only achieving around 60 rows/second, or taking approx. 59 hours for the 12 million rows.

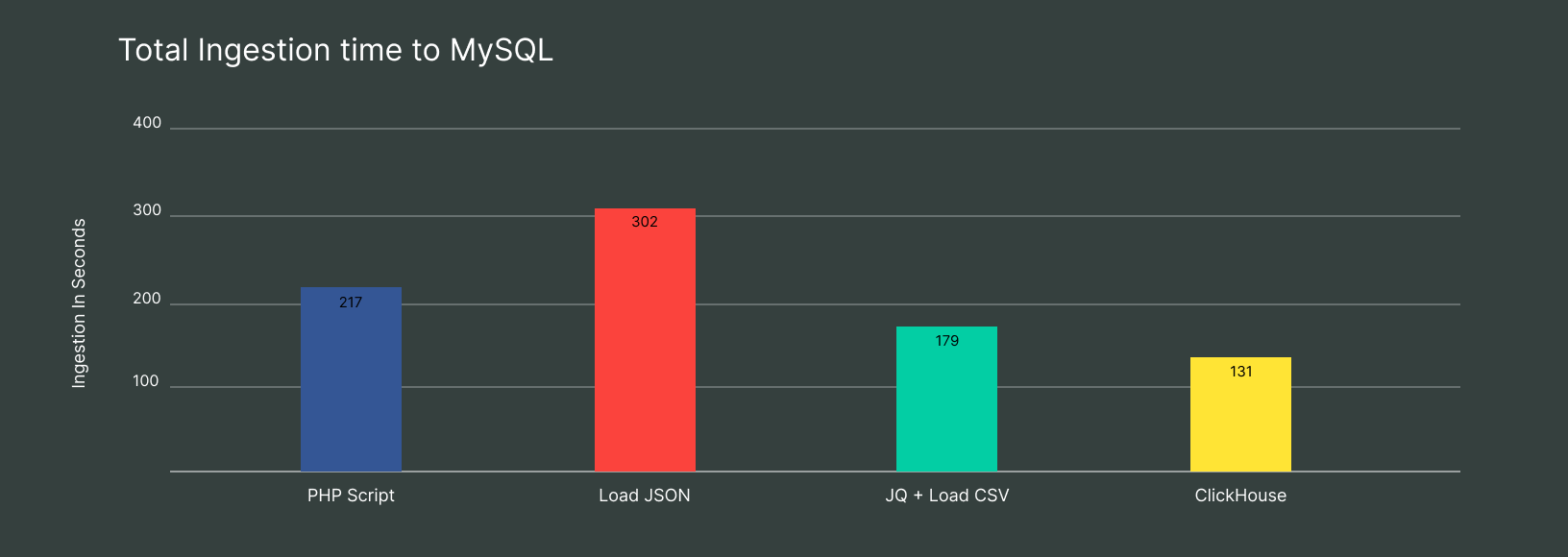

However, it’s easy to optimize this logic by introducing the bunching of rows, combining 10k rows per insert query, speeding up the whole process to take 3 minutes and 30 seconds for the whole data set.

Ingestion speed is okay for the amount of data but it required a lot of manual work and no piece of error handling is written yet.

But there are ways to optimize data ingestion in MySQL.

Use JSON functionality with MySQL

Unfortunately MySQL has no feature for working with JSON files directly, but we can interpret the whole file as a CSV file, having only a single column containing a JSON string.

That way we can load the JSON file via the LOAD DATA command, and then select the needed columns via the built-in JSON functions of MySQL.

LOAD DATA LOCAL INFILE '~/MySQLBlog/sensors.2020.json'

INTO TABLE test.sensor_data

(@json)

SET station_id = JSON_UNQUOTE(JSON_EXTRACT(@json, '$.station_id')),

date = JSON_UNQUOTE(JSON_EXTRACT(@json, '$.date')),

tempMax = nullif(JSON_UNQUOTE(JSON_EXTRACT(@json, '$.tempMax')), 'null'),

tempMin = nullif(JSON_UNQUOTE(JSON_EXTRACT(@json, '$.tempMin')), 'null'),

tempAvg = nullif(JSON_UNQUOTE(JSON_EXTRACT(@json, '$.tempAvg')), 'null')

This approach took 4 minutes and 55 seconds, so even slower than the ‘optimized’ PHP solution, but at least it’s easier to type (At least for a SQL guy like myself).

The main problem with this approach taking so long though is that we still send the data of all the columns to the MySQL server, and then throw all but 5 away within the server, hence having unneeded data sent over the wire to MySQL.

Also loading 10 million rows in a single transaction issues quite a load to the server.

Use Cli and CSV functionality with MySQL

We can avoid using the JSON functionality of MySQL as well as the sending of unwanted data by adding another preparation step before the ingestion to MySQL.

We can use a command line JSON tool like jq to select only the needed columns and create a comma separated file, only containing the 5 needed columns:

jq -r '. | [.station_id, .date, .tempMax, .tempMin, .tempAvg] | @csv' sensors.2020.json > sensors_parsed.2020.csv

Parsing JSON to CSV on cli takes around 60 seconds in that case, generating a CSV file with 376 MB of data.

Now importing the csv file can be done quite easily within the MySQL client again:

LOAD DATA LOCAL INFILE '~/MySQLBlog/sensors_parsed.2020.csv'

INTO TABLE test.sensor_data

FIELDS TERMINATED by ',' OPTIONALLY ENCLOSED BY '"'

(station_id, date, @tempMax, @tempMin, @tempAvg)

SET tempMax = nullif(@tempMax, ''),

tempMin = nullif(@tempMin, ''),

tempAvg = nullif(@tempAvg, '')

This approach takes 1 minute 52 seconds, which is a lot faster than reading directly from JSON to MySQL.

We still, however, have the problem of big transactions. So if we want our MySQL instance to handle other requests without trouble, we’d need to split the csv file into smaller chunks of 10k rows to reduce the load on the server.

Thus we again need to write simple cli logic to split the csv into chunks and then write a loop to go over the 1,000 chunk files, ingesting one after another.

Overall, regarding the 60 sec of cli time and the 1 min 52 sec for inserting the data, the total time to ingest our 12 million JSON rows takes 2 min 52 sec, therefore being 40% faster than reading JSON directly from MySQL and still being 17% faster than the scripted solution.

Let ClickHouse handle all the overhead

Now even though we can reach a somewhat acceptable speed with cli tools and the mysql client, it’s still not a very nice approach.

Also… imagine if you had to do this on a regular basis, as your software imports data from external sources on a nightly basis.

You’d need to add checks for each step to make sure it’s successfully finished and then clean up all the temporary data files afterwards again.

And if you need to integrate data from multiple sources, in multiple formats, it would get very ugly very quickly.

That’s where ClickHouse can help you.

ClickHouse has extremely rich integration capabilities, reading from multiple sources (like s3, rest APIs, local files, external DBMS etc).

So even if you don’t want to use ClickHouse as your daily driver for your analytical queries, you can still make use of its integration features.

I connected to a managed ClickHouse cluster within the DoubleCloud sitting in the same AWS region as my test MySQL instance, and then just executed this query:

SET external_storage_connect_timeout_sec = 300;

SET mysql_max_rows_to_insert = 10000;

INSERT INTO FUNCTION mysql('{mysqlurl}',

test, sensor_data,

'{username}', '{password}')

(station_id, date, tempMax, tempMin, tempAvg)

SELECT station_id, date, tempMax, tempMin, tempAvg

FROM s3('https://{s3path}/sensors.2020.json.gz', '{aws_access_id}', '{aws_secret_access_id}', JSONEachRow,

$$station_id String, date Date, tempMax Nullable(Int32), tempMin Nullable(Int32), tempAvg Nullable(Int32)$$)

- The part between the $$ signs can be omitted in most use cases, as ClickHouse can do schema inference, but in my test data this was not possible

The whole process of ingesting the data to MySQL now took 2 minutes and 11 sec, and as the inserts are automatically bunched to 10k, the cpu of MySQL barely noticed anything.

But not only is it 30% faster on the ingestion time, it also did the downloading and decompression step.

And even better, it saved me a lot of time implementing the whole logic!

ClickHouse does everything for me, I just had to execute a single query and wait for the result, making it a lot easier on the implementation side, to handle possible errors, cleanups (what cleanups?) etc.

And as a bonus, I also have the full tool set of SQL at my hands directly on insert already.

I could add WHERE conditions to filter out rows before inserting, can manipulate or parse columns, or I can even do aggregations before inserting the data to MySQL!

If anyone knows a faster way of getting data into MySQL, please contact me ;).

Conclusion

In this article, we’ll talk about:

As we can see, utilizing ClickHouse for ingesting data to MySQL has been the fastest way to do so.

ClickHouse can not only spare you time if you use it as DBMS for your analytical queries but it can also spare you a lot of implementation time.

With ClickHouse it’s extremely easy to convert data into different formats, reading from multiple sources (s3 was just an example here, you can utilize RestAPIs, other DBMS, local files, Kafka, etc.), and doing data manipulation on the fly.

So especially if you have multiple sources of data, it makes the whole process of collecting the data, downloading, converting etc. a lot easier.

You just need to rewrite a single query for echo of your ingestion process, instead of rewriting a whole bunch of commands for downloading, preparing inserting etc.

In the end you can still utilize your MySQL instances for all your preferred workloads, and still let ClickHouse help you be faster overall!

And this is the reason why as a tech guy, I fell in love with ClickHouse.

It’s not because it’s fast as hell in analytical workloads (although it is). It’s because it’s so extremely versatile to use, from prototypes to production workloads or just for having fun with.

ClickHouse is a trademark of ClickHouse, Inc. https://clickhouse.com

Start your trial today