Machine learning with ClickHouse and DoubleCloud

Written by: Adam Jennings, DoubleCloud Solution Architect

ClickHouse® is a versatile and fast open-source column-oriented database management system with a large variety of built-in, non standard SQL functions that can do some pretty amazing things.

It even has a few built-in machine learning functions for stochasticLinearRegression and stochasticLogisticRegression.

In addition to that, self-hosted ClickHouse can even evaluate against external CatBoost models by using the built in catboostEvaluate function when setting within the config.xml and pointing it to your CatBoost library.

However, if you want a more versatile set of tooling, with a syntax that will be familiar to ClickHouse and MySQL users that’s also portable across your data stores, look no further than MindsDB.

In this article, we’ll talk about:

DoubleCloud Managed Service for ClickHouse®

An open-source, managed ClickHouse DBMS service for sub-second analytics.

MindsDB is an open-source framework that aims to democratize Machine Learning by providing a familiar SQL interface for in-database SQL-based machine learning.

Think of BigQuery ML or Snowflake’s recent acquisitions into the Machine Learning space, but applied to many of the open-source machine learning frameworks including MindsDB’s own Lightwood, TensorFlow, PyTorch, HuggingFace, OpenAI, and more.

Why MindsDB

Not only does it democratize machine learning, it also allows for more of the ML-Ops lifecycle to remain within the database.

According to the documentation, “[w]ith MindsDB, you can build, train, optimize, and deploy your ML models without the need for other platforms. And to get the forecasts, simply query your data and ML models.”

Because it’s open-source, and based on MySQL syntax, it’s portable between environments and deoesn’t need to learn a specific data warehouse Machine Learning library.

How to implement MindsDB with DoubleCloud

You can either leverage a MindsDB Cloud account or you can self-host locally on your cloud VMs or Docker containers.

In either case, you’ll connect to the MySQL-based MindsDB interface and then connect to your datasource… in our case ClickHouse.

I’ve already imported the Melbourne Kaggle Housing Snapshot dataset:

1. Create a log table

sql

CREATE OR REPLACE TABLE melbourne.housing

ENGINE = MergeTree()

PARTITION BY toYYYYMM(Date)

ORDER BY (Date)

AS SELECT

SuburbAS Suburb,

Address AS Address,

toUInt16(Rooms)AS Rooms,

CAST(assumeNotNull(Type)

AS Enum8('u' = 1, 'h' = 2, 't' = 3))

AS Type,

toUInt32(Price)AS Price,

CAST(assumeNotNull(Method)

AS Enum8('S' = 1, 'VB' = 2, 'PI' = 3, 'SP' = 4, 'SA' = 5))

AS Method,

SellerGAS SellerG,

ifNull(toDate(parseDateTimeBestEffort(Date)),toDateTime(0))

AS Date,

toInt32(Distance)AS Distance,

toUInt16(Postcode)AS Postcode,

Bedroom2AS Bedroom2,

BathroomAS Bathroom,

CarAS Car,

LandsizeAS Landsize,

BuildingAreaAS BuildingArea,

toUInt16(YearBuilt)AS YearBuilt,

CouncilAreaAS CouncilArea ,

LattitudeAS Latitude,

LongtitudeAS Longitude,

geoToS2(Longitude, Latitude) AS s2Index,

RegionnameAS Regionname,

toUInt16(Propertycount)AS Propertycount

FROM melbourne.housing_log

2. Import into log table from local csv with the command:

bash

clickhouse-client --host rw.qwertyuiop.at.double.cloud --port 9440 --secure --user admin --password LongPassWordStringGoesHere --query="INSERT INTO melbourne.housing_log FORMAT CSVWithNames" < melb_data.csv

3. Convert to MergeTree and optimize the table a little and clean up some names:

sql

CREATE OR REPLACE TABLE melbourne.housing

ENGINE = MergeTree()

PARTITION BY toYYYYMM(Date)

ORDER BY (Date)

AS SELECT

SuburbAS Suburb,

Address AS Address,

toUInt16(Rooms)AS Rooms,

CAST(assumeNotNull(Type)

AS Enum8('u' = 1, 'h' = 2, 't' = 3))

AS Type,

toUInt32(Price)AS Price,

CAST(assumeNotNull(Method)

AS Enum8('S' = 1, 'VB' = 2, 'PI' = 3, 'SP' = 4, 'SA' = 5))

AS Method,

SellerGAS SellerG,

ifNull(toDate(parseDateTimeBestEffort(Date)),toDateTime(0)) AS Date ,toInt32(Distance)AS Distance,

toUInt16(Postcode)AS Postcode,

Bedroom2AS Bedroom2,

BathroomAS Bathroom,

CarAS Car,

LandsizeAS Landsize,

BuildingAreaAS BuildingArea,

toUInt16(YearBuilt)AS YearBuilt,CouncilAreaAS CouncilArea ,

LattitudeAS Latitude,

LongtitudeAS Longitude,

geoToS2(Longitude, Latitude) AS s2Index,

RegionnameAS Regionname,

toUInt16(Propertycount)AS Propertycount

FROM melbourne.housing_log

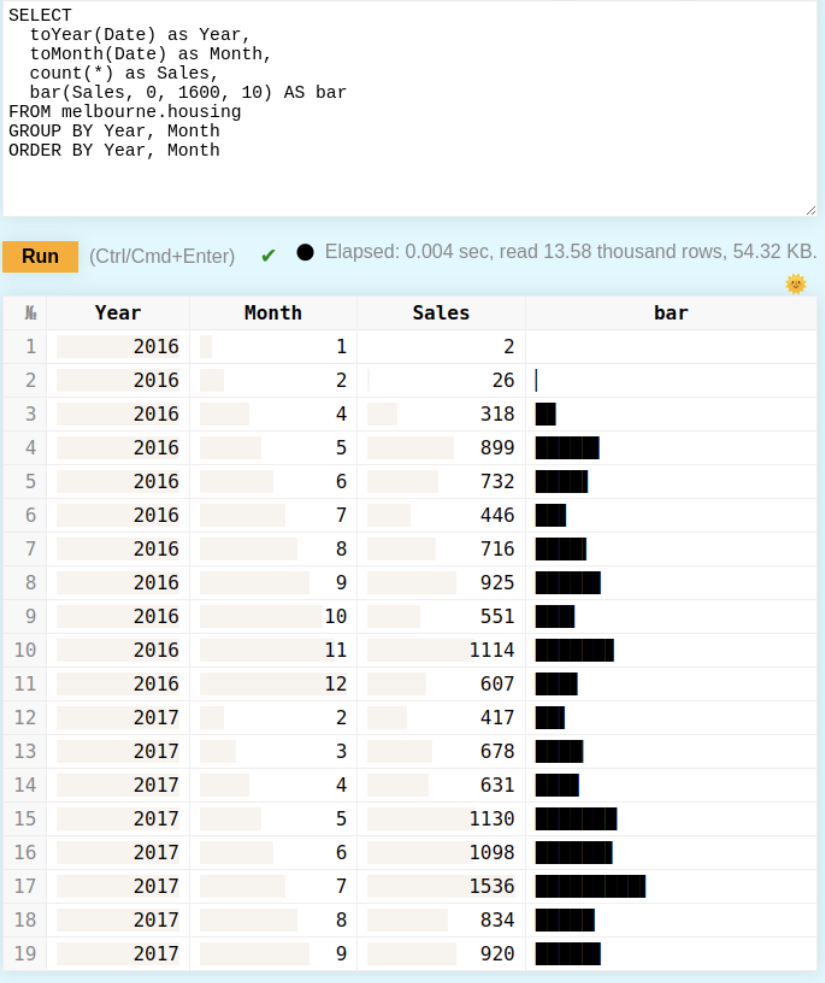

Let’s query the data from the DoubleCloud UI, by clicking on open the SQL console from the cluster’s main tab.

See the shape of the data, in aales per month:

sql

SELECT

toYear(Date) as Year,

toMonth(Date) as Month,

count(*) as Sales,

bar(Sales, 0, 1600, 10) AS bar

FROM melbourne.housing

GROUP BY Year, Month

ORDER BY Year, Month

I first need to establish a connection to my ClickHouse.

In DoubleCloud’s UI, I need to expose the cluster to the MindsDB source IP.

If running locally, this will be your WAN IP address but if running on MindsDB cloud, you need to contact MindsDB to get their list of IP addresses (Hint, they have 3 and you might find it in their Slack or if you reach out to me).

Then I put in my connection string within the MindsDB console:

sql

CREATE DATABASE doublecloud --- display name for database.

WITH ENGINE = 'clickhouse', --- name of the mindsdb handler

PARAMETERS = {

"host": "rw.qwertyuiop.at.double.cloud",--- hostname or IP address

"port": 8443, --- port used to make TCP/IP connection

"database": "melbourne", --- database name

"user": "admin", --- database user

"password": "SuperSecretLongPassWordStringHere",--- password

"protocol": "https" --- optional, http or https (defaults to native)

};

In MindsDB, I can run a similar query, paying attention to my database name from above (doublecloud) and wrapping the query in a SELECT statement to pass case-sensitive functions to my ClickHouse cluster:

sql

SELECT * FROM doublecloud ( SELECT

toYear(Date) as Year,

toMonth(Date) as Month,

count(*) as Sales

FROM melbourne.housing

GROUP BY Year, Month

ORDER BY Year, Month )

To train the model

sql

CREATE MODEL mindsdb.sale_price_model

FROM doublecloud

(SELECT * FROM housing)

PREDICT Price;

To view status and models:

sql

SELECT name, accuracy, predict, select_data_query

FROM mindsdb.models

To check if there is a difference when storing signed values, I also added the same columns again but prefixed with an i.

And this is the result:

|

name |

accuracy |

predict |

select_data_query |

|

sale_price_model |

0.817 |

Price |

SELECT * FROM housing |

|

sale_price_model_small |

0.799 |

Price |

SELECT Suburb, Rooms, Dist… |

|

sale_price_model_xsmall |

0.453 |

Price |

SELECT Type, Rooms, Price,… |

I created three models.

I tried to balance the number of features with accuracy. The first uses 21 feature columns and the target column and receives an 81.7% accuracy.

The second model (small) barely loses any accuracy at 79.9%, but only uses 10 features and the target column.

The last model, (xsmall), uses 6 feature columns and the target column.

We won’t be using that one.

To test my model, I’ll choose a sale date and a few of the feature columns and query the AI table for a predicted answer.

I’ll select both the target column and the _explain column for further details about the model’s performance.

sql

SELECT Price, Price_explain

FROM mindsdb.sale_price_model_small

WHERE Rooms=2

AND Bathoom=2

AND YearBuilt=2012

As Text:

“predicted_value”: 724781, “confidence”: 0.83, “anomaly”: null, “truth”: null, “confidence_lower_bound”: 415688, “confidence_upper_bound”: 1033873

This results has an accuracy of 83% and includes our upper and lower confidence bounds

If I want to batch predict, by joining a model with a new source table.

sql

SELECT h.Price AS real_price,

m.Price AS predicted_price,

h.Rooms, h.Bathroom, h.YearBuilt, h.Car, h.Landsize

FROM doublecloud.housing AS h

JOIN mindsdb.sale_price_model_small AS m

LIMIT 100;

|

real_price |

predicted_price |

Rooms |

Bathroom |

YearBuilt |

Car |

Landsize |

|

813000 |

612953 |

2 |

1 |

[NULL] |

2 |

108 |

|

1205000 |

1309204 |

3 |

1 |

[NULL] |

2 |

490 |

|

1035000 |

1149307 |

2 |

1 |

1900 |

0 |

156 |

|

720000 |

675667 |

2 |

1 |

2013 |

2 |

292 |

|

1222500 |

1074140 |

3 |

2 |

2000 |

2 |

299 |

|

1151000 |

1288506 |

3 |

1 |

[NULL] |

1 |

695 |

Start your trial today