Confluent Kafka vs. Apache Kafka: An expert comparison

Last updated on Sep 4th, 2024.

In 2008, Jay Kreps and his colleagues at LinkedIn faced a monumental challenge. As the technical lead for the platform’s search systems, recommendation engine, and social graph, Kreps needed a way to efficiently handle the company’s real-time data feeds. This need led to the creation of Apache Kafka, open-source software designed to process massive amounts of real-time data.

Kafka quickly proved its worth at LinkedIn, serving as a “central nervous system” for the platform’s complex systems and applications. It allowed different parts of the system to tap into continuous data streams, responding in real time to user activities like profile updates, new connections, and posts to the newsfeed.

Kafka’s potential extended far beyond LinkedIn’s walls. Recognizing this, Kreps and co-creators Neha Narkhede and Jun Rao left LinkedIn in 2014 to found Confluent. Their vision was to build a fully managed Kafka service and enterprise stream processing platform that could serve businesses across various industries.

Today, Apache Kafka is used by over 100,000 organizations globally, while Confluent, born from the same minds, offers an enhanced, enterprise-ready version of the technology. But what exactly sets Confluent Kafka apart from its open-source counterpart?

Understanding the basics of Confluent Kafka vs. Apache Kafka

Before we discuss the specifics of Apache Kafka vs. Confluent Kafka, let’s examine what each platform provides.

What is Apache Kafka?

Apache Kafka is a real-time event-streaming platform. Let’s say you run an e-commerce website. Every time a customer views a product, adds it to their cart, or makes a purchase, that’s an event. Kafka lets you capture, store, and process these events in real time.

A properly sized Apache Kafka cluster can handle millions of such events per second at low latency. This makes it ideal for high-capacity, real-time data pipelines and streaming applications. Its key features include:

- Scalability: Kafka can easily scale horizontally to handle massive volumes of data across distributed systems.

- Durability: Data persists on disk and is replicated within the cluster for fault tolerance.

- High throughput: Helped by batching and sequential disk I/O, a properly sized Kafka cluster can handle millions of messages per second.

- Fault tolerance: Because partitions are replicated across brokers, Kafka can survive machine failures without losing acknowledged data, as long as enough in-sync replicas remain.

- Exactly-once semantics: With idempotent producers and transactions enabled, Kafka can ensure that each message is processed exactly once, which is crucial for many business applications.

- Stream processing: With the Kafka Streams API, you can process your data streams in real time.
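A minimal sketch of the idempotence half of exactly-once delivery, assuming a toy in-memory model: the broker remembers the last sequence number it accepted from each producer ID and drops retries it has already written. The `PartitionLog` class is hypothetical, not Kafka's broker code, and full exactly-once processing also relies on transactions, which this sketch does not model.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy model of one partition that deduplicates idempotent producer retries."""

    records: list = field(default_factory=list)
    # Highest sequence number appended so far, per producer ID (hypothetical bookkeeping).
    last_seq: dict = field(default_factory=dict)

    def append(self, producer_id: int, seq: int, value: str) -> bool:
        """Append a record unless it is a retry of one already written.

        Returns True if the record was appended, False if it was dropped as a duplicate.
        """
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            # A retry of something already stored (e.g. the ack was lost): ignore it.
            return False
        if seq != last + 1:
            # Kafka rejects gaps in the sequence with an error; this sketch does the same.
            raise ValueError(f"out-of-order sequence {seq}, expected {last + 1}")
        self.records.append(value)
        self.last_seq[producer_id] = seq
        return True


log = PartitionLog()
log.append(producer_id=7, seq=0, value="order-created")
log.append(producer_id=7, seq=1, value="order-paid")
log.append(producer_id=7, seq=1, value="order-paid")  # network retry, dropped
print(log.records)  # ['order-created', 'order-paid']
```

In the Java client, this producer-side behavior is controlled by the `enable.idempotence` setting.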

These capabilities mean Apache Kafka is used in a wide array of scenarios: tracking product activity, monitoring IoT sensor data, real-time analytics, and event-sourcing architectures. Because it scales so well, it is also a go-to solution for products that see surges in demand, such as social media or e-commerce platforms during major events.

This is all possible because of the Apache Kafka architecture.

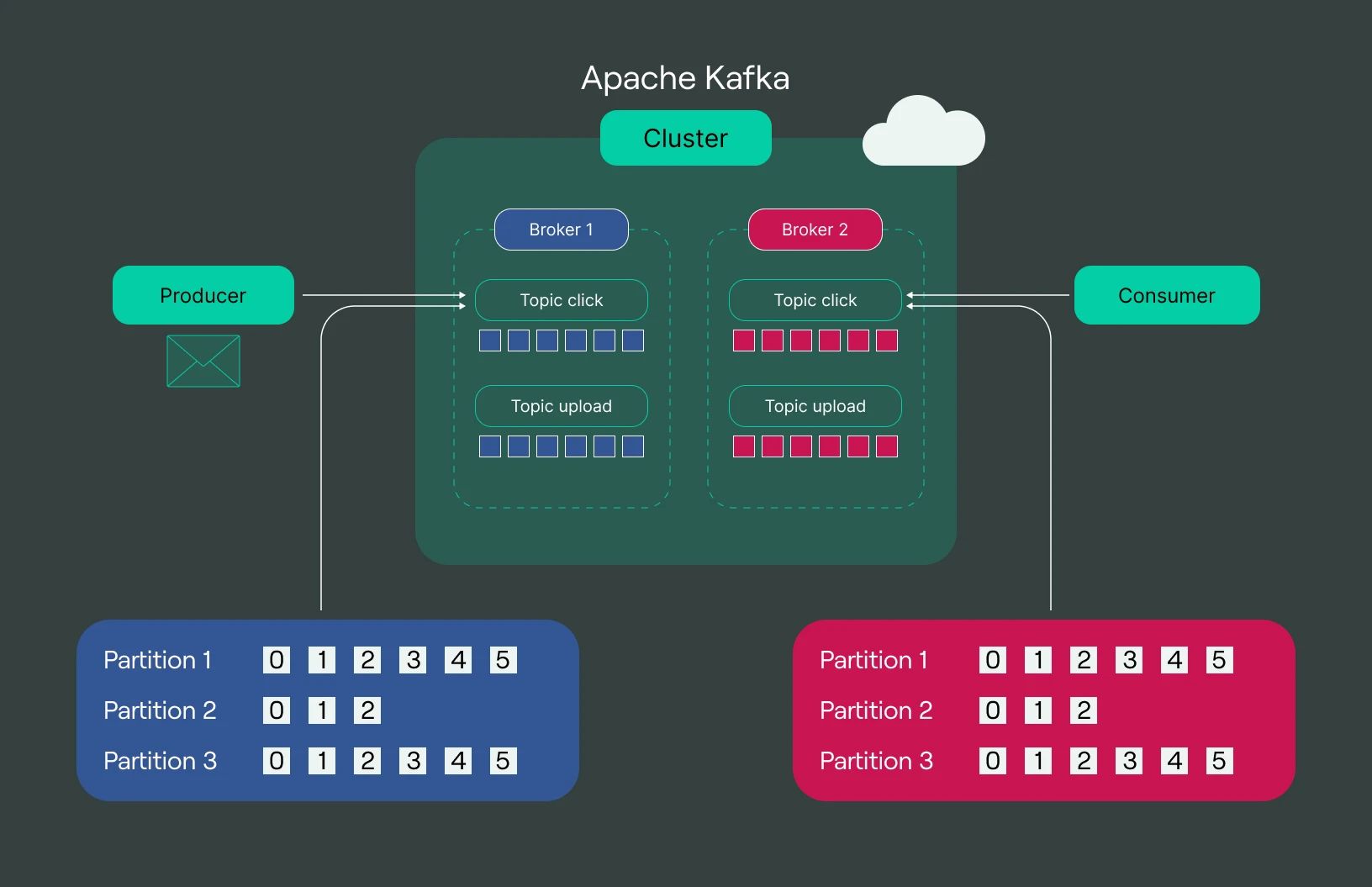

1. Brokers: These are the servers that form the backbone of a Kafka cluster. They store and manage topics and handle read and write requests from clients. Brokers can be easily scaled horizontally to increase capacity and throughput.

2. Topics: These are categories or feed names to which records are published. Topics in Kafka are always multi-subscriber; a topic can have zero, one, or many consumers that subscribe to the data written to it.

3. Partitions: Each topic is divided into partitions, which are the units of parallelism in Kafka. This allows data within a topic to be distributed across multiple brokers, enabling parallel processing and high scalability.

4. Producers: These are client applications that publish (write) events to Kafka topics. Producers can choose which partition within a topic a record goes to, or let the partitioner decide, which for records with a key means hashing the key.

5. Consumers: These are client applications that subscribe to (read and process) events from Kafka topics. Consumers are organized into consumer groups for parallel processing.

6. ZooKeeper: In older deployments, ZooKeeper handles distributed coordination within the Kafka cluster, managing controller election and maintaining configuration information. (Newer Kafka versions can run without ZooKeeper in KRaft mode, which has been production-ready since Kafka 3.3; ZooKeeper mode is deprecated.)
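To make the producer/partition relationship concrete: when a record has a key, Kafka's default partitioner hashes the key with murmur2 and takes the result modulo the number of partitions, so every event with the same key lands in the same partition and keeps its order. The sketch below mimics that behavior but substitutes Python's `zlib.crc32` for murmur2, so its partition numbers will not match a real cluster; the function name and event data are hypothetical.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition, the way a hash partitioner does.

    Kafka's default partitioner uses murmur2; crc32 is a stand-in here,
    so the actual numbers differ from a real Kafka cluster.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Events from the e-commerce example, keyed by customer ID (hypothetical data).
events = [
    ("customer-42", "viewed product"),
    ("customer-7", "added to cart"),
    ("customer-42", "added to cart"),
    ("customer-42", "purchased"),
]

partitions = {p: [] for p in range(3)}
for key, action in events:
    partitions[choose_partition(key, 3)].append((key, action))

# All of customer-42's events share one partition, so their relative order is preserved.
for p, records in partitions.items():
    print(p, records)
```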

This architecture allows Kafka to achieve its high throughput, scalability, and fault tolerance. For example, if a broker fails, replicas on other brokers take over as leaders for its partitions. You can add more brokers to the cluster if you need more capacity.
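Failover works because each partition is replicated across several brokers: one replica is the leader and the others follow it. If the leader's broker fails, Kafka promotes another replica from the in-sync replica set (ISR). The sketch below is a simplified model of that election rule with unclean leader election disabled, which is Kafka's default; the `Partition` structure and `elect_leader` function are illustrative, not Kafka's controller code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Partition:
    replicas: list  # broker IDs holding a copy, in preferred order
    isr: list       # broker IDs whose copies are fully caught up
    leader: int


def elect_leader(partition: Partition, live_brokers: set) -> Optional[int]:
    """Pick a new leader: the first replica that is both alive and in sync."""
    for broker in partition.replicas:
        if broker in live_brokers and broker in partition.isr:
            return broker
    # No in-sync replica is alive: the partition goes offline rather than risk losing data.
    return None


p = Partition(replicas=[1, 2, 3], isr=[1, 2], leader=1)
live = {2, 3}  # broker 1 has crashed
p.leader = elect_leader(p, live)
print(p.leader)  # 2: broker 3 is alive but lagging, so it is skipped
```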

This all sounds ideal. But Apache Kafka is open-source software that you run yourself: users have to set up and manage their own Kafka deployments, and at scale this can be extremely challenging, even for large, experienced engineering teams.

Enter Confluent Kafka.

What is Confluent Kafka?

Confluent Kafka came from the same team that created Apache Kafka, for a simple reason: their invention had taken off, and they saw how difficult it was for growing teams to manage Apache Kafka deployments.

Confluent Kafka is a commercial offering built on top of Apache Kafka that aims to address these challenges. It comes in two main forms: Confluent Platform, a self-managed distribution, and Confluent Cloud, a fully managed, cloud-native Kafka service. The managed option lets organizations offload the operational burden of running Kafka, freeing up engineering resources to focus on building applications rather than managing infrastructure.

Confluent also adds several components of its own that extend Kafka’s functionality. The first is Schema Registry, which stores a versioned history of every schema and checks new versions against a compatibility policy, keeping data compatible across producers and consumers. Schema Registry helps prevent data corruption and enables smooth schema evolution, which is crucial in large-scale, evolving systems. It also reduces the chances of downstream failures caused by incompatible data formats, improving overall system reliability.
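To illustrate what "compatibility" means here: under a backward-compatible policy, consumers using a new schema must still be able to read data written with the old one, so any field the new schema adds needs a default value, while removed fields are simply ignored. The sketch below checks that rule for a toy schema format (a dict of field name to type and a has-default flag). The format and function are hypothetical; this is not Schema Registry's API or its full Avro compatibility logic.

```python
from typing import Dict, List, Tuple

# Toy schema: field name -> (type name, has_default). Hypothetical format.
Schema = Dict[str, Tuple[str, bool]]


def backward_compatible(old: Schema, new: Schema) -> List[str]:
    """Return a list of problems; an empty list means `new` can read data written with `old`."""
    problems = []
    for name, (new_type, has_default) in new.items():
        if name not in old:
            # Old records lack this field, so the reader needs a default to fill in.
            if not has_default:
                problems.append(f"new field '{name}' has no default")
        elif old[name][0] != new_type:
            # Real Avro allows some promotions (e.g. int -> long); this sketch does not.
            problems.append(f"field '{name}' changed type {old[name][0]} -> {new_type}")
    # Fields removed in `new` are fine: the reader just ignores them in old data.
    return problems


v1 = {"order_id": ("string", False), "amount": ("double", False)}
v2 = {**v1, "currency": ("string", True)}  # added with a default: OK
v3 = {**v1, "coupon": ("string", False)}   # added without a default: rejected

print(backward_compatible(v1, v2))  # []
print(backward_compatible(v1, v3))  # ["new field 'coupon' has no default"]
```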

Another is ksqlDB. This streaming SQL engine enables real-time data processing and analysis using SQL-like syntax, making it easier for non-developers to work with streaming data. With ksqlDB, organizations can build powerful stream processing applications without needing extensive programming knowledge. This democratizes access to real-time data and allows faster development of streaming analytics solutions, ultimately accelerating business time-to-insight.
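As an illustration of the kind of query ksqlDB handles, counting product views per one-minute window might be written roughly as `SELECT product_id, COUNT(*) FROM pageviews WINDOW TUMBLING (SIZE 1 MINUTE) GROUP BY product_id EMIT CHANGES;` (the `pageviews` stream and its columns are hypothetical). The Python sketch below computes the same tumbling-window counts over an in-memory list to show what that SQL asks for. It is not how ksqlDB is implemented: ksqlDB updates results continuously as events arrive and handles late events, while this sketch processes a finished batch.

```python
from collections import Counter

WINDOW_MS = 60_000  # one-minute tumbling windows


def tumbling_counts(events):
    """Count events per (window start, product_id), like a tumbling-window GROUP BY.

    `events` is an iterable of (timestamp_ms, product_id) pairs.
    """
    counts = Counter()
    for ts, product_id in events:
        window_start = ts - ts % WINDOW_MS  # align each event to the start of its window
        counts[(window_start, product_id)] += 1
    return dict(counts)


views = [
    (1_000, "shoes"),
    (15_000, "shoes"),
    (59_999, "hat"),
    (60_000, "shoes"),  # first millisecond of the next window
]
print(tumbling_counts(views))
# {(0, 'shoes'): 2, (0, 'hat'): 1, (60000, 'shoes'): 1}
```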

Confluent Kafka doesn’t replace Apache Kafka; it builds upon it. Organizations using Confluent Kafka still benefit from all the features and capabilities of Apache Kafka, but with additional tools, services, and support that can accelerate development, simplify operations, and reduce the total cost of ownership for large-scale, mission-critical event streaming platforms.

The key comparisons between Confluent Kafka vs. Apache Kafka

So now we know the basic difference between Confluent Kafka and Apache Kafka. But how do they compare in detail?

Use cases

Apache Kafka, as the foundational technology, is incredibly versatile. It’s the Swiss Army knife of event streaming, capable of handling a wide array of scenarios:

1. Real-time data pipelines: Apache Kafka is excellent for building real-time data pipelines between systems or applications. It’s often used to reliably get data from system A to system B with low latency.

2. Microservices communication: Kafka serves as a robust backbone for microservices architectures, allowing services to communicate asynchronously and reliably.

3. Log aggregation: Many organizations use Kafka to collect logs from various services and systems, centralizing them for easier processing and analysis.

4. Stream processing: The Kafka Streams API allows developers to build real-time applications that process and analyze data streams.

5. Event sourcing: Kafka’s ability to store and replay events makes it suitable for implementing event-sourcing patterns in applications.

6. Metrics and monitoring: Kafka can collect and process metrics and monitoring data from distributed systems, enabling real-time system health checks and alerts.
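Event sourcing treats the log as the source of truth: current state is rebuilt by replaying events in order, just as a consumer reading a topic from the earliest offset would. The sketch below replays a hypothetical list of shopping-cart events to reconstruct the cart; the event names and shapes are assumptions for illustration, and a real system would read them from a Kafka topic.

```python
from collections import Counter


def replay_cart(events):
    """Rebuild a cart (product -> quantity) by applying events in log order."""
    cart = Counter()
    for event in events:
        kind, product = event["type"], event["product"]
        if kind == "item_added":
            cart[product] += 1
        elif kind == "item_removed" and cart[product] > 0:
            cart[product] -= 1
            if cart[product] == 0:
                del cart[product]
        # Unknown event types are skipped so older consumers tolerate newer events.
    return dict(cart)


cart_events = [
    {"type": "item_added", "product": "mug"},
    {"type": "item_added", "product": "mug"},
    {"type": "item_added", "product": "tea"},
    {"type": "item_removed", "product": "mug"},
]
print(replay_cart(cart_events))      # {'mug': 1, 'tea': 1}
print(replay_cart(cart_events[:2]))  # state as of offset 1: {'mug': 2}
```

Replaying a prefix of the log, as in the last line, is what makes it possible to inspect the state at any earlier point in time.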

While Confluent Kafka can handle all the use cases of Apache Kafka, it excels in more complex, enterprise-level scenarios:

1. Multi-region deployments: Confluent’s tools make it easier to set up and manage Kafka clusters across multiple data centers or cloud regions, ideal for global organizations.

2. Data governance and compliance: With features like Schema Registry and enhanced security options, Confluent Kafka is better suited to industries with strict data governance and compliance requirements.

3. Self-service data platforms: Confluent’s GUI tools and ksqlDB make it easier for non-technical users to work with streaming data, enabling organizations to build self-service data platforms.

4. Large-scale data lake integration: Confluent’s pre-built connectors and tools simplify the process of building real-time data lakes, making it easier to integrate streaming data with batch processing systems.

So while Apache Kafka is a powerful tool for building event streaming applications, Confluent Kafka is designed to take it to enterprise scale.

Performance

When it comes to performance, Apache Kafka and Confluent Kafka are more alike than different.

This isn’t surprising, given that they share the same core architecture. Both can handle millions of messages per second with low latency, which is why Kafka has become the go-to solution for high-throughput, real-time data streaming.

However, performance isn’t just about the underlying code. In real-world scenarios, it heavily depends on the infrastructure and deployment. This is where the differences between Apache Kafka and Confluent Kafka start to emerge.

With Apache Kafka, you’re in the driver’s seat. If you have a team with deep Kafka expertise and the resources to optimize your infrastructure, you can potentially achieve higher performance than a standard Confluent Kafka setup. You have complete control over hardware selection, network configuration, and fine-tuning parameters, which can lead to performance gains in specific use cases.

Confluent, on the other hand, takes a different approach. Instead of requiring you to be a Kafka expert, it provides a managed service that’s already optimized for performance. Its team of Kafka specialists handles the infrastructure and deployment details, ensuring that your Kafka clusters run efficiently at scale.

This doesn’t necessarily mean that Confluent Kafka will always outperform a well-tuned Apache Kafka deployment. However, it does mean that you’re more likely to achieve consistent, reliable performance without needing to become a Kafka guru yourself.

Scalability

Both Apache Kafka and Confluent Kafka are built for scale. The ability to handle massive amounts of data made Kafka famous in the first place. However, when scaling your system in the real world, some key differences exist.

Apache Kafka’s scalability is legendary. Need to handle more data? Just add more brokers to your cluster. Want to process messages faster? Increase the number of partitions in your topics. It’s a bit like playing with Lego blocks: you can keep adding pieces to build a bigger, more powerful system.
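One caveat behind "just add partitions": for keyed topics, a hash partitioner computes the key's hash modulo the partition count, so changing the count sends many existing keys to different partitions, and per-key ordering is only guaranteed within a single partition. Kafka does not move existing records when partitions are added; only new records are routed using the new count. The sketch below measures how many of 1,000 hypothetical keys would change partition when a topic grows from 6 to 8 partitions, using `zlib.crc32` as a stand-in for Kafka's murmur2 hash.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    # crc32 stands in for Kafka's murmur2 hash; the modulo step is the same idea.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def moved_fraction(keys, before: int, after: int) -> float:
    """Fraction of keys whose partition changes when the partition count changes."""
    moved = sum(partition_for(k, before) != partition_for(k, after) for k in keys)
    return moved / len(keys)


keys = [f"customer-{i}" for i in range(1000)]
print(f"{moved_fraction(keys, 6, 8):.0%} of keys move when going from 6 to 8 partitions")
```

This is why teams often provision more partitions than they initially need for keyed topics, rather than adding them later.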

But here’s the catch: while Apache Kafka can scale impressively, managing that scale yourself can be a bit like juggling while riding a unicycle. It’s possible, but it takes a lot of skill and practice. You need to think about things like:

- How do you balance data across your brokers as you add more?

- How do you handle network partitions in a geographically distributed cluster?

- How do you manage consumer group rebalancing when you add new consumers?

These problems aren’t insurmountable, but they require Kafka expertise and careful planning.
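To see what consumer group rebalancing involves: when a consumer joins or leaves, the group's partitions are redistributed across the current members. The sketch below follows the idea behind Kafka's range assignor for a single topic (sort the consumers, give each a contiguous block, and let the first few take one extra partition when the division is uneven). It is a simplified illustration, and the consumer names are hypothetical.

```python
def range_assign(partitions: int, consumers: list) -> dict:
    """Assign partitions 0..partitions-1 to consumers in contiguous ranges."""
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    members = sorted(consumers)
    per_consumer, extra = divmod(partitions, len(members))
    assignment, start = {}, 0
    for i, member in enumerate(members):
        # The first `extra` consumers each take one additional partition.
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment


print(range_assign(6, ["c1", "c2"]))
# {'c1': [0, 1, 2], 'c2': [3, 4, 5]}
print(range_assign(6, ["c1", "c2", "c3", "c4"]))  # rebalance after two consumers join
# {'c1': [0, 1], 'c2': [2, 3], 'c3': [4], 'c4': [5]}
```

Note that a consumer beyond the partition count receives nothing and sits idle, which is one reason partition counts cap a group's parallelism.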

With Confluent Kafka, the managed service handles many of the complexities of scaling for you. Want to scale up? Confluent can provision more resources. Need to scale globally? It offers tools to help manage multi-region clusters.

Confluent also provides features that can make scaling easier:

- Self-balancing clusters automatically redistribute data as you add or remove brokers.

- Confluent Replicator simplifies setting up and managing multi-datacenter deployments.

- Confluent Control Center gives you visibility into your cluster’s performance as you scale.
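The core idea behind redistributing data as brokers are added can be shown with a greedy loop: repeatedly move a partition from the most-loaded broker to the least-loaded one until their counts differ by at most one. This is a hypothetical simplification that balances only partition counts (one replica per partition, for brevity); it is not the algorithm used by Confluent's self-balancing clusters, and real balancers weigh more than replica counts.

```python
def rebalance(assignment: dict) -> list:
    """Greedily even out partition counts across brokers.

    `assignment` maps broker ID -> list of partition names and is modified in place.
    Returns the moves made, as (partition, from_broker, to_broker) tuples.
    """
    moves = []
    while True:
        busiest = max(assignment, key=lambda b: len(assignment[b]))
        idlest = min(assignment, key=lambda b: len(assignment[b]))
        if len(assignment[busiest]) - len(assignment[idlest]) <= 1:
            return moves
        # Move a partition the target broker does not already hold.
        for partition in assignment[busiest]:
            if partition not in assignment[idlest]:
                assignment[busiest].remove(partition)
                assignment[idlest].append(partition)
                moves.append((partition, busiest, idlest))
                break  # stop iterating the list we just modified
        else:
            return moves  # nothing movable; stop rather than loop forever


# Three brokers hold nine partitions; broker 4 has just been added and is empty.
cluster = {
    1: ["orders-0", "orders-1", "orders-2"],
    2: ["orders-3", "orders-4", "orders-5"],
    3: ["orders-6", "orders-7", "orders-8"],
    4: [],
}
for move in rebalance(cluster):
    print(move)
print({broker: len(parts) for broker, parts in cluster.items()})
```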

In the end, both Apache Kafka and Confluent Kafka can scale to meet enterprise needs. The question is: how much of the scaling complexity do you want to manage yourself?

Ease of use

Apache Kafka is powerful but can be complex. It requires a deep understanding of its architecture and best practices. You’ll need to manually configure brokers, manage ZooKeeper (or, in newer versions, KRaft controllers), and handle upgrades yourself.

Confluent Kafka, on the other hand, aims to simplify things. Its managed service takes care of the nitty-gritty details, and with user-friendly interfaces and tools like ksqlDB, even non-experts can work with Kafka. This makes it a good fit for larger teams, where not everyone will be an event-streaming expert. Reviews of Confluent Kafka on G2 often cite ease of use as a key factor. There are two main reasons for this:

1. Cloud-native design: Confluent Cloud is built for cloud environments, offering elastic scalability and integration with cloud services. This design lets users rely on familiar cloud concepts and tools, reducing the learning curve and simplifying deployment and management.

2. Significant support: Confluent provides extensive documentation, tutorials, and expert support. Its team, which includes Kafka’s original creators and other specialists, is available to help with complex issues, so users can quickly overcome challenges and optimize their Kafka implementations.

Integration capabilities

Both platforms shine when it comes to integration. Apache Kafka has a vast ecosystem of open-source connectors, allowing you to integrate with numerous systems. If a connector doesn’t exist, you can always build your own.

Confluent takes this a step further. They offer a curated set of pre-built, fully managed connectors. This means less time spent on integration and more time on building your applications. Plus, with Confluent’s Schema Registry, you can ensure data compatibility across your entire system.

Security features

Apache Kafka provides solid security features, including TLS (still called SSL in Kafka’s configuration) for encryption, SASL for authentication, and ACLs for authorization. However, setting these up and managing them requires significant effort. This can be particularly challenging in dynamic environments where security configurations need frequent updates. Many organizations find they need to dedicate substantial resources to maintain robust security in their Apache Kafka deployments.
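Kafka’s ACLs follow an evaluation rule that is easy to get wrong in practice: a matching DENY always wins over a matching ALLOW, and, with an authorizer enabled and default settings, a request that matches no ACL is denied (super users aside). The sketch below models that rule; the `Acl` type and `is_authorized` function are hypothetical, and real Kafka ACLs also match on host, wildcard and prefixed resource names, and many more operations and resource types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:analytics"
    operation: str   # e.g. "READ", "WRITE"
    topic: str
    permission: str  # "ALLOW" or "DENY"


def is_authorized(acls, principal: str, operation: str, topic: str) -> bool:
    """Deny wins over allow; with no matching ACL, the request is denied."""
    matching = [
        a for a in acls
        if a.principal == principal and a.operation == operation and a.topic == topic
    ]
    if any(a.permission == "DENY" for a in matching):
        return False  # an explicit DENY overrides any ALLOW
    return any(a.permission == "ALLOW" for a in matching)


acls = [
    Acl("User:analytics", "READ", "orders", "ALLOW"),
    Acl("User:analytics", "WRITE", "orders", "DENY"),
]
print(is_authorized(acls, "User:analytics", "READ", "orders"))   # True
print(is_authorized(acls, "User:analytics", "WRITE", "orders"))  # False (explicit DENY)
print(is_authorized(acls, "User:billing", "READ", "orders"))     # False (no matching ACL)
```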

Confluent Kafka builds on this foundation, offering additional features like Role-Based Access Control (RBAC) and centralized audit logs. They also provide tools to simplify security management, which can be a big plus for enterprises with strict compliance requirements. Confluent’s security features are designed to integrate seamlessly with existing enterprise security systems, making it easier to maintain a consistent security posture across the entire data infrastructure.
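The practical difference with role-based access control is one of indirection: instead of granting each principal individual permissions, you bind principals to roles that bundle permissions, which is easier to audit and update as teams change. The sketch below shows that idea with made-up role names and a toy permission model; it is not Confluent’s RBAC API, and it does not reproduce Confluent’s predefined roles.

```python
# Hypothetical roles, each bundling a set of (operation, resource) permissions.
ROLES = {
    "topic-reader": {("READ", "orders"), ("DESCRIBE", "orders")},
    "topic-writer": {("WRITE", "orders"), ("DESCRIBE", "orders")},
}

# Role bindings: principal -> roles. Changing someone's access means editing one binding.
BINDINGS = {
    "User:analytics": {"topic-reader"},
    "User:checkout": {"topic-reader", "topic-writer"},
}


def can(principal: str, operation: str, resource: str) -> bool:
    """Allow the action if any role bound to the principal grants it."""
    return any(
        (operation, resource) in ROLES[role]
        for role in BINDINGS.get(principal, set())
    )


print(can("User:checkout", "WRITE", "orders"))   # True
print(can("User:analytics", "WRITE", "orders"))  # False
```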

Monitoring and management

With Apache Kafka, you’re responsible for setting up your own monitoring and management tools. Several open-source options are available, but setting up a comprehensive monitoring system requires effort. This can lead to a patchwork of tools that may not always work well together, potentially leaving blind spots in your monitoring coverage.
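Whatever tooling you choose, one metric almost every Kafka monitoring setup tracks is consumer lag: for each partition, the log-end offset minus the consumer group’s committed offset. The sketch below computes per-partition and total lag from two dicts of offsets with made-up values; in a real deployment you would fetch these from the cluster, for example with `kafka-consumer-groups.sh --describe` or an admin client.

```python
def consumer_lag(end_offsets: dict, committed: dict) -> dict:
    """Per-partition lag = log-end offset - committed offset.

    Partitions with no committed offset are treated as lagging from offset 0.
    That is a simplifying assumption: the log may start later, and where a new
    consumer actually begins depends on its auto.offset.reset setting.
    """
    return {p: end_offsets[p] - committed.get(p, 0) for p in end_offsets}


end_offsets = {0: 1_500, 1: 1_480, 2: 990}
committed = {0: 1_500, 1: 1_200}  # partition 2 has never been committed

lag = consumer_lag(end_offsets, committed)
print(lag)                # {0: 0, 1: 280, 2: 990}
print(sum(lag.values()))  # 1270
```

A lag that keeps growing over time, rather than a single large value, is usually the signal worth alerting on.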

Confluent Control Center helps with managing and monitoring your Kafka clusters. It provides real-time monitoring and alerts and allows you to manage your entire Kafka ecosystem from a single interface. This can significantly reduce the operational overhead of running Kafka. The Control Center also offers predictive maintenance features, helping teams proactively address potential issues before they impact performance or availability.

Community and ecosystem

Apache Kafka has a vibrant, active open-source community. This means a wealth of resources, third-party tools, and community support. This can be a significant advantage if you enjoy being part of an open-source community and don’t mind rolling up your sleeves. The community-driven nature also means that new features and improvements are constantly being developed and shared.

Confluent, while built on open-source Kafka, is a commercial product. However, they contribute significantly to the Kafka project and offer their own community resources. With Confluent, you get the backing of the original Kafka creators and a team of experts. This can provide peace of mind for organizations that require enterprise-grade support and want to ensure they’re implementing Kafka best practices from the outset.

Comparison table

| Comparison | Apache Kafka | Confluent Kafka |
|---|---|---|
| Use cases | Real-time stream processing, messaging, log aggregation, and event sourcing. | Same as Apache Kafka, plus advanced features for enterprise use cases like multi-datacenter replication, data governance, and security. |
| Popularity | Widely used by developers and organizations of all sizes. | Popular among large enterprises, especially those that require advanced features and support services. |
| Technology | Open-source distributed event streaming platform. | Built on top of Apache Kafka; provides a more comprehensive event streaming platform with additional features and tools. |
| Performance | High throughput, low latency, and fault-tolerant. | Same core performance as Apache Kafka, plus additional performance optimizations and monitoring tools. |
| Pricing | Free and open source. | Confluent Platform offers a free Community edition and a paid Enterprise edition with additional features and support services; Confluent Cloud is billed by usage. |
| Features | Core features include pub/sub messaging, stream processing, and fault-tolerant storage. | Adds features like Schema Registry, connectors, ksqlDB, multi-datacenter replication, and data governance tools. |
| Licensing | Apache License 2.0 | Apache License 2.0 for the Kafka core, the source-available Confluent Community License for components such as Schema Registry and ksqlDB, and the commercial Confluent Enterprise License for enterprise features. |
| Ease of use | Requires some expertise to set up and configure, but has a relatively simple API for developers. | Provides additional tools and services to simplify deployment, configuration, and management. |
| Support and services | Community support available, but no formal support from the Apache Kafka project. | Offers enterprise-grade support services, training, and consulting. |
| Community | Large and active open-source community. | Smaller community, but focused on enterprise use cases and features. |
| Integration | Integrates with a wide variety of data sources and processing frameworks. | Same as Apache Kafka, plus integrations with Confluent’s own tools and services. |
| Security | Supports TLS encryption, SASL authentication, and ACL-based authorization. | Same as Apache Kafka, plus features like role-based access control (RBAC) and centralized audit logs. |
| Monitoring | Provides basic monitoring through JMX metrics and command-line tools. | Adds monitoring tools and dashboards through Confluent Control Center. |
| Deployment | Can be deployed on-premises or in the cloud. | Same as Apache Kafka, plus a fully managed option, Confluent Cloud. |
| Ecosystem | Large and growing ecosystem of third-party tools and services. | Same as Apache Kafka, plus tools and services from Confluent. |
| Connectors | Provides the Kafka Connect framework; connectors are available from the open-source community and third parties. | Same as Apache Kafka, plus pre-built and fully managed connectors developed by Confluent. |

Pros and cons of Confluent Kafka vs. Apache Kafka

Apache Kafka

Advantages:

1. Throughput and scalability: Apache Kafka excels in handling massive volumes of data. Its ability to scale horizontally allows it to process millions of messages per second, making it suitable for high-throughput scenarios.

2. Flexibility: With full access to the source code, users can customize Apache Kafka to meet specific requirements. This level of control allows for fine-tuning and optimization based on unique use cases.

3. Cost-effective: As an open-source solution, Apache Kafka can be more economical for organizations with the in-house expertise to manage and maintain it.

Disadvantages:

1. Complexity: Apache Kafka has a steep learning curve. Setting up, configuring, and managing a Kafka cluster requires significant expertise in distributed systems and event streaming.

2. Maintenance: Running Apache Kafka requires ongoing attention and resources. Organizations need to allocate dedicated personnel for cluster management, upgrades, and troubleshooting.

3. Limited out-of-the-box features: While Apache Kafka provides robust core functionality, advanced features often require additional development or integration with third-party tools.

Confluent Kafka

Advantages:

1. Ease of use: Confluent Kafka offers a more user-friendly experience with its managed service and additional tools like ksqlDB and Confluent Control Center.

2. Managed service: Confluent handles many operational aspects, including maintenance, upgrades, and scaling, allowing teams to focus more on application development rather than infrastructure management.

3. Advanced features: Confluent provides additional features, such as Schema Registry and robust security options, that enhance Kafka’s capabilities out of the box.

Disadvantages:

1. Cost: Confluent’s managed service and commercial features carry license or usage fees that open-source Apache Kafka does not, although self-managing Kafka has its own infrastructure and staffing costs.

2. Less direct control: While Confluent’s managed service reduces operational burden, it also means less direct control over the underlying Kafka infrastructure.

3. Potential vendor lock-in: Reliance on Confluent’s proprietary features can make it challenging to switch to other Kafka-based solutions in the future.

Making the right choice with Confluent Kafka vs. Apache Kafka

So, you’ve seen the differences between Apache Kafka and Confluent Kafka. But how do you decide which one is right for your organization? Let’s break down the key factors to consider and some scenarios where each platform might shine.

When choosing between Apache Kafka and Confluent Kafka, you’ll want to evaluate:

- Budget: How much are you willing to invest in your event streaming platform?

- In-house expertise: Do you have Kafka experts on your team, or are you looking for a more managed solution?

- Required features: Do you need advanced features like ksqlDB or Schema Registry out of the box?

- Scalability needs: How much data are you planning to process, and how quickly do you need to scale?

- Integration requirements: What existing systems do you need to connect to your Kafka deployment?

Apache Kafka might be the way to go if you’re working with a tight budget and have the in-house expertise to manage Kafka yourself. The same is true if you need full control over your infrastructure and want to customize every aspect of your Kafka deployment or if your team enjoys working with open-source technologies and doesn’t mind getting their hands dirty with configuration and maintenance. Apache Kafka will work if you’re okay with developing or integrating additional tools for advanced features as your needs grow.

Confluent Kafka could be the better choice if you’re looking for a more turnkey solution that lets you focus on building applications rather than managing infrastructure, or if:

1. You need advanced features like ksqlDB or Schema Registry right out of the gate.

2. Your organization values professional support and wants to ensure you’re following Kafka best practices from the start.

3. You’re planning to deploy Kafka across multiple regions or in a hybrid cloud environment and want tools to simplify this process.

The right choice depends on your specific needs, resources, and long-term strategy. Whether you choose Apache Kafka or Confluent Kafka, you’re getting a powerful event streaming platform that can handle massive amounts of real-time data. The key is to align your choice with your organization’s capabilities and goals.

Managed Kafka services

So, you’re sold on the power of Kafka, but the thought of managing it yourself is giving you a headache? Confluent Kafka isn’t the only managed Apache Kafka solution available. Managed Kafka services are exactly what they sound like: Kafka deployments that a third-party provider manages. You get all the benefits of Kafka without the operational headaches.

Why would you want to use a managed service? Well, there are a few compelling reasons:

1. Less operational overhead: No more late-night calls because a broker went down. The service provider handles all that for you.

2. Automatic scaling and updates: Need to handle more data? Most managed services can scale your cluster automatically. And updates? They’re handled seamlessly in the background.

3. Improved security management: Many managed services come with robust security features out of the box, saving you the hassle of configuring everything yourself.

4. Expert support: Got a Kafka conundrum? With a managed service, you’ve got a team of experts just a support ticket away.

As a cloud-native platform that provides managed services for Apache Kafka, DoubleCloud allows users and companies to streamline the deployment and management of their data-streaming infrastructure. With DoubleCloud, you can quickly and easily provision Kafka clusters on GCP or AWS, configure them to meet your specific needs, and let DoubleCloud handle the ongoing management and maintenance. This takes the burden of managing and scaling Kafka off your internal IT team, allowing them to focus on other critical business needs.

Where DoubleCloud really excels is cost-efficiency. We’ve helped customers cut costs by up to 40%. DoubleCloud operates a pay-for-what-you-use model without hidden costs, and lets customers take advantage of:

- Sustained-use discounts of up to 20%

- Bring-your-own-cloud

- ARM processors that can outperform x86 processors by almost 40%

- Hybrid storage to decrease your storage costs by up to 5X

DoubleCloud’s managed Kafka service is highly flexible, allowing you to easily integrate Kafka with other tools and services in your data streaming infrastructure.

Read more about the significant advantages of managing Kafka on DoubleCloud.

Conclusion: The right choice for your organization

So, what conclusions can we draw?

Apache Kafka excels in scenarios requiring maximum performance and complete control. It’s best suited for organizations with a team of Kafka experts and the resources to manage a complex distributed system. As an open-source solution, it also offers potential cost savings for those with the necessary in-house expertise.

Confluent Kafka builds upon Apache Kafka’s foundation, offering additional features and managed services that simplify deployment, scaling, and maintenance. It’s particularly well-suited for organizations that prefer to focus on application development rather than infrastructure management.

The optimal choice depends on your specific requirements, resources, and long-term strategy. Your budget, in-house expertise, required features, scalability needs, and integration requirements are key factors to consider.

For teams that want Kafka without running it themselves, managed Kafka services like DoubleCloud offer an ideal solution. These services provide many of Confluent Kafka’s benefits while mitigating some potential drawbacks. DoubleCloud combines Apache Kafka’s power with the convenience of a managed service. To explore how DoubleCloud can provide Kafka’s benefits without the operational complexities, visit our managed Kafka service page to discover how we can help optimize your data streaming infrastructure.

Frequently asked questions (FAQ)

What companies use Apache Kafka?

Many businesses, including LinkedIn, Uber, Netflix, Airbnb, Twitter, and many more, use Apache Kafka. As a popular option for large-scale data streaming and processing requirements, Apache Kafka is widely used by businesses in a variety of industries.

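What is Apache Kafka good for?

Apache Kafka is well suited to real-time data pipelines, microservices communication, log aggregation, stream processing, event sourcing, and metrics and monitoring, especially when those workloads need high throughput, low latency, and fault tolerance.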

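Is Apache Kafka free?

Yes. Apache Kafka is free and open source under the Apache License 2.0. You still pay for the infrastructure it runs on and for the people who operate it, which is why some teams choose a managed service instead.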
