Introduction to data streaming: What it is and why it is important

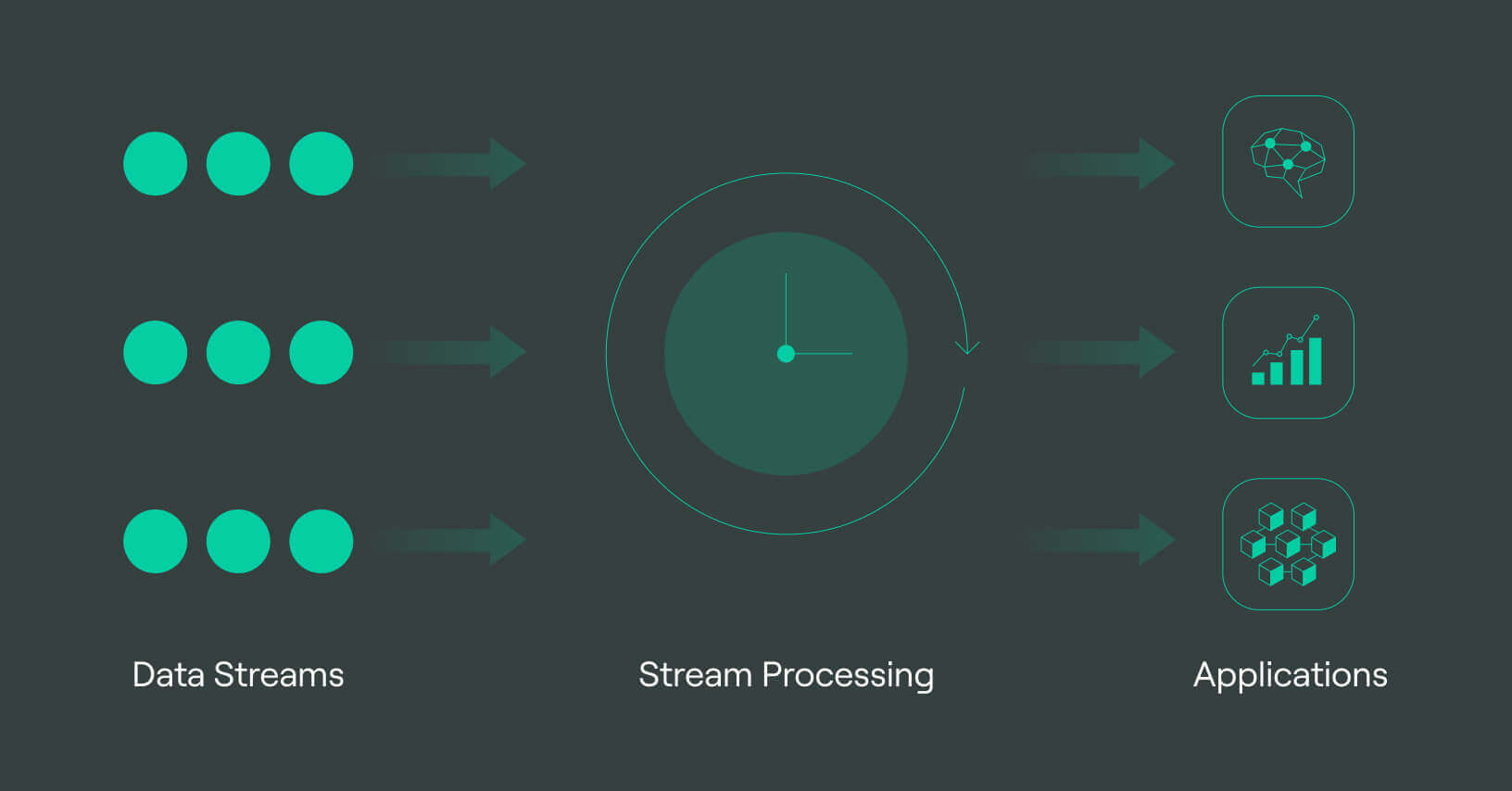

Data streaming is the continuous flow of data elements ordered in a sequence, which is processed in real-time or near-real-time to gather valuable insights. It is important because it lets organizations monitor day-to-day operations, analyze market trends, detect fraud, perform predictive analytics, and much more. Unlike batch processing, streaming data applications process data as it arrives, providing insights on demand. Stream processing systems like Apache Spark Streaming can process data streams, optionally with machine learning algorithms, from incoming data sources like social media feeds, user preferences, location data, home security systems, security logs, and other log files. The processed data is then stored in a data warehouse, data lake, or other form of data storage for further analysis.

What is a data stream?

Data streaming is a modern approach to processing and analyzing data in real-time, as opposed to batch processing methods. A data stream is a continuous flow of data elements that are ordered in a sequence and processed as they are generated. Data streams differ from the bounded datasets used in batch processing in that they are continuous, unbounded, and potentially high-velocity with high variability.

Unlike traditional data processing, where data is collected and processed in batches, data streams deliver data continuously, making it possible to process each record as soon as it is created. This gives businesses the ability to monitor day-to-day operations as they happen and respond quickly.

Key features of data streams include their continuous flow, infinite length, unbounded nature, high velocity, and potentially high variability. They are often used in stream processing systems like Apache Spark Streaming.

In this article, we’ll talk about:

- What is a data stream?

- Importance of data streams in modern data processing

- Benefits of data streaming for businesses

- Characteristics of data streams

- Types of data streams

- Technologies and tools for processing data streams

- Challenges and solutions in processing data streams

- Applications of data streams

- How DoubleCloud helps with data streaming?

- Final words

Importance of data streams in modern data processing

Data streams play a critical role in modern data processing, enabling real-time insights and automated actions. Businesses can analyze data as it’s generated and make decisions based on up-to-the-minute information. This is in contrast to batch processing, which can take hours or even days to complete.

Data streams allow for continuous flow of data, providing insights in real-time and enabling timely data analysis. This is particularly important for industries such as finance, where real-time insights can help detect fraud and other security breaches. It’s also important for home security systems, where real-time event data can alert homeowners to potential threats.

Social media feeds generate a continuous stream of data, making it essential for analyzing user behavior and preferences. Retailers can use data streams to monitor market trends and pricing data, while logistics companies can use them to optimize inventory management and troubleshoot systems in real-time.

In the healthcare industry, data streams enable continuous monitoring of patient data, allowing for early detection and intervention in the event of critical health issues. Data streaming can also be used in machine learning algorithms to derive insights from continuous data and improve predictive analytics.

In short, data streams are essential for modern data processing and decision-making, enabling businesses to derive valuable insights from the continuous stream of real-time data generated by their internal IT systems and external data sources.

Benefits of data streaming for businesses

Data streaming provides several benefits for businesses, including the ability to process data instantaneously, make faster decisions, and derive valuable insights that can enhance business outcomes. With streaming data, businesses can continuously collect and process data from various sources, including social media feeds, customer interactions, and market trends, to name a few.

Data streaming also enables businesses to automate actions based on real-time insights, such as information security or inventory management, thereby improving the way they run their operations. It also helps in predictive analytics by processing data as it comes in, enabling businesses to make predictions based on current and historical data.

Furthermore, stream processing platforms are typically built with fault tolerance in mind, so that systems continue to operate even if individual components fail. With low latency and continuous data flow, SREs and data engineers can monitor logs and troubleshoot issues in real-time. Overall, data streaming provides a powerful way for businesses to derive insights, improve decision-making, and gain a competitive advantage.

Characteristics of data streams

Continuous flow of data: Data streams are generated continuously and in real-time, without any fixed start or end time. They are ongoing, and their structure or format is not always fixed in advance.

Infinite (or potentially infinite) length: Data streams have an infinite or potentially infinite length because they are continuously generated, making it impossible to predict the size of the data.

Unbounded nature: Data streams are unbounded, meaning that they have no defined beginning or end, and they can go on indefinitely.

High velocity: Data streams are generated at high speeds and require real-time processing, often measured in milliseconds. This high velocity requires special processing techniques such as stream processing to be able to keep up with the data.

Potentially high variability: Data streams can have varying characteristics and data formats, making it difficult to process and analyze them. The data may be structured, unstructured, or semi-structured, and it can vary in volume, velocity, and variety.
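To make the "continuous" and "unbounded" characteristics concrete, here is a minimal Python sketch. The `sensor_readings` source is hypothetical and simply yields simulated values forever; `running_mean` shows one way to compute a result incrementally, in constant memory, without ever holding the whole stream.

```python
import itertools
import random
from typing import Iterable, Iterator


def sensor_readings(seed: int = 0) -> Iterator[float]:
    """Hypothetical unbounded source: yields simulated readings forever."""
    rng = random.Random(seed)
    while True:
        yield 20.0 + rng.uniform(-1.0, 1.0)


def running_mean(stream: Iterable[float]) -> Iterator[float]:
    """Yield the mean of all values seen so far, using constant memory."""
    count = 0
    mean = 0.0
    for value in stream:
        count += 1
        mean += (value - mean) / count  # incremental update, no history kept
        yield mean


if __name__ == "__main__":
    # The stream never ends, so we only look at the first few results.
    for current in itertools.islice(running_mean(sensor_readings()), 5):
        print(round(current, 3))
```

Because the source never terminates, the consumer decides how much to read (here with `itertools.islice`), which is the main practical difference from processing a finite batch.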

Types of data streams

Event streams

Event streams are continuous streams of real-time event data generated by different sources, such as IoT devices, transactional systems, or customer interactions. Event streams require real-time data processing to derive insights that can help organizations make informed decisions. Examples of event streams include customer interactions, market trends, and home security systems.

Log streams

Log streams are generated by internal IT systems or applications, which produce log files that contain information about events such as system errors, security incidents, and troubleshooting details. Log streams require processing tools to extract meaningful insights and enable organizations to monitor their system components' performance, detect faults, and improve system efficiency.
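As a rough illustration of log stream processing, the sketch below counts log lines by severity as they arrive. The line format (`<timestamp> <LEVEL> <message>`) is an assumption made for this example; real log formats vary widely.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed line format for this sketch: "<timestamp> <LEVEL> <message>"
LOG_PATTERN = re.compile(r"^(?P<time>\S+) (?P<level>[A-Z]+) (?P<message>.*)$")


def count_levels(lines: Iterable[str]) -> Counter:
    """Count log lines per level; lines that do not match are counted as UNPARSED."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line.strip())
        if match is None:
            counts["UNPARSED"] += 1
            continue
        counts[match.group("level")] += 1
    return counts
```

Since `count_levels` accepts any iterable, it could be fed from a file object or a generator that tails a log, one line at a time.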

Sensor streams

Sensor streams are generated by sensors that continuously collect data such as location, temperature, and stock levels for inventory management. Sensor streams require real-time data processing to enable organizations to make informed decisions on how they operate and improve the efficiency of their business processes.

Social media streams

Social media streams are generated by social media feeds that produce continuous data on users' preferences and interactions. Social media streams require processing tools to extract meaningful insights and enable organizations to improve their customer interactions, detect market trends, and build brand awareness.

Click streams

Click streams are generated by users' interactions with websites and mobile applications, and provide data on their behavior, preferences, and interests. These insights help organizations improve user experiences and increase sales.
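A common first step with click streams is grouping clicks into sessions. The sketch below is one simple way to do that, assuming a hypothetical `(user id, timestamp, page)` record shape and a 30-minute inactivity gap; both are illustrative choices, not a standard.

```python
from typing import Dict, Iterable, List, Tuple

Click = Tuple[str, int, str]  # (user id, timestamp in seconds, page)


def sessionize(
    clicks: Iterable[Click], gap_seconds: int = 1800
) -> Dict[str, List[List[str]]]:
    """Group each user's clicks into sessions split by inactivity gaps.

    Assumes clicks arrive in timestamp order for each user.
    """
    sessions: Dict[str, List[List[str]]] = {}
    last_seen: Dict[str, int] = {}
    for user, timestamp, page in clicks:
        is_new = user not in last_seen or timestamp - last_seen[user] > gap_seconds
        if is_new:
            sessions.setdefault(user, []).append([])  # start a new session
        sessions[user][-1].append(page)
        last_seen[user] = timestamp
    return sessions
```

The function keeps only the last-seen timestamp per user as state, so each click is handled once as it arrives.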

Technologies and tools for processing data streams

Stream processing frameworks

Stream processing frameworks such as Apache Flink, Apache Storm, and Apache Kafka Streams are used for processing streaming data in real-time. They enable developers to build streaming data applications that can handle high volumes of data and make decisions with real-time insights.
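One core idea these frameworks share is windowing: cutting an unbounded stream into finite chunks that can be aggregated. The following plain-Python sketch of a tumbling (fixed, non-overlapping) window is only a conceptual illustration. It does not use the Flink, Storm, or Kafka Streams APIs, and it assumes events arrive in timestamp order.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Optional, Tuple

Event = Tuple[float, str]  # (event time in seconds, key)


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: float
) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Emit (window_start, counts per key) each time a window closes.

    Assumes events arrive in timestamp order.
    """
    current_start: Optional[float] = None
    counts: Counter = Counter()
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        if current_start is None:
            current_start = window_start
        if window_start != current_start:
            yield current_start, dict(counts)  # previous window is complete
            current_start, counts = window_start, Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last, partial window
```

Real frameworks add what this sketch leaves out, such as distributing the work across machines, persisting state, and handling late events.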

Real-time analytics tools

Real-time analytics tools such as Tableau, QlikView, and Power BI help organizations analyze data instantaneously and make decisions based on the latest information available. These tools allow businesses to visualize real-time data and identify trends and patterns as they emerge.

Message brokers

Message brokers such as Apache Kafka or RabbitMQ are used to manage and transport messages between applications and systems. They provide a reliable and scalable way to handle and monitor the continuous flow of data, such as logs or metrics, in real-time.
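The producer/consumer pattern behind a message broker can be sketched in-process with Python's standard library. This is only a stand-in to show the decoupling: it is not the Kafka or RabbitMQ client API, and the `SENTINEL` end-of-stream marker is an assumption made for this demo.

```python
import queue
import threading
from typing import List

SENTINEL = None  # hypothetical end-of-stream marker, used only in this demo


def producer(channel: "queue.Queue", messages: List[str]) -> None:
    for message in messages:
        channel.put(message)  # blocks when the buffer is full (backpressure)
    channel.put(SENTINEL)


def consumer(channel: "queue.Queue", received: List[str]) -> None:
    while True:
        message = channel.get()
        if message is SENTINEL:
            break
        received.append(message.upper())  # stand-in for real processing


def run_demo(messages: List[str]) -> List[str]:
    channel: "queue.Queue" = queue.Queue(maxsize=2)  # small bounded buffer
    received: List[str] = []
    workers = [
        threading.Thread(target=producer, args=(channel, messages)),
        threading.Thread(target=consumer, args=(channel, received)),
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return received
```

The producer and consumer never call each other directly; they only share the channel. A real broker provides the same separation across processes and machines, with durability and scaling on top.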

In-memory databases

In-memory databases such as Apache Ignite and Redis are used to store and process streaming data in real-time. These databases provide fast access to data, enabling businesses to make real-time decisions based on the latest information.

Challenges and solutions in processing data streams

Handling high velocity and volume of data

One of the biggest challenges in processing data streams is handling the high volume and velocity of data. With the advent of new technologies, data is generated and collected at an unprecedented rate. The sheer volume of data generated by various sources can overwhelm traditional batch processing systems, resulting in data latency and poor performance. One solution to this challenge is stream processing systems, which can handle continuous streams of data and enable real-time data processing. These systems can process data as it is generated and provide real-time analytics.

Managing data skew and unevenness

Data skew and unevenness can be another challenge for processing data streams. In many cases, data is not evenly distributed, and some sources may generate more data than others, resulting in a data skew. This can lead to inefficiencies and accuracy issues when processing the data. One solution to this challenge is to use data partitioning techniques, where the data is divided into smaller partitions and distributed evenly across the processing nodes. This can help improve the processing efficiency and accuracy of the data.
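The sketch below illustrates hash partitioning and one common mitigation for a "hot" key, often called key salting. Key salting is a technique added here for illustration rather than something the text above prescribes, and the key names are made up.

```python
import zlib
from collections import Counter
from typing import Iterable, List


def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash partitioning (crc32 is deterministic across runs)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def salted_key(key: str, sequence: int, salt_buckets: int) -> str:
    """Spread one hot key over several sub-keys, e.g. 'store-1#2'."""
    return f"{key}#{sequence % salt_buckets}"


def partition_load(keys: Iterable[str], num_partitions: int) -> List[int]:
    """Return how many records land on each partition."""
    counts = Counter(partition_for(key, num_partitions) for key in keys)
    return [counts.get(p, 0) for p in range(num_partitions)]
```

Without salting, every record for a hot key lands on the same partition. With salting, its records are spread across several sub-keys, at the cost of a second aggregation step to merge the per-sub-key results.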

Ensuring data quality and consistency

Another challenge in processing data streams is ensuring data quality and consistency. Data quality is crucial for making accurate decisions, but processing large volumes of raw data in real-time can lead to inconsistencies and errors. One solution to this challenge is to implement data validation and cleansing techniques, where data is checked for accuracy and completeness. Real-time data quality checks can help detect errors and inconsistencies as they occur, enabling timely remedial actions.
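A real-time quality check can be as simple as validating each record as it passes through and routing bad ones aside. In this sketch, the field names (`sensor_id`, `temperature_c`) and the plausible temperature range are assumptions chosen for illustration.

```python
from typing import Any, Dict, Iterable, Iterator, List, Tuple

Record = Dict[str, Any]


def validate(record: Record) -> List[str]:
    """Return a list of problems; an empty list means the record is clean."""
    problems = []
    if not record.get("sensor_id"):
        problems.append("missing sensor_id")
    value = record.get("temperature_c")
    if not isinstance(value, (int, float)) or isinstance(value, bool):
        problems.append("temperature_c is not a number")
    elif not -90.0 <= value <= 60.0:
        problems.append("temperature_c out of plausible range")
    return problems


def split_stream(records: Iterable[Record]) -> Iterator[Tuple[str, Record]]:
    """Tag each record as 'ok' or 'rejected' as it passes through."""
    for record in records:
        problems = validate(record)
        if problems:
            yield "rejected", {**record, "problems": problems}
        else:
            yield "ok", record
```

Keeping the rejected records, together with the reason they failed, makes it possible to investigate and repair them later instead of silently dropping data.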

Dealing with data loss and out-of-order arrival

Data loss and out-of-order arrival are further challenges when processing data streams. Data may be lost due to network issues, system failures, or other reasons. Moreover, data may arrive out of order, which can cause significant problems when processing it. The solution to this challenge is to use stream processing software that provides durability and fault tolerance for streamed data. These systems can ensure that all data is stored and processed correctly, even if some components fail or data arrives out of order.
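One way to handle out-of-order arrival is to buffer events briefly and release them in timestamp order once they are unlikely to be preceded by anything else. The sketch below assumes a fixed tolerance, `max_delay`, and simply drops events that arrive later than that; production systems use more elaborate watermarking and usually keep late events.

```python
import heapq
from typing import Iterable, Iterator, List, Optional, Tuple

Event = Tuple[int, str]  # (event time, payload)


def reorder(events: Iterable[Event], max_delay: int) -> Iterator[Event]:
    """Re-emit events in timestamp order, tolerating up to max_delay of lateness.

    An event is released once the newest timestamp seen so far is at least
    max_delay ahead of it. Events that arrive later than that are dropped.
    """
    buffer: List[Event] = []
    watermark: Optional[int] = None  # newest event time seen minus max_delay
    for event in events:
        timestamp = event[0]
        if watermark is not None and timestamp < watermark:
            continue  # too late; a real system might route this to a side output
        heapq.heappush(buffer, event)
        candidate = timestamp - max_delay
        watermark = candidate if watermark is None else max(watermark, candidate)
        while buffer and buffer[0][0] <= watermark:
            yield heapq.heappop(buffer)
    while buffer:  # end of input: flush what is left
        yield heapq.heappop(buffer)
```

A larger `max_delay` tolerates more disorder but holds events longer before releasing them, so the setting is a trade-off between completeness and latency.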

Scaling and deploying stream processing applications

Scaling and deploying stream processing applications can also be a challenge, as the data volume and processing requirements can increase rapidly. One way to solve this is by using cloud-based stream processing systems that can scale dynamically to meet the processing needs of the data. These systems can be easily deployed and configured, enabling businesses to scale their stream processing applications as needed, without the need for significant hardware investments.

Applications of data streams

Use cases

In the fast-paced world of data, streams are the most valuable players. They are excellent for real-time analytics, such as monitoring user behavior or tracking stock market fluctuations. E-commerce giants use them to power instant recommendation engines, while social media platforms stream data to personalize feeds and ads. In finance, they are essential for fraud detection, and in gaming, they enhance player experiences.

Fraud detection: Data streams are widely used for fraud detection because they enable real-time identification of and response to fraudulent activities. For example, a credit card company can process streaming data from all transactions to detect fraud instantaneously. By analyzing the data, a stream processor can identify patterns and anomalies to flag suspicious transactions for further investigation. This enables the company to take immediate action to prevent fraud before it happens.
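As a toy illustration of anomaly flagging on a transaction stream, the sketch below keeps running statistics per card and flags amounts far above that card's own history. The threshold, the minimum history length, and the record shape are arbitrary assumptions; real fraud systems use far richer features and models.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Transaction = Tuple[str, float]  # (card id, amount)


class RunningStats:
    """Welford's online algorithm for mean and variance."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)

    def stddev(self) -> float:
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def flag_suspicious(
    transactions: Iterable[Transaction],
    threshold: float = 3.0,
    min_history: int = 5,
) -> Iterator[Transaction]:
    """Yield transactions far above the card's own spending history."""
    history: Dict[str, RunningStats] = defaultdict(RunningStats)
    for card_id, amount in transactions:
        stats = history[card_id]
        if stats.count >= min_history:
            spread = stats.stddev()
            if spread > 0 and (amount - stats.mean) / spread > threshold:
                yield card_id, amount
        stats.update(amount)
```

Each transaction is scored against the statistics gathered before it and only then added to the history, so the decision is available the moment the transaction arrives.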

Predictive maintenance: Data streams can be used for predictive maintenance to improve the reliability and availability of machines. For instance, a manufacturer can use sensors on machinery to collect data in real-time. The data can be analyzed to detect patterns that indicate when a machine is likely to fail. With this information, the manufacturer can perform maintenance before the machine breaks down, reducing downtime and maintenance costs.
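A very simple version of this idea is a moving average over recent sensor readings that raises an alert when it crosses a limit. The window size and limit below are placeholders, and a threshold on a moving average stands in for the failure-prediction model a real system would use.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def maintenance_alerts(
    readings: Iterable[float], window: int, limit: float
) -> Iterator[Tuple[int, float]]:
    """Yield (index, moving average) whenever the average of the last
    `window` readings exceeds `limit`."""
    recent: Deque[float] = deque(maxlen=window)  # oldest reading drops out automatically
    for index, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window:
            average = sum(recent) / window
            if average > limit:
                yield index, average
```

Averaging over a window smooths out single noisy readings, so an alert reflects a sustained change rather than one spike.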

Real-time personalization: Data streams can be used to personalize the customer experience in real-time. For example, an e-commerce company can use streaming data from user interactions to make personalized product recommendations. By analyzing interaction events in order as they arrive, a stream processor can generate insights into users' preferences and present data-driven recommendations to customers as they browse.

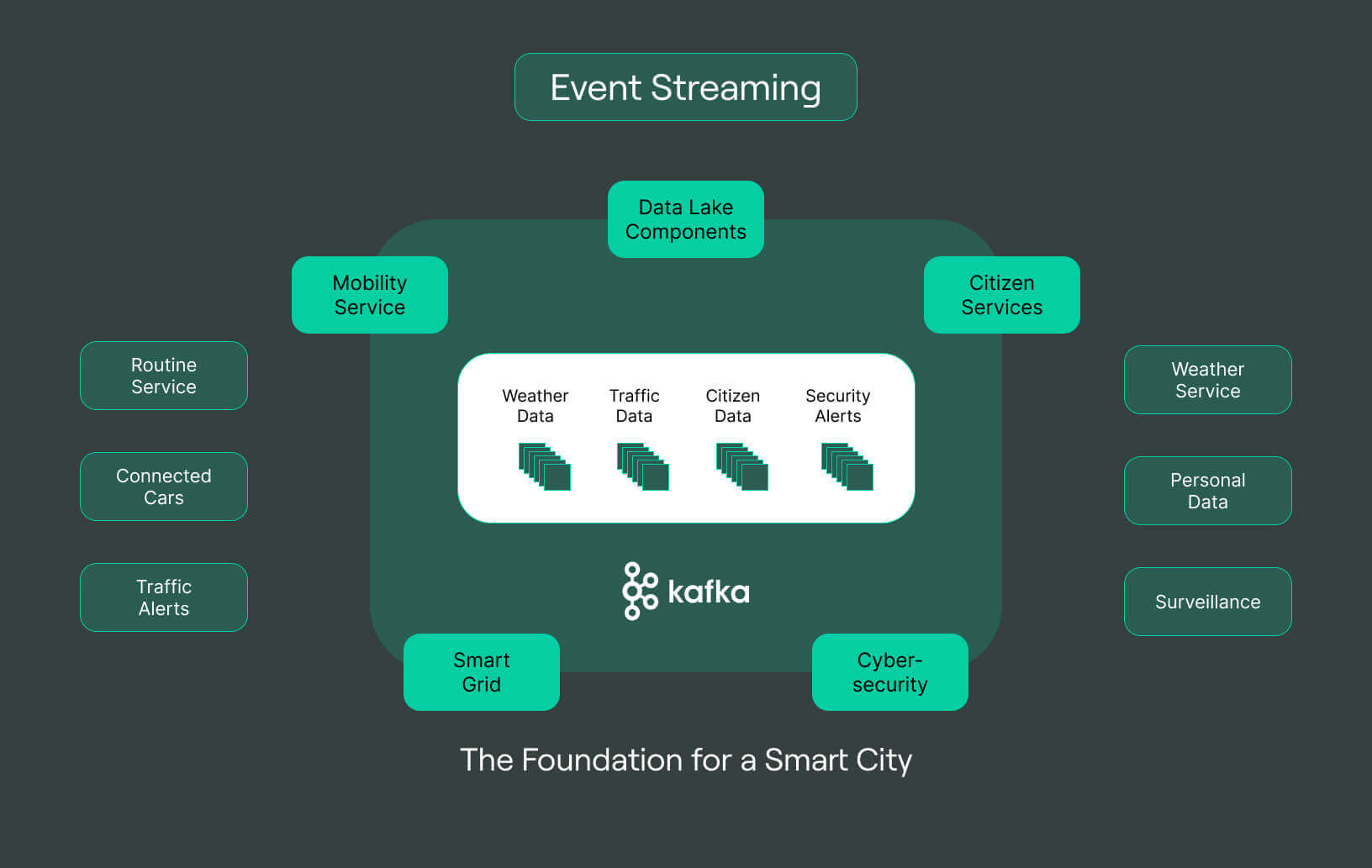

IoT and smart city applications: Data streams are essential for IoT and smart city applications that require continuous data collection and analysis. For example, a smart city can use streaming data from sensors and cameras to monitor traffic, air quality, and weather. By analyzing the data in real-time, the city can make adjustments to optimize traffic flow and improve public safety.

Financial market analysis: Data streams are used in financial markets for real-time analytics and decision-making. For example, a trading firm can use streaming data from stock exchanges to monitor market trends and make trading decisions. By processing data in real-time, a stream processor can detect market anomalies and predict market movements, providing the firm with a competitive edge.

How DoubleCloud helps with data streaming?

DoubleCloud provides businesses with a powerful platform to leverage the benefits of data streaming in their operations, helping them gain valuable insights and improve their business outcomes. The platform offers a range of features, including real-time data ingestion, processing, and analytics, as well as data visualization and reporting tools.

One example of how businesses can use DoubleCloud is for predictive maintenance. By leveraging the platform’s real-time data processing capabilities, businesses can monitor equipment and machinery to detect potential issues before they become serious problems, thereby avoiding costly downtime and repairs.

DoubleCloud can also be used for real-time personalization, such as in e-commerce. By analyzing customer behavior data, businesses can personalize product recommendations and offers in real-time, enhancing the customer experience and driving sales.

DoubleCloud can support IoT and smart city applications by processing and analyzing sensor data from various sources, such as traffic cameras, weather sensors, and more, to improve city planning and resource allocation.

Final words

We have discussed the growing importance of data streaming in today’s business landscape, where real-time insights and actionable information are crucial for driving innovation and gaining a competitive edge. We also talked about the challenges businesses face in processing data streams, such as handling high velocity and volume of data, managing data skew and unevenness, ensuring data quality and consistency, dealing with data loss and out-of-order arrival, and scaling and deploying stream processing applications.

To overcome these challenges, businesses can leverage data streaming platforms such as DoubleCloud, which provide a range of features and capabilities to process streaming data efficiently. With DoubleCloud, businesses can continuously collect and process data in real-time, derive valuable insights, and make data-driven decisions to improve their day-to-day operations.

In conclusion, data streaming is an essential aspect of modern data processing and analysis. It enables businesses to gain real-time insights and drive innovation by leveraging the power of continuously flowing data. DoubleCloud has the potential to enable businesses to harness the full potential of data streaming and stay ahead of the competition.

Frequently asked questions (FAQ)

What is a streaming data pipeline?

A streaming data pipeline is a system that collects, processes, and analyzes continuous data flows in real-time, enabling organizations to make informed decisions quickly and effectively.

What is considered streaming data?

What is real-time data streaming?

What is the difference between data flow and data stream?
