What we've learned from developing our GPT-4 integration

A few months ago, here at DoubleCloud, we discovered that ChatGPT could understand and analyze structured data in formats such as tables, HTML, and CSV. We offer a self-service BI tool at DoubleCloud where users can create vibrant and appealing charts and dashboards.

We often hear from users who struggle to understand the information presented on dashboards created by their analytics teams. They find it hard to interpret puzzling KPIs, metrics, complex graphs, and so on.

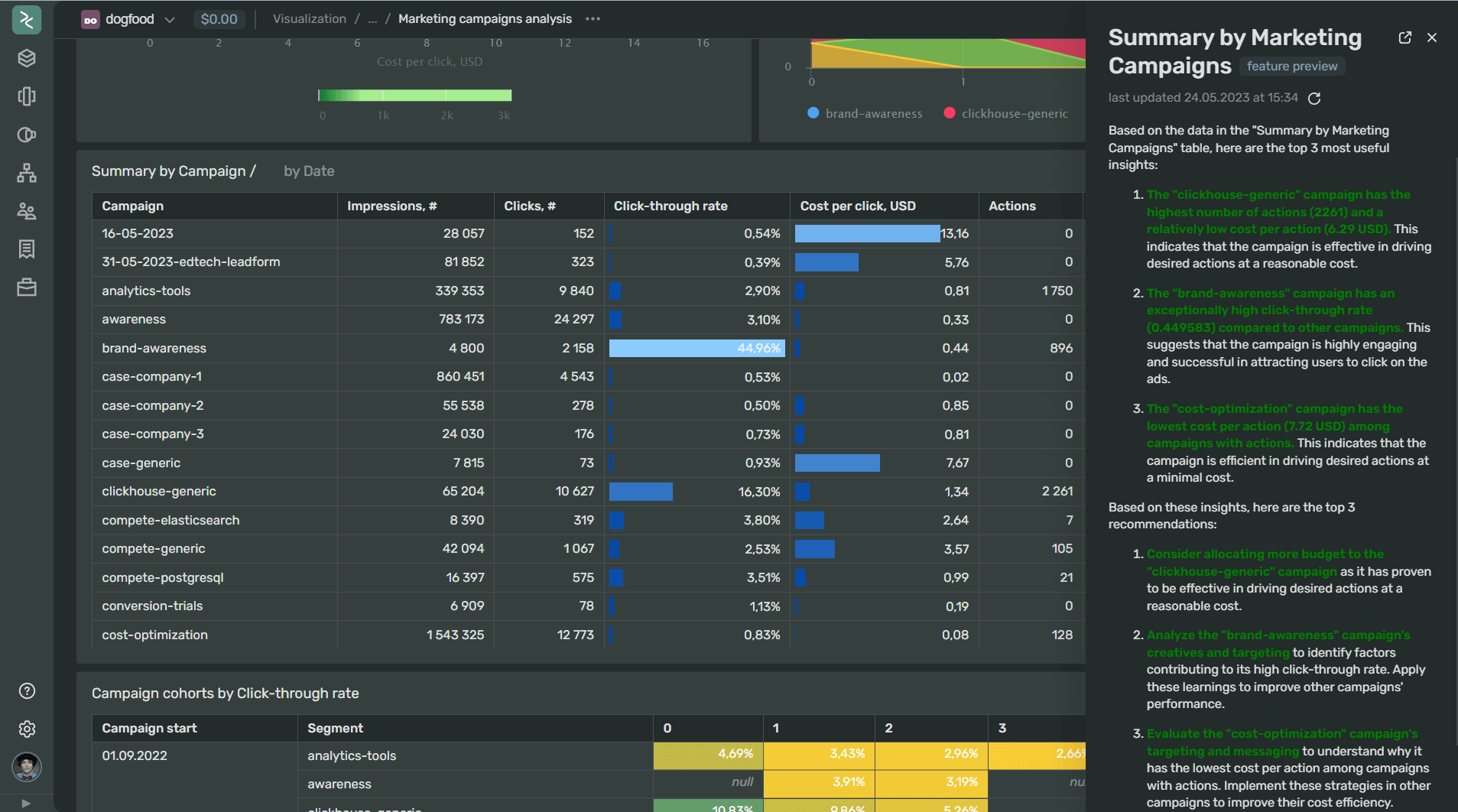

This is why we built an integration with ChatGPT: to help end users quickly get a human-readable explanation of what's happening in a particular chart. ChatGPT provides conclusions, insights, and recommendations within the chart's specific domain of knowledge. Behind the scenes, we build a prompt that describes the chart's context and passes the chart's raw data to the model.

This tool is especially valuable for complicated charts and tables, where a person needs time to take in the context, the numbers, the scale, and other factors. It's like having a business analyst who can quickly give you an overview and business advice.

Below, you can see an example of how AI-Insight provides a quick overview of a large table of marketing campaign performance data, which may not be easy for a human to quickly understand.

Here are some other examples:

1. When analyzing data from marketing campaigns, the AI can reveal trends in advertising channel efficiency and audience behavior. Combined with recommendations for improving metrics such as CAC (customer acquisition cost) and ROMI (return on marketing investment), this makes it an invaluable tool for business owners.

2. Another real-world example comes from the food delivery industry, where the AI automatically understood the business context and identified anomalies across different warehouses based on their KPIs.

With about 20 KPIs across roughly 30 warehouses, around 600 data points in total, it isn't easy for a human to quickly understand and compare them all. ChatGPT, however, identified a few warehouses with significantly lower speed at the packaging stage and suggested sending staff from those warehouses to the best-performing one to learn from it.

As for future plans, we're waiting for access to the multimodal version of ChatGPT so we can test, and potentially implement, using images as input: for example, passing a full screenshot of a dashboard with several charts. This could give the model more context and potentially let it find correlations between charts, producing broader and richer answers.

These answers could do more than explain a single chart: they could give an overview of the state of your business across a whole range of dashboards. The main obstacle to doing this with raw data is the prompt length limit, and data in visual form could be more compact.

What we discovered about ChatGPT while developing our new AI feature

We began developing our integration about a month and a half ago, almost immediately after GPT-4 was released. As soon as we started, we made discoveries about ChatGPT that we thought others might find useful. Some may seem obvious, but I've tried to collect all our findings in a single post:

1. First, I recommend the short free course ChatGPT Prompt Engineering for Developers, taught by Isa Fulford of OpenAI and Andrew Ng of DeepLearning.AI. It's about an hour long and offers insightful advice that we incorporated into our prompts, such as limiting the length of responses and asking for answers in a specific format like markdown or HTML.

2. Because our users are spread across the globe and not everyone is proficient in English, we decided to support answers in different languages. To choose which languages to include, we drew up a list of target languages and then narrowed it down based on ChatGPT's proficiency in each.

I assessed this proficiency by interacting with ChatGPT and reviewing research papers. Interestingly, the model's accuracy seemed to vary by language, with English being the most accurate. The discrepancy affected not only the grammar of the answers but also the model's factual knowledge and its tendency to 'hallucinate.' You can find more about this in this research paper, and below is a self-assessment from ChatGPT:

| Rank | Language | Estimated Total Number of Speakers (Native + Second Language, in billions) | GPT-4 Proficiency Rating |
|---|---|---|---|
| 1 | English | 1.5 | 10 |
| 2 | Mandarin Chinese | 1.3 | 8 |
| 3 | Spanish | 0.57 | 10 |
| 4 | Hindi | 0.60 | 8 |
| 5 | French | 0.53 | 9 |
| 6 | Arabic | 0.42 | 7 |
| 7 | Bengali | 0.26 | 7 |
| 8 | Russian | 0.26 | 8 |
| 9 | Portuguese | 0.25 | 9 |
| 10 | Indonesian | 0.23 | 8 |
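To illustrate the idea of narrowing a language list by proficiency, here is a minimal Python sketch. It is hypothetical: the cutoff value and the function name `shortlist_languages` are assumptions for illustration, not the exact rule we applied, and the ratings are ChatGPT's self-assessment from the table above rather than measured accuracy.

```python
# ChatGPT's self-assessed GPT-4 proficiency ratings, copied from the table above.
SELF_ASSESSED_RATINGS: dict[str, int] = {
    "English": 10,
    "Mandarin Chinese": 8,
    "Spanish": 10,
    "Hindi": 8,
    "French": 9,
    "Arabic": 7,
    "Bengali": 7,
    "Russian": 8,
    "Portuguese": 9,
    "Indonesian": 8,
}


def shortlist_languages(ratings: dict[str, int], min_rating: int) -> list[str]:
    """Return languages rated at or above min_rating, best-rated first.

    min_rating is a hypothetical cutoff; choose it based on your own testing.
    """
    eligible = [lang for lang, score in ratings.items() if score >= min_rating]
    # Sort by rating (highest first), then alphabetically for a stable order.
    return sorted(eligible, key=lambda lang: (-ratings[lang], lang))


if __name__ == "__main__":
    print(shortlist_languages(SELF_ASSESSED_RATINGS, min_rating=8))
```

In this sketch, a cutoff of 8 would exclude Arabic and Bengali. Since the ratings come from the model itself, it's worth confirming any cutoff by reviewing real answers in each language.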

3. The wording of the prompt is crucial: the more specific the question, the more accurate the answer tends to be. Clear specifications, such as the desired language, the maximum length of the answer, and an explanation of the data, help improve the results. Asking the model to role-play a specialist can also steer it toward more detailed answers within a particular knowledge domain.

4. ChatGPT excels at parsing and working with tabular data, including CSV. We chose CSV to pass raw data to the model because it is compact, accurate, and readable. The model can even work out how the data in such a table could be represented with different chart types and explain the data through those visualizations.

5. ChatGPT seems to struggle with large numbers and with fractional numbers that have many decimal places. To work around this, we round numbers to at most 2 to 3 decimal places. This not only improves accuracy but also reduces the number of tokens used.

6. Chaining prompts also appears to be a viable strategy. For instance, you can introduce a data set in two parts: “I will provide a table of data in two parts; below is the first part,” followed by “Here is part two of the data.” You can then ask a question about the provided data.

7. Isa Fulford (OpenAI) suggests wrapping data or dynamic parts of a prompt in delimiters such as quotation marks (“”) or angle brackets (<>) to guard against unwanted instructions in user input. However, this doesn't always work: a command embedded in the data can still sometimes override the original prompt.

8. OpenAI recently changed its privacy policy: data sent through API calls is no longer used to train or retrain its models. This addressed a significant concern raised by our legal team and customers, and we are pleased that OpenAI adapted its approach so quickly.

9. The second-biggest concern, raised by both our legal team and customers, was inadvertently sharing sensitive data with ChatGPT that they didn't want to disclose externally. To minimize such cases and make the process as transparent as possible, we modified our user flow and implemented a two-step activation process for this feature in our product. The first step must be performed by an admin or dashboard editor, while the second requires approval from the end user.
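Here is a minimal Python sketch of the prompt-chaining idea from the list above, generalized from two parts to any number of parts. It's an illustration, not our production code: the function names, the choice to repeat the header in every part, and the rough 4-characters-per-token budget estimate are assumptions. It only builds the list of role/content messages that the Chat Completions API accepts; sending it is left out so the sketch runs offline.

```python
from typing import Dict, List

# Rough rule of thumb: about 4 characters per token for English text.
# This is only an estimate; a real tokenizer (e.g. tiktoken) gives exact counts.
CHARS_PER_TOKEN = 4


def split_rows(header: str, rows: List[str], max_tokens_per_part: int) -> List[List[str]]:
    """Group data rows into parts whose estimated size stays within the budget.

    The header is repeated in every part, so it counts toward each part's budget.
    A single row larger than the budget still gets a part of its own.
    """
    budget = max_tokens_per_part * CHARS_PER_TOKEN
    parts: List[List[str]] = []
    current: List[str] = []
    size = len(header) + 1  # +1 for the newline after the header
    for row in rows:
        row_size = len(row) + 1
        # Close the current part if adding this row would exceed the budget.
        if current and size + row_size > budget:
            parts.append(current)
            current, size = [], len(header) + 1
        current.append(row)
        size += row_size
    if current:
        parts.append(current)
    return parts


def build_chained_messages(header: str, rows: List[str], question: str,
                           max_tokens_per_part: int) -> List[Dict[str, str]]:
    """Introduce the table part by part, then ask the question in a final message."""
    parts = split_rows(header, rows, max_tokens_per_part)
    messages: List[Dict[str, str]] = []
    for i, part in enumerate(parts, start=1):
        table = "\n".join([header, *part])
        if i == 1:
            noun = "part" if len(parts) == 1 else "parts"
            intro = f"I will provide a table of data in {len(parts)} {noun}; below is part 1."
        else:
            intro = f"Here is part {i} of the data."
        messages.append({"role": "user", "content": f"{intro}\n<<{table}>>"})
    # The question comes only after every part has been sent.
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    header = '"Segment";"Click-through rate";"Cost per click";"Actions";"Cost, USD"'
    rows = ["analytics-tools;0.0289;0.812;1750;7993.812",
            "Awareness;0.029;0.318;0;7316.329"]
    # A deliberately tiny budget so that even these two rows are split.
    for message in build_chained_messages(header, rows, "Which segment is most cost-efficient?", 30):
        print(message["content"], end="\n\n")
```

Repeating the header costs a few tokens per part but keeps each part self-describing; if tokens are tight, sending it only in part 1 is a reasonable alternative.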

If you're interested in what we built as a result, check out the video below, or just try it for free without providing any API keys.

As a bonus, here’s an example of one of our prompts:

Pretend you are a business analyst. Provide the top 3 most useful insights and the top 3 recommendations based on the data in the table below in <<>> with max 250 words. That data is used to build a Scatter chart named: “Scatter chart by Campaigns”. The field “Cost per click” is the x-axis, the field “Click-through rate” is the y-axis and point size by “Cost, USD” field. Provide the answer in German in markdown format highlighting the most important part of the text in green.

<<"Segment";"Click-through rate";"Cost per click";"Actions";"Cost, USD"

analytics-tools;0.0289;0.812;1750;7993.812

Awareness;0.029;0.318;0;7316.329 …>>
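The example prompt above combines several of the tips from this post: role play, a word limit, an explicit language and output format, compact semicolon-separated data with rounded numbers, and `<<...>>` delimiters. Below is a minimal Python sketch of how a prompt like it could be assembled from chart data. The helper names (`compact_number`, `clean_cell`, `to_csv`, `build_insight_prompt`) are hypothetical, and the template text is adapted from the example rather than taken from our code.

```python
import csv
import io
from typing import Sequence, Union

Cell = Union[str, int, float]


def compact_number(value: float, max_decimals: int = 3) -> str:
    """Round to at most max_decimals places and drop trailing zeros (fewer tokens)."""
    text = f"{value:.{max_decimals}f}"
    return text.rstrip("0").rstrip(".") if "." in text else text


def clean_cell(cell: Cell, max_decimals: int = 3) -> str:
    """Turn one table cell into prompt-ready text."""
    if isinstance(cell, float):
        return compact_number(cell, max_decimals)
    if isinstance(cell, int):
        return str(cell)
    # Strip angle brackets so a text cell cannot close the <<...>> data block early.
    # This reduces, but does not eliminate, the risk of prompt injection.
    return str(cell).replace("<", "").replace(">", "")


def to_csv(header: Sequence[str], rows: Sequence[Sequence[Cell]], max_decimals: int = 3) -> str:
    """Serialize the chart data as semicolon-separated CSV."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter=";", lineterminator="\n")
    writer.writerow([clean_cell(name) for name in header])
    for row in rows:
        writer.writerow([clean_cell(cell, max_decimals) for cell in row])
    return buf.getvalue().rstrip("\n")


def build_insight_prompt(chart_description: str, language: str, table_csv: str,
                         max_words: int = 250) -> str:
    """Assemble a role-play prompt with explicit length, language, and format limits."""
    return (
        "Pretend you are a business analyst. Provide the top 3 most useful insights "
        "and the top 3 recommendations based on the data in the table below in <<>> "
        f"with max {max_words} words. {chart_description} "
        f"Provide the answer in {language} in markdown format.\n\n"
        f"<<{table_csv}>>"
    )


if __name__ == "__main__":
    header = ["Segment", "Click-through rate", "Cost per click", "Actions", "Cost, USD"]
    rows = [["analytics-tools", 0.0289, 0.812, 1750, 7993.812],
            ["Awareness", 0.029, 0.318, 0, 7316.329]]
    description = ('That data is used to build a Scatter chart named "Scatter chart by Campaigns". '
                   'The field "Cost per click" is the x-axis, the field "Click-through rate" '
                   'is the y-axis, and point size is set by the "Cost, USD" field.')
    print(build_insight_prompt(description, "German", to_csv(header, rows)))
```

With the default of three decimals, `0.0289` becomes `0.029`. Python's `csv` module only quotes fields that need it, so the header comes out unquoted, unlike the hand-written example; both are valid semicolon-separated CSV.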

P.S.

The text was entirely authored by me, with ChatGPT assisting only in proofreading :)

DoubleCloud Visualization. Get insights with ChatGPT!

Don’t waste time reading numerous reports and manually analyzing data — rely on AI-Insights and get fast and accurate conclusions.
