ETL vs data pipelines: What are they and how do they work?

In today’s dynamic business landscape, ETL pipelines and data pipelines are increasingly crucial. As companies integrate more systems, they rely on these pipelines to move data reliably from one system to another. Because businesses depend on data for day-to-day operations, it is essential to understand how these pipelines work and how they differ.

In this article, we examine how these pipelines work and how organizations can use them to get the most value from their data.

What are data pipelines?

In this article, we’ll talk about:

- What are data pipelines?

- What is ETL?

- Why are data pipelines and ETL important in data engineering?

- How ETL and Data Pipelines Relate

- Key Differences Between Data Pipelines and ETL

- When to Use Data Pipelines and ETL

- Advantages and Disadvantages of Data Pipelines

- Advantages and Disadvantages of ETL Pipelines

- Popular Tools and Technologies for Building Data Pipelines and ETL

- How to choose the right tool for your data processing needs

- Final words

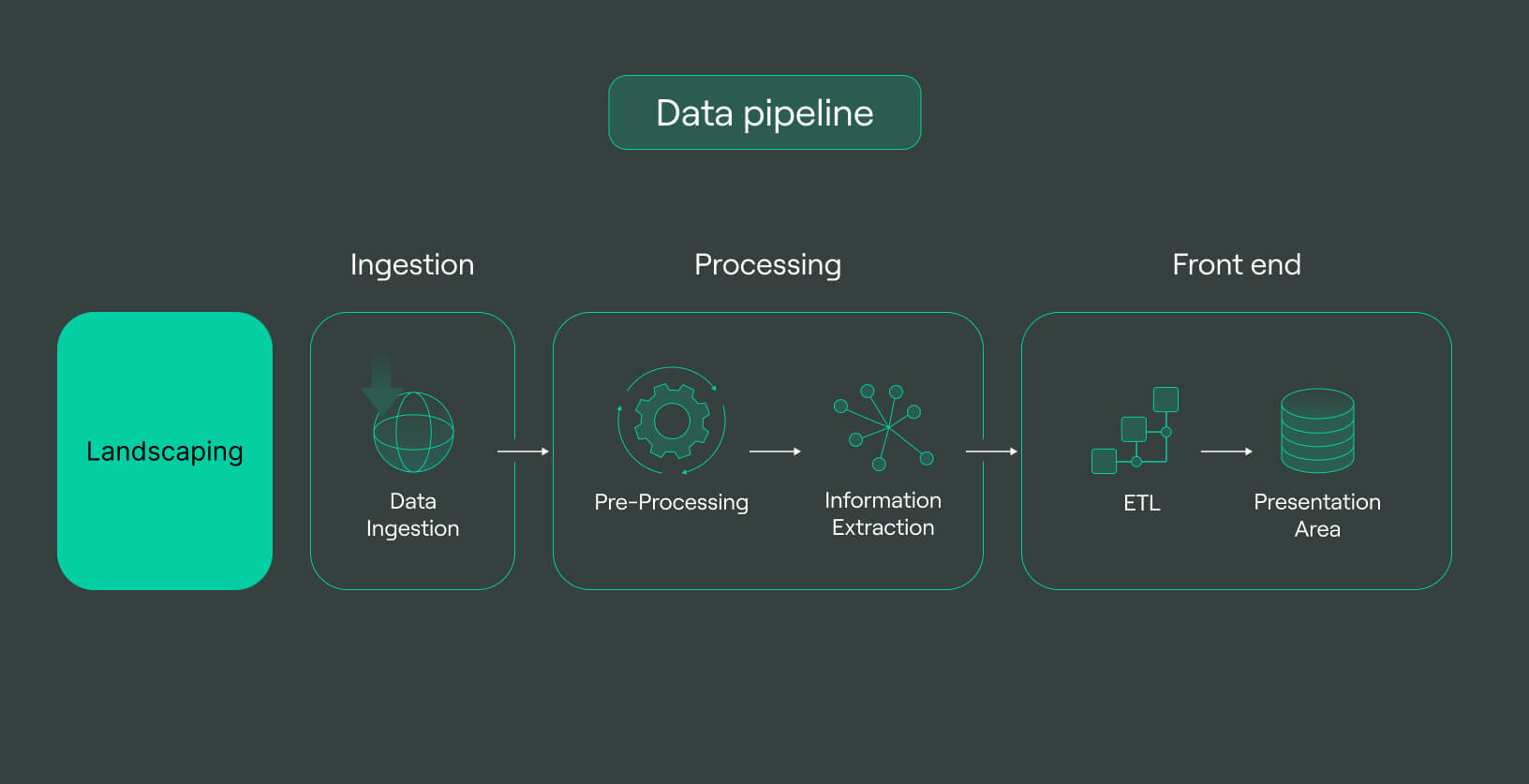

A data pipeline is a set of interconnected processes that transfers data from one system to another. Its primary purpose is to gather data efficiently from diverse sources, convert it into the desired format, and load it into a specified destination.

Data pipelines help improve data quality, which makes subsequent analysis simpler. The core components of a data pipeline are:

- Data sources: The systems where the data originates, ranging from databases to cloud storage services.

- Data processing: The stage that transforms the collected data into the desired format. Tasks such as data cleaning, deduplication, and format conversion are commonly performed here.

- Data destinations: The final repositories where the processed data is loaded, such as databases or data warehouses.
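The three components can be sketched as a chain of Python generators, where each stage consumes the previous stage's output. This is a minimal illustration under assumed conditions, not any tool's API: the function names, the sample records, and the in-memory list standing in for a destination table are all hypothetical.

```python
from typing import Iterable, Iterator


def read_source(rows: Iterable[dict]) -> Iterator[dict]:
    """Data source (hypothetical): in practice a database query or a cloud storage file."""
    for row in rows:
        yield row


def clean(records: Iterable[dict]) -> Iterator[dict]:
    """Data processing: normalize fields and drop duplicate records."""
    seen = set()
    for rec in records:
        email = rec["email"].strip().lower()
        if email in seen:
            continue  # deduplication on the normalized email
        seen.add(email)
        yield {"email": email, "country": rec["country"].upper()}


def write_destination(records: Iterable[dict], table: list) -> int:
    """Data destination: an in-memory list stands in for a database or warehouse table."""
    count = 0
    for rec in records:
        table.append(rec)
        count += 1
    return count


raw = [
    {"email": " Ana@Example.com", "country": "pt"},
    {"email": "ana@example.com ", "country": "PT"},
    {"email": "bo@example.com", "country": "se"},
]
warehouse: list = []
loaded = write_destination(clean(read_source(raw)), warehouse)
print(loaded, warehouse)
```

Because each stage is a generator, records flow through one at a time, so the same stage functions could be fed from a finite batch or from a continuous feed.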

What is ETL?

ETL, short for extract, transform, and load, is a process that facilitates the movement of data from one system to another. ETL pipelines play a vital role in collecting data from various sources, transforming it into the desired format, and loading it into a designated destination.

An ETL pipeline typically involves three key stages:

- Extract: Data is gathered from the source system using methods such as querying databases, transferring files, or calling web services.

- Transform: The extracted data is transformed through steps such as cleaning, deduplication, format conversion, and enrichment with additional data, such as geolocation or demographic information.

- Load: The transformed data is loaded into the target system, which can be a database, data warehouse, or cloud storage service.
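The three stages can be illustrated with Python's standard library alone. This is a minimal sketch under assumed conditions: the CSV content, the column names, and the `orders` table are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: a CSV export from an orders system.
SOURCE_CSV = """order_id,amount,currency,order_date
1,19.99,usd,2024-03-01
2,5.00,EUR,2024-03-02
2,5.00,EUR,2024-03-02
3,,usd,2024-03-03
"""


def extract(text: str) -> list[dict]:
    """Extract: parse the raw CSV into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop incomplete rows, deduplicate, and standardize types and codes."""
    seen = set()
    out = []
    for row in rows:
        if not row["amount"]:
            continue  # cleaning: skip rows missing a required field
        if row["order_id"] in seen:
            continue  # deduplication by order_id
        seen.add(row["order_id"])
        out.append(
            (int(row["order_id"]), float(row["amount"]), row["currency"].upper(), row["order_date"])
        )
    return out


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for the target database or warehouse
load(transform(extract(SOURCE_CSV)), conn)
print(conn.execute("SELECT * FROM orders").fetchall())
```

Keeping each stage in its own function mirrors the extract, transform, and load split, so any one stage can be swapped (for example, a different source format) without touching the others.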


Why are data pipelines and ETL important in data engineering?

Data pipelines and ETL are crucial components of data engineering. They play a significant role in:

- Data processing: They enable data cleaning, transformation, and enrichment, improving data quality and making data more suitable for analysis.

- Data storage: They deliver data into databases, data warehouses, and cloud storage services, where it can be secured and made accessible for analysis.

- Data integration: They combine data from multiple sources in a centralized repository, providing a unified view of the data for analysis and reporting.
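To make the integration point concrete, here is a minimal Python sketch that merges two hypothetical sources, a CRM export and a billing feed that names the customer key differently, into one record per customer. The field names and values are illustrative assumptions; a real pipeline would read from the source systems and write the result to a central repository.

```python
# Hypothetical source 1: customer records from a CRM export.
crm = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Bo"},
]
# Hypothetical source 2: invoice lines from a billing system, keyed as "cust".
billing = [
    {"cust": 1, "total": 120.0},
    {"cust": 1, "total": 30.0},
    {"cust": 3, "total": 99.0},
]


def integrate(crm_rows: list[dict], billing_rows: list[dict]) -> list[dict]:
    """Build one unified record per customer from both sources."""
    unified = {
        r["customer_id"]: {"customer_id": r["customer_id"], "name": r["name"], "billed": 0.0}
        for r in crm_rows
    }
    for inv in billing_rows:
        # Map the billing system's key onto the shared customer_id.
        record = unified.setdefault(
            inv["cust"], {"customer_id": inv["cust"], "name": None, "billed": 0.0}
        )
        record["billed"] += inv["total"]
    return sorted(unified.values(), key=lambda r: r["customer_id"])


for row in integrate(crm, billing):
    print(row)
```

Customer 3 exists only in the billing data. Keeping that record with an empty name, rather than dropping it, makes such gaps visible in the unified view; this is one possible design choice among several.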


How ETL and Data Pipelines Relate

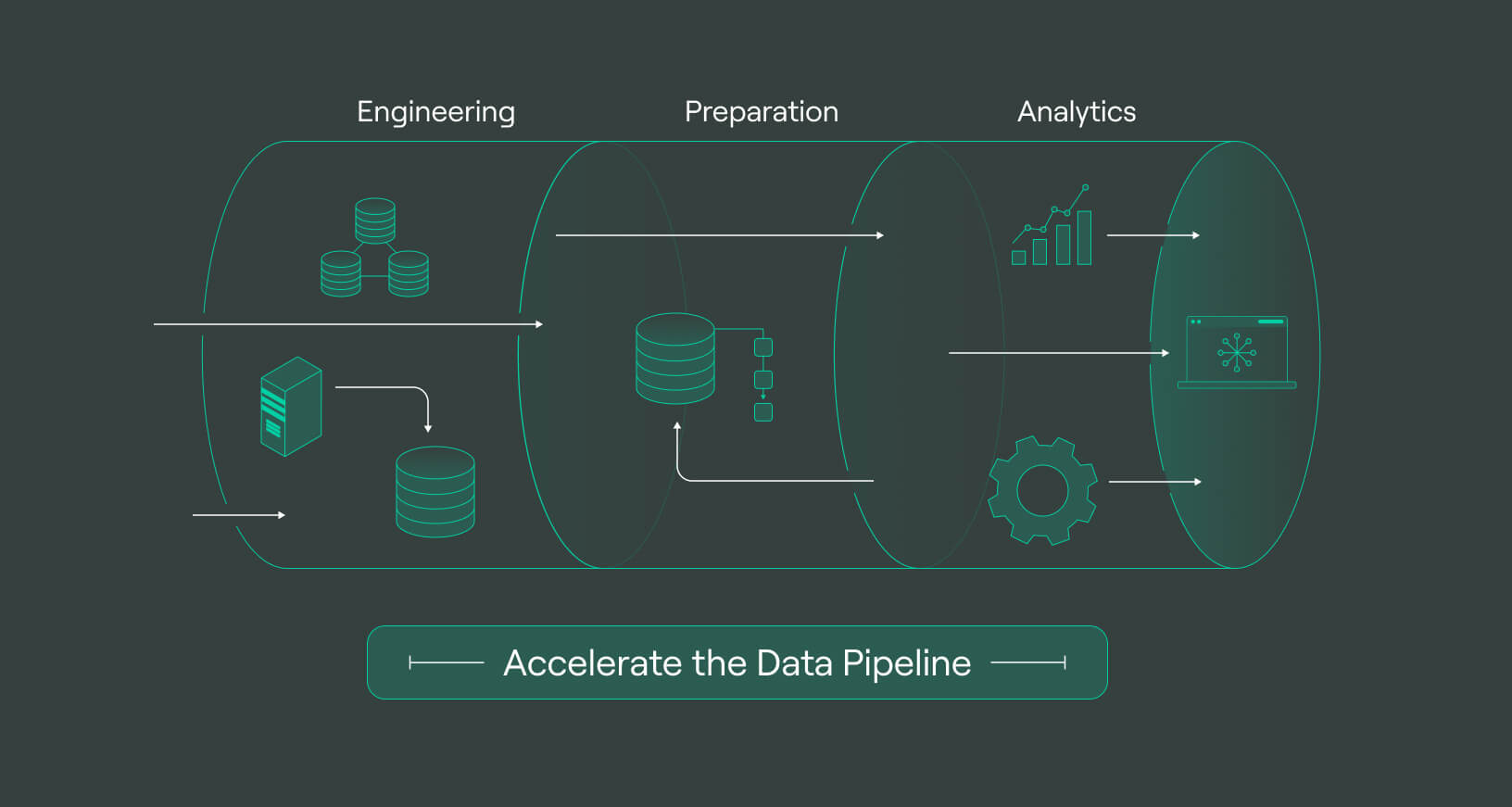

ETL and data pipelines work together to achieve data analytics goals. In a typical setup, ETL extracts, transforms, and loads data into a data warehouse, while data pipelines move data from the warehouse to applications such as business intelligence (BI) or data visualization tools.

The best approach to data movement depends on the organization’s specific requirements. If batch processing is sufficient, ETL is a suitable choice.

On the other hand, if real-time processing is necessary, data pipelines offer a better solution. For organizations with diverse data movement needs, combining the two can yield the best results.

Key Differences Between Data Pipelines and ETL

Data movement and transformation

Data movement and data transformation are two of the most important aspects of data engineering, but they differ in focus and in the steps involved. Data movement transfers data from one location to another, while data transformation converts data from one format or structure to another.

In data pipelines:

- Data Extraction: Data is extracted from sources such as databases, APIs, files, or streaming platforms.

- Transportation: The extracted data is moved to the desired destination.

- Loading: The data is loaded into the target system for further processing and analysis.
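A pipeline that only moves data can be as simple as reading from a source and writing to a target in batches. This Python sketch copies a hypothetical `events` table between two in-memory SQLite databases, which stand in for the source and target systems; the table name, schema, and batch size are illustrative assumptions.

```python
import sqlite3


def move_rows(src: sqlite3.Connection, dst: sqlite3.Connection, batch_size: int = 2) -> int:
    """Copy every row of `events` from src to dst in fixed-size batches, unchanged."""
    dst.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT)")
    cursor = src.execute("SELECT id, payload FROM events ORDER BY id")  # extraction
    moved = 0
    while True:
        batch = cursor.fetchmany(batch_size)  # transport one batch at a time
        if not batch:
            break
        dst.executemany("INSERT INTO events VALUES (?, ?)", batch)  # loading
        moved += len(batch)
    dst.commit()
    return moved


# Hypothetical source system holding a few raw events.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
source.executemany("INSERT INTO events VALUES (?, ?)", [(i, f"event-{i}") for i in range(1, 6)])

target = sqlite3.connect(":memory:")
moved = move_rows(source, target)
print(moved)  # 5
print(target.execute("SELECT COUNT(*) FROM events").fetchone())  # (5,)
```

Reading with `fetchmany` keeps memory use bounded regardless of table size, and the payload is copied as-is, in contrast with the ETL steps listed next, which clean and reshape the data.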

In ETL processes:

- Data Extraction: Data is extracted from multiple sources, as in data pipelines.

- Data Cleaning: The extracted data is cleaned to remove inconsistencies, errors, duplicates, and irrelevant elements.

- Data Transformation: The cleaned data is transformed into a consistent, standardized format suitable for analysis.

- Data Loading: The transformed data is loaded into the target system, where it can be accessed for reporting and analysis.

Data processing and storage

Data processing and storage in data pipelines and ETL differ in their approach, focus, and associated benefits and drawbacks. Let’s explore each of them:

Data processing

- Data pipelines can process data in real time or near real time, enabling quicker insights and decision-making. However, real-time processing can be more complex and costly to implement.

- ETL processes data in batches, which is less complex and less costly to implement than real-time processing. However, insights and decisions must wait until each batch has been processed.

Data storage

- Data pipelines often deliver data to data lakes or data warehouses. Data lakes handle large volumes of data, while data warehouses are optimized for analysis. However, both can be challenging to manage and secure.

- ETL processes commonly load data into relational databases, which are easier to manage and secure than data lakes or warehouses but can handle comparatively less data.

Integration with modern data architectures

Data pipelines and ETL are both essential components of modern data architectures, which commonly build on:

- Cloud computing, which is widely adopted for data pipelines and ETL because it offers scalability, flexibility, and cost-efficiency.

- Big data platforms, which are designed for large data volumes and provide storage, processing, and analysis features relevant to data pipelines and ETL.

Data pipelines and ETL can be integrated with modern data architectures in several ways:

- Cloud-based data pipelines are favored by businesses moving to the cloud, providing scalability, flexibility, and cost-effectiveness.

- Hybrid data pipelines combine cloud-based and on-premises components, leveraging the strengths of both approaches.

- ETL tools designed for big data platforms can process large data volumes, manage complex transformations, and integrate with various big data platforms.

Flexibility and scalability

Data pipelines and ETL serve different purposes in data processing. Data pipelines are flexible, supporting a variety of data sources and processing methods, including batch and real-time processing. They are also highly scalable, especially when built on cloud-based technologies that can handle large data volumes.

ETL, by contrast, is less flexible: it is primarily used for batch processing into a single, predefined target. Traditional ETL has also relied on on-premises technologies, which limits its scalability compared with data pipelines.

Several factors influence the flexibility and scalability of data pipelines and ETL:

- Data type: Data pipelines excel at processing unstructured data such as text and images, while ETL is better suited to structured data organized in tables and columns.

- Data volume: Data pipelines are designed for large data volumes, while ETL is more appropriate for smaller datasets.

- Data processing speed: Data pipelines are ideal for real-time processing, while ETL is better suited to batch processing.

Real-time vs batch processing

Real-time processing and batch processing are two approaches used in data pipelines and ETL, and they differ in terms of data processing speed, data latency, data volume, data complexity, and data accuracy.

- Real-time processing handles data as soon as it is generated. It is used for time-sensitive applications such as fraud detection or risk assessment. Both data pipelines and ETL tools can support real-time processing across a range of data volumes and complexities, and accuracy requirements vary by application. Real-time processing has low data latency, typically measured in milliseconds.

- Batch processing handles data in scheduled batches and is common in applications such as data warehousing and reporting. With data pipelines or ETL tools, it can handle large volumes and complex data structures. It can provide higher accuracy than real-time processing, because each run works on a complete set of data, but it introduces longer data latency, ranging from seconds to minutes or even hours.
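The difference can be sketched in Python with a simple, hypothetical fraud rule that flags any transaction above a fixed threshold. The streaming function reacts to each event as it arrives, while the batch function runs only once the whole set is available, which is also why it can compute aggregates over complete data. The threshold, record shape, and function names are illustrative assumptions, not a real fraud-detection method.

```python
from typing import Iterable, Iterator

# Hypothetical card transactions: (transaction_id, amount)
transactions = [("t1", 40.0), ("t2", 2500.0), ("t3", 12.5), ("t4", 3100.0)]
THRESHOLD = 1000.0  # illustrative rule: flag unusually large amounts


def stream_alerts(events: Iterable[tuple]) -> Iterator[str]:
    """Real-time style: evaluate each event the moment it arrives."""
    for tx_id, amount in events:
        if amount > THRESHOLD:
            yield tx_id  # the alert is raised before later events exist


def batch_report(events: list[tuple]) -> dict:
    """Batch style: wait for the full batch, then compute over all of it."""
    flagged = [tx_id for tx_id, amount in events if amount > THRESHOLD]
    total = sum(amount for _, amount in events)
    return {"count": len(events), "total": total, "flagged": flagged}


for alert in stream_alerts(transactions):
    print("alert:", alert)
print(batch_report(transactions))
```

Both functions apply the same rule; what differs is when results become available, which is the latency trade-off described above.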

Organizations can select the approach that fits their specific needs. For tasks that demand real-time processing, such as fraud detection, they can use real-time data pipelines or ETL tools.

Conversely, if immediate processing is not essential, batch processing in data pipelines or ETL can be used for tasks like data warehouse loading or report generation.

When to Use Data Pipelines and ETL

Data pipelines and ETL both transfer data between systems, but their scope differs. Data pipelines cover a broader range of applications, including ETL, data integration, and real-time data streaming, whereas ETL focuses specifically on extracting data from a source, transforming it, and loading it into a target system.

The choice between data pipelines and ETL depends on the organization’s specific requirements. Here, we explore some of the use cases.

Use cases for data pipelines

Here are some scenarios where data pipelines are commonly applied:

- Data integration: Data pipelines consolidate data from diverse sources into a single data warehouse or data mart. This consolidation improves data quality and consistency and makes data easier to analyze.

- Data warehousing: Data pipelines load data into data warehouses, improving query performance and scalability and simplifying analysis.

- Real-time analytics: Data pipelines process data in real time, supporting applications such as fraud detection, risk management, and personalized customer experiences.

- Machine learning: Data pipelines supply data to machine learning models, helping organizations improve decision-making and automate various tasks.

Use cases for ETL

ETL is a versatile process whose use cases benefit organizations in several ways:

- Data cleaning: ETL cleanses data by eliminating duplicates, correcting errors, and filling in missing information, which improves data accuracy and reliability.

- Data transformation: ETL converts data into different formats, restructures it, or adds new elements, which lets organizations standardize data and prepare it for analysis.

- Data enrichment: ETL can enhance data with additional information, such as geolocation or demographic data. Enriched data gives organizations deeper insights and a more comprehensive understanding of their target audience.
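These three use cases often run back to back. This Python sketch applies them to a few hypothetical customer records; the field names, the date format, the `"unknown"` placeholder for missing values, and the postal-code-to-region lookup table (standing in for real reference data such as a geolocation service) are all illustrative assumptions.

```python
# Hypothetical raw customer records with a duplicate, formatting errors, and a gap.
raw = [
    {"id": "1", "name": "  ana silva ", "zip": "1000-001", "signup": "2024/03/01"},
    {"id": "1", "name": "Ana Silva", "zip": "1000-001", "signup": "2024/03/01"},
    {"id": "2", "name": "BO LIND", "zip": "", "signup": "2024/03/05"},
]
# Hypothetical reference data for enrichment: postal-code prefix -> region.
REGION_BY_ZIP_PREFIX = {"1000": "Lisbon"}


def clean(rows: list[dict]) -> list[dict]:
    """Cleaning: remove duplicates, fix formatting errors, fill missing values."""
    seen, out = set(), []
    for r in rows:
        if r["id"] in seen:
            continue  # keep the first record for each id
        seen.add(r["id"])
        name = " ".join(r["name"].split()).title()  # collapse whitespace, fix casing
        out.append({**r, "name": name, "zip": r["zip"] or "unknown"})
    return out


def transform(rows: list[dict]) -> list[dict]:
    """Transformation: convert types and rewrite dates as YYYY-MM-DD."""
    return [{**r, "id": int(r["id"]), "signup": r["signup"].replace("/", "-")} for r in rows]


def enrich(rows: list[dict]) -> list[dict]:
    """Enrichment: add a region looked up from reference data."""
    return [{**r, "region": REGION_BY_ZIP_PREFIX.get(r["zip"][:4], "unknown")} for r in rows]


for record in enrich(transform(clean(raw))):
    print(record)
```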

Advantages and Disadvantages of Data Pipelines

Advantages of Data Pipelines:

- Real-time data processing: Data pipelines let organizations process data in real time, which benefits applications such as fraud detection, risk management, and customer personalization.

- Scalability: Data pipelines can handle large volumes of data, making them suitable for organizations that are growing rapidly or dealing with substantial data loads.

- Cost reduction: Automating the collection, cleaning, and preparation of data for analysis lowers costs, frees IT resources for other tasks, and improves data quality and consistency.

- Improved data security: Data pipelines can improve data security by encrypting data in transit and at rest, protecting it against unauthorized access and supporting compliance with data privacy regulations.

Disadvantages of Data Pipelines:

- Increased complexity: Designing, building, and maintaining data pipelines can be complex and requires specialized expertise and resources, which may be a challenge for organizations without in-house capabilities.

- Data latency: Every pipeline introduces some latency between when data is produced and when it is available for analysis. This may be a problem for applications with strict real-time requirements.

- Data loss risks: Data pipelines can be susceptible to data loss caused by human error, system failure, or malicious attacks, which can significantly impact an organization’s operations.

Advantages and Disadvantages of ETL Pipelines

Advantages of ETL Pipelines:

- Ease of use: ETL pipelines are known for their simplicity and user-friendliness. They are built on a well-established, widely used process, which makes them relatively easy to set up and operate.

- Cost-effectiveness: ETL pipelines are typically more cost-effective than custom development. They use off-the-shelf software that can be configured to meet an organization’s specific needs, saving development time and costs.

- Scalability: ETL pipelines can scale to accommodate growing data volumes. Their modular architecture allows components to be added or removed as data requirements evolve.

Disadvantages of ETL Pipelines:

- Data latency: ETL pipelines introduce some data latency, which is the time it takes to extract data from source systems, transform it, and load it into target systems. Applications that require immediate data processing may be affected by this delay.

- Data loss risks: ETL pipelines can be vulnerable to data loss. Human errors, system failures, or malicious attacks can result in the loss of valuable data.

- Complexity: Designing and implementing ETL pipelines can be complex, because it demands a deep understanding of data sources, transformations, and target systems.

Popular Tools and Technologies for Building Data Pipelines and ETL

Many tools and technologies are available for building data pipelines and ETL. Some of the most popular include:

Data pipeline tools: Apache NiFi, Apache Kafka, AWS Glue, and more

Apache NiFi is a flexible and scalable open-source platform for creating data pipelines. It offers real-time streaming, batch processing, and complex event processing capabilities, making it suitable for handling large data volumes. However, one downside is that it can be complex to learn and use.

Apache Kafka is a scalable platform for real-time data collection and processing. It is well suited to building robust data pipelines that handle high data volumes, but it has a steep learning curve.

AWS Glue is a serverless data integration service from Amazon. It simplifies building and managing data pipelines with a user-friendly interface and pre-built connectors to popular data sources. However, its feature set is more limited than that of some alternatives.

Google Cloud Dataflow is a serverless, fully managed service for running Apache Beam pipelines, which simplifies creating and executing data pipelines. It provides a user-friendly interface and pre-built connectors, and it can be used through both a GUI and a CLI. However, its feature set is limited.

ETL tools: Apache Airflow, Talend, Informatica, and more

Apache Airflow is a scalable and reliable workflow management system used for scheduling and orchestrating complex ETL pipelines. It’s favored by organizations dealing with large data volumes, but setting it up and managing it can be complex.
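Orchestrators such as Airflow model a workflow as a directed acyclic graph (DAG) of tasks and run each task only after its upstream tasks have finished. The sketch below illustrates only that ordering idea with Python's standard `graphlib` module (Python 3.9+); it is not Airflow's API, and the task names and functions are hypothetical. Airflow adds scheduling, retries, and monitoring on top of this concept.

```python
from graphlib import TopologicalSorter


# Hypothetical ETL tasks; each function stands in for real work such as a query.
def extract_orders() -> str:
    return "orders extracted"


def extract_customers() -> str:
    return "customers extracted"


def transform_join() -> str:
    return "orders joined with customers"


def load_warehouse() -> str:
    return "loaded into warehouse"


TASKS = {
    "extract_orders": extract_orders,
    "extract_customers": extract_customers,
    "transform_join": transform_join,
    "load_warehouse": load_warehouse,
}
# Each task maps to the set of upstream tasks it depends on.
DEPENDENCIES = {
    "transform_join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_join"},
}


def run(tasks: dict, dependencies: dict) -> list[str]:
    """Run every task in an order that respects its dependencies, like one DAG run."""
    graph = {name: dependencies.get(name, set()) for name in tasks}
    order = []
    for name in TopologicalSorter(graph).static_order():
        print(f"{name}: {tasks[name]()}")
        order.append(name)
    return order


executed = run(TASKS, DEPENDENCIES)
```

The two extract tasks have no dependency on each other, so their relative order is not fixed; a real orchestrator could run them in parallel.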

Talend is an open-source ETL pipeline tool with a user-friendly graphical interface for building and managing data pipelines. It’s popular for integrating data from diverse sources, but its features are limited compared to Apache Airflow, and it’s not as scalable.

Informatica is a commercial ETL pipeline tool with extensive features for building and managing data pipelines. It’s highly regarded for integrating data from various sources and providing high performance and scalability. However, it has a steep learning curve and is expensive to use.

How to choose the right tool for your data processing needs

When selecting a data processing tool, several factors should be taken into account:

- Data volume: The amount of data you handle determines how capable the tool must be. Simple tools may suffice for small datasets, while large volumes call for more robust tools.

- Data complexity: The nature of your data also influences tool selection. Basic tools work well for straightforward data, but complex data types and relationships require more sophisticated solutions.

- Data velocity: The speed at which data must be processed affects tool choice. Real-time processing demands tools that can handle data as it arrives, while more cost-effective options can handle batch processing.

- Features: Compare the specific features each tool offers. Some tools cater to particular industries or applications, so identifying your needs will help you choose a tool with relevant features.

- Cost: Budget is an important consideration. Some tools are open-source and free, while others are commercial and require a financial investment. Choose a tool that fits your budget.

Final words

The choice between data pipelines and ETL depends on the organization’s specific requirements. Data pipelines suit organizations that need real-time or near-real-time data processing or that integrate data from diverse sources.

On the other hand, ETL is well-suited for organizations dealing with large data volumes or requiring data transformation into specific formats.

In certain cases, organizations may opt for a combination of both data pipelines and ETL. For instance, they might employ data pipelines for real-time data processing and utilize ETL for batch processing.

With numerous tools available, careful evaluation will lead you to the most suitable choice. Once you have identified your data processing needs, you can narrow down your options. Then conduct thorough research, read reviews, talk to other users, and try out demos to make an informed decision.

Frequently asked questions (FAQ)

What is the difference between ETL and data pipeline?

ETL and data pipelines are both essential components of data analytics. While they share the common goal of moving data from one system to another, they differ in their approach and purpose.

Why are data pipelines and ETL important in modern data engineering?

What are some popular tools and technologies for building data pipelines and ETL processes?
