Kafka real-time analytics: Unleashing the power of real-time data insights

In today’s data-driven world, real-time data analytics has become central to how businesses extract value from their data, because it enables timely, well-informed decisions.

In this article, we explore why Kafka is a strong foundation for streaming data analytics and how it enables real-time processing.

In this article, we’ll talk about:

- What is Kafka?

- Is Kafka good for real-time data?

- How does Kafka work?

- Real-time streaming architecture using Kafka

- Advantages of using Kafka for real-time analytics

- How is Kafka used for real-time analytics?

- Building a real-time analytics pipeline with Kafka

- How can DoubleCloud help leverage Kafka?

- Conclusion

What is Kafka?

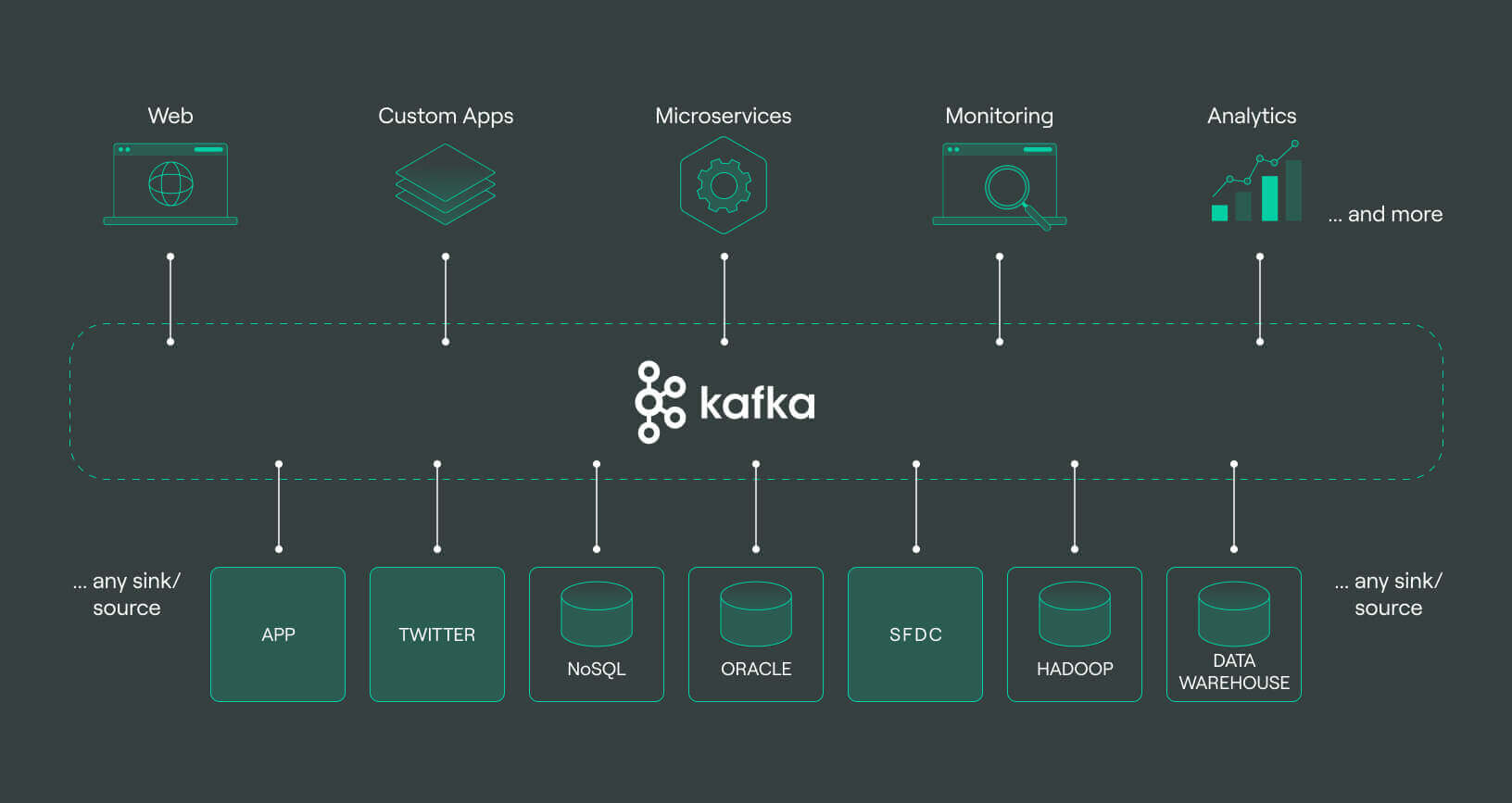

Kafka, an open-source distributed streaming platform developed by the Apache Software Foundation, has emerged as a powerful solution for efficiently and reliably handling large-scale, real-time data streams.

At its core, Kafka functions as a highly scalable and fault-tolerant publish-subscribe messaging system. This architecture allows producers to publish streams of records consumed by multiple subscribers in a distributed manner. This decoupling mechanism facilitates the efficient processing of high-volume data streams.
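The decoupling described above can be illustrated with a small in-memory model. This is only a sketch of the idea, not Kafka's client API: `ToyTopic`, `publish`, and `poll` are hypothetical names, and a real topic is partitioned and stored on brokers. The point it shows is that each subscriber tracks its own read position, so subscribers do not affect one another or the producer.

```python
from collections import defaultdict


class ToyTopic:
    """In-memory stand-in for a single-partition topic (not a Kafka client)."""

    def __init__(self):
        self.log = []                    # append-only list of records
        self.offsets = defaultdict(int)  # next offset to read, per subscriber

    def publish(self, record):
        self.log.append(record)
        return len(self.log) - 1         # offset assigned to the record

    def poll(self, subscriber):
        """Return the records this subscriber has not seen yet."""
        start = self.offsets[subscriber]
        batch = self.log[start:]
        self.offsets[subscriber] = len(self.log)
        return batch


topic = ToyTopic()
topic.publish({"page": "/home"})
topic.publish({"page": "/pricing"})

# Two subscribers read the same records independently of each other.
dashboard_batch = topic.poll("dashboard")
billing_batch = topic.poll("billing")

topic.publish({"page": "/docs"})
dashboard_next = topic.poll("dashboard")  # only the newly published record
```

Because reading does not remove records from the log, adding another subscriber later requires no change on the producer side.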

Here are the key applications and benefits of Kafka:

1. Real-time data streaming: Kafka excels at ingesting and processing high-throughput data streams in real time. Applications can respond to data as it arrives, which enables real-time analytics, monitoring, and event-driven architectures.

2. Data integration: Kafka serves as a central hub for data integration, connecting disparate systems and exchanging data between applications and services. It provides reliable, scalable data pipelines that move data from diverse sources to target systems.

3. Event sourcing: Kafka’s durable, ordered, log-based structure makes it a good fit for event sourcing architectures. By capturing and storing every event within a system, developers can reconstruct past states and build event-driven systems with event replay.

4. Commit logs: Kafka can serve as a distributed commit log, ensuring the reliability and durability of data. This is valuable where data consistency and fault tolerance are critical, such as in financial systems or transactional databases.

5. Stream processing: Kafka integrates with stream processing frameworks such as Kafka Streams, Apache Flink, and Apache Spark, so developers can run complex processing on streaming data in a scalable and fault-tolerant manner.
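To make the event sourcing item more concrete, here is a minimal sketch of rebuilding state by replaying an ordered log. The account events and the `apply` and `replay` helpers are invented for illustration; in a real system the events would be read from a Kafka topic rather than a Python list.

```python
def apply(balance, event):
    """Fold one event into the current account balance."""
    if event["type"] == "deposited":
        return balance + event["amount"]
    if event["type"] == "withdrawn":
        return balance - event["amount"]
    return balance  # unknown event types are ignored


def replay(events, upto=None):
    """Rebuild state by replaying the log from the start, optionally up to an offset."""
    balance = 0
    for event in events[:upto]:
        balance = apply(balance, event)
    return balance


event_log = [
    {"type": "deposited", "amount": 100},
    {"type": "withdrawn", "amount": 30},
    {"type": "deposited", "amount": 5},
]

current_balance = replay(event_log)       # state after all events
balance_after_two = replay(event_log, 2)  # past state after the first two events
```

Since the log is never rewritten, any earlier state can be recovered by stopping the replay at the corresponding offset.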

Is Kafka good for real-time data?

Kafka is widely recognized as an excellent choice for real-time data analytics due to its unique features:

1. Low latency: Kafka adds minimal delay when processing high-throughput data streams, so insights and actions can follow the data closely.

2. Scalability: With its distributed architecture, Kafka scales horizontally to handle the large data volumes and growing workloads associated with real-time analytics.

3. Fault tolerance: Kafka replicates data across brokers, so it keeps operating when individual nodes fail and preserves data availability and consistency for real-time analytics.

4. Stream processing integration: Integration with popular stream processing frameworks such as Kafka Streams, Apache Flink, and Apache Spark enables complex computations and real-time analytics on streaming data.

5. Exactly-once semantics: Kafka supports exactly-once processing through idempotent producers and transactions, which protects data integrity by preventing duplicates and inconsistencies in real-time data analytics.

When considering Kafka for real-time analytics, it is essential to evaluate specific requirements, including data complexity, stream size, and desired latency. Nevertheless, Kafka’s architecture, scalability, fault tolerance, and integration capabilities make it a robust and widely used solution for real-time data analytics.
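One building block behind exactly-once processing is idempotent writes: a retried send must not be stored twice. The sketch below models that idea with a sequence number per producer. It is a simplified illustration under stated assumptions, not Kafka's actual protocol; `ToyPartition` and its fields are hypothetical names.

```python
class ToyPartition:
    """Append-only log that drops retried sends it has already stored."""

    def __init__(self):
        self.log = []
        self.last_seq = {}  # producer id -> highest sequence number stored

    def append(self, producer_id, seq, value):
        if seq <= self.last_seq.get(producer_id, -1):
            return False    # duplicate caused by a retry: ignore it
        self.log.append(value)
        self.last_seq[producer_id] = seq
        return True


partition = ToyPartition()
partition.append("producer-1", 0, "order-created")
partition.append("producer-1", 1, "order-paid")

# Suppose the acknowledgement for seq 1 was lost, so the producer retries.
retry_stored = partition.append("producer-1", 1, "order-paid")
```

The retry is acknowledged as already handled, and the log still contains each record once.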

How does Kafka work?

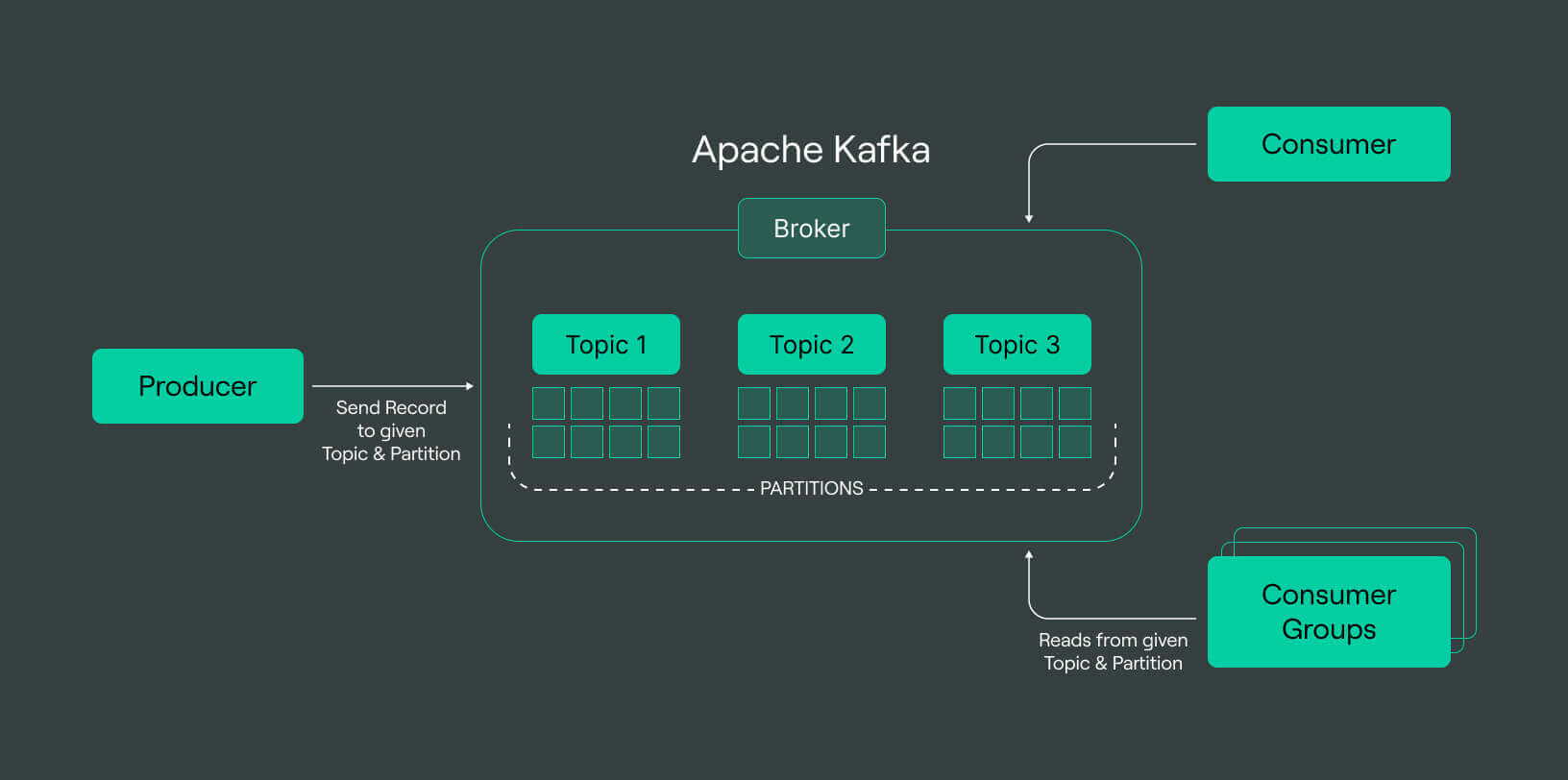

Kafka operates as a cluster-based system that stores the messages generated by producers. Each message is assigned to a topic, indexed by its offset, and stored with a timestamp. Kafka excels at processing real-time and streaming data, and it works well alongside Apache Storm, Apache HBase, and Apache Spark. Kafka exposes its core capabilities through four primary APIs:

1. Producer API: Allows applications to publish streams of data to one or more Kafka topics.

2. Consumer API: Allows applications to subscribe to one or more topics and process the stream of records they receive.

3. Streams API: Transforms input streams into output streams, so an application can consume from some topics and produce derived results to others.

4. Connector API: Allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems.

Apache Kafka’s comprehensive API ecosystem and its ability to handle real-time and streaming data make it an invaluable tool for managing large-scale data streams and facilitating efficient data processing.
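The way messages are organized by topic, offset, and timestamp can be sketched with a toy partitioned topic. This is an illustrative model only: `ToyPartitionedTopic` is a hypothetical name, and `zlib.crc32` is a stand-in hash chosen for this example (Kafka's default partitioner for keyed records uses murmur2). What it shows is that records with the same key land in the same partition and receive increasing offsets there.

```python
import time
import zlib


class ToyPartitionedTopic:
    """Toy model of a topic split into partitions (not a Kafka client)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Stand-in hash for illustration; Kafka uses a different function.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def send(self, key, value):
        p = self.partition_for(key)
        offset = len(self.partitions[p])  # position within this partition
        self.partitions[p].append(
            {"offset": offset, "timestamp": time.time(), "key": key, "value": value}
        )
        return p, offset


topic = ToyPartitionedTopic(num_partitions=3)
first = topic.send("user-42", "login")
second = topic.send("user-42", "click")
```

Ordering is therefore preserved per key, which is what lets a consumer process one user's events in the order they were produced.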

Real-time streaming architecture using Kafka

A data producer, such as a web server, can broadcast data to Kafka. Kafka divides data into topics, and the producer can disseminate data on a specific topic. The real-time streaming architecture involves several key steps:

- Data publication: Producers, such as web hosts or servers, publish data to Kafka, which organizes it into topics.

- Data consumption: Consumers or Spark Streaming components listen to specific topics in Kafka to reliably consume the data in real time.

- Processing with Spark Streaming: Spark Streaming receives the consumed data and performs real-time processing and transformations.

- Storage: The processed data can be stored in different storage systems, such as MySQL or Cassandra, based on specific needs.

- Real-time data pipeline: Kafka acts as the backbone for efficiently processing and transmitting high-velocity, high-volume data through a real-time data pipeline.

- Subscription and dashboard: Subscribed data from Kafka is pushed to a dashboard using APIs, enabling users to visualize and interact with the real-time data.

In summary, this real-time streaming architecture harnesses the strengths of Kafka analytics, including data publication, consumption, and processing, in conjunction with Spark Streaming’s capabilities, to create a robust pipeline capable of managing high-velocity data and delivering real-time insights through an interactive dashboard.
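The flow from publication through processing, storage, and dashboard can be sketched end to end with the standard library. Everything here is a stand-in chosen for illustration: a Python list plays the role of the Kafka topic, a plain function replaces Spark Streaming, and an in-memory SQLite database replaces MySQL or Cassandra. The record shape and table name are assumptions.

```python
import sqlite3
from collections import Counter

# Records as a web server might publish them to a topic (hypothetical shape).
page_views = [
    {"page": "/home", "status": 200},
    {"page": "/pricing", "status": 200},
    {"page": "/home", "status": 500},
    {"page": "/home", "status": 200},
]


def process(records):
    """Processing step: count successful views per page."""
    return Counter(r["page"] for r in records if r["status"] == 200)


def store(counts, conn):
    """Storage step: write the aggregated result to a table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_counts (page TEXT PRIMARY KEY, views INTEGER)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO page_counts (page, views) VALUES (?, ?)",
        counts.items(),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
store(process(page_views), conn)

# Dashboard step: read the stored result back for display.
rows = conn.execute("SELECT page, views FROM page_counts ORDER BY page").fetchall()
```

In a real deployment each stage runs continuously and independently, with Kafka buffering records between the producer and the processing layer.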

Advantages of using Kafka for real-time analytics

Using Kafka for real-time analytics offers several advantages:

- Kafka is a reliable and scalable system that manages massive amounts of data.

- Unlike traditional message brokers such as RabbitMQ, or systems built on the JMS and AMQP standards, Kafka is designed as a distributed publish-subscribe system, which suits it to high-volume streaming workloads.

- Kafka excels at handling high-velocity real-time data.

- The message queue in Kafka is persistent: transmitted data is retained until the configured retention period expires.

- Kafka offers very low end-to-end latency, even when processing large data volumes.

- As a result, the time between a record being produced and being fetched by a consumer is short.
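The retention behaviour mentioned above can be sketched as a time-based cutoff. This is a simplification for illustration: the `prune` helper is hypothetical and filters individual records, whereas Kafka removes expired data in whole log segments. The timestamps are arbitrary example values.

```python
def prune(log, now, retention_seconds):
    """Keep only records whose timestamp is within the retention period."""
    cutoff = now - retention_seconds
    return [record for record in log if record["timestamp"] >= cutoff]


log = [
    {"timestamp": 1_000, "value": "a"},
    {"timestamp": 1_500, "value": "b"},
    {"timestamp": 1_900, "value": "c"},
]

# With a 600-second retention period at time 2_000, the cutoff is 1_400.
retained = prune(log, now=2_000, retention_seconds=600)
```

Note that retention depends on age, not on whether a record has been read, so consumers can re-read data for as long as it is retained.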

How is Kafka used for real-time analytics?

Kafka is utilized for real-time analytics in various ways, including:

Data ingestion

Kafka acts as a dependable and flexible platform that efficiently handles the ingestion of massive amounts of real-time data from diverse sources into data pipelines, facilitating streamlined analytics processing.

Data storage

Kafka functions as a robust and decentralized storage system that ensures the long-term durability of real-time data, enabling efficient retrieval for further analysis.

Real-time stream processing

Kafka seamlessly integrates with stream processing frameworks such as Kafka-Streams, facilitating real-time analytics, computations, and transformations on streaming data easily and efficiently.
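A common stream processing computation is a windowed aggregation. The sketch below counts events per key in fixed, non-overlapping (tumbling) time windows. It is plain Python over a list, written only to show the technique; the event shape and the `tumbling_window_counts` helper are assumptions, and a framework such as Kafka Streams would additionally handle state storage, late data, and fault tolerance.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows."""
    counts = defaultdict(int)
    for event in events:
        # Align the timestamp down to the start of its window.
        window_start = event["ts"] - event["ts"] % window_seconds
        counts[(window_start, event["key"])] += 1
    return dict(counts)


events = [
    {"ts": 3, "key": "checkout"},
    {"ts": 42, "key": "checkout"},
    {"ts": 59, "key": "search"},
    {"ts": 61, "key": "checkout"},
]

counts = tumbling_window_counts(events, window_seconds=60)
```

Here the first three events fall into the window starting at 0 and the last one into the window starting at 60.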

Event streaming

Kafka’s publish-subscribe model enables event streaming, allowing real-time capture, processing, and analysis of events to extract valuable insights and trigger timely actions.

Real-time monitoring

Kafka’s capability to handle high-volume data streams enables real-time monitoring of diverse metrics and performance indicators. This empowers organizations to stay updated with live data and make informed decisions based on real-time insights.
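As a rough illustration of monitoring on live data, the following sketch raises a flag when the rolling average of recent readings exceeds a threshold. The `RollingAverageAlert` class, the latency values, and the threshold are all invented for the example; in practice the readings would arrive from a Kafka topic.

```python
from collections import deque


class RollingAverageAlert:
    """Flag when the average of the last `size` readings exceeds a threshold."""

    def __init__(self, size, threshold):
        self.window = deque(maxlen=size)  # oldest reading drops out automatically
        self.threshold = threshold

    def observe(self, value):
        self.window.append(value)
        average = sum(self.window) / len(self.window)
        return average > self.threshold


alert = RollingAverageAlert(size=3, threshold=200)
latencies_ms = [120, 150, 400, 380, 90]
alerts = [alert.observe(value) for value in latencies_ms]
```

Averaging over a small window smooths out single spikes while still reacting within a few readings.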

Messaging

Kafka’s messaging capabilities support real-time data exchange and communication among the systems and components within an analytics pipeline. Data flows smoothly between the parts of the pipeline, which can interact in real time, leading to more effective data processing and analysis.

Log aggregation

Kafka’s log aggregation capabilities allow for the seamless collection of logs from multiple sources. It provides a centralized and unified view of real-time log data, enabling efficient analysis and troubleshooting across the system.

Metrics

Kafka enables the collection and processing of real-time metrics data, empowering organizations to monitor and analyze critical performance indicators in real-time.

Commit logs

The log-based architecture of Kafka makes it well-suited for dependable and resilient commit logs, guaranteeing the persistence and consistency of data in critical applications.

Data pipelines and ETL

Kafka plays a crucial role in establishing the foundation of real-time data pipelines and ETL (Extract, Transform, Load) processes by facilitating the smooth and uninterrupted movement of data across various stages of analytics processing.

Building a real-time analytics pipeline with Kafka

Building a real-time analytics pipeline with Kafka involves several steps that require technical expertise and skills. However, with the help of DoubleCloud, the process becomes much easier and more accessible.

1. Designing the pipeline architecture: The first stage is to design the architecture of the analytics pipeline around the project’s requirements and objectives. This includes identifying the data sources, planning the processing stages, and defining the data flow within the pipeline.

2. Data ingestion with Kafka producers: Kafka producers capture data from diverse sources and feed it into Kafka topics. Producers can be custom-built to extract data from systems, devices, applications, or any other relevant sources. Their primary function is to transfer data into the Kafka ecosystem reliably and efficiently.

3. Stream processing with Kafka Streams: Kafka Streams provides a powerful API for real-time stream processing on the ingested data. It supports transformations, computations, aggregations, and filtering on data streams, enabling real-time analytics and insights.

4. Data storage and management: Kafka can be used as a durable, distributed storage system, so data is stored reliably for subsequent analysis. Data can also be stored in external databases, data warehouses, or data lakes for long-term storage and further processing.

5. Visualization and insights with real-time dashboards: Integrating real-time dashboards and visualization tools into the analytics pipeline makes the data interpretable. These tools visualize data in real time, so users can gain actionable insights and make informed decisions.
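The transformation, filtering, and computation steps in stage 3 can be sketched as a chain of Python generators, where each record passes through the stages one at a time. This is only an analogy for how a stream processing topology is composed; the sensor data format and the function names are assumptions, and none of this is the Kafka Streams API.

```python
def parse(lines):
    """Transformation: turn raw 'sensor,value' strings into records."""
    for line in lines:
        sensor, value = line.split(",")
        yield {"sensor": sensor, "celsius": float(value)}


def only_valid(records, low=-50.0, high=150.0):
    """Filtering: drop readings outside a plausible range."""
    return (r for r in records if low <= r["celsius"] <= high)


def to_fahrenheit(records):
    """Computation: derive a new field from each record."""
    for r in records:
        yield {**r, "fahrenheit": r["celsius"] * 9 / 5 + 32}


raw = ["s1,20.0", "s2,999.0", "s1,25.0"]
results = list(to_fahrenheit(only_valid(parse(raw))))
```

Each stage knows nothing about the others, so stages can be added, removed, or reordered without rewriting the rest of the chain.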

DoubleCloud API

We provide the ability to deploy, change, and manage all four of our services, including building and deploying charts and dashboards, through an API that is available via the Python SDK or directly via gRPC.

How can DoubleCloud help leverage Kafka?

DoubleCloud’s managed Kafka solution simplifies the utilization of Kafka by handling setup, configuration, cluster management, monitoring, security, and professional support, enabling organizations to leverage Kafka for real-time data processing and analytics.

Conclusion

Managing data ingestion in real-world scenarios, with multiple sources and targets and evolving schemas, can be a complex and resource-intensive task. The Kafka Streams API makes transformations, aggregations, data filtering, and joins across multiple data sources far more straightforward.
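Joining multiple data sources often means enriching a stream of events with rows from a lookup table. The sketch below shows that pattern in plain Python under stated assumptions: the `customers` and `orders` data and the `enrich` helper are invented, and the join is an inner join that drops events with no matching key.

```python
# Lookup table, for example built from a second data source (hypothetical data).
customers = {
    "c1": {"name": "Ada", "tier": "gold"},
    "c2": {"name": "Lin", "tier": "free"},
}

orders = [
    {"order_id": 1, "customer_id": "c1", "total": 40},
    {"order_id": 2, "customer_id": "c3", "total": 15},
    {"order_id": 3, "customer_id": "c2", "total": 99},
]


def enrich(order_stream, table):
    """Inner join: emit each order with its customer row, skipping unmatched keys."""
    for order in order_stream:
        customer = table.get(order["customer_id"])
        if customer is not None:
            yield {**order, **customer}


enriched = list(enrich(orders, customers))
```

Order 2 is dropped because customer `c3` is not in the table; a left join would instead emit it with the customer fields missing.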

Here are some of the key points:

- Apache Kafka is a powerful platform for real-time data analytics and stream processing.

- Kafka handles large data volumes with low latency and fault tolerance, and it can be configured to avoid data loss.

- Integration with other message brokers and stream processing frameworks expands its capabilities.

- Key components like Kafka Connect, the Kafka Streams API, and Schema Registry enhance data integration and management.

- DoubleCloud simplifies the building of real-time analytics pipelines with Kafka.

- DoubleCloud manages setup, configuration, cluster management, and security.

- DoubleCloud provides support, integration with other services, and optimization for Kafka utilization.

In summary, Kafka provides a robust, scalable, and fault-tolerant platform for building real-time data pipelines, integrating systems, and efficiently processing high-volume data streams. Its versatility and capability to handle vast amounts of data make it a popular choice for organizations engaged in large-scale data processing and real-time analytics.

DoubleCloud Managed Service for Apache Kafka

A fully managed open-source Apache Kafka service for distributed delivery, storage, and real-time data processing.

Frequently asked questions (FAQ)

Is Apache Kafka suitable for real-time data analytics?

Yes, Apache Kafka is highly suitable for real-time data analytics. Its capabilities include low-latency processing, fault tolerance, and efficient handling of large data volumes. These features make it an excellent choice for real-time analytics applications.

How does Apache Kafka ensure fault tolerance?

Can Apache Kafka integrate with other systems and tools?
