CDC: from zero to hero

Written by: Andrei Tserakhau, DoubleCloud Tech Lead, and Tim Brunko, Engineer

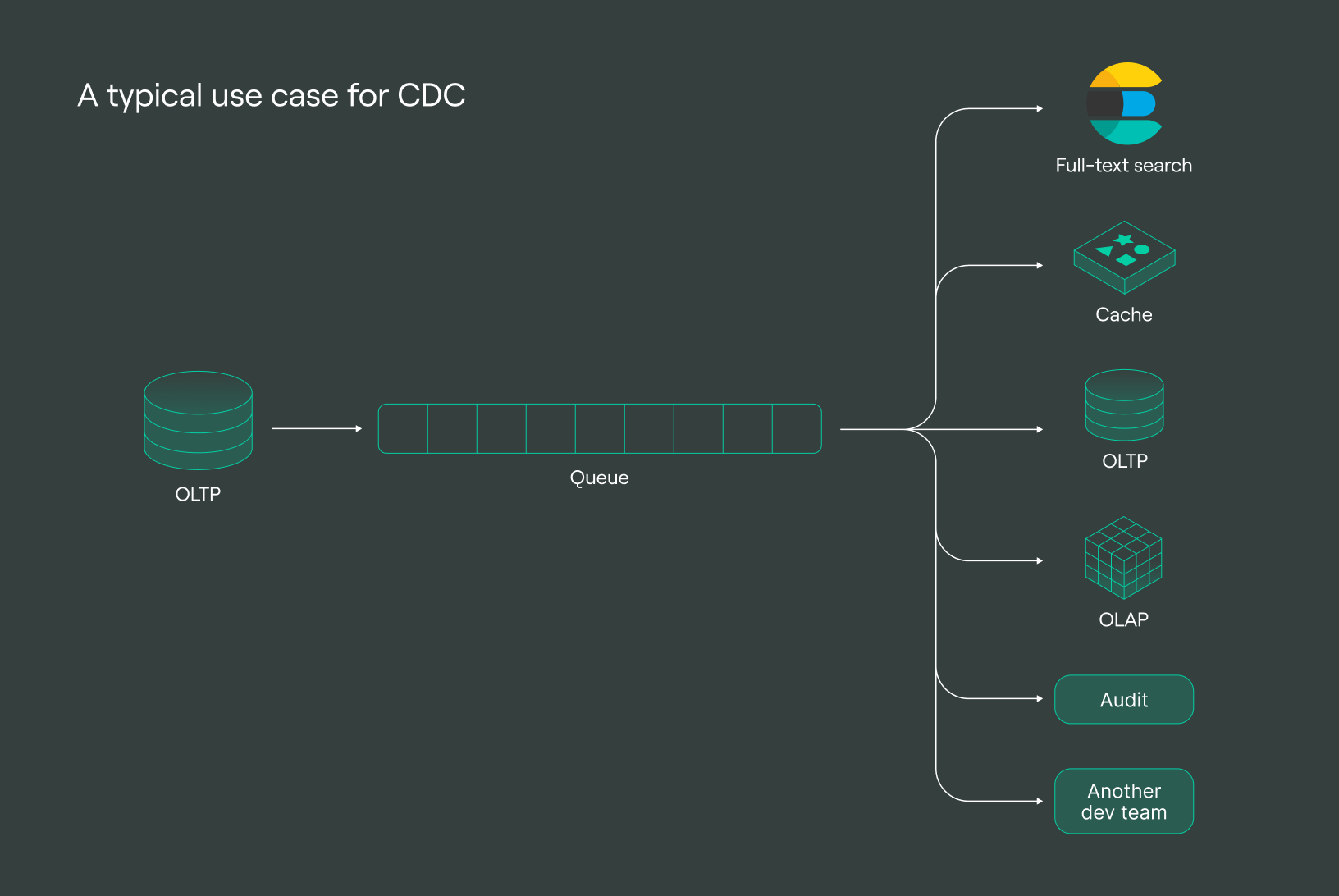

Modern microservice architectures often have a need for caches, full-text search indexes, replicas, and reactive interaction between components. Addressing each of those tasks individually can be a challenge, but it turns out there’s a single mechanism that can handle them all. Meet change data capture.

What is change data capture (CDC)?

CDC is a set of software development patterns that let you establish a reactive infrastructure, simplify microservices architecture, and break monoliths down into microservices.

In scenarios like these, you typically start with an online transaction processing (OLTP) database. With CDC, you obtain a stream of events regarding the addition, modification, and deletion of rows that you can then process. That transforms the database into an event-driven system.

In this article, we’ll talk about:

A properly configured CDC cuts event responsiveness to a matter of milliseconds. That’s why it’s frequently employed when there’s a need for real-time analysis of a section of data moved to external systems.

CDC exemplars

Below are examples of SQL commands for a PostgreSQL database and the CDC events they generate. The format or protocol is not as important given that they’re just examples. In this notation, “op” stands for operation, which can be “c” for create (insert), “u” for update, or “d” for delete.

Database

CREATE TABLE my_little_pony (

id INT PRIMARY KEY, name TEXT, description TEXT

);

INSERT INTO my_little_pony (id, name, description)

VALUES

(1, 'Twilight Sparkle', ''),

(2, 'Rainbow Dash', ''),

(3, 'Spike', 'Spike goes BRRR');

Serialized CDC

{

"op":"c",

"after":{

"id":1,

"name":"Twilight Sparkle",

"description":""

}

}

{

"op":"c",

"after":{

"id":2,

"name":"Rainbow Dash",

"description":""

}

}

{

"op":"c",

"after":{

"id":3,

"name":"Spike",

"description":"Spike goes BRRR..."

}

}

Database

UPDATE

my_little_pony

SET

description = '_'

WHERE

id < 3;

Serialized CDC

{

"op":"u",

"after":{

"id":1,"name":

"Twilight Sparkle",

"description":"_"

}

}

{

"op":"u",

"after":{

"id":2,

"name":"Rainbow Dash",

"description":"_"

}

}

Database

DELETE FROM

my_little_pony

WHERE

name = 'Rainbow Dash';

Serialized CDC

{

"op":"d",

"before":{

"id":2

},

}

As seen in the example, CDC events have the following properties:

-

The database-specific details disappear in the CDC stream (in practice, it’s reduced to almost zero, but some information can still be obtained).

-

All commands are transformed into a set of modified rows, including the new state. Depending on the settings, the previous state can also be obtained.

Where CDC shines

The ability of the CDC to swiftly and efficiently move data in small portions makes it invaluable for real-time responsiveness to changes in the source database. There are two main areas where the CDC is applied:

- Enabling reactive interactions between infrastructure components.

- Implementing the patterns needed to build microservice architecture.

Let’s look at those two areas separately.

Reactive interaction between infrastructure components

In modern architectures, it’s common to work with only a portion of the database, and CDC is typically applied to specific tables. By having a sequence of modifying events for a table, you can obtain an asynchronous replica. And the fact that events have already been decoupled from the source database in a format independent of the database itself means that the receiving end can be anything: OLTP/OLAP/cache/full-text search.

If everything is set up correctly, you can, for instance, achieve low-latency caching without modifying the business logic for your services. Let’s explore how that works with specific replication examples.

Replication to a DWH

By applying a stream of changes to a data warehouse (DWH), you can obtain an asynchronous replica of your production OLTP database. That solution addresses multiple issues at once:

- Analysts can work without impacting the production OLTP database.

- Data is transferred from the transactional database to the analytical one.

- Analysts can work with analytical databases.

For example, you can automatically obtain an asynchronous replica of your production PostgreSQL database in ClickHouse, Elasticsearch/OpenSearch, or S3.

Here’s an example of delivering a replication stream from MySQL to ClickHouse.

Cache invalidation

By applying a stream of changes to a cache, you can obtain it directly from your automatically updated (optionally aggregated/transformed) production OLTP database. The RedisCDC project for Redis is an excellent example.

It’s a distributed platform that simplifies the delivery of CDC streams to Redis.

Update search index (Elasticsearch/Solr)

By sending the relevant portion of the change stream to a full-text search engine, you can obtain an automatically updated full-text search index. Here’s an example of delivering a replication stream from MySQL to Elasticsearch.

Replication to the same OLTP storage

Having a stream of changes in the database allows you to obtain an asynchronous logical replica, for example, for a development environment (dev-stand). Since events are usually transmitted logically, you can also perform logical transformations on them along the way. One example is excluding columns with some sensitive data from the replica and encrypting other sensitive data.

Replication to another OLTP storage

If you want to replicate your data to another database, for example, so your production data in MySQL can be used in PostgreSQL or vice versa, CDC is your best friend. The replication might be necessitated by tool limitations.

Here’s an example of delivering data from MySQL to PostgreSQL.

Auditing what happens in your storage

CDC provides the ability to conduct a comprehensive audit of what’s happening in the database. By storing events for changes in the database as is, you can obtain audit logs of everything happening with your production database. You can also achieve real-time auditing when processing the message stream.

Here’s an example of a scenario with a similar audit.

Sharing change events between services

Dispatching events to a queue means you can notify other components or commands about them. While there’s a more systematic approach to this in the section about microservice patterns, you can inform your colleagues about events without relying on specific patterns.

Providing cross-region failovers

If you have a cross-region queue, you can duplicate readers in two different data centers by dispatching CDC to the queue, thereby delivering a regional failure guarantee (in case one region goes offline).

Reducing the load on the main OLTP storage

CDC lets you reduce the load on the master transactional database or service, especially when dealing with a microservice database. Serializing the CDC stream in a queue allows multiple receivers to work with the master’s information, burdening it only once. You can activate all the mentioned receivers, even doubling them to include copies at each data center, without changing the load on the master since they’ll all be reading the message queue.

CDC patterns for microservices

CDC is an approach that allows for simultaneous multiple microservice architecture patterns. From the perspective of microservice design patterns, CDC enables the concurrent implementation of event-driven processing between microservices, lets microservices be decoupled, and assists in breaking monoliths down into microservices. We won’t get into the differences between those patterns here, but there will be a list of useful references at the end for anyone who’s interested.

Outbox pattern

The outbox pattern, also known as application events, prescribes combining state changes with notification sending to other services. That can be achieved by either saving messages to be sent in adjacent tables within the same transaction (see transactional outbox) or subsequently sending messages through CDC.

When stacking the CDC stream into a queue, there’s a side effect: event sourcing with domain events on a bus like Apache Kafka and service interaction through Kafka. That partly enables microservice decoupling: services no longer need to actively notify others since anyone needing events can subscribe to them. The service only works with its own database. Additionally, some free benefits include the option to skip service discovery, reproducibility, and the ease of storing the entire stream, for example, in Elasticsearch.

CQRS pattern

Command query responsibility segregation (CQRS) is a pattern in which an application is conceptually divided into two parts: one modifying the state and the other reading it. CDC allows you to direct the part that modifies the state through a queue to a separate database where reading operations are performed.

Strangler pattern

Strangler is a pattern for breaking monoliths down into microservices. A properly prepared CDC is completely transparent to the legacy application and doesn’t require any changes to the inherited data model, which greatly facilitates the decomposition of the monolith and makes integration into the monolith as non-invasive as possible.

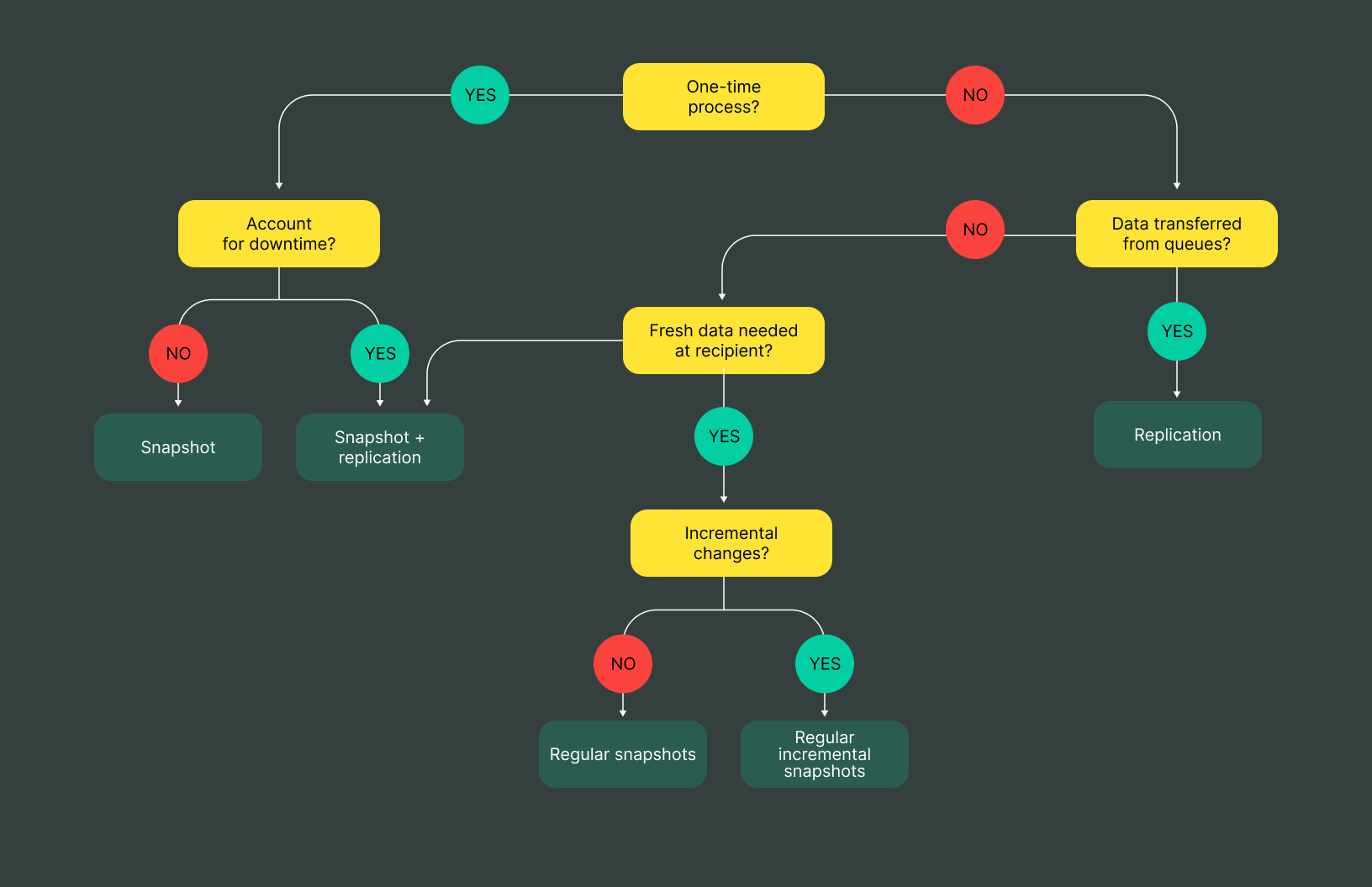

How to implement CDC

In theory, there are three ways to implement CDC: timestamps on rows, triggers on tables, and logical replication. In this section, we will look at the methods, their advantages, and their disadvantages.

Query-based CDC

For example, you might have a table in your database with a dedicated column (let’s call it “updated_at”) that gets filled with the value of now () at every insert/update.

You can regularly retrieve new or updated rows through polling with a straightforward query:

SELECT

...

FROM

my_table

WHERE

updated_at > saved_val;

In this basic setup, however, you won’t be able to distinguish between an insert and an update, and you won’t receive notifications about deleted rows. Complicating the schema can teach you how to differentiate between inserts and updates (for example, by introducing a “created_at” field) and receive notifications for row deletions (for instance, by replacing deletions with a flag like “is_deleted”).

|

Pros |

Cons |

|

It works |

Non-obvious interaction requirements |

|

It doesn’t store the change history |

Schema and business logic modifications |

|

Involves polling, which requires |

|

|

Simple implementations lack the capability |

There are modifications to this approach that mitigate some drawbacks while complicating the schema, but the core is the same. Here’s what you can add in rows:

- Version numbers

- State indicators

- Time/version/status

Table triggers

In this case, you create a dedicated table for the history and set up triggers that activate when rows are modified or deleted.

|

Pros |

Cons |

|

It works |

Triggers burden and slow |

|

Changes are captured instantly, |

Triggers modify the database |

|

Triggers can capture all event types, |

Requires an external process |

|

Triggers can add valuable metadata |

Creating and maintaining triggers can |

Logical replication

Every database provides ACID guarantees with a write-ahead log, a log of changes encoded in binary format. It’s called “wal” in PostgreSQL, “binlog” in MySQL, “redo-log” in Oracle, “oplog” in MongoDB, and “transaction log” in MSSQL.

The log already has what we need, so we just have to decode it and send it to a message queue.

But limitations do come up on occasion. In the case of PostgreSQL, for example, that approach isn’t compatible with pg_repack.

|

Pros |

Cons |

|

Processing happens in real time |

It’s complicated |

|

All change types are captured: |

It often requires a separate role |

|

An additional data storage mechanism |

The mechanism may not exist |

|

It’s mostly not polling, so it |

If replication is not configured |

How we do CDC at DoubleCloud Transfer

DoubleCloud Transfer tackled the cloud migration problem right from the beginning. A database snapshot is transferred, after which all changes that occur in the source database from that point on are applied to the target database. An asynchronous replica of the database is created in the cloud, and all that’s left is to disable write operations in the source database, wait a few seconds for the replica to catch up with the master, and enable operations in the cloud database.

That approach minimizes downtime when migrating to the cloud.

Over time, in addition to migration, the service was introduced for data transfer between different databases, such as from transactional to analytical databases, as well as between message queues and databases. If you put all supported transfer options in one matrix, you can see that it works with snapshots and data replication streams, scaling horizontally, and sharding.

In the service’s terminology, there are two main entities:

-

Endpoint: these are connection settings along with additional configurations. An endpoint can be either a source from which data is extracted or a target where data is loaded.

-

Transfer: this connects a source endpoint to a target endpoint. It includes its own settings, primarily the type of transfer: snapshot, replication, or snapshot + replication.

Once a transfer is created, it can be activated as a one-time action or scheduled for activation. The data is transferred in the case of a table snapshot, and then the transfer is automatically deactivated. For replication, an ongoing process is initiated to continuously move new data from the source to the target.

DoubleCloud Transfer

A cloud agnostic service for aggregating, collecting, and migrating data from various sources.

Transfer is written in Go and operates as a cloud-native solution. It has a control plane responsible for API and data plane management. When activating user transfers, data planes are created in runtimes. One example of a supported runtime is Kubernetes (K8s), where pods are launched to activate the transfer, making it a standalone service running on top of a specific runtime. EC2 and GCP runtime are also supported. When a transfer is activated, DoubleCloud Transfer creates virtual machines in the runtime as needed and starts moving data inside of it. It only takes a few clicks to configure data delivery clocking in at gigabytes per second.

The service has been successfully used at scale in production by multiple teams for several years. Besides its inherent horizontal scalability, we’ve parallelized wherever possible. Nearly two thousand data streams currently run in our internal infrastructure, moving multiple gigabytes per second.

We’ve also implemented the most frequently requested ELT transformations, and ELT capabilities will continue to expand. Configuration can be done through a user-friendly UI, API, and Terraform.

All of that has made DoubleCloud Transfer a versatile data transfer service from any source to any destination (with ELT processing capabilities) for both snapshots and data streams. Users have found numerous creative ways to utilize the service beyond its primary purpose, with CDC just one application.

Even more detail

Since DoubleCloud Transfer has been receiving replication streams at the logical replication level since its inception, the natural step was to make that available to users. And working through wal log processing is what turned DoubleCloud Transfer into a CDC solution.

We began shipping logical replication events to a queue based on user requests, and the decision was made to generate events in the Debezium format so we could become a drop-in replacement for Debezium. That way, it would be possible to replace one CDC product with another in a configured pipeline while keeping the pipeline. This approach would provide useful integrations without introducing a new data format.

As a result, we had to create converters from our internal objects to the serialized Debezium format and cover it with extensive testing.

DoubleCloud Transfer currently has the serializer implemented for PostgreSQL and MySQL sources, with MongoDB source support recently added. You can now easily configure CDC delivery from your YDB tables to Apache Kafka, EventHub, or Kinesis in Debezium format with just a few clicks.

That made DoubleCloud Transfer a drop-in Debezium replacement for MySQL and PostgreSQL. Some notable differences include DoubleCloud Transfer’s ability to handle master migration in PostgreSQL, when a replica becomes the master, with the pg_tm_aux plugin enabled. Additionally, the service can transfer user-defined types in PostgreSQL.

DoubleCloud Transfer also implemented the ability to organize query-based CDC, which the documentation refers to formally as incremental tables and informally as increments.

Real-world use cases for DoubleCloud Transfer

Let’s talk about popular use cases.

OLTP to a DWH

The most common use case is using CDC to create replicas of transactional databases (PostgreSQL, MySQL, MongoDB) in analytical data storages (ClickHouse, S3). Storing events in a queue unloads data from the transactional database once, and there are typically two analytical recipient databases: one in each data center to guarantee survival during regional outages.

The problem

Production processes run in transactional databases, and the business side needs analytical reports conveniently generated through analytical databases. Since HTAP databases are still considered exotic, data needs to be somehow transferred from the transactional database to the analytical one.

|

Before CDC |

After CDC |

|

Teams usually transfer data from |

CDC (at DoubleCloud Transfer) |

Regional analytics

This is the same as above, only with an extra requirement regarding regional outages.

The problem

Production data needs to be moved to a regional analytical DWH with a sub-second delay.

|

Before CDC |

After CDC |

|

A custom Java service was used to Afterward, a series of scripts were Introducing a new data |

The team set up delivery for Two other replication transfers The queue itself provides As a result, the team has three |

CDC to a search engine

A customer from another team started using CDC to update Elasticsearch search indexes, and then applied CDC for reactive interaction between components. CDC ultimately became an integral part of the project.

The problem

When data in MySQL was modified, the Elasticsearch indexes had to be updated.

|

Before CDC |

After CDC |

|

Before CDC, a transactional output But since DoubleCloud Transfer |

DoubleCloud Transfer allowed us They use CDC to send push notifications, |

Analyzing a raw CDC stream

A customer transformed MySQL/PostgreSQL into a streaming database using CDC events on top of a DWH. The tool they created is called incremental materialized views.

The problem

MySQL is set up in the customer system such that many services access it to retrieve the configurations, so they need a full trail of config changes for observability purposes.

|

Before CDC |

After CDC |

|

Running all services in MySQL wasn’t Initially, caching services were set up: The caching services began to consume Meanwhile, services that accessed |

After setting up a CDC stream with Caches cut lag times to an average Services were able to get rid of |

Summary

This article thoroughly explored CDC: its history, theory, use cases, open-source practices, and corporate practices in addition to real user stories.

To learn more about CDC, read Martin Kleppmann’s article and experiment with Debezium and DoubleCloud Transfer.

It’s worth noting that DoubleCloud Transfer is a fully managed cloud-native solution that’s easy to onboard and master. Feel free to come and use it, give us feedback, and request features.

Get started with DoubleCloud