How Terraform reshapes the data engineering experience

Written by: Andrei Tserakhau, DoubleCloud Tech Lead

About me

Hey there! My name is Andrei Tserakhau, and I’m an engineer. I previously worked at Yandex, and am now a Tech Lead at DoubleCloud. DoubleCloud is not just another data platform, but an engine that helps you to grow your own data stack on top of our pillars. I write about modern data stacks, how they are built, and how to use them as efficiently as possible.

What is a modern data stack?

What is a data stack? A data stack is a collection of various technologies that allow for raw and sparsed data to be processed before it can be used. A modern data stack (MDS) consists of the specific tools that are used to organize, store, and transform data. These tools allow for the data to be taken from “inedible data” (data that cannot be worked with) to “edible data” (data that can be worked with).

Modern stacks are mostly built with several layers:

-

1. Ingestion — ELT / ETL pipelines that act as an entrance for your data

-

2. Storage — OLAP database or DWH / Data Lake. Act as the main value holder for your raw/clean data for later usage.

-

3. Business Intelligence (BI) — some tools for BI

-

4. Orchestration — something to orchestrate this madness

For some companies, these layers can be more or less wide, but the common frame is still there.

Traditional ELT/ETL Pipeline Development

Most data pipelines can be broadly divided into two groups:

-

1. Code-based — usually some python/java/scala code that is deployed in orchestration tools (from Airflow to Apache Flink).

-

2. Non-code-based — usually built-in SaaS-based applications via the UI.

Let’s focus first on code-based pipelines and their main challenges:

-

1. Complexity and maintenance — Pipelines need to be implemented, deployed, and then monitored.

-

2. Scalability — Scaling resources and infrastructure on demand in response to changing data needs is challenging.

-

3. Long Development Cycles — Writing code can be hard, especially for data-intensive products.

For non-code-based ones (especially SaaS) these issues are less of a problem, but they have their own:

-

1. Limited visibility and monitoring — This can lead to delays in detecting and addressing data pipeline problems.

-

2. Hard to reproduce — Reproducting UI-based pipelines can be complicated. Making copies of your pipeline to test some changes can be torture for data engineers.

-

3. Lack of version control — UI-based pipelines are hard to control in terms of evolution since all changes are user-made instead of automated.

All of this can push us to want to make a change. What if we can unify the simplicity of UI-based pipelines with the visibility and reproducibility of code-based pipelines?

How to improve your pipeline developer experience with Terraform

Recent developments and technologies have made it easier than ever to get started building a modern data stack. However, this increased ease of use has likewise raised the likelihood of a data warehouse changing in unchecked and unintended ways. Terraform by HashiCorp is an open-source project that provides infrastructure-as-code and can implement change management of the modern data stack.

Modern world data-intensive applications are always near actual infrastructure. Terraform can answer the question of how to make your data stack declarative, reproducible, and modular while retaining the simplicity of SaaS tools.

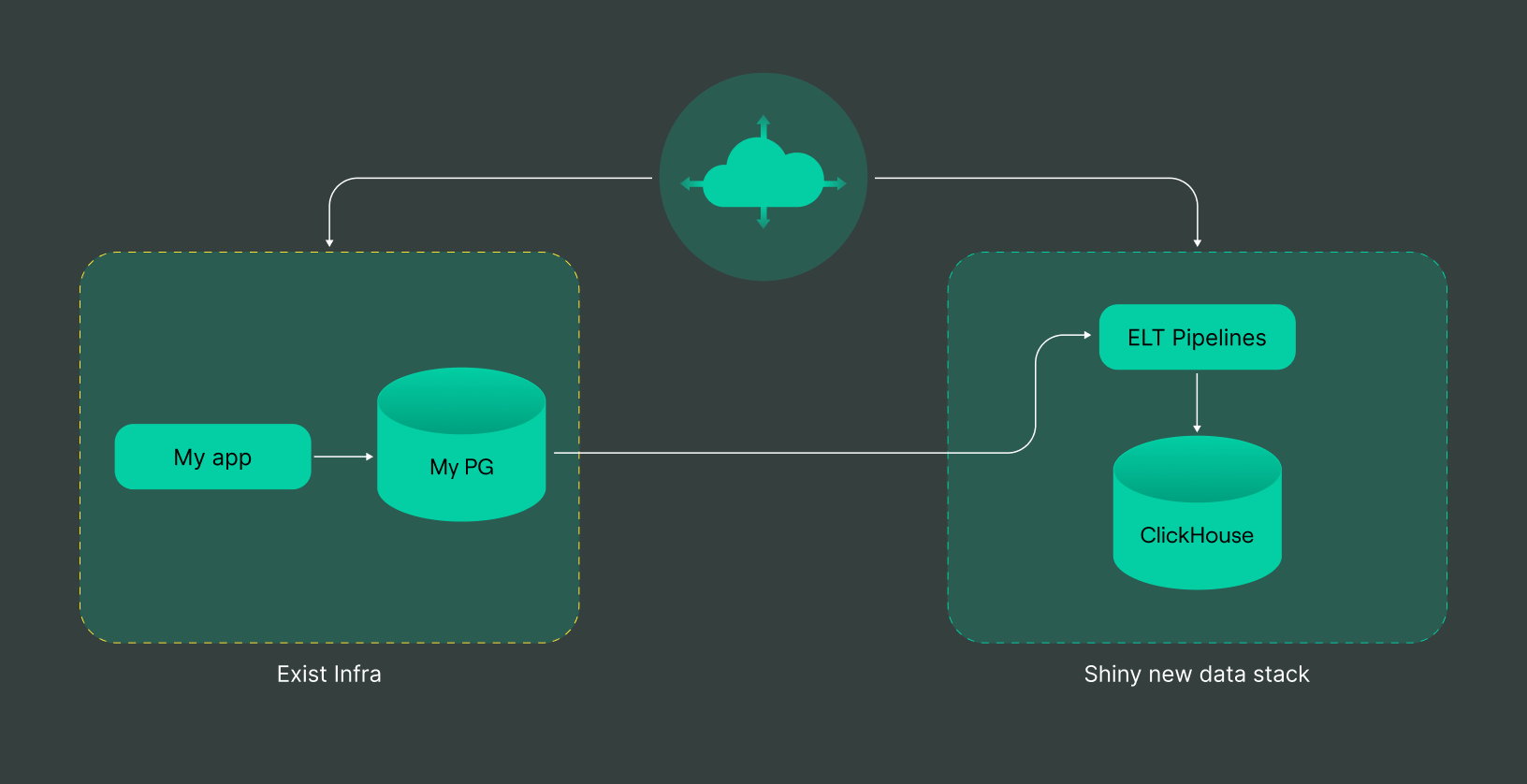

Let’s say we have a typical web application that lives inside Terraform and consists mainly of storages, e.g. Postgres.

And what we need is to add some analytical capabilities here:

-

1. Offload oltp-db analytical to different storage.

-

2. Aggregate all data in one place.

-

3. Join it with data outside our scope.

So let’s say that the target infrastructure should look something like this:

Of course, we need to do the same for all stages: dev, preprod, and prod. Doing this via the UI is a painful and time-consuming process and the exact scenario where Terraform really shines.

How to make it with Terraform

First of all, let’s take a look at how to organize code between stages. I prefer a module and several roots here so we can tweak it a bit more easily.

Let’s start with a main.tf, usually it contains providers definition, nothing more:

provider "doublecloud" {

endpoint = "api.double.cloud:443"

authorized_key = file(var.dc-token)

}

provider "aws" {

profile = var.profile

}

This will just enable usage for certain environments, like AWS and DoubleCloud here.

The first thing we need to do is create our storage. In this example, we will use ClickHouse.

To enable ClickHouse, we need to create a network to put it in. For this example, I chose a BYOA network, so it can be easily peered with existing infrastructure

module "byoc" {

source = "doublecloud/doublecloud-byoc/aws"

version = "1.0.3"

doublecloud_controlplane_account_id = data.aws_caller_identity.self.account_id

ipv4_cidr = "10.10.0.0/16"

}

resource "doublecloud_clickhouse_cluster" "dwh" {

project_id = var.project_id

name = "dwg"

region_id = "eu-central-1"

cloud_type = "aws"

network_id = doublecloud_network.network.id

description = "Main DWH Cluster"

resources {

clickhouse {

resource_preset_id = "s1-c2-m4"

disk_size = 51539607552

replica_count = var.is_prod ? 3 : 1 # for prod it's better to be more then 1 replica

shard_count = 1

}

}

config {

log_level = "LOG_LEVEL_INFO"

text_log_level = "LOG_LEVEL_INFO"

}

access {

data_services = ["transfer", "visualization"]

ipv4_cidr_blocks = [{

value = data.aws_vpc.infra.cidr_block

description = "peered-net"

}]

}

}

data "doublecloud_clickhouse" "dwh" {

name = doublecloud_clickhouse_cluster.dwh.name

project_id = var.project_id

}

resource "doublecloud_transfer_endpoint" "dwh-target" {

name = "dwh-target"

project_id = var.project_id

settings {

clickhouse_target {

connection {

address {

cluster_id = doublecloud_clickhouse_cluster.dwh.id

}

database = "default"

user = data.doublecloud_clickhouse.dwh.connection_info.user

password = data.doublecloud_clickhouse.dwh.connection_info.password

}

}

}

}

Once we have СlickHouse, we can start designing our data pipes. First, let’s make Postgres-to-ClickHouse:

resource "doublecloud_transfer_endpoint" "pg-source" {

name = "sample-pg2ch-source"

project_id = var.project_id

settings {

postgres_source {

connection {

on_premise {

hosts = [

var.postgres_host

]

port = 5432

}

}

database = var.postgres_database

user = var.postgres_user

password = var.postgres_password

}

}

}

resource "doublecloud_transfer" "pg2ch" {

name = "pg2ch"

project_id = var.project_id

source = doublecloud_transfer_endpoint.pg-source.id

target = doublecloud_transfer_endpoint.dwh-target.id

type = "SNAPSHOT_AND_INCREMENT"

activated = false

}

This creates a simple replication pipeline between your existing Postgres and the newly created DWH ClickHouse cluster.

As you can see, a lot of stuff here actually comes as variables, so it’s quite easy to prepare different stages, simple add stage_name.tfvars and run terraform apply with it:

variable "dc-token" {

description = "Auth token for double cloud, see: https://github.com/doublecloud/terraform-provider-doublecloud"

}

variable "profile" {

description = "Name of AWS profile"

}

variable "vpc_id" {

description = "VPC ID of exist infra to peer with"

}

variable "is_prod" {

description = "Is environment production"

}

variable "project_id" {

description = "Double.Cloud project ID"

}

variable "postgres_host" {

description = "Source host"

}

variable "postgres_database" {

description = "Source database"

}

variable "postgres_user" {

description = "Source user"

}

variable "postgres_password" {

description = "Source Password"

}

That’s it. Your small modern data stack is ready to use. As the next step you may just setup your own visualization connection and start playing with your data:

resource "doublecloud_workbook" "k8s-logs-viewer" {

project_id = var.project_id

title = "dwh"

connect {

name = "main"

config = jsonencode({

kind = "clickhouse"

cache_ttl_sec = 600

host = data.doublecloud_clickhouse.dwh.connection_info.host

port = 8443

username = data.doublecloud_clickhouse.dwh.connection_info.user

secure = true

raw_sql_level = "off"

})

secret = data.doublecloud_clickhouse.dwh.connection_info.password

}

}

Congratulations! You’re awesome. Such a configuration is really easy to deploy (just run terraform apply) and copy-run it with a different variable set like this.

That’s how you can set up almost any number of pipelines with easy integration into your infrastructure. A full example can be found here. This example adds a peer connection to your existing VPC and creates a sample ClickHouse in it with replication transfer between two databases.

DoubleCloud Managed Service for ClickHouse

An open-source, managed ClickHouse DBMS service for sub-second analytics. Don’t take two days to set up a new data cluster. Do it with us in five minutes.

Start your trial today