DoubleCloud’s 11th Product Update

Hey everyone! Victor here, with the news for this month:

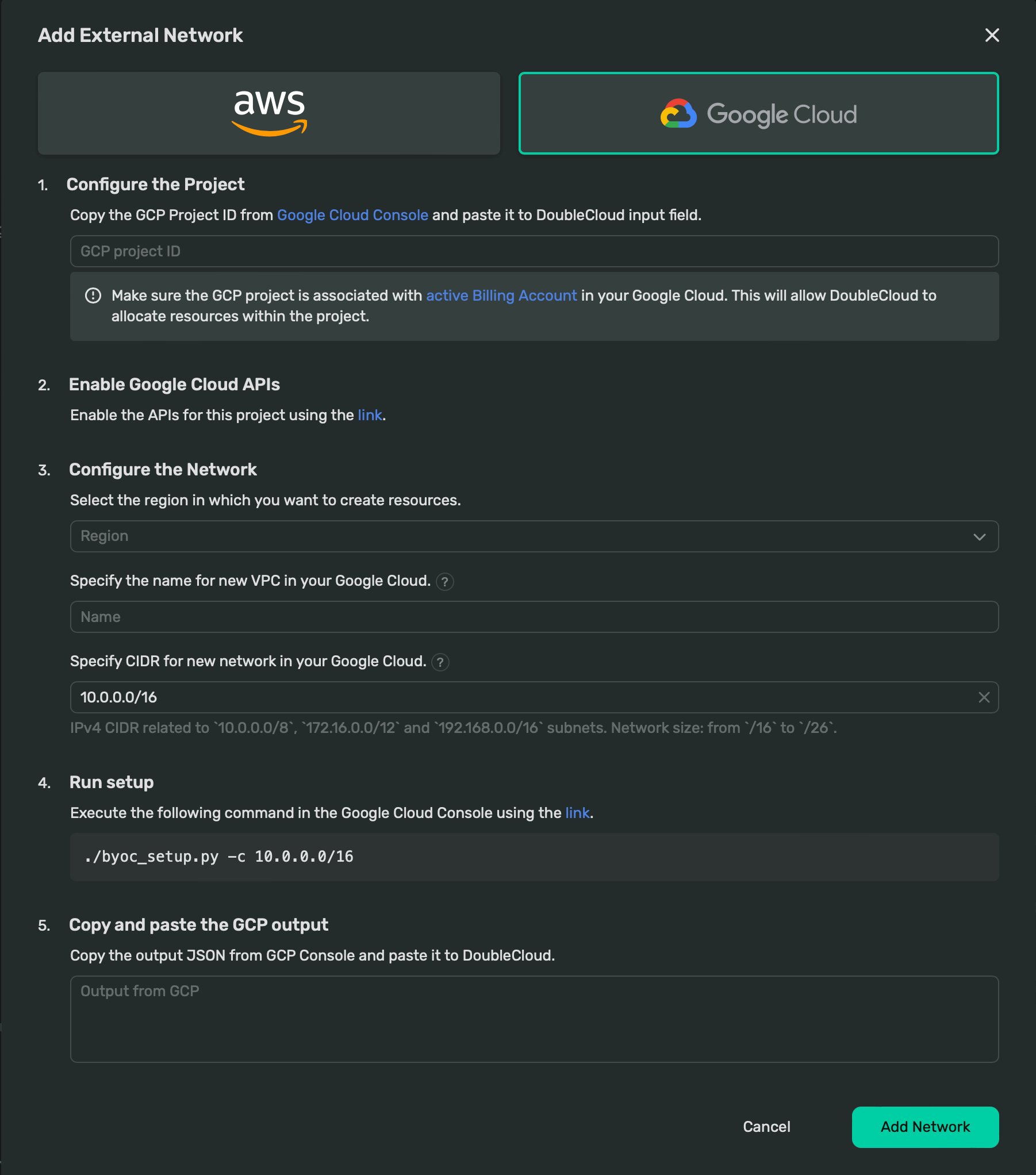

Google Cloud — Bring Your Own Cloud

I’m glad to announce that we have added Bring-Your-Own-Cloud (BYOC) support for Google Cloud. This allows you to deploy DoubleCloud resources and services directly into your GCP account, giving you control over access and connectivity. You can also apply GCP discounts and ensure compliance with GDPR and other regulations.

To use this feature, simply follow the steps in the wizard in the VPC section of our console. Once you complete these steps, you will be able to deploy resources like ClickHouse and Apache Kafka into an external VPC in your account. For more detailed instructions, please refer to our documentation.

In this article, we’ll talk about:

- Google Cloud — Bring Your Own Cloud

- Apache Kafka managed MirrorMaker and S3 sink connectors

- New S3 connector in DoubleCloud Transfer

- Update in Terraform Provider

- Notification capabilities for projects in preview

- Managed ClickHouse service enhancements

- Apache Airflow managed service in preview

- Quality of life improvements

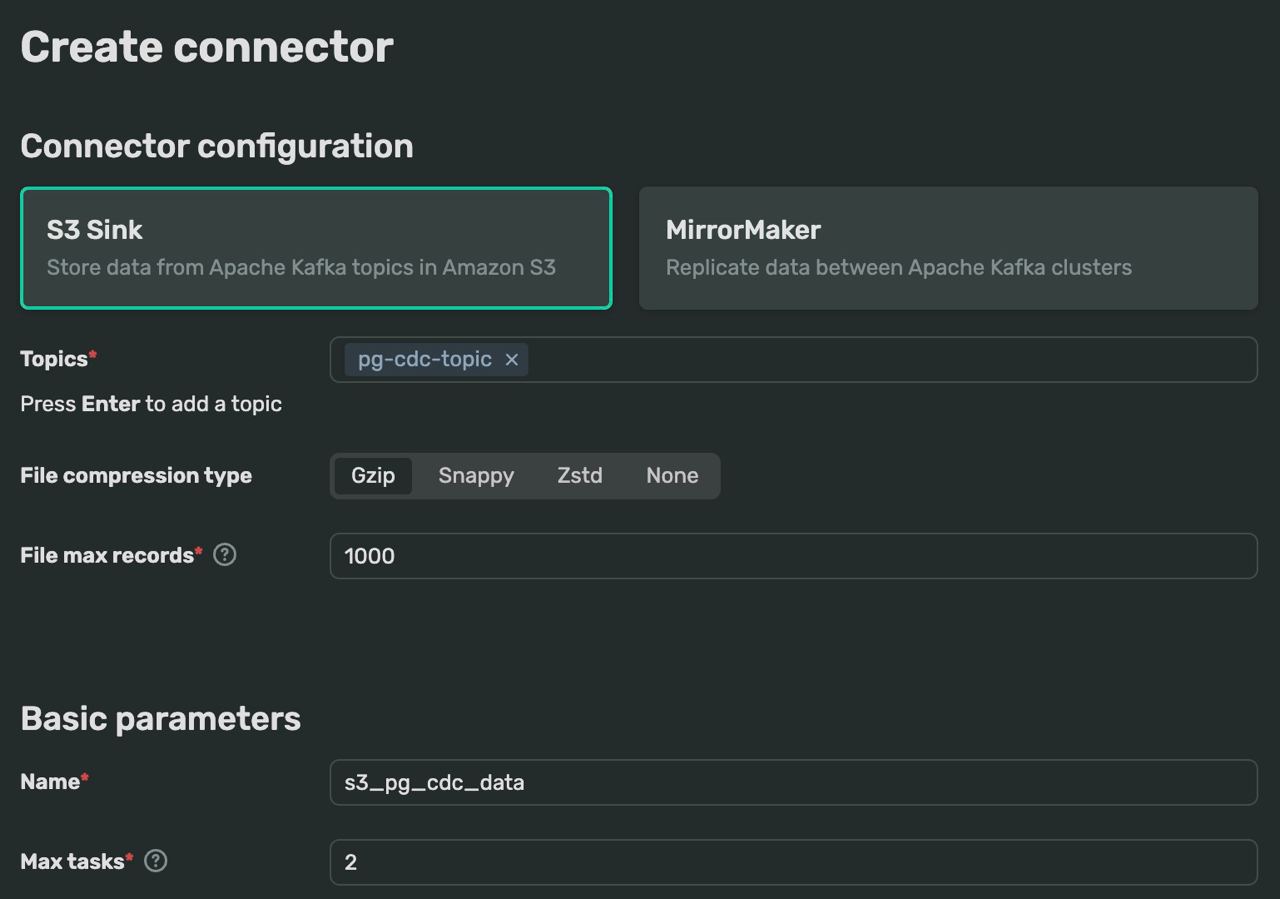

Apache Kafka managed MirrorMaker and S3 sink connectors

Now, you can easily enhance your Apache Kafka cluster with built-in MirrorMaker and S3 Sink connectors.

The MirrorMaker connector allows you to replicate data between different Apache Kafka clusters. This is useful for scenarios like Disaster Recovery across different providers or deployments, or building a geo-distributed solution to bring essential data closer to your users.

The S3 Sink connector is a popular and efficient method for transferring data into your S3 object storage, either for archival purposes or further analysis.

We’ve introduced these first two Kafka connectors to facilitate a smooth transition for users already familiar with similar services. We are actively exploring additional connectors and welcome your suggestions. If there’s a specific connector you need, please let us know.

New S3 connector in DoubleCloud Transfer

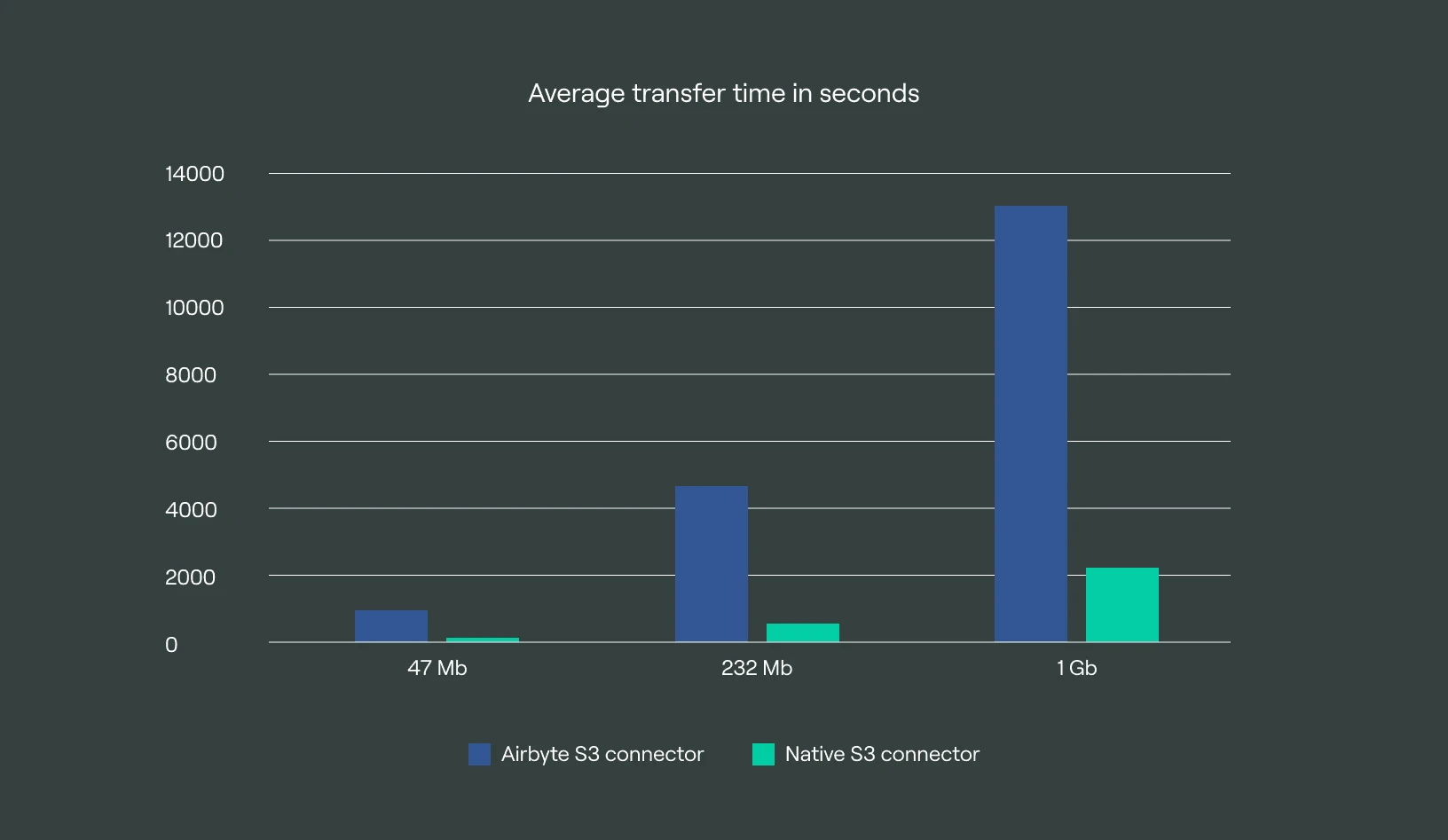

To meet the need to transfer large amounts of data within a predictable timeframe, we have developed a new connector for Object Storage from scratch. Based on our internal tests, this connector delivers approximately 8x the throughput of the Airbyte connector on the same compute resources.

This improvement was largely achieved by implementing multi-threaded operations for data handling in object storage. Out of the box, we support formats such as CSV, JSON, and Parquet, with Delta format support coming soon.
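To give a feel for why multi-threading helps here, below is a minimal sketch of reading several objects concurrently. It is an illustration only, not the connector's actual implementation: `FAKE_BUCKET`, `read_object`, and `read_all` are hypothetical names, and the in-memory dictionary stands in for real object storage calls.

```python
from concurrent.futures import ThreadPoolExecutor
import csv
import io
import json

# Hypothetical in-memory stand-in for an object storage bucket:
# object key -> raw bytes. A real pipeline would fetch these over the network,
# which is where threads pay off, since most of the time is spent waiting on I/O.
FAKE_BUCKET = {
    "events/part-0.csv": b"id,name\n1,alice\n2,bob\n",
    "events/part-1.csv": b"id,name\n3,carol\n",
    "events/part-2.json": b'{"id": 4, "name": "dave"}\n{"id": 5, "name": "erin"}\n',
}


def read_object(key: str) -> list[dict]:
    """Fetch one object and parse it into rows based on its extension."""
    text = FAKE_BUCKET[key].decode("utf-8")
    if key.endswith(".csv"):
        return list(csv.DictReader(io.StringIO(text)))
    if key.endswith(".json"):
        # Treated here as newline-delimited JSON, one record per line.
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    raise ValueError(f"unsupported format: {key}")


def read_all(keys: list[str], max_workers: int = 8) -> list[dict]:
    """Read many objects concurrently; rows come back in the order of `keys`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        batches = pool.map(read_object, keys)  # map preserves input order
        return [row for batch in batches for row in batch]


rows = read_all(sorted(FAKE_BUCKET))
```

Note that CSV values arrive as strings while JSON values keep their types, which is one reason a schema detection step is useful downstream.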

The new connector also features automatic schema detection and its propagation into ClickHouse, an event-driven approach based on events in AWS SQS, and many other benefits, all achievable with a no-code solution.
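As a rough idea of what schema detection involves, here is a small sketch that infers ClickHouse column types from a sample of records. The inference rules and the names `clickhouse_type` and `infer_schema` are assumptions made for illustration; the connector's real logic is not described in this update and is likely more thorough.

```python
from typing import Any


def clickhouse_type(values: list[Any]) -> str:
    """Pick a ClickHouse type that fits every sampled value of one column."""
    non_null = [v for v in values if v is not None]
    nullable = len(non_null) < len(values)

    def is_number(v: Any) -> bool:
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(v, (int, float)) and not isinstance(v, bool)

    if non_null and all(isinstance(v, bool) for v in non_null):
        base = "Bool"
    elif non_null and all(isinstance(v, int) and not isinstance(v, bool) for v in non_null):
        base = "Int64"
    elif non_null and all(is_number(v) for v in non_null):
        base = "Float64"
    else:
        base = "String"  # fallback for text, mixed, or empty columns
    return f"Nullable({base})" if nullable else base


def infer_schema(records: list[dict[str, Any]]) -> dict[str, str]:
    """Map each column name (in first-seen order) to an inferred type."""
    columns: list[str] = []
    for record in records:
        for key in record:
            if key not in columns:
                columns.append(key)
    # A key missing from a record is treated as a NULL for that column.
    return {col: clickhouse_type([r.get(col) for r in records]) for col in columns}


sample = [
    {"id": 1, "price": 9.5, "name": "widget", "in_stock": True},
    {"id": 2, "price": 12, "name": "gadget", "in_stock": False, "note": "new"},
]
schema = infer_schema(sample)
```

In this sketch, a column that is absent from some records becomes `Nullable`, and a column mixing integers and floats widens to `Float64`.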

Update in Terraform Provider

We’ve received a lot of positive feedback about our Terraform provider, which streamlines development and deployment work for our customers. We’ve added new features to it based on feedback and popular usage scenarios.

- BYOC modules for AWS and GCP: You can now provision an entire BYOC setup with Terraform, from the initial configuration to the final deployment of clusters. This includes deploying and provisioning a VPC in your account, fully automated from end to end.

- Dedicated Keeper mode for ClickHouse: If you want to move the Keeper workload onto dedicated machines, you can now do so with our Terraform provider.

- Kafka Engine configuration in ClickHouse.

Additionally, we have prepared end-to-end examples that help you deploy ClickHouse and Kafka in BYOC mode and set up integration between them using Kafka Engine or the DoubleCloud Transfer service. Simply clone the Terraform example with git, insert your DoubleCloud API key, and in half an hour or so you will have a complete, production-ready deployment of managed services in your AWS or GCP account.

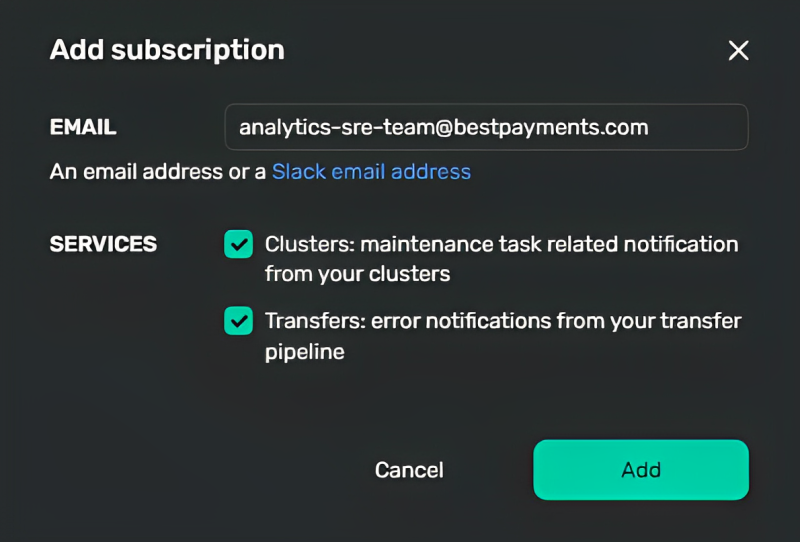

Notification capabilities for Projects in preview

We have added the ability to set up notifications for events on the DoubleCloud platform to be sent to different teams via email or Slack channels. Previously, only the creator received such notifications, but in larger companies, a broader audience should also be able to receive these updates.

Let’s say planned maintenance is coming up for your cluster. The SRE team should be informed so they can react accordingly or prepare in advance. Similarly, if there is an issue with a transfer pipeline, the data engineering team should be notified.

Managed ClickHouse service enhancements

- We now support up to 7 replicas for large configurations and extreme workloads.

- For those who need to maximize ClickHouse speed and prefer to store data on SSD disks, we have increased the maximum disk size to 16 TB per node, with the maximum throughput provided by gp3 and pd-balanced disks.

- ClickHouse compatibility with the MySQL and PostgreSQL protocols has improved greatly over the past year. Therefore, all newly created ClickHouse clusters on DoubleCloud will have this feature enabled, allowing users to connect through ports 3306 (MySQL) and 5432 (PostgreSQL).
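For example, connection URLs for MySQL- and PostgreSQL-compatible clients could be assembled like this. This is a sketch under assumptions: the host name, user, password, and database are placeholders, the URL style is the one many clients and drivers accept, and any TLS or driver-specific options your setup requires are left out. Only the two port numbers come from the announcement above.

```python
from urllib.parse import quote

MYSQL_PORT = 3306      # MySQL wire protocol port mentioned above
POSTGRES_PORT = 5432   # PostgreSQL wire protocol port mentioned above


def _userinfo(user: str, password: str) -> str:
    """Percent-encode credentials so characters like '@' or ':' stay unambiguous."""
    return f"{quote(user, safe='')}:{quote(password, safe='')}"


def mysql_url(host: str, user: str, password: str, database: str = "default") -> str:
    """Build a URL for a MySQL-protocol client pointed at a ClickHouse cluster."""
    return f"mysql://{_userinfo(user, password)}@{host}:{MYSQL_PORT}/{database}"


def postgres_url(host: str, user: str, password: str, database: str = "default") -> str:
    """Build a URL for a PostgreSQL-protocol client pointed at a ClickHouse cluster."""
    return f"postgresql://{_userinfo(user, password)}@{host}:{POSTGRES_PORT}/{database}"


# Placeholder values; substitute your own cluster host and credentials.
HOST = "my-cluster.example.com"
mysql_dsn = mysql_url(HOST, "admin", "p@ss")
postgres_dsn = postgres_url(HOST, "admin", "p@ss")
```

The resulting strings can then be handed to whichever MySQL or PostgreSQL client library you already use.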

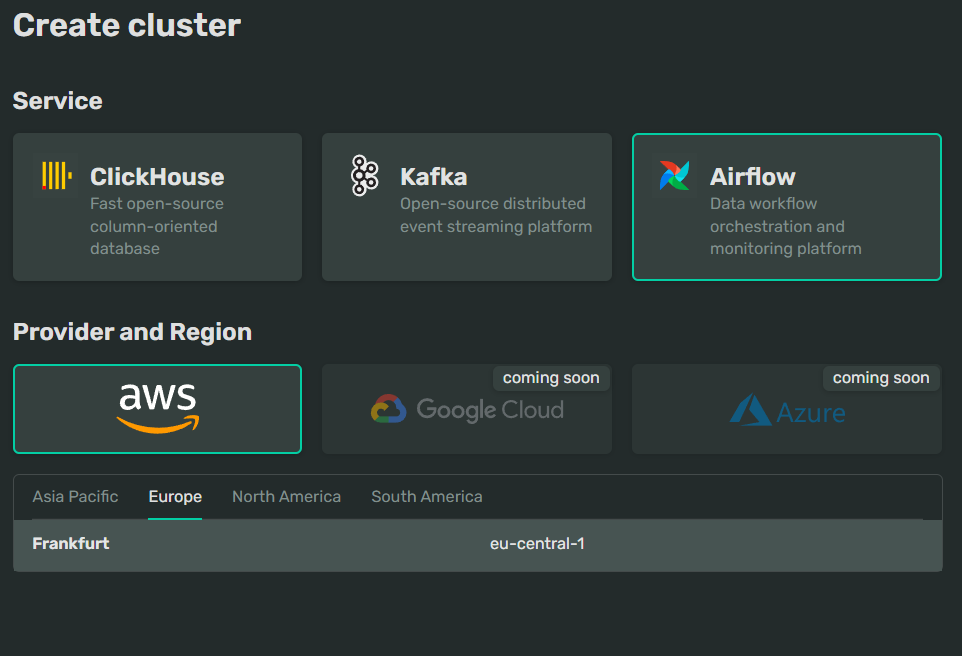

Apache Airflow managed service in preview

As we announced a few weeks ago, we have started a preview of our new managed service for Apache Airflow. You can request access in the console. This service includes out-of-the-box integration with Git, auto-scaling worker nodes, and a super quick setup process, all on top of everything you expect from a managed service.

Quality of life improvements

Here’s a list of some minor but potentially pivotal improvements that simplify workflows and day-to-day tasks:

- Bulk edit of IP CIDRs in allowed lists: You can now add or change entries in the allowed list in bulk. This shortens wait times and allows all changes to be made in one step.

- Billing permissions: To accommodate scenarios where you need to restrict access to billing information or grant access exclusively to someone in your billing department, we have added a new type of permission related to billing.

- Improved UX for adding elements to dashboards: Experience a more streamlined process through the new panel for adding elements to your dashboard.
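If you plan to use the bulk edit for allowed lists, it can help to tidy up the CIDRs before pasting them in. Here is a minimal sketch using Python's standard `ipaddress` module; the function name `normalize_allow_list` and the sample entries are made up for illustration, and this is a local pre-processing step rather than anything the console itself runs.

```python
import ipaddress


def normalize_allow_list(entries: list[str]) -> list[str]:
    """Validate CIDR strings, drop duplicates, and merge adjacent ranges.

    Raises ValueError if any entry is not a valid IPv4 or IPv6 network.
    """
    networks = [
        # strict=False accepts set host bits, e.g. 10.0.0.5/24 -> 10.0.0.0/24
        ipaddress.ip_network(entry.strip(), strict=False)
        for entry in entries
    ]
    # collapse_addresses cannot mix IP versions, so handle them separately.
    v4 = [n for n in networks if n.version == 4]
    v6 = [n for n in networks if n.version == 6]
    collapsed = list(ipaddress.collapse_addresses(v4)) + list(
        ipaddress.collapse_addresses(v6)
    )
    return [str(n) for n in collapsed]


raw_entries = [
    "10.0.0.0/25",
    "10.0.0.128/25",     # adjacent to the entry above, merges into a /24
    "10.0.0.5/24",       # same /24 once host bits are cleared
    "192.168.1.10/32",
    " 192.168.1.11/32",  # stray whitespace is tolerated
]
allow_list = normalize_allow_list(raw_entries)
```

Merging ranges this way keeps the list short and makes overlapping or duplicate entries easy to spot before you submit the change.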
