DoubleCloud’s 12th Product Update

Hey everyone! Victor here, with the news for this month:

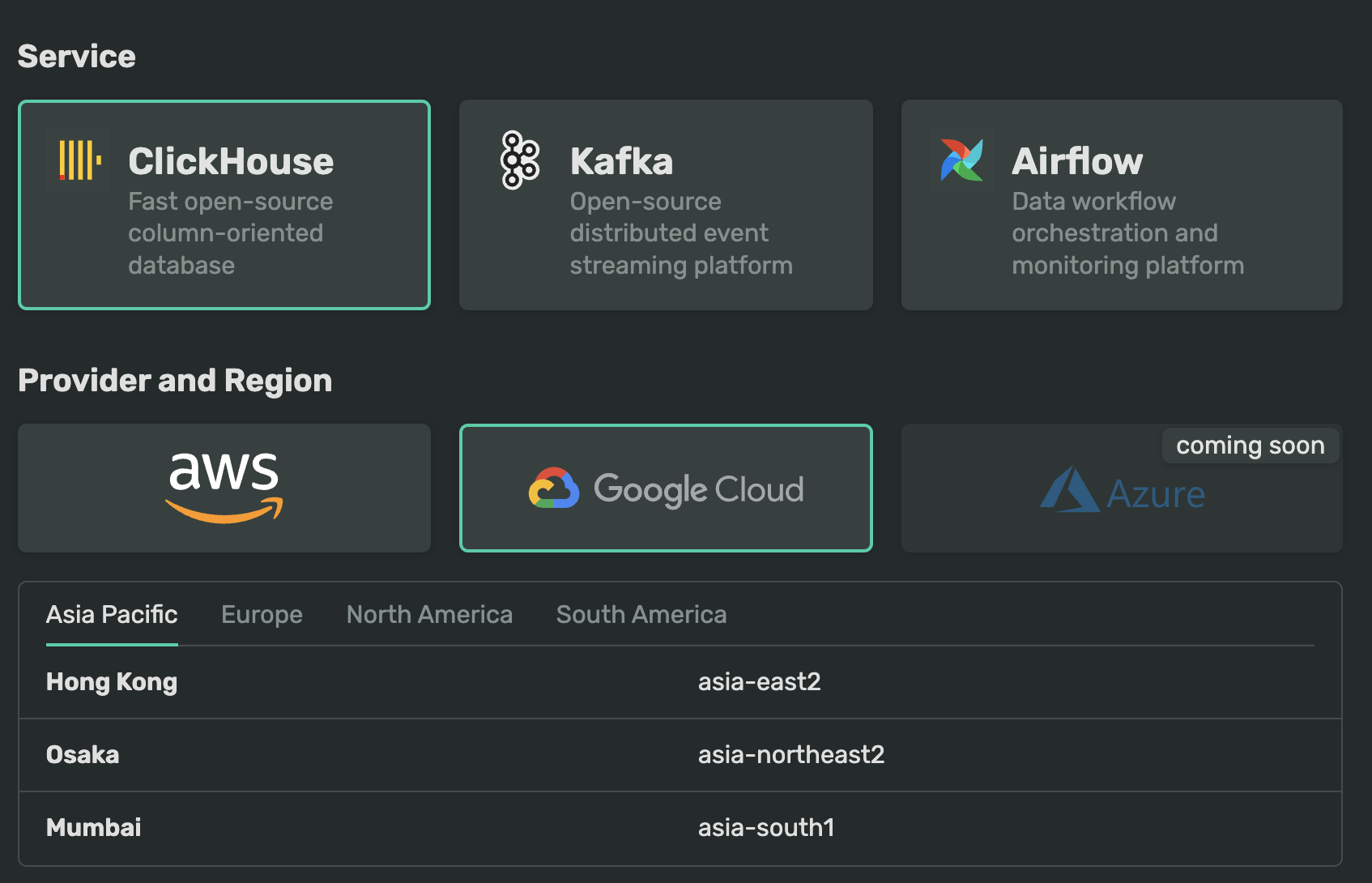

Google Cloud — General availability

Apache Kafka, ClickHouse, and the DoubleCloud Transfer service in dedicated mode now support GCP, and BYOC mode is supported as well. These services are available in eight Google Cloud regions across Asia, South America, North America, and Europe, and you no longer need to fill in an access request form to use them.

On GCP, we use n2d instances based on the AMD Milan architecture, which offer roughly 20-30% better price/performance than the previous generation. We also automatically apply sustained use discounts, so projected end-user costs are approximately 10-15% lower than on AWS.

PCI DSS

Following our recent SOC 2 Type 2 certification, we are proud to announce that DoubleCloud has also achieved PCI DSS (Payment Card Industry Data Security Standard) certification.

This means that DoubleCloud adheres to the highest standards of security in storing, processing, and transmitting cardholder data. Our new PCI DSS certification offers our customers an additional layer of assurance, validating that DoubleCloud safeguards sensitive data at every level, from employee onboarding to payment processing.

Exporting logs to Datadog, Amazon S3 and Coralogix

For users who employ a unified log service like Datadog, or need to collect and store logs for compliance purposes, we now offer the capability to continuously export logs to external services including Datadog, S3, and Coralogix. This allows you to utilize your existing alerting mechanisms or analyze logs using familiar services. More details about this feature are available here.

If you are interested in integrations with other providers, please feel free to reach out to me.

Disk auto-resize for ClickHouse and Apache Kafka (in preview)

To minimize failures caused by insufficient disk space, we have implemented automation that incrementally increases the disk size before a cluster runs out of room for new data.

This feature is now available for ClickHouse, the Keeper sub-cluster, and Apache Kafka. To activate it, set an upper limit for the disk size. When disk usage exceeds 95%, the system automatically scales the disk up to the next size level, without going beyond the limit you set. For more detailed information on how this works, please refer to the link provided.
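To make the rule concrete, here is a minimal sketch of the decision described above. It is only an illustration, not our actual implementation: the `Disk` class, the `next_disk_size` function, and the 32 GB step are hypothetical, and the real size levels may differ. Only the 95% threshold and the user-set upper limit come from the feature itself.

```python
from dataclasses import dataclass

# From the text: a resize is triggered when disk usage exceeds 95%.
USAGE_THRESHOLD = 0.95


@dataclass
class Disk:
    size_gb: int      # current provisioned size
    used_gb: float    # space currently in use
    max_size_gb: int  # upper limit set by the user; enables auto-resize


def next_disk_size(disk: Disk, step_gb: int = 32) -> int:
    """Return the disk size after one auto-resize check.

    step_gb is an assumed increment standing in for "the next level".
    """
    usage = disk.used_gb / disk.size_gb
    if usage <= USAGE_THRESHOLD:
        # Enough free space: leave the disk as it is.
        return disk.size_gb
    # Grow by one step, but never beyond the user-defined upper limit.
    return min(disk.size_gb + step_gb, disk.max_size_gb)
```

For example, under these assumptions a 100 GB disk with 96 GB used and a 200 GB limit would grow by one step, while a disk that has already reached its limit stays at that size.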

New-generation instances on AWS

We have added a new generation of instances on AWS, including ARM, AMD, and Intel-based options, in all available regions. Our s2-series instance types, based on the c6a and m6a types, use AMD EPYC processors (codenamed Milan) and offer a 20-35% improvement in price/performance compared to the previous generation. Similarly, our ARM-based g2-series now runs on c7g and m7g instance types, delivering a 25% boost in price/performance compared to its predecessors.

If you are currently on s1 or g1 types, you can continue using them, but we strongly recommend upgrading your instances. For high-availability configurations, this can be done with just a few clicks in the console without interrupting your services. More details about new instances can be found here.

New 23.12 version of ClickHouse available on DoubleCloud

In keeping with our commitment to offering the latest versions of ClickHouse, we now support the newly released version 23.12 on DoubleCloud. My favorite feature in this update is the introduction of Refreshable Materialized Views. This feature lets you schedule updates for a materialized view, supporting scenarios such as refreshing the view with fresh data from multiple tables or recreating a current snapshot of data. The complete changelog is available here.
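As a rough illustration of what scheduling a refresh looks like, the sketch below builds a `CREATE MATERIALIZED VIEW ... REFRESH EVERY ...` statement as a string. The helper `refreshable_mv_ddl` and the table names `orders` and `daily_orders_snapshot` are hypothetical, chosen only for this example. As far as I know the feature was still experimental in 23.12 and may need to be enabled through a setting, so please check the changelog before relying on it.

```python
def refreshable_mv_ddl(view: str, target: str, select_sql: str,
                       every: str = "1 HOUR") -> str:
    """Build the DDL for a refreshable materialized view.

    The view re-runs select_sql on the given schedule and writes the
    result into the target table.
    """
    return (
        f"CREATE MATERIALIZED VIEW {view}\n"
        f"REFRESH EVERY {every}\n"
        f"TO {target}\n"
        f"AS {select_sql}"
    )


# Hypothetical example: rebuild a per-day snapshot of orders every hour.
ddl = refreshable_mv_ddl(
    view="daily_orders_mv",
    target="daily_orders_snapshot",
    select_sql=(
        "SELECT toDate(created_at) AS day, count() AS orders "
        "FROM orders GROUP BY day"
    ),
)
print(ddl)
```

The resulting statement can then be run with whichever ClickHouse client you already use.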

Quality of life improvements

Here’s a list of some minor but potentially pivotal improvements that simplify workflows and day-to-day tasks:

- Cluster creation and changes are now significantly faster, taking 5-7 minutes on average compared to the previous 12 minutes.

- We have introduced additional charts in the Monitoring section for ClickHouse, including Disk IOPS and Disk Throughput. These metrics help you determine whether your disk resources are sufficient for your workload.

- You can now filter and search logs on the Logs page.

- The ChatGPT model used in AI insights now supports up to 128k tokens, up from 8k.

- You can now select a source ClickHouse from the drop-down menu when creating a connection in the Visualization service, eliminating the need to provide connection strings.
