(Source: ClickHouse)

Best practices for data transformation as pipeline complexity grows

Modern data platforms revolve around data transformation.

That is the entire point of data pipelines–transforming raw data into actionable insights. However, data transformation is also a gigantic process, ranging from simple cleaning and formatting to complex aggregations and machine learning models.

The correct transformation approach is crucial for efficiency, scalability, and data integrity. If you get this wrong, you risk creating a bottleneck that can slow down your entire data operation, lead to inconsistent or unreliable results, and make it difficult to adapt to changing business needs or scale your data infrastructure.

If you get it right, though, you can easily handle increasing data volumes and complexity, deliver consistent, high-quality insights across your team, and provide a solid foundation for advanced analytics like AI and machine learning.

Here, we want to explain how we think about data transformation best practices at DoubleCloud, concentrating on each level of transformation as your pipeline complexity grows.

Always start with ingestion-level transformations

Starting at the start may seem obvious, but teams can easily skip this step. They ingest vast amounts of data and then pump it down the pipeline without considering the problems this will cause. These problems can be segmented into two categories.

Data quality

“Garbage In, Garbage Out” is a famous aphorism in data analytics that is starting to be forgotten. With the advent of AI and machine learning, teams can get into the habit of thinking that these models can easily work around or take care of any data quality issues.

But the refrain continues to be true. The better the quality of your data at the beginning of the pipeline, the better the quality of your insights at the end. Here are some first-line-of-defense transformations that can make it easier to work with your data downstream:

1. Data type validation: Incoming data must adhere to expected data types, such as converting strings to appropriate numeric or date formats, parsing JSON or XML structures, or casting boolean values to a standardized format. This reduces the need for error handling and type conversions in the later stages of the pipeline.

2. Null value handling: You can deal with null or missing values using imputation, flagging, or removal. Null value handling is required to ensure that downstream analysis and pipelines don’t break due to unexpected null values.

3. Deduplication: Deduplication, removing duplicate records at the ingestion stage, improves data quality and reduces storage costs. Though you can brute-force this at scale, depending on your data characteristics and business rules, you must consider fuzzy matching techniques or libraries such as dedupe.

4. Trimming and cleaning: Remove leading/trailing whitespaces, control characters, or other unwanted artifacts from string fields. This seemingly simple step can prevent numerous issues downstream, such as unexpected behavior in string comparisons or joins.

5. Schema validation: This ensures the incoming data structure matches the expected schema, handling any discrepancies or schema evolution. Robust schema validation can prevent data inconsistencies. You might want to implement versioning for your schemas to ensure your pipeline can adapt to evolving data structures without breaking.

6. Referential integrity checks: Verify that foreign key relationships are maintained, especially when ingesting data from multiple sources. This maintains logical consistency of your data across different tables or datasets. Implement checks that validate the existence of referenced keys and handle scenarios where referential integrity might be temporarily broken due to the order of data ingestion (essential in migrations).

All these are for a single reason: improve data quality now to reduce complexity later.

Data security

We left data security checks out of the list above because they require more discussion. You must deal with personal or sensitive data at the ingestion level. This is not just a best practice but often a legal requirement under regulations like GDPR, CCPA, or industry-specific standards like HIPAA.

Here are some key data security transformations to consider at the ingestion stage:

1. Data masking: Apply masking techniques to sensitive fields such as personal identifiers, credit card numbers, or health information. This involves replacing sensitive data with fictitious but realistic data maintaining the data’s format and consistency while protecting individual privacy.

2. Encryption: Implement encryption for highly sensitive data fields. This could involve using robust encryption algorithms to protect data at rest and in transit. Consider using format-preserving encryption for fields where the encrypted data needs to maintain the original format.

3. Tokenization: Replace sensitive data elements with non-sensitive equivalents or tokens. This is particularly useful for fields that must maintain uniqueness without exposing the original data.

4. Data anonymization: For datasets used in analytics or machine learning, consider anonymization techniques that remove or alter personally identifiable information while preserving the data’s analytical value.

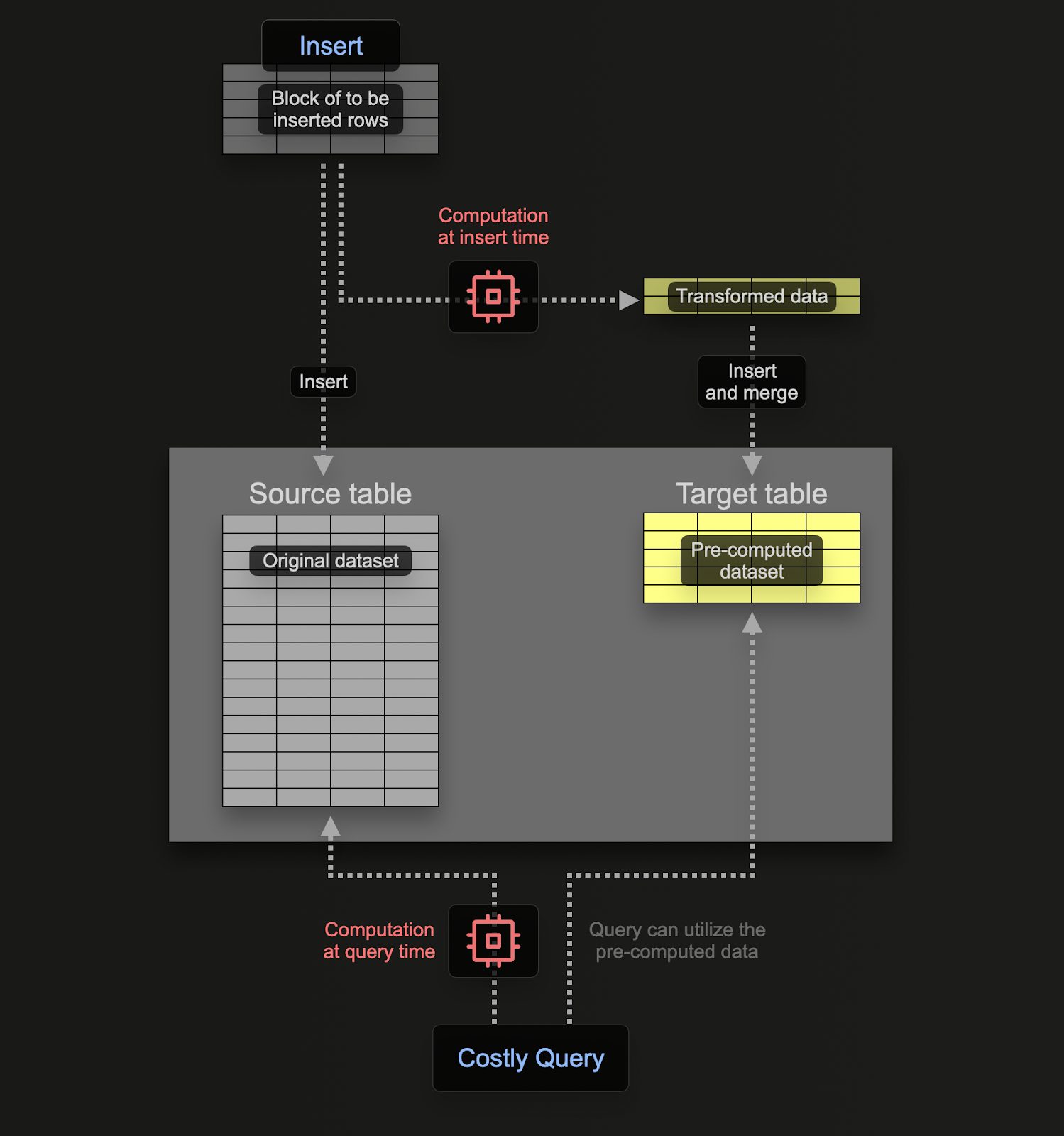

Move to materialized views as data starts to scale

As data volume grows and query complexity increases, simple transformations are still necessary but no longer sufficient.

At this point, data engineers start working with materialized views, which are pre-computed result sets stored for faster query performance.

Quick navigation:

- Always start with ingestion-level transformations

- Move to materialized views as data starts to scale

- Choose dbt when quality and complexity becomes the focus

- Use airflow for the most complex workflows

- 5 tips for every data transformation

- DoubleCloud’s approach to data transformation

- Elevate your data transformation strategy with DoubleCloud

Imagine running an e-commerce platform that generates billions of clickstream events daily. You’ll frequently need to analyze user engagement and sales performance. Instead of running complex queries on this large raw dataset every time, you can create materialized views to pre-compute common aggregations:

CREATE MATERIALIZED VIEW daily_user_activity

ENGINE = SummingMergeTree()

ORDER BY (date, user_id)

POPULATE

AS SELECT

toDate(event_time) AS date,

user_id,

countIf(event_type = 'pageview') AS pageviews,

countIf(event_type = 'click') AS clicks,

countIf(event_type = 'purchase') AS purchases,

sum(purchase_amount) AS total_spent

FROM raw_events

GROUP BY date, user_id;

The view stores only the aggregated data, which updates automatically as source data changes, significantly reducing the amount of data scanned for common queries.

The golden rule for materialized views is to understand your query performance. Materialized views perform best in situations with frequent, expensive queries. In particular, you want to precompute expensive joins and aggregations. To do that, you can use EXPLAIN ANALYZE to understand query costs:

- Analyze execution time: Anything taking several seconds or more could be considered expensive, especially if run frequently.

- Check for Seq Scans on large tables: These are often indicators of expensive operations.

- Compare cost estimates: Higher costs suggest more expensive queries.

- Consider the frequency of the query: Even a moderately expensive query can be problematic if run very often.

Materialized views are a crucial layer in your data transformation pipeline, enabling real-time analytics on massive datasets while maintaining the flexibility to access raw data when needed.

Choose dbt when quality and complexity becomes the focus

At some point, manual transformation isn’t going to cut it. The rule of thumb for when you need to bring in automation to your transformations is when:

-

You have more than five materialized views or

-

when data transformations become too complex to manage manually.

Then, data quality tools such as dbt are needed. dbt is an open-source tool that enables data analysts and engineers to transform data in their warehouses more effectively. Engineers primarily use it for more complex transformations and ensuring data quality.

dbt brings a more developer-like workflow to data transformation. Instead of individual SQL queries that need to be run manually for part of an ad-hoc transformation process, dbt brings structured queries and version control into the mix. Engineers can use dbt to create modular, reusable SQL code that defines how raw data should be transformed into analytics-ready models.

In dbt, you break down complex transformations into smaller, more manageable pieces. This modular approach makes understanding, maintaining, and reusing transformation logic easier. Then, dbt includes built-in functionality for testing your data transformations. You can define tests to ensure data quality, such as checking for null values, unique constraints, or custom business logic.

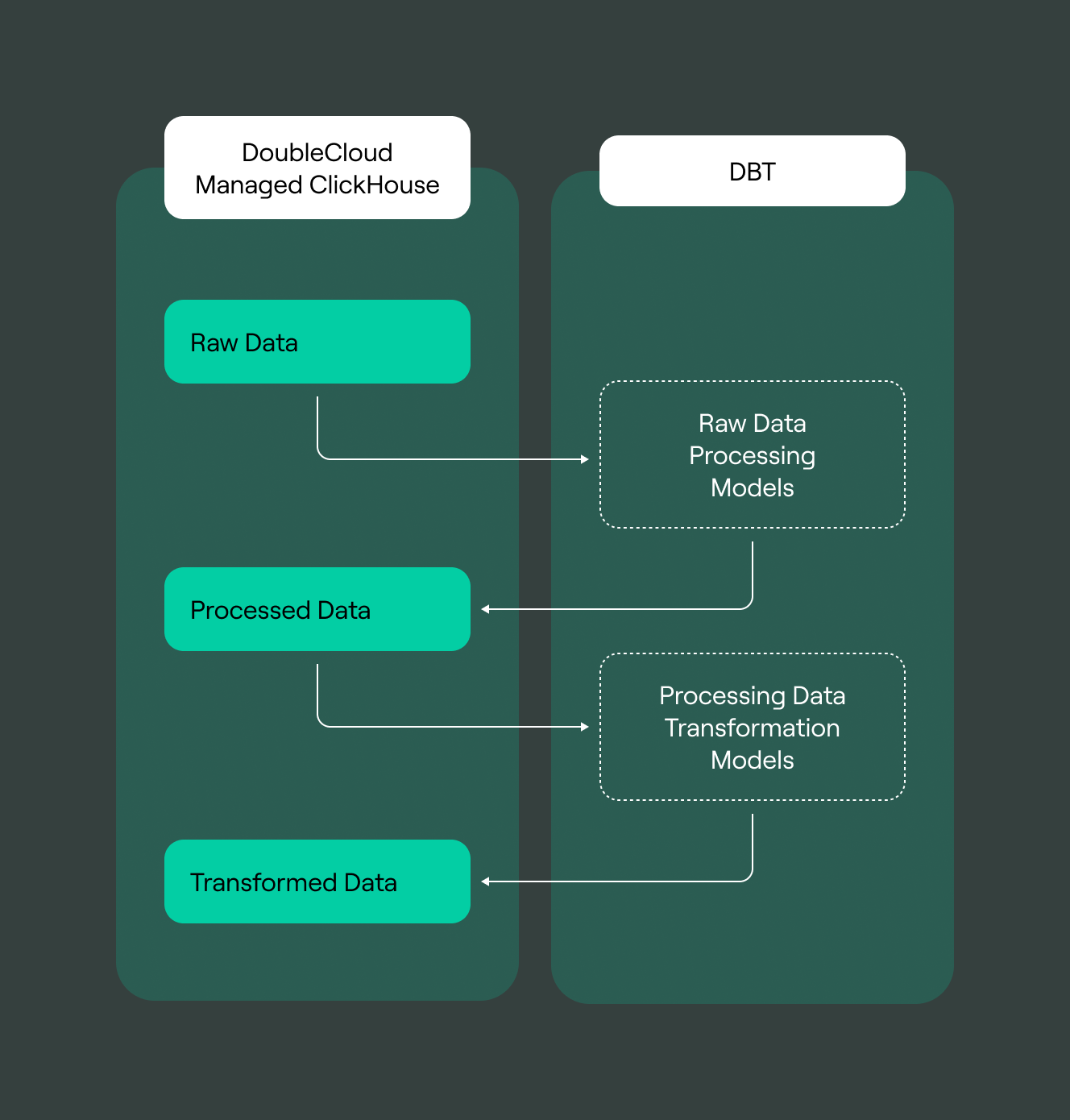

These are the fundamentals we mentioned for the ingestion phase. Even though you might only start using dbt once you have more complexity in your pipelines, you should use it throughout your pipeline once you hit that level of complexity. dbt integrates with DoubleCloud’s Managed ClickHouse cluster to do precisely that, allowing you to build a seamless workflow from data ingestion to transformation:

To get started with dbt in your data transformation workflow:

1. Define your models: Create SQL files that represent your data models, from staging tables that closely represent your raw data to intermediate and final models that power your analytics.

2. Set up tests: Write simple YAML configurations to test your data for nulls, uniqueness, accepted values, and custom business logic.

3. Document your models: Use YAML files and markdown to document your models, making it easier for others to understand and use your transformed data.

4. Create a project structure: Organize your dbt project with a clear folder structure, separating staging models, intermediate models, and final models.

5. Implement CI/CD: Set up continuous integration to run your dbt models and tests automatically when changes are pushed to your repository.

The transition to using dbt should be gradual. Start with a few key models, get comfortable with the workflow, and expand its use across your data transformation process. By adopting dbt, you’re not just automating your transformations but bringing software engineering best practices to your data work. This results in more reliable, maintainable, and scalable data transformations, which is crucial as your data pipeline grows in complexity.

Use Airflow for the most complex workflows

With dbt, you can build workflows, but dbt itself doesn’t handle scheduling. For that, you need an orchestration tool, such as Airflow.

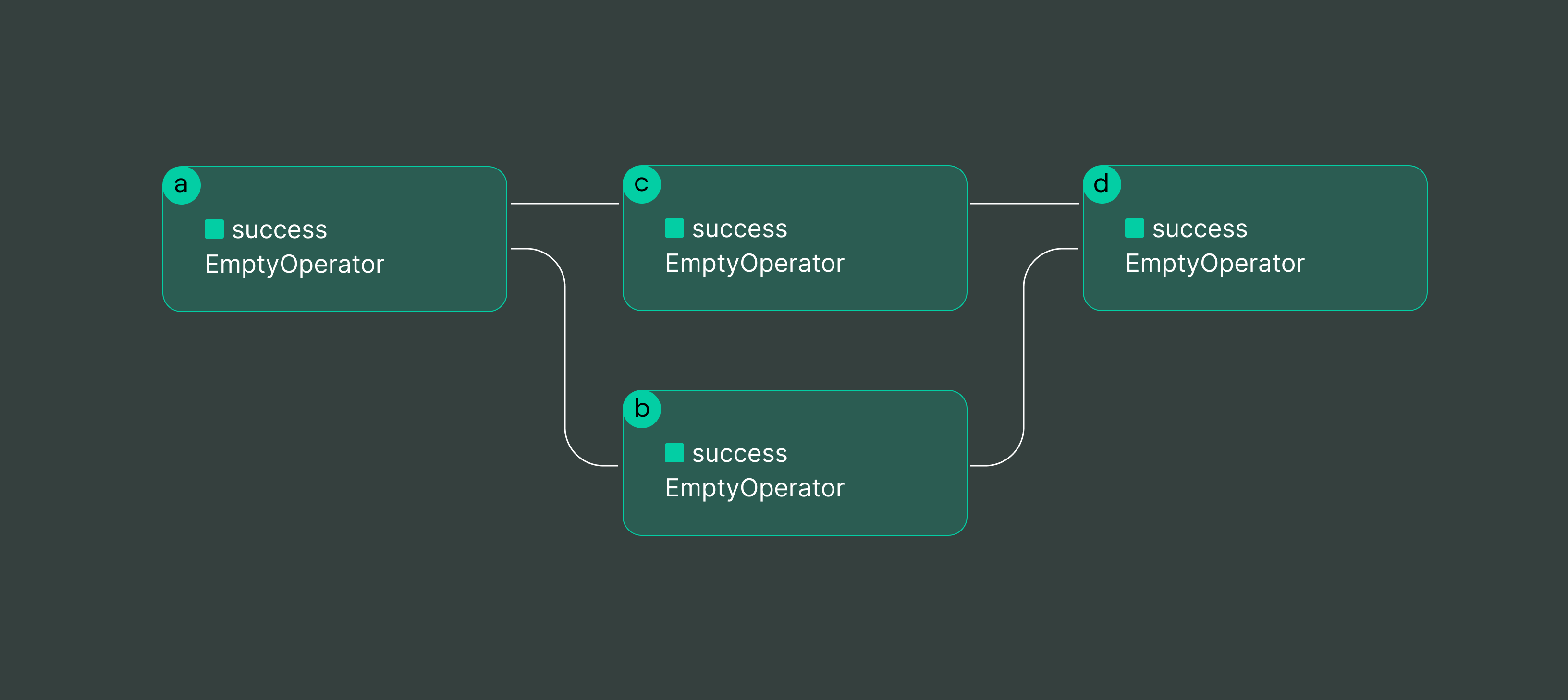

Airflow will allow you to manage dependencies between tasks and schedule complex jobs, give you the flexibility to handle diverse workflows, provide scalability for large-scale data processing, and provide robust error handling and monitoring. Airflow’s “secret sauce” is the Directed Acyclic Graph, or DAG. Airflow leverages DAGs to manage complex workflows and scheduling.

In Airflow, you define your workflow as a DAG using Python code. This lets you programmatically create a structure representing your entire data pipeline or process. Within a DAG, you define individual tasks and their dependencies. Tasks can be anything from running a SQL query to executing a Python function or interacting with an external system. Dependencies determine the order in which tasks should run.

(Source: Apache Airflow)

Each DAG can have a schedule associated with it. This could be as simple as “run daily at midnight” or as complex as “run every 15 minutes on weekdays”. Airflow’s scheduler uses these definitions to determine when to trigger DAG runs. When it’s time for a DAG to run, Airflow’s executor starts processing tasks. It begins with tasks with no dependencies and moves through the graph as tasks are completed, always respecting the defined dependencies.

Because the workflow is defined as a graph, Airflow can quickly identify which tasks can run in parallel. This allows for efficient execution of complex workflows. Airflow keeps track of the state of each task (running, success, failed, etc.). If a task fails, Airflow can automatically retry it based on your configuration.

This approach allows Airflow to handle everything from simple linear workflows to complex branching processes with multiple dependencies and parallel execution paths. It’s ideal for the most complex data engineering scenarios where you might need to orchestrate ETL processes, run machine learning pipelines, or manage complex data transformation workflows.

5 tips for every data transformation

While we’ve covered specific approaches for different stages of data pipeline complexity, some overarching principles apply universally.

1. Simplicity and maintainability as key goals

Always strive for simplicity in your transformations. Complex transformations might seem impressive, but they often lead to headaches down the road. Here’s why simplicity matters:

-

Easier debugging: When issues arise (and they will), more straightforward transformations are quicker to troubleshoot.

-

Improved maintainability: As your team evolves, simpler code is easier for new team members to understand and modify.

-

Reduced error risk: Complex transformations introduce more points of failure. Keeping things simple minimizes this risk.

Implement this by breaking down complex transformations into smaller, manageable steps. Use clear naming conventions and add comments to explain the logic behind each transformation. Remember, the goal is not just to transform data but to do so in a sustainable and scalable way as your data needs grow.

2. Denormalization in data warehousing

In traditional database design, normalization is king. But denormalization often takes the crown in the world of data warehousing and analytics. Here’s why:

-

Faster queries: Denormalized data requires fewer joins, significantly speeding up query performance.

-

Simpler joins: When joins are necessary, they’re typically simpler in a denormalized model.

-

Improved read performance: Analytics workloads are often read-heavy, and denormalization optimizes for reads.

Denormalization isn’t without its trade-offs. You’re storing redundant data, which increases storage needs, and updating denormalized data requires careful management to maintain consistency.

3. Performance considerations for real-time analytics

Optimizing your transformations for low-latency queries becomes crucial when building for real-time insights. As discussed earlier, materialized views can significantly speed up common queries, but that isn’t the only performance optimization you can make.

Firstly, you can consider your own data transformation strategy. Do you need to reprocess all data? Or can you focus on transforming only the new or changed data?

Second, can you take advantage of tooling for better optimization? This might be in-memory stores for the most time-sensitive operations or properly partitioned and indexed data to improve query performance.

Remember, real-time doesn’t always mean instantaneous. Understand your actual latency requirements and optimize accordingly. Sometimes, “near real-time” is sufficient and much easier to achieve.

4. Security and compliance throughout the transformation process

Data security isn’t just an ingestion-level concern. It needs to be baked into every stage of your data pipeline. Here’s how:

-

Data encryption: Ensure data is encrypted at rest and in transit, even during transformation processes.

-

Access controls: Implement fine-grained access controls. Not everyone needs access to all transformed data.

-

Audit trails: Maintain detailed logs of all data transformations for compliance and troubleshooting.

-

Data lineage: Track the origin and transformations of each data element. This is crucial for both compliance and data governance.

5. Consider the end-use

Finally, always consider the end-use of your data. Your transformation strategy should be tailored to how the data will ultimately be consumed.

Do you have a data contract or SLA with downstream users that decides latency or freshness? Establish clear data contracts with downstream users. These should define expectations for data quality, freshness, and availability. For instance, a real-time dashboard might require 99.9% uptime and data no older than 5 minutes, while a monthly report might tolerate 24 hours of lag.

Remember, the data team’s job is to ship insights, not data. Align your transformation strategy with key business metrics and decision-making processes. Understand which transformations directly support critical business KPIs and prioritize these. This approach transforms data engineering from a support function into a strategic driver of business value, directly tying your technical decisions to business outcomes and user satisfaction.

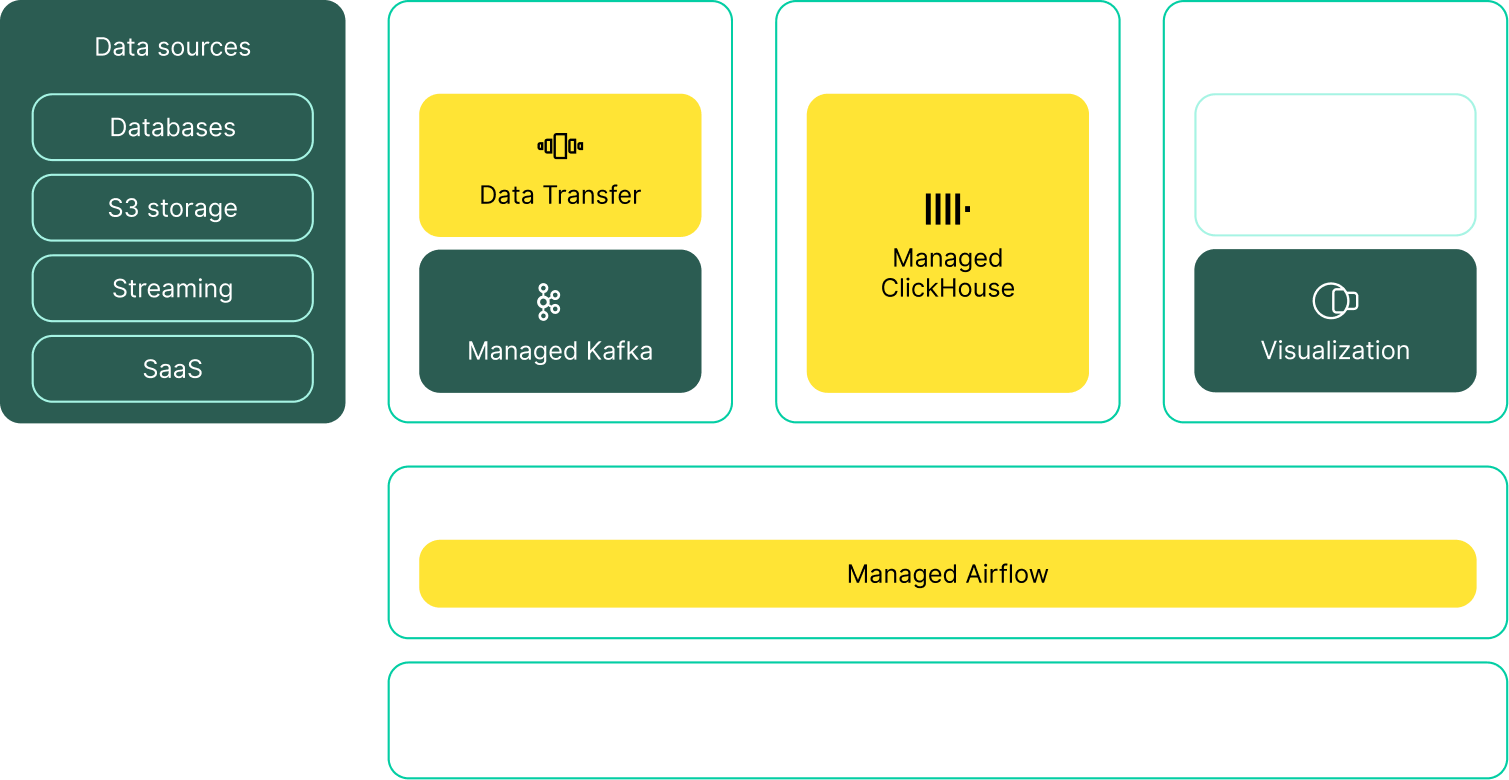

DoubleCloud’s approach to data transformation

Effective data transformation is the cornerstone of any successful data strategy. At DoubleCloud, our approach is designed around transformation to meet the needs of modern data teams, from simple ETL processes to complex, real-time analytics pipelines.

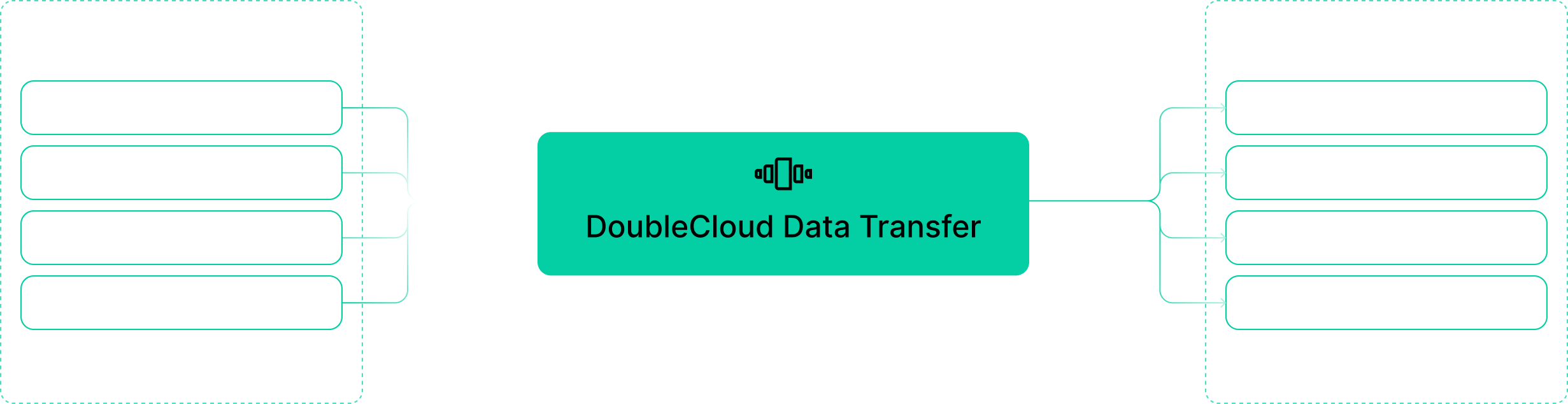

In particular, DoubleCloud Data Transfer is designed to be a simple data integration solution that excels at extracting, loading, and transforming data. It is nine times faster than Airbyte and ideal for working with ClickHouse.

With high-speed data ingestion into ClickHouse, Data Transfer can reach up to 2 GB/s, making it an ideal choice for organizations looking to build customer-facing or real-time analytics solutions.

This lets you move data in any arrangement, giving you complete control over your data flow. This flexibility is crucial for complex data transformation pipelines. DoubleClod Data Transfer natively integrates with dbt, allowing users to quickly transform, clean, and summarize data for analysis. This integration enhances data modeling capabilities and improves team collaboration to build out the data products your organization needs.

DoubleCloud’s approach to data transformation aims to provide a comprehensive, efficient, and cost-effective solution for modern data teams. DoubleCloud Data Transfer is designed to meet data transformation needs while optimizing for performance and cost, whether you’re dealing with simple ETL processes or complex, real-time analytics pipelines.

Elevate your data transformation strategy with DoubleCloud

Data transformation is a strategic imperative for any organization looking to harness the full power of its data. From ingestion-level transformations to complex orchestration with tools like Airflow, each stage of your data pipeline requires careful consideration and planning.

The best practices form the backbone of a robust data transformation strategy. By implementing these practices, your data team can produce the insights the rest of your organization requires. Building your data solutions on DoubleCloud infrastructure gives you the ability to:

-

Scale your data pipelines to handle growing volumes and complexity

-

Optimize performance with ClickHouse-native tools and high-speed data ingestion

-

Maintain data quality and security throughout the transformation process

-

Streamline your workflow with built-in dbt integration for advanced transformations

This is all necessary for robust data transformation. You can get started right away with DoubleCloud to move your data, or you can reach out to the DoubleCloud team to tell us more about your data needs.

Get started with DoubleCloud