Use Visualization API

This quick start guide explains how to create a workbook, a connection,and all the Visualization assets within it using DoubleCloud API.

Before you start

To start working with DoubleCloud API, do the following:

-

Create a service account:

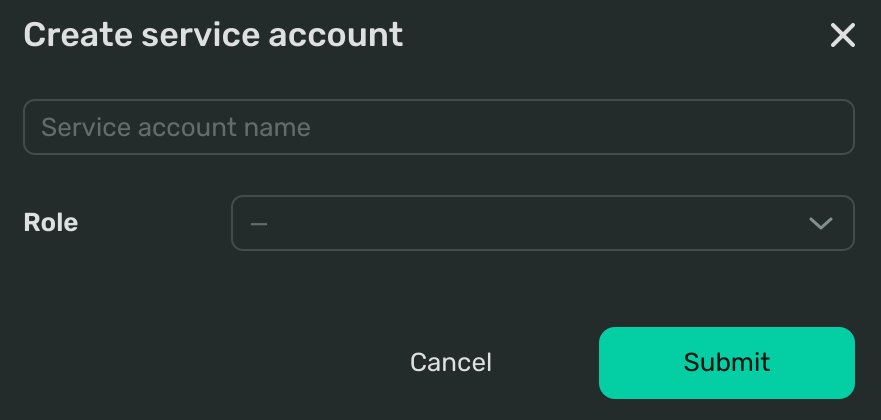

- Go to the Service accounts tab of the Members page in the console. You'll see the following dialog:

-

Name your service account.

-

From the drop-down menu, select the Admin user role - we will need both read and write access.

-

Click Submit. You'll see your new service account appear on the list.

-

Issue an API key for your service account:

-

Go to the Service accounts tab of the Members page in the console.

-

Open the information page of the service account for which you want to create an API key.

-

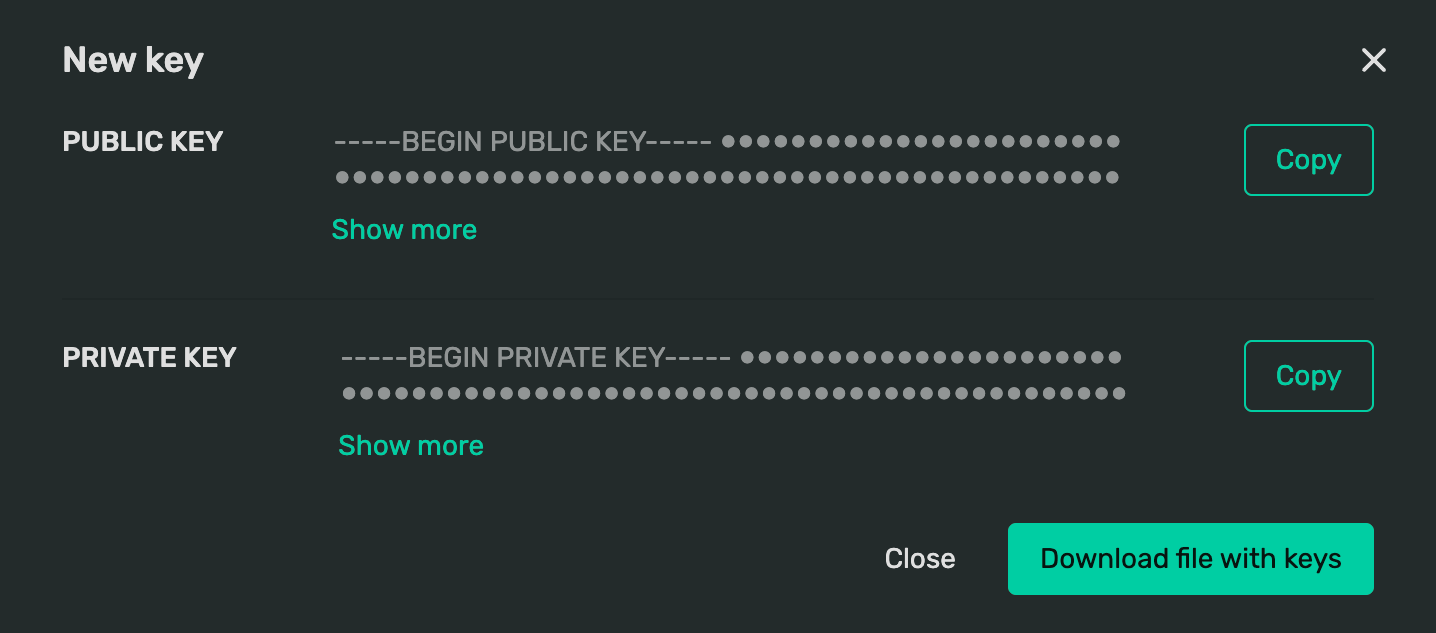

Under API keys, click Create key to create you account's first Secret key. You'll see the following dialog:

-

Click Download file with keys. You'll use it to authenticate API requests.

-

Import the necessary dependencies

Note

Here and below the code segments are presented as parts of the whole. You can find the full DoubleCloud API SDK code listing at the end of this tutorial.

For this scenario, you'll need to import the following libraries, services and methods:

import argparse

import json

import logging

from google.protobuf.json_format import MessageToDict

from google.protobuf.wrappers_pb2 import BoolValue

import doublecloud

from doublecloud.v1.operation_pb2 import Operation

from doublecloud.visualization.v1.workbook_pb2 import (

Connection,

Dataset,

PlainSecret,

Secret,

Workbook,

)

from doublecloud.visualization.v1.workbook_service_pb2 import (

AdviseDatasetFieldsRequest,

CreateWorkbookConnectionRequest,

CreateWorkbookRequest,

DeleteWorkbookConnectionRequest,

DeleteWorkbookRequest,

UpdateWorkbookRequest,

)

from doublecloud.visualization.v1.workbook_service_pb2_grpc import WorkbookServiceStub

Create a workbook

To create a workbook, use the doublecloud.visualization.v1.WorkbookService Create method:

-

project_id- the ID of the project in which you want to create a workbook. You can get this value on your project's information page. -

workbook title- specify the title of your workbook.

Let's describe the workbook:

def create_workbook(svc, project_id: str, name: str) -> Operation:

"""

Function creates an empty workbook

We will fill it with other functions

"""

return svc.Create(CreateWorkbookRequest(project_id=project_id, workbook_title=name))

Create a connection

DoubleCloud Visualization API allows to create two connection types:

For this tutorial, create a connection to a special Managed Service for ClickHouse® demo cluster:

Use the Connection API model and specify the ClickhouseConnection configuration:

-

kind: clickhouse- specify the connection type. -

raw_sql_level- set the lowest level for an SQL database interaction. This is anenumwith three options:off,subselectanddashsql. -

cache_ttl_sec- (optional) specify how long to store cache (in seconds). -

host- this is the name of the ClickHouse® to which you want to connect. -

port- this is the host's interface port (8443by default ). -

username- the ClickHouse® cluster's username. -

secure- abooleanto enable/disable TLS

Let's describe this connection using our Python SDK:

def create_workbook_connection(svc, workbook_id: str, connection_name: str) -> Operation:

"""

A special sample database, that available from all projects

You can create this connection by your own and

try all of Double.Cloud Visualisation features

Don’t work with secrets like that for your environments.

It's just an example. Ask your system administrator for your secrets provider.

"""

connection = Connection()

connection.config.struct_value.update(

{

"kind": "clickhouse",

"cache_ttl_sec": 600,

"host": "rw.chcpbbeap8lpuv24hhh4.at.double.cloud",

"port": 8443,

"username": "examples_user",

"secure": True,

"raw_sql_level": "off",

}

)

operation = svc.CreateConnection(

CreateWorkbookConnectionRequest(

workbook_id=workbook_id,

connection_name=connection_name,

connection=connection,

secret=Secret(plain_secret=PlainSecret(secret="yahj@ah5foth")),

)

)

return operation

Create Visualization assets

Now that you have a connection to the database, describe all the assets to be created. The sequence of assets description is crucial for your request to be processed correctly.

Describe a dataset

An essential part of the dataset description is the listing of all the data fields ingested to it from the source. This can be a time-consuming task.

To avoid unnecessary complication of the creation process, get the listing of all the data fields on the source when creating a dataset:

def advise_dataset_fields(svc, workbook_id: str, sources: list, connection_name: str):

"""

Function helps to define automatically all fields, their names/IDs and types

based on underlying datasource (table, view, SQL query, etc.).

ID of fields will be equals to column names.

You can use define them manually or use this handler to simplifying for popular cases

"""

dataset = Dataset()

dataset.config.struct_value.update(

{

"fields": [],

"avatars": None,

"sources": sources,

}

)

return svc.AdviseDatasetFields(

AdviseDatasetFieldsRequest(

workbook_id=workbook_id,

connection_name=connection_name,

partial_dataset=dataset,

)

)

Configure the data source specification

To return our connection as a dataset source, we need to describe the data source type:

-

id- specify the data source ID. It must be unique within the dataset. -

title- the data source title. -

connection_ref- the connection'snamefrom which to get a data source. -

spec- data source specification:-

kind-sql_table, use a SQL table as a data source. -

db_name- the name of the database on your ClickHouse® cluster. -

table_name- the name of the table to use as a data source.

-

def get_clickhouse_table_dataset_sources(*, connection_name: str, db_name: str, table_name: str) -> list:

"""

Function returns a dataset source as DB table or view.

You can use another datasource types:

https://double.cloud/docs/en/public-api/api-reference/visualization/configs/DataSourceSpec

And another connection types:

https://double.cloud/docs/en/public-api/api-reference/visualization/configs/Connection

"""

return [

{

"id": "production_marts",

"title": "hits_sample",

"connection_ref": connection_name,

"spec": {"kind": "sql_table", "db_name": db_name, "table_name": table_name},

}

]

Create charts

For this tutorial, create three charts:

-

An indicator.

Let's describe each of these charts using our API SDK:

For a complete description of all the fields below, see the API section of the Column chart reference.

def a_column_chart(name: str, *, dataset_name: str) -> dict:

"""

This is an example of column chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-a-column-chart

"""

return {

"chart": {

"ad_hoc_fields": [

{

# Here we add a field with applied aggregation to chart

# This is equal to adding a field to a dataset. But only when used with this chart.

"field": {

"description": None,

"id": "time_spent_sum",

"cast": "float",

# Example of a calculated field

# https://double.cloud/docs/en/data-visualization/concepts/calculated-fields

# Note that in API fields should be referenced by ID, not title

"calc_spec": {

"kind": "id_formula",

"formula": "SUM([Time_Spent])",

},

"aggregation": "none",

"hidden": False,

"title": "Time Spent Sum",

},

"dataset_name": dataset_name,

}

],

"visualization": {

"y": [

{

"source": {

"kind": "ref",

"id": "time_spent_sum",

}

}

],

"kind": "column_chart",

"sort": [],

"coloring": {

"mounts": [],

"palette_id": None,

"kind": "dimension",

"source": {"kind": "ref", "id": "browser"},

},

"x": [{"source": {"kind": "ref", "id": "date"}}],

},

"datasets": [dataset_name],

},

"name": name,

}

For a complete description of all the fields below, see the API section of the Donut chart reference.

def a_donut_chart(name: str, *, dataset_name: str) -> dict:

"""

Example of a donut chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-a-donut-chart

"""

return {

"chart": {

"ad_hoc_fields": [

# Here we add a field with applied aggregation to a chart.

# This is equal to adding field to dataset. But only when used with this chart.

{

"field": {

"description": None,

"id": "browser_count_unique",

"cast": "string",

"title": "Browser Count Unique",

# Example of direct field with applied aggregation

"aggregation": "countunique",

"calc_spec": {"kind": "direct", "avatar_id": "production_marts", "field_name": "Browser"},

"hidden": False,

},

}

],

"visualization": {

"kind": "donut_chart",

"sort": [],

"coloring": {

"mounts": [],

"palette_id": None,

"source": {"kind": "ref", "id": "technology"},

},

"measures": {

"source": {

"kind": "ref",

"id": "browser_count_unique",

}

},

},

"datasets": [dataset_name],

},

"name": name,

}

For a complete description of all the fields below, see the API section of the Indicator reference.

def an_indicator_chart(name: str, *, dataset_name: str) -> dict:

"""

Example of an indicator chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-an-indicator

"""

return {

"chart": {

"ad_hoc_fields": [],

"visualization": {

"field": {

"source": {

"kind": "ref",

"id": "hits_count",

}

},

"kind": "indicator",

},

"datasets": [dataset_name],

},

"name": name,

}

Create a dashboard

Now that you have the charts, create a dashboard where to place and display them.

Every dashboard must contain at least one tab:

-

If you have a single tab, it won't show on the dashboard. Consider this tab to be your dashboard's charting space.

-

If you have more than one tab in your dashboard, the switching controls will show.

The structure of the dashboard description is the following:

-

Describe the tab:

-

title- the string with the tab title. -

id- tab's ID, must be unique within the dashboard. -

items-elements, the tab can contain all the available dashboard elements.

-

-

Specify the dashboard's

name.

Now, let's describe it using our API SDK:

def get_single_tab_dashboard(dashboard_name: str, elements: list[dict]) -> list:

return [

{

"dashboard": {

"tabs": [

{

"title": "Tab 1",

"id": "qG",

"items": elements,

"ignored_connections": [],

}

]

},

"name": dashboard_name,

}

]

Create a selector

Selectors allow you to filter values of charts on a dashboard. Each selector works only with charts that are in the same tab with it.

Describe a multiple-choice selector:

-

kind-control_multiselectto be able to select multiple values. -

show_title- abooleanwhich toggles the display of the selector's title on the dashboard. -

title- a string containing the selector's title. -

source- describe the data source:-

kind-dataset_field, the selector will get its values from a specified field in the dataset. -

dataset_name- the name of the dataset to which you connect the selector. -

field_id- the ID of the field from which the selector will get its list of values.

-

-

comparison_operation- anenumthat triggers a comparison function. For this tutorial, specifyNone(default value isIN). -

default_value- specifies the selector's default value when the dashboard is loaded. -

id- the selector's element ID. It must be unique within the workbook. -

placement- describes the position of the element with integer numbers for the following coordinate values:Tip

The placement grid consists of

36columns, each height unit equals18pixels.XandYparameters start from0.-

x- the position on the horizontal axis. -

y- the position on the vertical axis. -

w- the width of the element. -

h- the height of the element.

-

def dash_element_selector_by_dataset_field(

element_id: str,

title: str,

dataset_name: str,

field_id: str,

# Element placement

# See details here: https://double.cloud/docs/en/public-api/api-reference/visualization/configs/Dashboard

x: int,

y: int,

h: int,

w: int,

):

return {

"element": {

"kind": "control_multiselect",

"show_title": True,

"title": "Miltiselect by field",

"source": {

"kind": "dataset_field",

"dataset_name": dataset_name,

"field_id": field_id,

},

"comparison_operation": None,

"default_value": None,

},

"id": element_id,

"placement": {"x": x, "y": y, "h": h, "w": w},

}

Create a method to modify the workbook configuration

As the DoubleCloud Visualization API uses the declarative language concept to create its assets structure, you need to update the workbook every time a new asset is created.

Let's use our SDK to create this method:

def modify_workbook(svc, workbook_id: str, workbook_config: dict) -> Operation:

"""

Function rewrites rewrites workbook with declarative description,

which usually given from `describe_workbook` method.

"""

wb = Workbook()

wb.config.struct_value.update(workbook_config)

return svc.Update(

UpdateWorkbookRequest(

workbook_id=workbook_id,

workbook=wb,

force_rewrite=BoolValue(value=True),

)

)

Now you need to create a new workbook configuration.

For this tutorial, we'll use our SDK to create code that'll automatically describe all the assets that a new workbook state might have:

def get_workbook_config_with_single_dataset(

dataset_name: str,

ds_fields: list = None,

ds_avatars: dict = None,

ds_sources: list = None,

charts: list = None,

dashboards: list = None,

) -> dict:

"""

Function returns a declarative structure of a sample workbook with single dataset.

Structure used for changing workbook config through API

"""

return {

"datasets": [

{

"name": dataset_name,

"dataset": {

"fields": ds_fields or [],

"avatars": ds_avatars or None,

"sources": ds_sources or [],

},

}

],

"charts": charts or [],

"dashboards": dashboards or [],

}

Build the assets

Now that you've created all the descriptions needed to create the Visualization assets, create the main function to execute the request:

def main(): # pylint: disable=too-many-locals,too-many-statements

logging.basicConfig(level=logging.INFO)

arguments = parse_args()

if arguments.token:

sdk = doublecloud.SDK(token=arguments.token)

else:

with open(arguments.sa_json_path) as infile:

sdk = doublecloud.SDK(service_account_key=json.load(infile))

workbook_id = None

connection_created = False

# Entries references each other by it's names

# So we define it here

connection_name = "conn_ch_1"

dataset_name = "ds_hits_sample"

chart_name_total_hits = "chart_total_hits"

chart_name_time_spent_per_browser = "chart_time_spent_per_browser"

chart_name_user_share_by_platform = "chart_user_share_by_platform"

dashboard_name = "dash_main"

svc = sdk.client(WorkbookServiceStub)

try:

# Create a new workbook

operation = create_workbook(svc, arguments.project_id, arguments.name)

operation_result = sdk.wait_operation_and_get_result(operation)

workbook_id = operation_result.operation.resource_id

logging.info(f"Created a new workbook: {workbook_id}, https://app.double.cloud/workbooks/{workbook_id}")

# Create a connection to a database

operation = create_workbook_connection(svc, workbook_id, connection_name=connection_name)

sdk.wait_operation_and_get_result(operation)

connection_created = True

logging.info(f"Created a new connection: {connection_name}")

logging.info("Modify workbook and add data source")

sources = get_clickhouse_table_dataset_sources(

connection_name=connection_name,

db_name="examples",

table_name="hits",

)

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name,

ds_sources=sources,

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

# Get advised fields for our datasource, instead of manually write all of them

advised_ds_config = MessageToDict(

advise_dataset_fields(svc, workbook_id, sources, connection_name).dataset.config

)

# Adding aggregated field to dataset

advised_ds_config["fields"].append(

{

"description": None,

"id": "hits_count",

"cast": "integer",

"calc_spec": {"kind": "direct", "field_name": "Hit_ID"},

"aggregation": "countunique",

"hidden": False,

"title": "Total hits",

}

)

# Fill the workbook with advised fields

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name, ds_fields=advised_ds_config["fields"], ds_sources=advised_ds_config["sources"]

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

logging.info("Filled advised fields for our datasets")

# Create example charts and dashboard

charts = [

an_indicator_chart(chart_name_total_hits, dataset_name=dataset_name),

a_donut_chart(chart_name_user_share_by_platform, dataset_name=dataset_name),

a_column_chart(chart_name_time_spent_per_browser, dataset_name=dataset_name),

]

dashboards = get_single_tab_dashboard(

dashboard_name,

[

dash_element_selector_by_dataset_field(

title="Traffic Source",

dataset_name=dataset_name,

field_id="traffic_source",

element_id="P9",

x=0,

y=0,

h=2,

w=20,

),

dash_element_chart(

chart_name=chart_name_time_spent_per_browser,

title="Time spent per browser",

element_id="K5",

x=0,

y=2,

h=16,

w=20,

),

dash_element_chart(

chart_name=chart_name_user_share_by_platform,

title="User shares by platform",

element_id="nd",

x=20,

y=0,

h=23,

w=16,

),

dash_element_chart(

chart_name=chart_name_total_hits,

title="Total hits",

element_id="L7",

x=0,

y=18,

h=12,

w=20,

),

],

)

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name,

ds_fields=advised_ds_config["fields"],

ds_sources=advised_ds_config["sources"],

charts=charts,

dashboards=dashboards,

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

logging.info("Added charts for our data")

input("Press F to respect and delete all created resources ...")

finally:

if workbook_id:

try:

if connection_created:

logging.info(f"Deleting connection {connection_name}")

operation = delete_connection(svc, workbook_id, connection_name)

sdk.wait_operation_and_get_result(

operation,

)

finally:

logging.info(f"Deleting workbook {workbook_id}")

operation = delete_workbook(svc, workbook_id)

sdk.wait_operation_and_get_result(

operation,

)

Put the code together in a sequence

You can download the complete code example

Full DoubleCloud Python API SDK code listing

import argparse

import json

import logging

from google.protobuf.json_format import MessageToDict

from google.protobuf.wrappers_pb2 import BoolValue

import doublecloud

from doublecloud.v1.operation_pb2 import Operation

from doublecloud.visualization.v1.workbook_pb2 import (

Connection,

Dataset,

PlainSecret,

Secret,

Workbook,

)

from doublecloud.visualization.v1.workbook_service_pb2 import (

AdviseDatasetFieldsRequest,

CreateWorkbookConnectionRequest,

CreateWorkbookRequest,

DeleteWorkbookConnectionRequest,

DeleteWorkbookRequest,

UpdateWorkbookRequest,

)

from doublecloud.visualization.v1.workbook_service_pb2_grpc import WorkbookServiceStub

def create_workbook(svc, project_id: str, name: str) -> Operation:

"""

Function creates an empty workbook

We will fill it with other functions

"""

return svc.Create(CreateWorkbookRequest(project_id=project_id, workbook_title=name))

def create_workbook_connection(svc, workbook_id: str, connection_name: str) -> Operation:

"""

A special sample database, that available from all projects

You can create this connection by your own and

try all of Double.Cloud Visualisation features

Don’t work with secrets like that for your environments.

It's just an example. Ask your system administrator for your secrets provider.

"""

connection = Connection()

connection.config.struct_value.update(

{

"kind": "clickhouse",

"cache_ttl_sec": 600,

"host": "rw.chcpbbeap8lpuv24hhh4.at.double.cloud",

"port": 8443,

"username": "examples_user",

"secure": True,

"raw_sql_level": "off",

}

)

operation = svc.CreateConnection(

CreateWorkbookConnectionRequest(

workbook_id=workbook_id,

connection_name=connection_name,

connection=connection,

secret=Secret(plain_secret=PlainSecret(secret="yahj@ah5foth")),

)

)

return operation

def modify_workbook(svc, workbook_id: str, workbook_config: dict) -> Operation:

"""

Function rewrites rewrites workbook with declarative description,

which usually given from `describe_workbook` method.

"""

wb = Workbook()

wb.config.struct_value.update(workbook_config)

return svc.Update(

UpdateWorkbookRequest(

workbook_id=workbook_id,

workbook=wb,

force_rewrite=BoolValue(value=True),

)

)

def get_workbook_config_with_single_dataset(

dataset_name: str,

ds_fields: list = None,

ds_avatars: dict = None,

ds_sources: list = None,

charts: list = None,

dashboards: list = None,

) -> dict:

"""

Function returns a declarative structure of a sample workbook with single dataset.

Structure used for changing workbook config through API

"""

return {

"datasets": [

{

"name": dataset_name,

"dataset": {

"fields": ds_fields or [],

"avatars": ds_avatars or None,

"sources": ds_sources or [],

},

}

],

"charts": charts or [],

"dashboards": dashboards or [],

}

def get_clickhouse_table_dataset_sources(*, connection_name: str, db_name: str, table_name: str) -> list:

"""

Function returns a dataset source as DB table or view.

You can use another datasource types:

https://double.cloud/docs/en/public-api/api-reference/visualization/configs/DataSourceSpec

And another connection types:

https://double.cloud/docs/en/public-api/api-reference/visualization/configs/Connection

"""

return [

{

"id": "production_marts",

"title": "hits_sample",

"connection_ref": connection_name,

"spec": {"kind": "sql_table", "db_name": db_name, "table_name": table_name},

}

]

def a_column_chart(name: str, *, dataset_name: str) -> dict:

"""

This is an example of column chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-a-column-chart

"""

return {

"chart": {

"ad_hoc_fields": [

{

# Here we adding field with applied aggregation to chart

# This is equal to adding field to dataset. But only when used with this chart.

"field": {

"description": None,

"id": "time_spent_sum",

"cast": "float",

# Example of calculated field

# https://double.cloud/docs/en/data-visualization/concepts/calculated-fields

# Note that in API fields should be referenced by ID, not title

"calc_spec": {

"kind": "id_formula",

"formula": "SUM([Time_Spent])",

},

"aggregation": "none",

"hidden": False,

"title": "Time Spent Sum",

},

"dataset_name": dataset_name,

}

],

"visualization": {

"y": [

{

"source": {

"kind": "ref",

"id": "time_spent_sum",

}

}

],

"kind": "column_chart",

"sort": [],

"coloring": {

"mounts": [],

"palette_id": None,

"kind": "dimension",

"source": {"kind": "ref", "id": "browser"},

},

"x": [{"source": {"kind": "ref", "id": "date"}}],

},

"datasets": [dataset_name],

},

"name": name,

}

def a_donut_chart(name: str, *, dataset_name: str) -> dict:

"""

Example of a donut chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-a-donut-chart

"""

return {

"chart": {

"ad_hoc_fields": [

# Here we adding field with applied aggregation to chart

# This is equal to adding field to dataset. But only when used with this chart.

{

"field": {

"description": None,

"id": "browser_count_unique",

"cast": "string",

"title": "Browser Count Unique",

# Example of direct field with applied aggregation

"aggregation": "countunique",

"calc_spec": {"kind": "direct", "avatar_id": "production_marts", "field_name": "Browser"},

"hidden": False,

},

}

],

"visualization": {

"kind": "donut_chart",

"sort": [],

"coloring": {

"mounts": [],

"palette_id": None,

"source": {"kind": "ref", "id": "technology"},

},

"measures": {

"source": {

"kind": "ref",

"id": "browser_count_unique",

}

},

},

"datasets": [dataset_name],

},

"name": name,

}

def an_indicator_chart(name: str, *, dataset_name: str) -> dict:

"""

Example of an indicator chart

See https://double.cloud/docs/en/data-visualization/quickstart#create-an-indicator

"""

return {

"chart": {

"ad_hoc_fields": [],

"visualization": {

"field": {

"source": {

"kind": "ref",

"id": "hits_count",

}

},

"kind": "indicator",

},

"datasets": [dataset_name],

},

"name": name,

}

def dash_element_selector_by_dataset_field(

element_id: str,

title: str,

dataset_name: str,

field_id: str,

# Element placement

# See details here: https://double.cloud/docs/en/public-api/api-reference/visualization/configs/Dashboard

x: int,

y: int,

h: int,

w: int,

):

return {

"element": {

"kind": "control_multiselect",

"show_title": True,

"title": title,

"source": {

"kind": "dataset_field",

"dataset_name": dataset_name,

"field_id": field_id,

},

"comparison_operation": None,

"default_value": None,

},

"id": element_id,

"placement": {"x": x, "y": y, "h": h, "w": w},

}

def dash_element_chart(

title: str,

chart_name: str,

element_id: str,

# Element placement

# See details here: https://double.cloud/docs/en/public-api/api-reference/visualization/configs/Dashboard

x: int,

y: int,

h: int,

w: int,

):

# Generating unique chart tab ID based on element ID

chart_tab_id = "ctid_" + element_id + "_1"

return {

"element": {

"hide_title": False,

"kind": "charts_container",

"default_active_chart_tab_id": chart_tab_id,

"tabs": [

{

"title": title,

"id": chart_tab_id,

"chart_name": chart_name,

}

],

},

"id": element_id,

"placement": {"x": x, "y": y, "h": h, "w": w},

}

def get_single_tab_dashboard(dashboard_name: str, elements: list[dict]) -> list:

return [

{

"dashboard": {

"tabs": [

{

"title": "Tab 1",

"id": "qG",

"items": elements,

"ignored_connections": [],

}

]

},

"name": dashboard_name,

}

]

def advise_dataset_fields(svc, workbook_id: str, sources: list, connection_name: str):

"""

Function helps to define automatically all fields, their names/IDs and types

based on underlying datasource (table, view, SQL query, etc.).

ID of fields will be equals to column names.

You can use define them manually or use this handler to simplifying for popular cases

"""

dataset = Dataset()

dataset.config.struct_value.update(

{

"fields": [],

"avatars": None,

"sources": sources,

}

)

return svc.AdviseDatasetFields(

AdviseDatasetFieldsRequest(

workbook_id=workbook_id,

connection_name=connection_name,

partial_dataset=dataset,

)

)

def delete_workbook(svc, workbook_id):

operation = svc.Delete(DeleteWorkbookRequest(workbook_id=workbook_id))

return operation

def delete_connection(svc, workbook_id, connection_name):

operation = svc.DeleteConnection(

DeleteWorkbookConnectionRequest(workbook_id=workbook_id, connection_name=connection_name)

)

return operation

def main(): # pylint: disable=too-many-locals,too-many-statements

logging.basicConfig(level=logging.INFO)

arguments = parse_args()

if arguments.token:

sdk = doublecloud.SDK(token=arguments.token)

else:

with open(arguments.sa_json_path) as infile:

sdk = doublecloud.SDK(service_account_key=json.load(infile))

workbook_id = None

connection_created = False

# Entries references each other by it's names

# So we define it here

connection_name = "conn_ch_1"

dataset_name = "ds_hits_sample"

chart_name_total_hits = "chart_total_hits"

chart_name_time_spent_per_browser = "chart_time_spent_per_browser"

chart_name_user_share_by_platform = "chart_user_share_by_platform"

dashboard_name = "dash_main"

svc = sdk.client(WorkbookServiceStub)

try:

# Create a new workbook

operation = create_workbook(svc, arguments.project_id, arguments.name)

operation_result = sdk.wait_operation_and_get_result(operation)

workbook_id = operation_result.operation.resource_id

logging.info(f"Created a new workbook: {workbook_id}, https://app.double.cloud/workbooks/{workbook_id}")

# Create a connection to a database

operation = create_workbook_connection(svc, workbook_id, connection_name=connection_name)

sdk.wait_operation_and_get_result(operation)

connection_created = True

logging.info(f"Created a new connection: {connection_name}")

logging.info("Modify workbook and add data source")

sources = get_clickhouse_table_dataset_sources(

connection_name=connection_name,

db_name="examples",

table_name="hits",

)

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name,

ds_sources=sources,

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

# Get advised fields for our datasource, instead of manually write all of them

advised_ds_config = MessageToDict(

advise_dataset_fields(svc, workbook_id, sources, connection_name).dataset.config

)

# Adding aggregated field to dataset

advised_ds_config["fields"].append(

{

"description": None,

"id": "hits_count",

"cast": "integer",

"calc_spec": {"kind": "direct", "field_name": "Hit_ID"},

"aggregation": "countunique",

"hidden": False,

"title": "Total hits",

}

)

# Fill the workbook with advised fields

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name, ds_fields=advised_ds_config["fields"], ds_sources=advised_ds_config["sources"]

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

logging.info("Filled advised fields for our datasets")

# Create example charts and dashboard

charts = [

an_indicator_chart(chart_name_total_hits, dataset_name=dataset_name),

a_donut_chart(chart_name_user_share_by_platform, dataset_name=dataset_name),

a_column_chart(chart_name_time_spent_per_browser, dataset_name=dataset_name),

]

dashboards = get_single_tab_dashboard(

dashboard_name,

[

dash_element_selector_by_dataset_field(

title="Traffic Source",

dataset_name=dataset_name,

field_id="traffic_source",

element_id="P9",

x=0,

y=0,

h=2,

w=20,

),

dash_element_chart(

chart_name=chart_name_time_spent_per_browser,

title="Time spent per browser",

element_id="K5",

x=0,

y=2,

h=16,

w=20,

),

dash_element_chart(

chart_name=chart_name_user_share_by_platform,

title="User shares by platform",

element_id="nd",

x=20,

y=0,

h=23,

w=16,

),

dash_element_chart(

chart_name=chart_name_total_hits,

title="Total hits",

element_id="L7",

x=0,

y=18,

h=12,

w=20,

),

],

)

workbook_spec = get_workbook_config_with_single_dataset(

dataset_name=dataset_name,

ds_fields=advised_ds_config["fields"],

ds_sources=advised_ds_config["sources"],

charts=charts,

dashboards=dashboards,

)

operation = modify_workbook(svc, workbook_id, workbook_spec)

sdk.wait_operation_and_get_result(operation)

logging.info("Added charts for our data")

logging.info(

"\n\nWonderful! 🚀 Check out created workbook\n" f"https://app.double.cloud/workbooks/{workbook_id}\n"

)

input("Press F to respect and delete all created resources ...")

finally:

if workbook_id:

try:

if connection_created:

logging.info(f"Deleting connection {connection_name}")

operation = delete_connection(svc, workbook_id, connection_name)

sdk.wait_operation_and_get_result(

operation,

)

finally:

logging.info(f"Deleting workbook {workbook_id}")

operation = delete_workbook(svc, workbook_id)

sdk.wait_operation_and_get_result(

operation,

)

def parse_args():

parser = argparse.ArgumentParser(description=__doc__, formatter_class=argparse.RawTextHelpFormatter)

auth = parser.add_mutually_exclusive_group(required=True)

auth.add_argument(

"--sa-json-path",

help="Path to the service account key JSON file.\nThis file can be created using UI:\n"

"Members -> Service Accounts -> Create and then create API keys",

)

auth.add_argument("--token", help="IAM token")

parser.add_argument("--project-id", help="Your project id", required=True)

parser.add_argument("--name", default="sdk-example", help="New workbook title")

return parser.parse_args()

if __name__ == "__main__":

main()

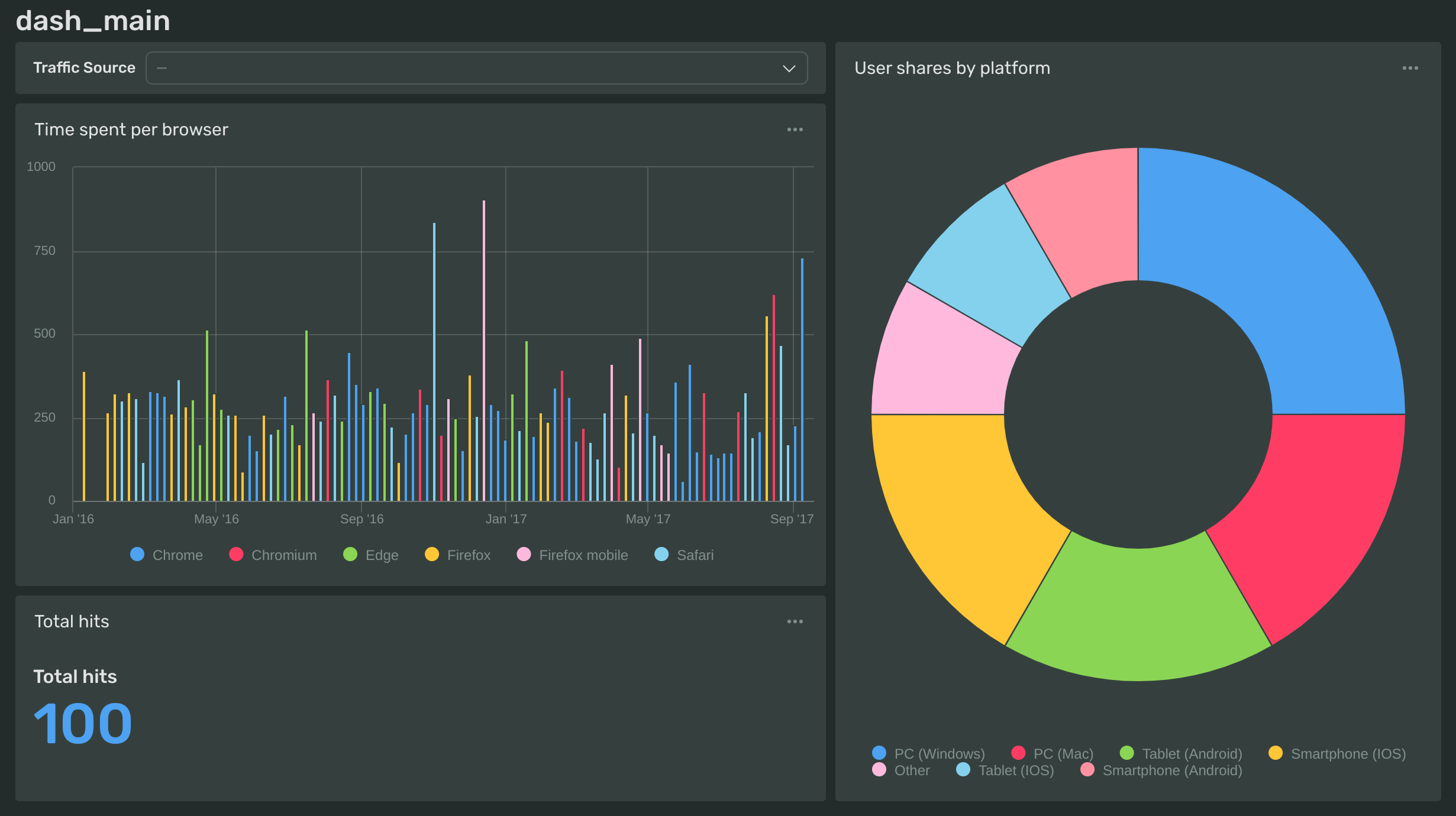

Now you have a workbook with a dashboard containing a selector charts that will automatically update when the data on the source changes:

Download the complete code example

You can download the full code listing for all the steps above from our Python SDK GitHub repository