Why ETL pipelines are essential for businesses

Data is core to business today, and ETL (Extract, Transform, Load) pipelines are becoming critical to businesses. Modern ETL pipelines help to get the data ready quickly for business use. Cloud-based ETL constitutes an essential tool that companies use for managing massive data sets and one that companies will increasingly rely on in the future. Data pipelines are growing in importance with advances in Big Data.

What is an ETL pipeline?

ETL pipelines are dedicated processes that transfer data from various sources into a database, such as a data warehouse. ETL means “extract, transform, load,” three interdependent data integration operations that transport data from one database to another. After loading the data, they can be utilized for reporting, analysis, and deriving meaningful business insights.

A brief history of ETL

ETL gained prominence in the 1970s when businesses began storing diverse types of business information in multiple data repositories or databases. During the late 1980s and early 1990s, data warehouses emerged. Data warehouses, a different type of database, provided access to data from multiple systems, including mainframe computers, minicomputers, personal computers, and spreadsheets. The number of data types, sources, and techniques has grown exponentially over time, and pipelines for ETL can handle them optimally. ETL is one of the organizations' methods to collect, import, and process data.

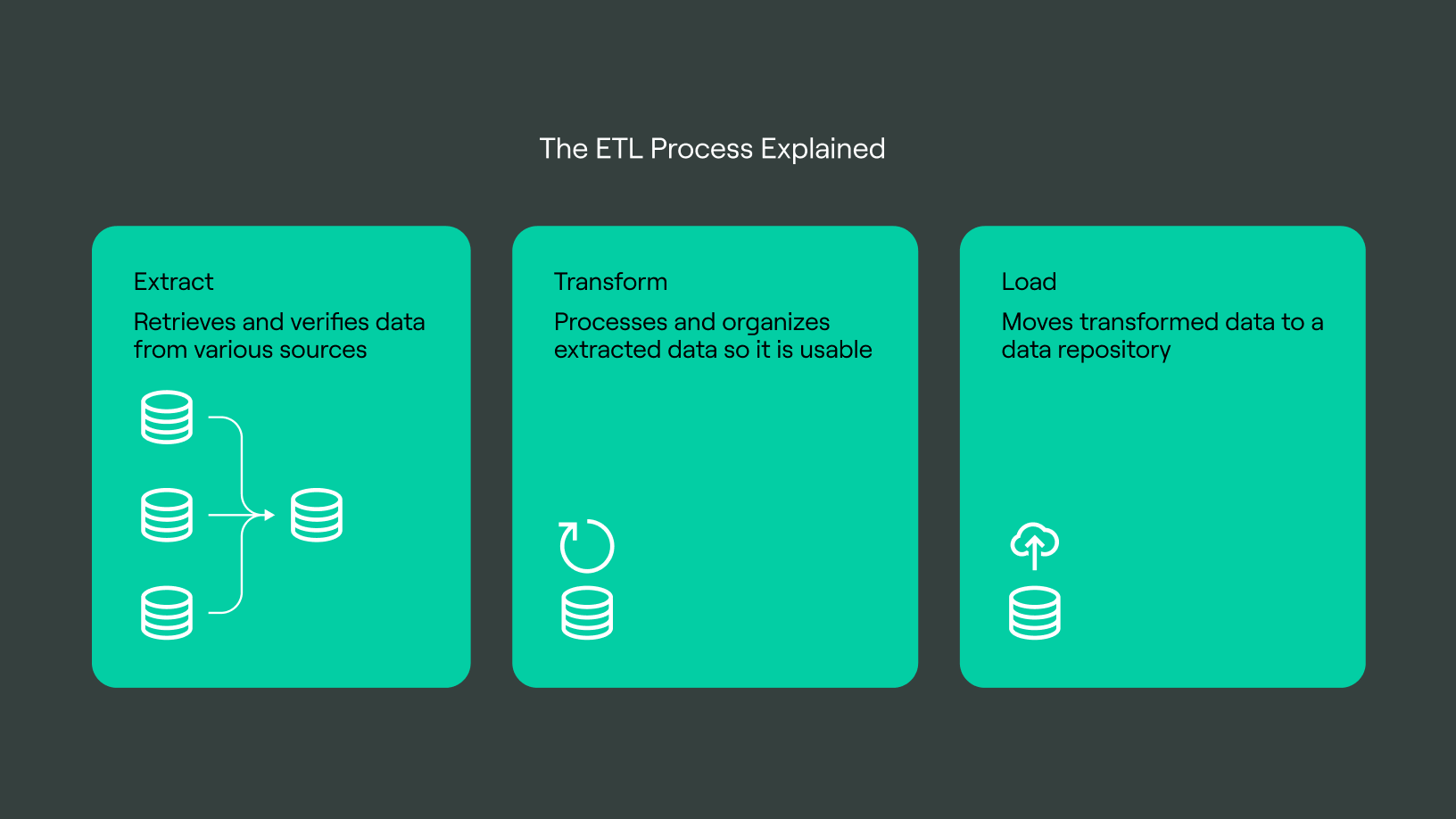

How ETL works

The ETL pipelines and their transformation comprise three processes that enable source-to-destination data integration: data extraction, transformation, and loading.

Step 1: Extraction

Most firms handle data from multiple sources to generate business intelligence insights and employ various data analysis techniques. Data must be allowed to move freely between systems and apps through pipelines to carry out such a complicated data strategy.

Before moving to a new location, data has to be retrieved from its source, such as a data warehouse or data lake. You then need to import and aggregate structured and unstructured data into a single repository during the first step of the ETL process. Retrieving data volumes can be from a variety of data sources, including:

- Existing databases and legacy systems

- Cloud, hybrid, and on-premises infrastructures

- Applications for sales and marketing

- Mobile devices and apps

- CRM systems

- Platforms for storing data

- Data warehouses

- Analytical software

Although one can manually perform hand-coded data extraction, it’s time-consuming and prone to errors. ETL pipelines automate extraction.

The resulting outcomes from the pipelines are a more dependable and efficient workflow.

Step 2: Transformation

During this stage of the ETL process, one can implement rules and regulations to ensure data quality and accessibility. You can also use controls to assist your firm in meeting reporting standards. The data transformation process gets divided into various sub-processes:

- Cleansing — resolves discrepancies and missing values in the data.

- Standardization — applies formatting guidelines to the dataset.

- Deduplication — entails excluding or discarding redundant data.

- Verification — entails removing unusable data and flagging irregularities.

- Sorting — entails organizing data by kind.

- Other tasks — applying additional/optional rules to improve data quality.

Consider transformation as the most critical component of ETL pipeline processing activities.

Data transformation improves data integrity by eliminating duplicates and verifying that raw data arrives at its new destination completely compliant and ready to use.

Step 3: Loading

The final step in the ETL process consists of loading newly transformed data into a new location (data lake or data warehouse). One can load data at once (full load) or at predetermined intervals (incremental load).

Full-Loading: In an ETL full-loading scenario, all transformation assembly line output gets loaded as new, unique entries into the data warehouse or repository. Full-loading may occasionally be helpful for research purposes, but it causes datasets to grow exponentially and become challenging to maintain rapidly.

Incremental Loading: The gradual loading method is less thorough but more manageable. The incremental loading method compares incoming data with existing data and only creates new entries if someone discovers new and unique information. This architecture permits smaller, less costly data warehouses to manage business intelligence.

Cloud-based ETL vs. local

Traditional data warehouses consist of on-premises physical servers, which refers to local data management and warehousing. These regional data warehouses cleanse and convert data from diverse sources before storing it in their physical databanks.

Cloud-based ETL services provide the same function as their on-premises counterparts; however, the data warehouse and most data sources are now exclusively online. Cloud ETL technologies enable customers to control their data flow through a single interface that connects to the data’s origin and destination.

Now Let’s dive into some benefits of Cloud ETL solutions.

In this article, we’ll talk about:

Benefits of Cloud ETL solutions

Cloud-based ETL tools provide different advantages for companies compared to on-premises data management. Here are some of them:

Scalability: The scalability of cloud computing is significantly greater than on-premises data management. If you approach the storage or processing restrictions of the cloud, you may quickly acquire a new server or purchase more space. For on-premise computing, however, you would need to buy more hardware, which is both costly and time-intensive.

Mobile-Friendliness: Cloud platforms increasingly enable mobile devices, including smartphones, tablets, and laptops, granting consumers access from any location. On-premises ETL, on the other hand, can be adjusted for mobile compatibility but often does not have this feature by default.

Real-time data management: Eliminating data stream delays by collecting and transforming data from several applications and keeping it in a central, easily accessible location. In addition, ETL on the cloud places the required data within microseconds of the user’s fingertips.

Fully managed services: For the convenience of end users, public cloud services offer fully integrated applications that adhere to service and maintenance responsibilities. Having an on-premises ETL solution assures that you will be responsible for handling these difficulties on your own, necessitating the hiring of competent in-house tech personnel.

Loss Prevention: There is a possibility of losing data stored locally and on a few servers. However, with a cloud-based server, all information transmitted to the cloud remains secure and easily accessible from any internet-connected device.

ETL is a crucial method for consolidating all relevant data into a single repository to make it actionable, i.e., to analyze it and enable executives, administrators, and other stakeholders to make critical business decisions based on the data.

ETL use cases

The following are the use cases of ETL:

Data warehousing: A data warehouse can be called a database that combines data and analyzes it from multiple sources for business purposes. Frequently, ETL is used to transfer data to a data warehouse.

Marketing data integration: Marketing data integration is transferring all marketing data, such as customer, social networking, and web analytics data, into a centralized location to be analyzed and used to design future strategies. Utilize ETL to gather and organize marketing data for analytical purposes.

Machine Learning (ML) and artificial intelligence (AI): Machine learning (ML) is a technique for extracting meaning from data without actively constructing analytic models. Instead, the system uses artificial intelligence algorithms to learn from data. One can use ETL to consolidate data for machine learning purposes.

IoT data integration: IoT is a collection of linked devices capable of collecting and transferring data via hardware-integrated sensors. IoT devices may include factory equipment, network servers, smartphones, and many other machines, including wearables and implantable ones. ETL enables data consolidation from numerous IoT sources into a single location for analysis.

Cloud Migration: Companies are transferring their data and apps from on-premises to the cloud to save money, increase the scalability of their programs, and protect their data. ETL usage is common in organizations to manage these transitions.

Changes to data are visible immediately at the destination when using an efficient cloud ETL service. Data analysts can extract relevant insights much faster, providing businesses with the competitive advantage they require.

Companies that use the DoubleCloud platform for their Cloud ETL tools find it easy and efficient to integrate their data and take valuable insights from it almost immediately.

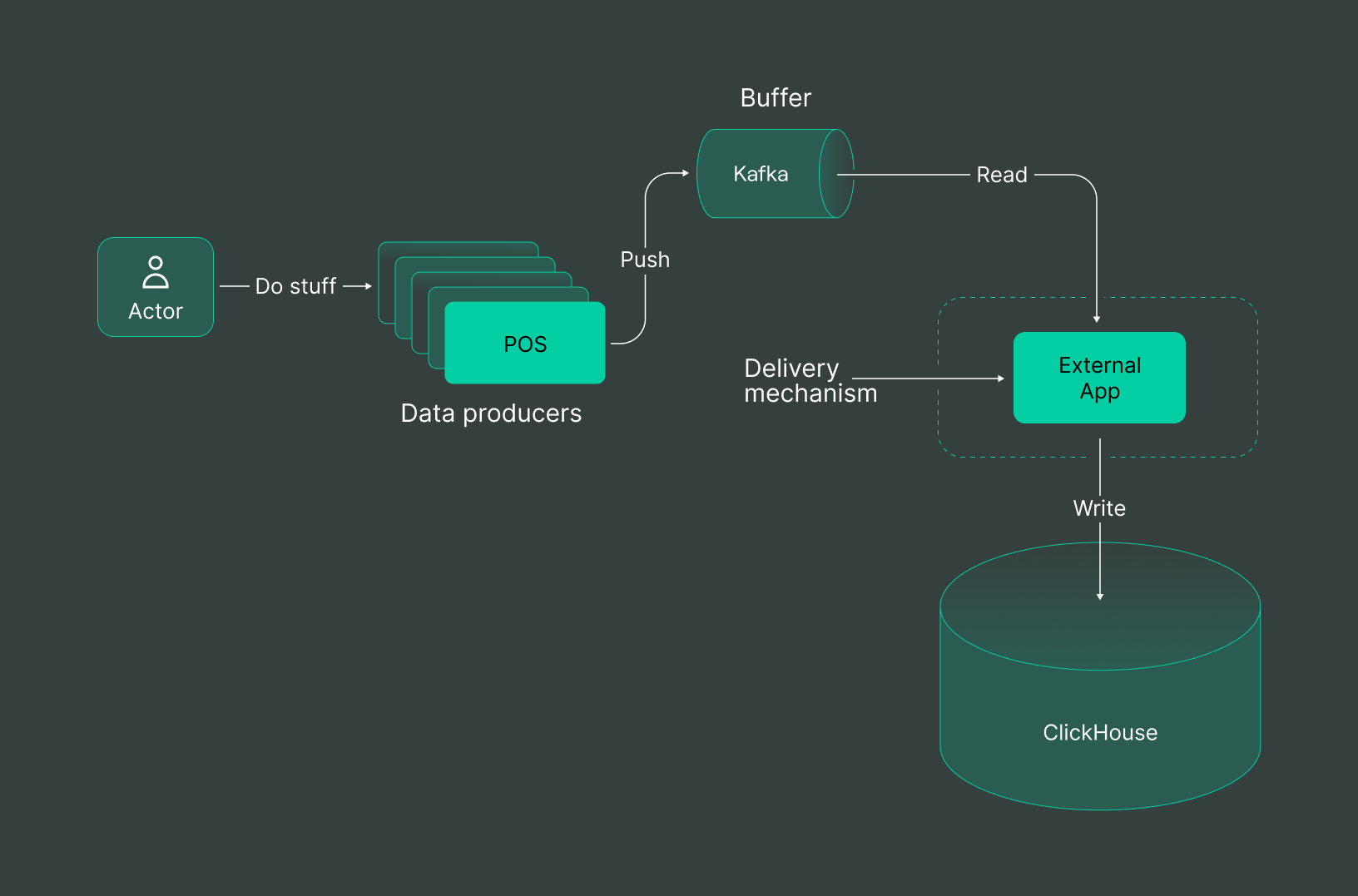

DoubleCloud’s platform helps our clients build sub-second data analytical solutions and pipelines across open-source technologies like ClickHouse® and Apache Kafka® in less than 5 minutes.

- ClickHouse® is a trademark of ClickHouse, Inc. https://clickhouse.com

- Apache® and Apache Kafka® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

No-code ELT tool: Data Transfer

A cloud agnostic service for aggregating, collecting, and migrating data from various sources.

Start your trial today