Why is ClickHouse so fast? Features that make it lightning fast

ClickHouse is one of many OLAP databases, but what makes ClickHouse so popular among different data warehouses? One of a few reasons is its remarkable data speed. ClickHouse is very fast in processing complex analytical queries on large datasets.

This article will explain why ClickHouse is so fast and what helps it work rapidly for big companies with huge arrays of source data.

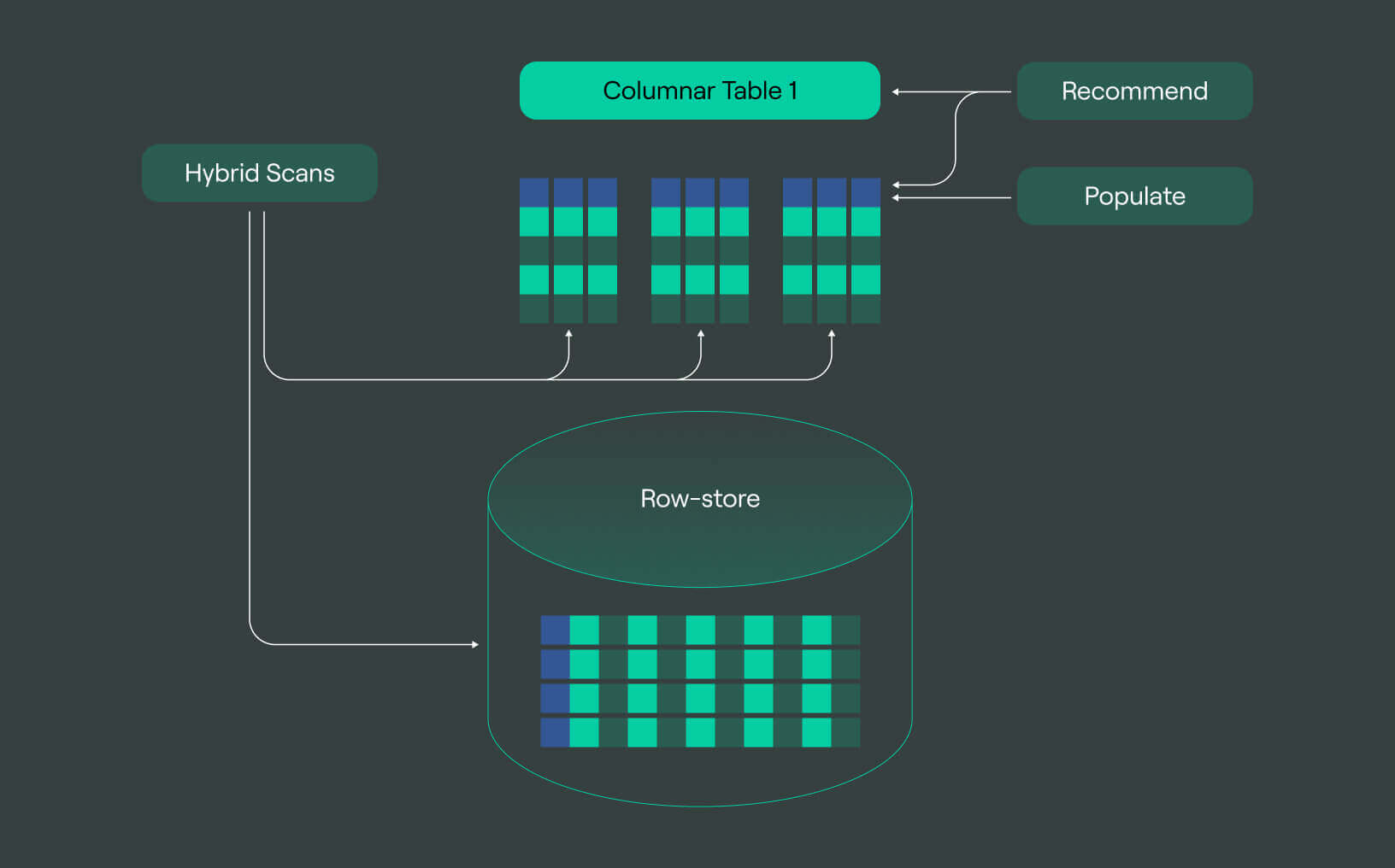

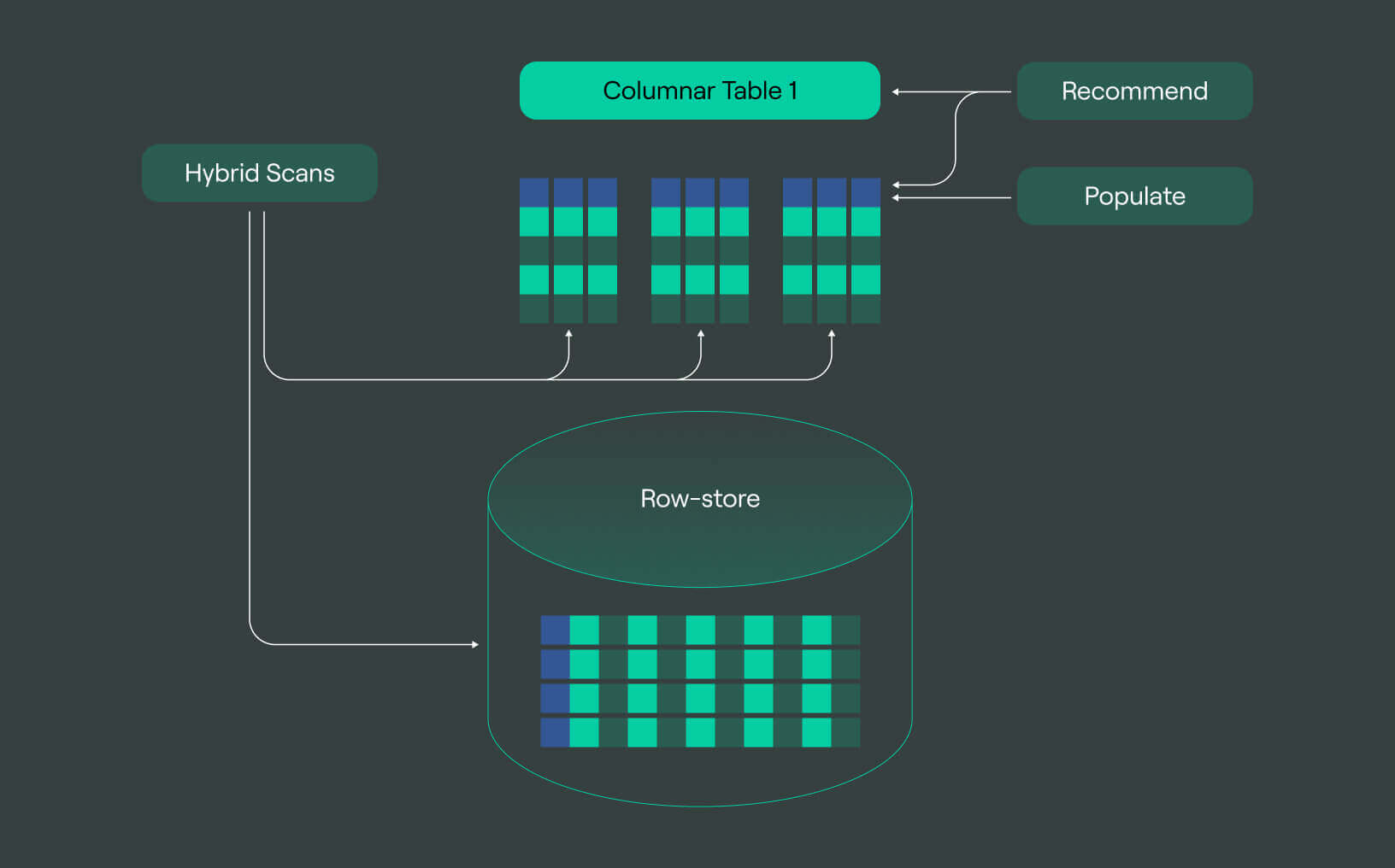

Columnar storage architecture

What is columnar storage architecture?

ClickHouse is a column-oriented OLAP database. In column-oriented databases, all the raw data is stored in columns. Each data recording can have hundreds and sometimes thousands of attributes. In column-oriented storage, every attribute is stored separately from the others. For each recording, the same attribute is stored in the same column.

In this article, we’ll talk about:

- Columnar storage architecture

- Data compression and encoding

- Distributed query execution

- Materialized views and Aggregations

- Vectorized query execution

- What do these features allow ClickHouse to do?

- ClickHouse performance example

- ClickHouse Hardware Requirements

- How does DoubleCloud help with ClickHouse?

- So, is ClickHouse the fastest?

- Final words

When executing analytical queries, ClickHouse checks data only from certain columns that are specified in the query and does not need to check the whole real data array. This makes the processing speed very fast.

Columnar and row-based storage–what’s the difference?

ClickHouse is a modern database. In classic row-based data storages, the same data for each record is stored contiguously, with all the attributes of a record grouped together. For this reason, the ClickHouse logic, based on separated attributes storing, can’t work for a row-by-row approach.

More specifics of a columnar approach.

ClickHouse supports multiple table engines. Each table engine uses special logic by inserting data into database tables in an optimal way for specific types of workloads. That also greatly helps to increase the data processing speed.

Both primary and secondary indexes are used in ClickHouse. The primary index determines the physical ordering of the data from the primary key column (s). It also enables efficient range-based queries. The secondary indexes provide additional indexing options for specific columns to optimize query performance.

A sparse index is an example of the secondary indexes used in ClickHouse. Each sparse index is designed to handle cases where the data in a column has a significant number of repeated or null values. It helps to reduce the required disk space and contributes to the query execution speed.

Data compression and encoding

ClickHouse uses various data encoding algorithms. The encoding process includes uncompressed data transformation and data compression. Compression algorithms help to reduce storage requirements and optimize query processing for a higher speed.

Compressed data needs less disk space and less system operations, so it reduces Disk I/O and increases the efficiency of memory utilization. Also, efficient data compression enables caching mechanisms by storing more compressed data within the available cache capacity.

Here are some ClickHouse data compression algorithms:

-

LZ4: A default lossless data compression algorithm. It provides fast compression and decompression speeds which makes it perfect for cases when the high processing speed level has a crucial role.

-

LZ4HC: A high compression algorithm where you can configure the compression level according to special database requirements.

-

ZSTD: One more algorithm with configurable compression levels. It is useful for asymmetric scenarios. For example when it is necessary to compress and decompress data repeatedly.

-

Delta: This algorithm works by storing the difference (delta) between each value and the previous value in a sorted column instead of storing the actual values themselves. Only the first value stays unchanged. The algorithm is effectively used for columns that contain sorted or monotonically increasing values (for example, sorted data about cart sums of buyers of an online shop).

-

DoubleDelta: This algorithm stores deltas of deltas in data columns with accelerating or decelerating patterns (for instance, website traffic values or energy consumption during certain hours of the day or seasons).

-

Gorilla: An algorithm that uses the combination of the delta of deltas encoding approach, XOR compression, and bit packing. Gorilla compression handles high-resolution time series data with a high degree of data variation (for example, time series of stock prices that can experience significant changes within a short period).

-

FPC (Fast PFor Compression): This allows one to achieve high compression ratios and fast decompression speeds for integer values (such as students' marks or age). The FPC compression algorithm uses the concept of SIMD (Single Instruction, Multiple Data). It allows parallel processing of multiple values at the same time.

You can find more information about data compression algorithms in ClickHouse documentation.

Distributed query execution

Distributed query execution is one more factor that allows ClickHouse to provide very high speed while data processing. It is achieved by dividing the workload of executing queries among multiple nodes in data storage (instead of relying on a single node to handle all the queries).

ClickHouse breaks a distributed query into smaller parts and assigns each part to a separate node in a cluster. Therefore, distributed processing is ensured by many small processes working in parallel. Each node returns its intermediate result. All the node results are combined into a final query response.

Here are the steps of distributed query execution in ClickHouse:

1. Query analysis: To understand the query structure, identify the relevant tables and sections, and determine the query dependencies.

2. Query parsing: To divide it into fragments in an optimal way based on the analysis results.

3. Query optimization: To minimize data movement across the cluster and maximize query performance by selecting the most efficient execution plan.

4. Query routing: To route the query fragments to the relevant nodes that hold the required data.

5. Parallel query execution: ClickHouse executes query fragments in parallel for each node and collects the results.

6. Data Aggregation: Once the query fragments are processed, ClickHouse performs the necessary merging of intermediate results data and produces the final query result.

The distributed query execution approach brings faster data processing. This happens as each node in the cluster can separately process its assigned query part in parallel mode. Also, the multiple nodes and clusters structure increases database throughput, scalability, and fault tolerance.

Materialized views and aggregations

Materialized views are objects of a database. They store precalculated query results obtained from the underlying data. The resulting data for a materialized view is stored in a separate table. Each table refers to a certain executed query.

Materialized views are exceptionally useful for scenarios with frequently accessed queries. They also provide excellent performance results for complicated queries as they reduce the need for repetitive and resource-intensive calculations.

The materialized views approach is based on query operations like joins and data aggregation. There are lots of aggregation functions in ClickHouse. Here are some of them:

- COUNT: Calculates the number of rows or non-null values in a column.

- SUM: computes the sum of the values in a column.

- AVG: calculates the average (mean) of the values in a column.

- MIN: returns the minimum value in a column.

- MAX: returns the maximum value in a column.

- GROUP_ARRAY: aggregates values into an array within each group.

- GROUP_CONCAT: concatenates values into a string within each group.

- ANY: returns an arbitrary non-null value from a group.

- DISTINCT: removes duplicate values from a column or group.

- HLL_COUNT.MERGE: merges HyperLogLog counters to estimate unique values in a column.

- MEDIAN: computes the median value within a group.

- TOPK: returns the top-K values based on a specified criterion.

- QUANTILE: calculates the quantile value within a group.

- ARRAY JOIN: unrolls arrays and performs subsequent aggregations on the expanded data.

- argMin/argMax: returns the argument (value or index) associated with the minimum or maximum value in a group.

It is also possible to use combinators for aggregation functions. They help to perform additional calculations, filtering, or transformations on the aggregated results.

On top of that, ClickHouse supports user-defined aggregate functions (UDAFs). These functions allow custom aggregation logic to support specific requirements.

Different versions of ClickHouse support different aggregation functions' availability and syntax. To get the most up-to-date and full information please refer to ClickHouse documentation.

Vectorized query execution

Vectorized query execution is an important optimization technique in ClickHouse. It contributes remarkably to ClickHouse’s speed and helps to improve performance. Vectorized query execution operates on data in batches or chunks and processes several rows or columns as a single unit.

A more standard row-by-row process of reading data works on an individual row basis. It operates on only one row and a single query at a time. Only after it gets the results for all rows of data can it provides the final query result. In comparison, ClickHouse vectorized query execution can process the calculations for multiple rows at once.

With its parallel approach, the ClickHouse database provides higher query processing for large datasets or complex queries. This leads to improved processing speed.

What do these features allow ClickHouse to do?

ClickHouse has a number of technical solutions that make it really fast, even for complicated and resource-intensive analytical queries. Its features also make ClickHouse a very scalable database. It can be expanded according to the specific business needs.

Parallel query execution.

Thanks to vectorized and distributed query execution, it is possible to process query parts in parallel and to operate on many data batches at once. Also, several data analytics can process several competitive queries at one time without losing speed.

Prearranged data operations.

Data aggregation together with data compression and encoding allows faster calculations for big queries with numerous parameters. It is also a great feature for often repeated queries or queries with equal scenarios.

The online analytical processing is based on preliminary query results. That means that at least part of query processing is already done and data analytics can get the final query result in a very short time.

Fault tolerance and load balancing.

ClickHouse can sensibly distribute queries to less loaded cluster nodes. This helps avoid overloading of specific nodes or usage of a node that, for some reason, becomes unavailable.

Database scalability.

Columnar storage architecture, query execution distributed by multiple nodes, and data compression algorithms allow storing very large datasets with a reasonable place on the disk used. It means that you are able to store and supplement as much data as you need according to the business specifically. You don’t have to delete data, saving disk space.

To sum up, thanks to its approach to data structure and optimization features, ClickHouse is a very fast, reliable, and modern data storage system. It is great for companies with large and growing data arrays. It also performs well with analytical tasks that have complex and numerous queries.

ClickHouse performance example

If you don’t have several different databases where the same data is stored, it will be difficult to test the query performance speed for different databases on your own. The good news is you can easily find plenty of the results of already organized performance speed tests.

Here is the latest example from DoubleCloud. This time we compared ClickHouse and PostgreSQL. Processing the same query through the same dataset, we got the query result more than 13 times faster with ClickHouse!

ClickHouse hardware requirements

Here are some commodity hardware tips to achieve optimal performance with ClickHouse:

-

CPU (central processing unit): choose a single server with a high-performance CPU, multiple cores, and SIMD support. CPUs with more cores will increase query execution speed as they will allow more parallel processes.

-

RAM (random access memory): allocate sufficient RAM to hold the working set of data in memory. Consider a minimum of 64 GB or more of RAM for efficient performance.

-

Storage: select fast storage options such as SSDs (Solid State Drives). They will allow ClickHouse to perform at high speeds.

-

Network: use a quick and reliable network infrastructure for distributed ClickHouse setups. Ensure Fast Ethernet connections, such as 10GbE or faster.

-

Disk I/O: ClickHouse performs heavy disk I/O operations, so fast and reliable disk subsystems are beneficial. Consider RAID configurations or NVMe SSDs to optimize disk I/O performance.

-

Operating system: ClickHouse chords Linux and various other systems. Linux distributions are usually used for production deployments. Choose a recent version of the operating system and keep it updated for stability and performance improvements.

-

Monitoring and tuning: implement monitoring tools to track the database performance and notice bottlenecks. Remember to monitor CPU, memory, disk, and network utilization to ensure efficient resource allocation.

Hardware requirements may vary depending on the specific workload, data volume, and query complexity. You can go through testing and benchmarking to find the optimal modern hardware configuration for your specific ClickHouse deployment.

ClickHouse is also able to complement OLTP databases. You may need it in case you are focused both on efficient management of individual data transactions and providing fast analytics and reporting capabilities on top of the transactional data stored in an OLTP database. Specific hardware requirements may appear in that case.

How does DoubleCloud help with ClickHouse?

ClickHouse requires human resources for process management. More complex and large data structures need more management. Good news–many of the processes can be automated with the help of analytical databases such as DoubleCloud.

DoubleCloud is a database management system. It provides you with all the benefits of ClickHouse and with remarkable automation opportunities on top of them.

Using DoubleCloud for ClickHouse as a service for data management, you will have all the necessary automation and security functionality on the DoubleCloud management console. It includes the features like monitoring, backups, patching, configuration, security, and high availability handled by DoubleCloud.

DoubleCloud provides all the ClickHouse cluster and infrastructure management, so you are keeping control of your data and saving your time for more interesting and important tasks.

So, is ClickHouse the fastest? Final words

ClickHouse’s columnar storage, relevant column access, vectorized query execution, and other performance optimizations contribute to its ability to handle large-scale analytical workloads with exceptional speed and efficiency.

Being a columnar data storage, ClickHouse has taken the best features of more classic relational database and transactional database approaches. With its performance-improving features, it becomes much faster than traditional data storage systems.

ClickHouse is able to handle massive data volumes and execute calculations quickly. It makes query performance well-suited for OLAP workloads that require fast data retrieval and aggregation. That is what makes ClickHouse a powerful high-speed database for companies with large data arrays.

As it is said, “data is the new oil.” And the speed of getting query results means the speed of getting the information necessary to make decisions for business growth.

We recommend that you not only use ClickHouse as a data storage system for your ‘new oil’ but that you also empower it with DoubleCloud. With such an approach you will have all the superior features of both solutions for reliable storage and fast analysis of your company’s data.

DoubleCloud Managed Service for ClickHouse

Start your trial today