What is a data lake? A beginner's guide for everyone

Reports suggest users generate around 2.5 quintillion bytes of data every day. Managing such a large amount of raw data is challenging. In fact, 95% of companies say they can’t grow because handling unstructured data is difficult. But this is where data lakes come into play.

In this article, we’ll discuss what a data lake is, different data lake platforms, how data lakes differ from data warehouses, use cases, pros and cons, and their significance for big data analytics.

What is a data lake?

A data lake is a platform for storing structured, semi-structured, and unstructured data. It can ingest large volumes of data from many sources, such as consumer apps, Internet of Things (IoT) sensors, videos, audio, etc.

The term was coined by James Dixon, then Chief Technology Officer of Pentaho, who likened this kind of storage to a natural body of water. Data from different sources flows in without any transformations, which makes it easier to manage big data.

This makes a data lake very flexible for analytical use cases. For example, its large data volume supports data science and machine learning (ML). It’s also suitable for big data processing and real-time analytics.

Also, a data lake doesn’t impose a predefined schema (structure) on incoming data. Instead, it works on a schema-on-read basis: the relevant schema is applied when a data scientist or analyst queries the data, not when the data is written.
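To make schema-on-read concrete, here is a minimal Python sketch. The raw JSON records, their field names, and the `read_with_schema` helper are hypothetical. Real data lake query engines work at far larger scale, but the idea is the same: raw data is stored untouched, and each reader applies its own schema at query time.

```python
import json

# Raw records are stored exactly as they arrived: no schema is enforced on write.
raw_events = [
    '{"user": "ana", "amount": "19.99", "ts": "2024-05-01"}',
    '{"user": "ben", "amount": 5, "country": "DE"}',
    '{"user": "cy", "country": "FR"}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time.

    `schema` maps each wanted field to a conversion function.
    Fields missing from a record come back as None.
    """
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


# Two readers interpret the same raw data with different schemas.
sales_rows = read_with_schema(raw_events, {"user": str, "amount": float})
geo_rows = read_with_schema(raw_events, {"user": str, "country": str})
print(sales_rows)
print(geo_rows)
```

Note that nothing had to be rejected or reshaped on the way in; the record without an `amount` simply yields `None` for the sales reader.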

Due to its flexibility, data scientists and analysts use data lakes for ad-hoc analysis and operational reporting. They can define appropriate schemas and transformations for reproducing reports if necessary.


In this article, we’ll talk about:

- What is a data lake?

- Is a data lake a database?

- Key concepts of a data lake

- How do data lakes differ from data warehouses?

- What can a data lake store?

- How is a data lake architected?

- What are the key components of a data lake?

- How does a data lake work?

- Who uses a data lake?

- What is a data lake used for? Use cases

- Importance of data lakes for businesses

- Types of data lakes

- What are data lake platforms?

- Pros and cons of data lakes

- How does DoubleCloud help your business?

- Final words

Is a data lake a database?

It’s easy to confuse a data lake with a database. After all, both store data. But they’re not the same. An operational database typically holds current data, whereas a data lake retains both historical and current data.

You need a database management system (DBMS) to operate a database, and several types of databases exist. A database also has a predefined schema and stores structured or semi-structured data. For example, a relational database stores data in predefined tables.

A data lake can also hold data exported from database tables, alongside files in many other formats, since it doesn’t require a predefined structure. That variety of data types gives a data lake broader analytical capabilities.

Key concepts of a data lake

Understanding data lakes can be challenging. The following sections explain four key concepts that clarify how a data lake works.

Data sources and integration

You can integrate your data lake with several data sources. Data sources, as mentioned earlier, can include mobile apps, IoT sensor data, the internet, internal business applications, etc. The sources can be in different formats.

For example, sources may have unstructured data such as images, video, emails, audio, etc. Semi-structured data can be comma-separated value (CSV) or JavaScript Object Notation (JSON) files. And structured data can be in table formats.

Data storage

Data storage means recording and retaining data on a particular platform. Traditionally, people stored data such as documents, images, and other files on local disks. Today, you can store data on large data servers.

A data server is a piece of computer hardware responsible for storing, managing, and providing user data. A data lake can consist of several data servers that integrate with a company’s data sources.

Also, the servers can be on-premises or in the cloud. A company has complete control and ownership of on-premises servers.

Cloud data storage consists of third-party servers. For example, Amazon provides cloud services called Amazon Web Services (AWS). It owns an extensive server network globally and sells data storage and management services to other businesses. You can purchase AWS services and build a cloud data lake on the AWS platform.

Cloud data lakes offer more scalability and reliability because the cloud service provider manages the infrastructure. However, cloud services can be expensive, and customizability can be limited. With on-premises infrastructure, by contrast, businesses have complete control and can adjust it as required.

Data querying and analysis

A data lake aims to help users with big data analysis. Analysis first requires data extraction: requesting relevant data from the data lake. After the platform receives the request, it processes it and returns the desired results.

The most common way to request data is by writing queries. A query helps you get relevant answers and insights from data. For example, you can ask a data lake, “What were the sales last month?” After processing the query, it returns last month’s total sales.

The catch is that you must phrase the question in a language a computer can process. Many data lake platforms support the Structured Query Language (SQL) for this purpose. You can use SQL to analyze existing data directly by writing queries that perform various calculations.
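As a rough illustration, the question “What were the sales last month?” might become the SQL query below. Python’s built-in `sqlite3` module stands in for a data lake’s query engine here, and the `sales` table, its columns, and the fixed date range (April 2024) are assumptions made for the example.

```python
import sqlite3

# In-memory SQLite database standing in for a data lake's SQL query engine.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("2024-03-28", 120.0),
        ("2024-04-02", 250.0),
        ("2024-04-15", 99.5),
        ("2024-05-01", 40.0),
    ],
)

# "What were the sales last month?" with "last month" fixed to April 2024.
query = """
    SELECT SUM(amount) AS total_sales
    FROM sales
    WHERE order_date >= '2024-04-01' AND order_date < '2024-05-01'
"""
total = conn.execute(query).fetchone()[0]
print(total)  # 349.5
```

Using a half-open date range (`>=` start, `<` next month) avoids off-by-one errors at month boundaries.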

Alternatively, you can extract the relevant data and analyze it in an external tool such as Microsoft Excel, Tableau, or Power BI. Unstructured data, however, needs more advanced analytics tools. For example, Python, a high-level programming language, has libraries that help analyze unstructured data efficiently.

Metadata management

Managing large volumes of data is time-consuming and costly. However, data lakes simplify processing data through metadata management. Metadata is data about your data. For example, you can have a sales database in your data lake.

The database can contain several tables concerning sales information. It can have data regarding products, customers, inventory, purchases, etc. A data scientist may need such data to forecast sales for the coming year.

Since sales is not a data scientist’s domain, they may need help understanding several columns in a particular table. So they can refer to the table’s metadata to understand each column’s meaning.

The metadata can provide domain-specific knowledge, information about the creators, date of creation, recent updates, data formats, etc. Using metadata, a data scientist can write appropriate queries to select the correct columns for analysis.

Many data lake platforms can automate parts of metadata management. For example, they can automatically assign definitions to data according to a business glossary. Of course, you must first establish a standard glossary with all the relevant terms and their meanings. As the data lake ingests data, it can then apply the terms appropriately.
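A glossary-driven tagging step might look like the following sketch. The glossary terms, the `tag_columns` helper, and the catalog entry format are all hypothetical; the data catalogs that ship with data lake platforms have their own APIs.

```python
# Hypothetical business glossary: column name -> agreed business definition.
GLOSSARY = {
    "cust_id": "Unique identifier of a customer in the CRM",
    "sku": "Stock keeping unit: identifier of a product variant",
    "qty": "Number of units sold in the order line",
}


def tag_columns(table_name, columns, glossary):
    """Build a catalog entry for a newly ingested table.

    Each column gets its glossary definition; unknown columns are flagged
    so that someone can extend the glossary.
    """
    return {
        "table": table_name,
        "columns": {
            col: glossary.get(col, "UNDEFINED: add this term to the glossary")
            for col in columns
        },
    }


catalog_entry = tag_columns(
    "sales_orders", ["cust_id", "sku", "qty", "promo_code"], GLOSSARY
)
for col, definition in catalog_entry["columns"].items():
    print(f"{col}: {definition}")
```

Flagging unknown columns instead of silently skipping them keeps gaps in the glossary visible, which helps the data scientist in the example above know which columns still lack documentation.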

How do data lakes differ from data warehouses?

A data warehouse and a data lake are different storage platforms. A data warehouse typically stores only structured data organized according to a predefined schema, so unstructured data such as images or audio doesn’t fit into it.

For example, a data warehouse can contain several databases for specific lines of business. It can have finance, sales, and supply chain databases. Each database will have well-defined tables, and each table will have specified rows and columns.

The rigid structure of a data warehouse makes it suitable for creating standard reports. Business analysts can use it to provide business intelligence through intuitive visualizations. Also, the well-organized formats make data access easier to control.

Data lakes, by contrast, are harder to govern. Identifying relationships and managing access in a data lake often requires specialist expertise, because a data lake holds raw data from many sources in many formats.

On the plus side, data lakes don’t require a structured data format. Instead of transforming data on the way in, they apply a schema to raw data when it is read. Many data lake platforms also let you run SQL queries and ML or data science workloads directly on the stored data, which makes data lakes useful to data engineers, data scientists, and analysts alike.

Implementing changes is also easier in a data lake, because you don’t have to transform data to give it structure before storing it. Making changes in a data warehouse is more time-consuming, since you must transform data so that it conforms to the warehouse’s design constraints.

What can a data lake store?

As mentioned, a data lake can store relational data in table formats. It can also store non-relational data from mobile apps, videos, audio, IoT sensors, social media, and JSON or CSV files.

How is a data lake architected?

Data lakes can have several architectures. Depending on the requirements, a data lake architecture can combine several tools, technologies, services, and optimization layers to deliver data storage and processing.

That said, a typical data lake architecture includes a resource management facility, which ensures that sufficient resources are available for storage and processing.

Also, since many users access a data lake, an access management service is necessary. The service must provide the relevant tools to help the data administration team manage access.

In addition, a data lake architecture needs a metadata management layer to profile, catalog, and archive data. The process maintains data integrity and improves searchability.

Plus, it requires Extract, Load, and Transform (ELT) pipelines to help users extract data and load it into analytical platforms. Such pipelines define a set of automatic processes for bringing data into a usable format.
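The ELT order of operations can be sketched in a few lines of Python: extract records from a source, load them raw, and only then transform them inside the destination. This assumes an in-memory SQLite database as the destination and a made-up CSV export as the source; the table names and helper functions are hypothetical. Production pipelines use dedicated tools, but the sequence is the same.

```python
import csv
import io
import sqlite3

# Hypothetical source: a CSV export with messy values (extra spaces, mixed case, a gap).
SOURCE_CSV = """order_id,amount,country
1, 19.99 ,us
2,5.00,DE
3,,fr
"""


def extract(text):
    # Extract: read records from the source system as dictionaries of strings.
    return list(csv.DictReader(io.StringIO(text)))


def load_raw(conn, records):
    # Load: store the records untouched, every value kept as raw text.
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :amount, :country)", records
    )


def transform(conn):
    # Transform: clean and type the data inside the destination, after loading.
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT CAST(order_id AS INTEGER) AS order_id,
               CAST(TRIM(amount) AS REAL) AS amount,
               UPPER(TRIM(country)) AS country
        FROM raw_orders
        WHERE TRIM(amount) <> ''
        """
    )


conn = sqlite3.connect(":memory:")
load_raw(conn, extract(SOURCE_CSV))
transform(conn)
clean_rows = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(clean_rows)
```

Because the raw table is kept, the transformation can be rewritten and rerun later without going back to the source system, which is one reason data lakes favor ELT over transforming before loading.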

And, of course, it requires analytical tools, allowing users to gain valuable insights from processed data. The tools should have visualization features and support several development environments. They should also work with multiple programming languages for better usability.

Further, the architecture must have robust data lake security controls to protect data and ensure compliance. For example, they should let administrators encrypt sensitive data quickly. They must also make it easy to monitor data movements to detect data corruption.
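One common way to detect corruption when data moves is to compare checksums of the source and the copy. The sketch below assumes SHA-256 via Python’s standard `hashlib` module and a hypothetical `verify_transfer` helper; in practice the source checksum would usually be recorded as metadata alongside the data when it is written.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


def verify_transfer(source: bytes, destination: bytes) -> bool:
    """Return True if the copied bytes match the source, compared by digest."""
    return sha256_of(source) == sha256_of(destination)


original = b"order_id,amount\n1,19.99\n"
intact_copy = bytes(original)
corrupted_copy = original.replace(b"19.99", b"19.90")  # simulate a changed value

print(verify_transfer(original, intact_copy))     # True
print(verify_transfer(original, corrupted_copy))  # False
```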

Lastly, a comprehensive data governance framework must guide all data lake activities. It can consist of built-in checks within the platform or best practices that all teams follow. Such frameworks define how users store, create, share, and access data.

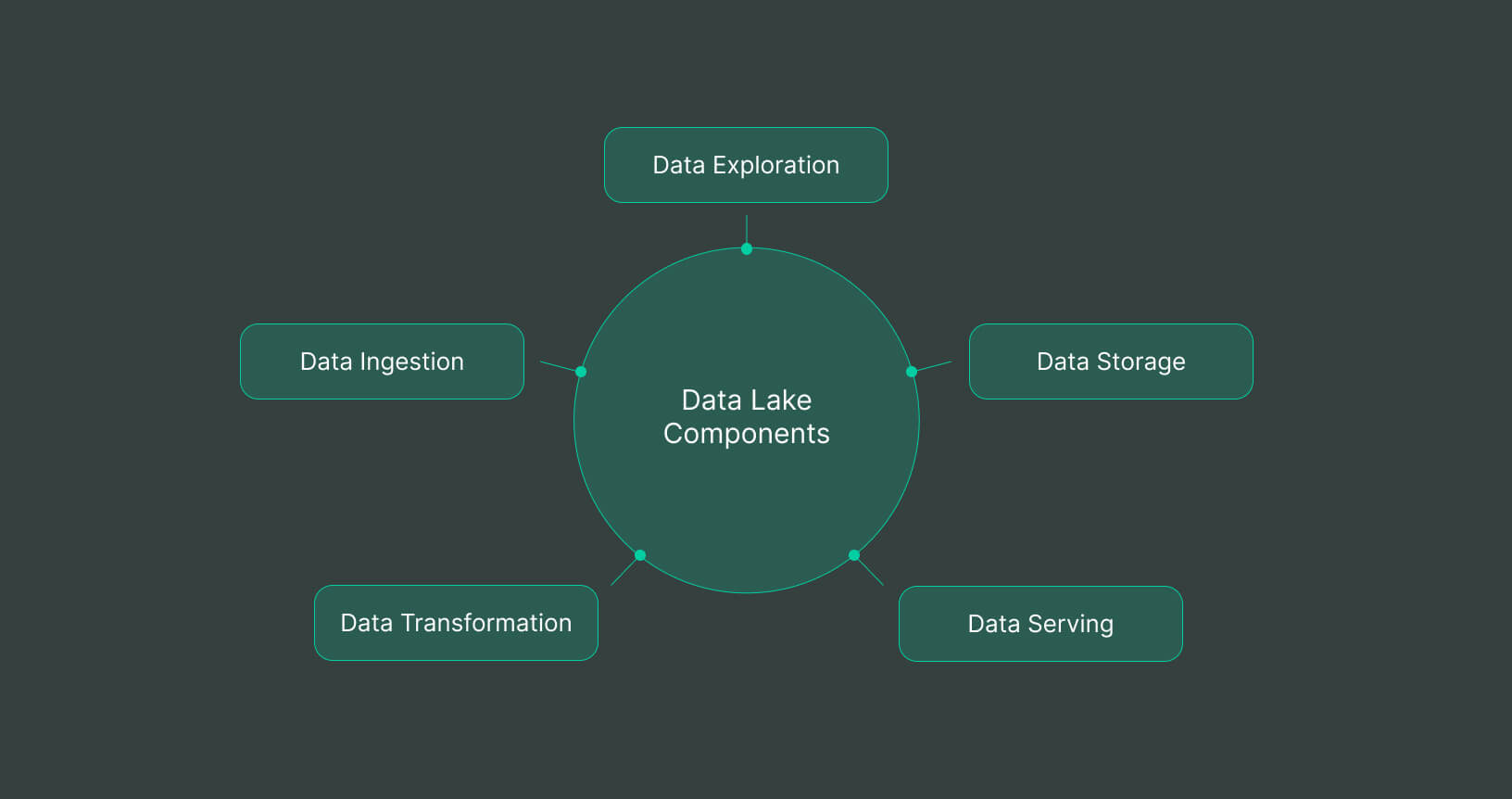

What are the key components of a data lake?

Based on the architecture described above, a data lake consists of five critical components: data ingestion, data storage, data transformation, data serving, and data exploration.

- Data ingestion: The ingestion component loads data from different sources into the data lake. Data ingestion can happen in real time or in batches. Real-time (streaming) ingestion continuously loads new data, while batch ingestion loads data at regular intervals.

- Data storage: The storage component retains raw data in several formats. As data lakes don’t require a predefined structure, storage is flexible and less costly.

- Data transformation: The data transformation component consists of pipelines that process data into usable formats. Data cleaning can also occur at this stage to make data structures consistent.

- Data serving: The serving component provides access to users. A data lake can be self-serving: users can search for relevant data assets and use them if granted access. Domain teams manage access themselves without relying on a central IT administrator. This saves time, as teams decide which members can access their data assets. It also increases data shareability and breaks down data silos, which leads to better collaboration.

- Data exploration: The data exploration component can include ELT pipelines and a querying interface. They allow users to query data easily and to work on separate analytical platforms.
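The difference between batch and streaming ingestion comes down to when data is written. In this minimal sketch, `LandingZone` is a hypothetical stand-in for the lake’s raw storage that records each write operation; batch ingestion writes groups of records, while streaming ingestion writes each record as soon as it arrives.

```python
from typing import Iterable, List


class LandingZone:
    """Hypothetical stand-in for raw lake storage; each write becomes one stored object."""

    def __init__(self) -> None:
        self.objects: List[List[dict]] = []

    def write(self, records: List[dict]) -> None:
        self.objects.append(list(records))


def ingest_batch(zone: LandingZone, records: List[dict], batch_size: int) -> None:
    # Batch: buffer records and write them in groups (e.g. every N records or on a schedule).
    for start in range(0, len(records), batch_size):
        zone.write(records[start:start + batch_size])


def ingest_stream(zone: LandingZone, source: Iterable[dict]) -> None:
    # Streaming: write each record as soon as it arrives.
    for record in source:
        zone.write([record])


readings = [{"sensor": "t1", "temp_c": 20 + i} for i in range(5)]
batch_zone, stream_zone = LandingZone(), LandingZone()
ingest_batch(batch_zone, readings, batch_size=2)
ingest_stream(stream_zone, iter(readings))
print(len(batch_zone.objects), len(stream_zone.objects))  # 3 5
```

Both approaches land the same five readings; streaming simply makes each one available sooner, at the cost of more write operations.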

How does a data lake work?

Many data lakes, especially in the cloud, store data using object storage. With object storage, data is stored as distinct units called objects. Each object comprises the data, its metadata, and a unique identifier (ID). The metadata contains essential information about each object, which makes finding data more straightforward.

Object storage is different from file and block storage methods. Under file storage, a system stores data in a hierarchical format. It consists of several folders and sub-folders. The system requires a complete path to find the relevant data.

In contrast, block storage splits data into fixed-size blocks. Each block is a distinct unit with its own address, and the system locates a block by that address on the storage volume rather than through folders.

Accessing a data file requires the system to find and combine all the relevant blocks, typically through a file system or database layered on top. Block storage offers fast, low-latency access, but it carries very little metadata, which makes it harder to search and to scale to the data volumes a data lake holds.

With object storage, you get virtually unlimited scalability. You can store data objects in a virtual bucket and keep adding data indefinitely. This high-scale storage is possible because of a flat address space.

A flat address space is similar to a social security number (SSN). No hierarchy exists, yet you can identify an individual using their SSN. With detailed metadata attached to each object, data search and retrieval are quick. This makes object storage well suited to storing large volumes of unstructured data.
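A toy object store in Python shows the flat address idea. The `ObjectStore` class is hypothetical and is not the API of any real service such as Amazon S3: every object sits at the same level under a unique ID, carries its own metadata, and can be found by that metadata rather than by a folder path.

```python
import uuid


class ObjectStore:
    """Toy object store with a flat namespace: objects are keyed only by a unique ID."""

    def __init__(self) -> None:
        self._objects = {}

    def put(self, data: bytes, **metadata) -> str:
        # Each object bundles the data, its metadata, and a generated unique ID.
        object_id = str(uuid.uuid4())
        self._objects[object_id] = {"data": data, "metadata": metadata}
        return object_id

    def get(self, object_id: str) -> bytes:
        # Direct lookup by ID: there is no folder path to walk.
        return self._objects[object_id]["data"]

    def find(self, **criteria) -> list:
        # Search by metadata instead of by location.
        return [
            oid
            for oid, obj in self._objects.items()
            if all(obj["metadata"].get(k) == v for k, v in criteria.items())
        ]


store = ObjectStore()
img_id = store.put(b"\x89PNG...", content_type="image/png", source="mobile_app")
log_id = store.put(b'{"event": "login"}', content_type="application/json", source="web")
print(store.find(source="mobile_app") == [img_id])  # True
```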

Who uses a data lake?

Data scientists use data lakes to perform predictive analytics and build robust ML models. Data lakes help create insightful features as they have extensive data available. ML models use these features or variables to predict a specific outcome.

Data analysts can use data lakes to create detailed ad-hoc business reports. The reports can help business managers better understand their company’s standing. Analysts can also set up real-time reports displaying relevant metrics, giving executives an up-to-date view of the company’s performance.

Financial organizations, tech giants, retailers, etc., use data lakes to improve operational efficiency. Netflix, First United Bank, NASDAQ, Walmart, and Uber are some big names that use data lakes.

What is a data lake used for? Use cases

Multi-channel marketing

Data lakes allow marketers to use diverse data from several sources to understand customer pain points.

For example, they can collect and analyze reviews on social media to understand where customers face problems. The analysis will help them improve product and service features.

They can use customer data for personalized marketing. For instance, they can send customized emails based on consumer feedback and tailor their offers to each customer’s needs more effectively.

Healthcare

Healthcare professionals can use data lakes to collect and analyze a patient’s historical data. It will help them provide better diagnoses and identify the root cause of a particular disease.

For example, data lakes can store images from X-rays, CT scans, MRIs, etc., so that analytical models can detect anomalies quickly. Healthcare organizations can also predict costs more accurately using data on treatment expenses.

In addition, doctors can collect real-time data from wearables to track a patient’s vitals. Based on such data, sophisticated models can predict a patient’s recovery and intervention time. It would also help doctors recommend better regimens for healthy patient outcomes.

Finance

Financial institutions commonly use structured data, such as historical movements in stock prices, interest rates, macroeconomic indicators, etc.

But they can collect extensive economic, political, and financial data with data lakes. For example, they can capture the public’s sentiments against a political regime using social media. ML models can predict movements in stock prices using such information.

Banks can use data lakes to assess the creditworthiness of potential borrowers more accurately. For example, they can measure default risk by examining a borrower’s historical financial and general socio-economic data.

Importance of data lake for businesses

Businesses today must leverage the power of big data to fuel significant company decisions. Without efficient data management, companies can quickly lose out to competitors. A data lake is a primary tool allowing organizations to extract insights from big data.

In particular, a data lake can give a 360-degree view of customers. For example, it can combine customer relationship management (CRM) platform data with data from other sources, such as mobile apps and social media. Together, these sources help businesses better understand customer behavior.

Plus, data can show inefficiencies in specific business operations. For example, digital supply chain data from several sources can quickly show high-cost components. Organizations can act proactively to eliminate such bottlenecks and increase profits.

A data lake can enable companies to benefit from ML and AI. They can use a vast amount of data to build reliable ML models. Such models can have several use cases. For example, they can predict customer buying patterns, economic downturns, supply chain disruptions, etc. The information will help executives make better decisions.

Types of data lakes

Data lakes are commonly grouped into four types: enterprise data lakes, cloud data lakes, Hadoop data lakes, and real-time data lakes.

Enterprise data lake

An enterprise data lake (EDL) stores organizational data such as costs, inventory, and information about the organization’s customers, suppliers, employees, shareholders, etc. Any data lake that serves data and facilitates data sharing across an organization can be an EDL.

Cloud data lake

An EDL can be a cloud data lake. As discussed, a third party owns and controls the cloud data lake architecture. Cloud data lakes mainly comprise several data storage servers in different locations. For example, Microsoft’s Azure and Amazon’s AWS provide cloud data services.

Hadoop data lake

Hadoop data lakes use the Hadoop framework to store, process, and analyze big data. Hadoop is an open-source platform that uses clusters to manage data. The clusters consist of several computers or nodes that can perform data operations in parallel.

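Fundamentally, the clusters store data in the Hadoop Distributed File System (HDFS). HDFS runs on low-cost commodity hardware, splits files into blocks, and distributes those blocks, with replicas, across the nodes. Processing frameworks such as MapReduce can then work on the blocks in parallel, which speeds up data processing by spreading the workload across the cluster.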

Hadoop also features a resource manager called Yet Another Resource Negotiator (YARN). YARN’s job is to optimize resource allocation and monitor cluster nodes.
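The split-and-process-in-parallel idea behind Hadoop can be illustrated with a toy MapReduce-style word count. This is plain Python, not Hadoop’s API: a list of text chunks stands in for HDFS blocks, and a thread pool stands in for cluster nodes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(text: str, lines_per_block: int) -> list:
    """Mimic HDFS-style splitting: break the input into chunks different nodes can hold."""
    lines = text.splitlines()
    return ["\n".join(lines[i:i + lines_per_block]) for i in range(0, len(lines), lines_per_block)]


def map_block(block: str) -> Counter:
    # Map step: each "node" counts words in its own block only.
    return Counter(block.lower().split())


def reduce_counts(partials) -> Counter:
    # Reduce step: merge the partial counts into one result.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


text = "data lake\nlake house\ndata warehouse\ndata lake"
blocks = split_into_blocks(text, lines_per_block=2)
with ThreadPoolExecutor(max_workers=len(blocks)) as pool:  # threads stand in for nodes
    partial_counts = list(pool.map(map_block, blocks))
word_counts = reduce_counts(partial_counts)
print(word_counts["data"], word_counts["lake"])  # 3 3
```

In a real cluster, the scheduler (YARN, in Hadoop’s case) decides which node runs each map task, ideally on a node that already holds the block.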

Real-time data lake

A real-time data lake ingests data continuously from different sources. Since a data lake is schema-less, you can ingest data in any format from any source.

Real-time ingestion matters because many endpoints generate data constantly. For example, IoT sensors, websites, and consumer apps produce vast amounts of data every second.

What are data lake platforms?

Several data lake platforms provide big data processing features. Popular options include AWS, Microsoft Azure, Google Cloud, Oracle, and the open-source Apache Hadoop. Many of them support open-source technologies, and other vendors can build their own solutions on top of them.

Of course, such third-party solutions offer limited customizability, as you can’t change their underlying architecture, and there’s a risk of vendor lock-in. However, they benefit organizations that lack the resources to build a data lake from scratch.

Pros and cons of data lake

Data lakes are not a panacea. Like any technology, they have both advantages and disadvantages.

| Advantages | Disadvantages |
| --- | --- |
| Flexible and scalable | Complex access management |
| Enables ML and data science | Maintenance is costly |
| Data shareability | Data swamp |

Advantages

- Flexible and scalable: Data lakes are flexible because the schema-on-read approach lets them store data in any format, and the object storage method lets them scale easily.

- Enables ML and data science: ML models require extensive data to perform well. Data lakes are an excellent data source because they hold vast amounts of raw data. The data is also diverse, since it comes from many different sources.

- Data shareability: With a self-service architecture, data lakes facilitate team collaboration. The in-depth metadata in data lakes gives different teams relevant domain knowledge. It helps them understand what others are working on and keep their work consistent.

Disadvantages

- Complex access management: The disparate nature of data in data lakes makes access management challenging. Identifying relationships between different data entities is complex.

- Maintenance is costly: Since data lakes ingest vast amounts of data, maintaining them takes time and effort. To ensure data quality, you need complex pipelines to sift through all this data. Real-time data lakes can be especially tricky to manage.

- Data swamp: A data lake can become a data swamp without proper governance, making data discovery and analysis problematic. Organizations must follow strict data governance protocols to avoid swamps. However, getting all teams to do so takes time and effort.

How does DoubleCloud help your business?

Organizations today need a robust solution to store data and perform data analytics. Data lakes can serve as an all-in-one solution. However, they can be overkill for businesses that mainly work with structured data.

Organizations working mainly with structured data can use a database management system (DBMS) instead. ClickHouse is one such open-source, column-oriented DBMS. It’s a popular platform for real-time analytical reporting using SQL.

However, setting up and managing ClickHouse yourself can be challenging. That’s where DoubleCloud helps. With DoubleCloud, you can set up ClickHouse clusters in minutes, and the platform manages backups and monitors operations automatically.

DoubleCloud frees you from scheduling updates, creating replicas, and configuring sharding. The platform manages all such services in the background. Also, it can transfer old data to AWS S3 automatically.

In short, DoubleCloud takes care of all the routine tasks for database management. It allows you to focus more on analytics. And it helps you benefit from ClickHouse’s fast processing speeds within S3.

Try DoubleCloud’s Managed ClickHouse service now to get maximum business value from your data assets.

Final words

As data volume, velocity, and variability increase, a data lake will become a crucial tool for many businesses. It can ingest structured and unstructured data from several sources, such as mobile apps, IoT devices, the web, etc. With a schema-on-read architecture, data lakes let you store raw data easily.

With object storage, data lakes are highly scalable. They also provide superior analytical capabilities. Data scientists can quickly perform predictive analytics and build robust ML models. Such flexibility makes a data lake usable in many industries.

However, managing access in data lakes is challenging. And because they hold large volumes of data from many sources, data lakes can quickly become swamps. With strong governance procedures, though, companies can avoid such issues.

Frequently asked questions (FAQ)

What is the difference between a data lake and a data warehouse?

The primary difference is how they store data. Data lakes can store data in any format. Data warehouses have a rigid design. Users must transform raw data into a particular format to store it in a warehouse.

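Is a data lake in the cloud or on-premises?

It can be either. A data lake can run on on-premises servers, which give a company complete control and ownership, or in the cloud, where a third-party provider such as AWS or Microsoft Azure manages the infrastructure.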

What are the benefits of using a data lake in my organization’s data management strategy?

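A data lake can store data in any format and scale as data grows. It gives data scientists the large, diverse datasets that ML models need, supports self-service data sharing across teams, and can combine sources such as CRM, mobile app, and social media data into a 360-degree view of customers.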

What are some benefits of using a data lake for data storage and analysis?

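For storage, a data lake keeps raw structured, semi-structured, and unstructured data in one place, typically on scalable object storage. For analysis, its schema-on-read approach lets analysts and data scientists apply the schema they need at query time, which suits ad-hoc analysis, real-time reporting, and ML.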
