Unifying real-time data processing: Kafka, Spark, and ClickHouse

Written by: Amos Gutman, DoubleCloud Senior Solution Architect

In the era of big data, many organizations face the challenge of managing and analyzing massive amounts of data in real time. To address this challenge, several powerful tools have emerged, including Apache Kafka, Apache Spark, and ClickHouse. These technologies offer unique capabilities and can be combined to create a comprehensive and efficient data processing pipeline. In this blog post, we will explore how Kafka, Spark, and ClickHouse fit together, what their respective strengths are, and where they compete in the data processing landscape.

Kafka: The event streaming platform

Apache Kafka is a distributed event streaming platform that provides a high-throughput, fault-tolerant, and scalable solution for real-time data streaming. It acts as a durable publish-subscribe system that allows data to be published by producers and consumed by multiple subscribers.

Kafka’s key strength lies in its ability to handle high data volumes and ensure reliable message delivery, making it an excellent choice for building real-time data pipelines.

Why would you choose Kafka, and why wouldn’t you?

To get started, I think it’s better to understand when not to use Kafka. It seems that today people often add components to the enterprise architecture just for the fun of it, which makes things more complex. Maybe it’s done for personal reasons, like technical development or job security.

Overall, the idea of Kafka is to hold messages and pass them from one place to another.

That sounds like a good fit for almost any system designed today, especially microservice-based designs. However, there are cases where Kafka might not be the most suitable option. For instance, if you have a small-scale application with low data volumes and simple communication requirements, introducing Kafka could be overkill.

If the application primarily follows a request-response model, where services interact synchronously and don’t require the scalability or fault-tolerance features of Kafka, a simpler messaging system like RabbitMQ or a lightweight HTTP-based communication approach may be more appropriate.

For example, imagine you have a monolithic application where different components communicate with each other in a tightly coupled manner, and the data volume is relatively low. In such a scenario, introducing Kafka as a messaging layer might add unnecessary complexity and overhead. The additional infrastructure, operational costs, and maintenance effort associated with running a Kafka cluster might outweigh the benefits, especially if the application doesn’t require real-time processing or data streaming capabilities.

In one widely discussed article, Amazon’s own Prime Video team described moving a service from a microservice architecture to a monolithic application to improve performance and reduce complexity.

So… when do we want to use Kafka?

I’d say the first sign is the need to have multiple different “Publishers” and/or “Consumers”.

Given the examples above, once we add more “players” to the system, we need to start tracking who got which message, whether each of them is up to date with the latest data, and how far back we need to keep records so they can “recover” or “complete” their processing.

These are great signs of the need for a middle layer to decouple the components and provide room for flexibility — this is exactly where Kafka would fit in.
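
To make that bookkeeping concrete, here is a toy, in-memory sketch of the idea Kafka is built around: an append-only log in which every consumer group tracks its own offset, so each reader can be at a different point and catch up independently. The class and method names (ToyEventLog, publish, poll, lag) are hypothetical and are not the Kafka client API; real Kafka adds partitions, replication, durable storage, and time- or size-based retention.

```python
from collections import defaultdict


class ToyEventLog:
    """A toy, in-memory stand-in for one Kafka partition (hypothetical, illustration only)."""

    def __init__(self, retention: int):
        self.retention = retention          # keep at most this many records (size-based retention)
        self.records = []                   # (offset, message) pairs, oldest first
        self.next_offset = 0                # offset the next published message will get
        self.committed = defaultdict(int)   # consumer group -> next offset it will read

    def publish(self, message):
        """Append a message to the end of the log."""
        self.records.append((self.next_offset, message))
        self.next_offset += 1
        # Drop the oldest records once retention is exceeded.
        if len(self.records) > self.retention:
            self.records = self.records[-self.retention:]

    def poll(self, group, max_records=10):
        """Return the next unread messages for `group` and commit its new offset.

        If the group's offset points at records already dropped by retention,
        reading resumes from the oldest record still kept.
        """
        start = self.committed[group]
        batch = [(off, msg) for off, msg in self.records if off >= start][:max_records]
        if batch:
            self.committed[group] = batch[-1][0] + 1
        return [msg for _, msg in batch]

    def lag(self, group):
        """Number of messages published after the group's committed offset."""
        return self.next_offset - self.committed[group]


log = ToyEventLog(retention=1000)
for i in range(5):
    log.publish(f"order-{i}")
print(log.poll("billing"))                       # ['order-0', 'order-1', 'order-2', 'order-3', 'order-4']
print(log.poll("analytics", max_records=2))      # ['order-0', 'order-1']
print(log.lag("billing"), log.lag("analytics"))  # 0 3
```

The two consumer groups read the same log without interfering with each other, and retention decides how far back a lagging consumer can still recover.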

Kafka provides us with a scalable, fault-tolerant, “real-time” message retention layer. The key aspect is the combination of these properties.

For example, if we just needed a scalable, fault-tolerant place to store data for other services to read from, we could use any S3-like object storage, and it would be way cheaper. But as soon as we need a guarantee that readers get the latest data with low latency (and we are talking milliseconds here), we need something else, and that’s where Kafka comes in.

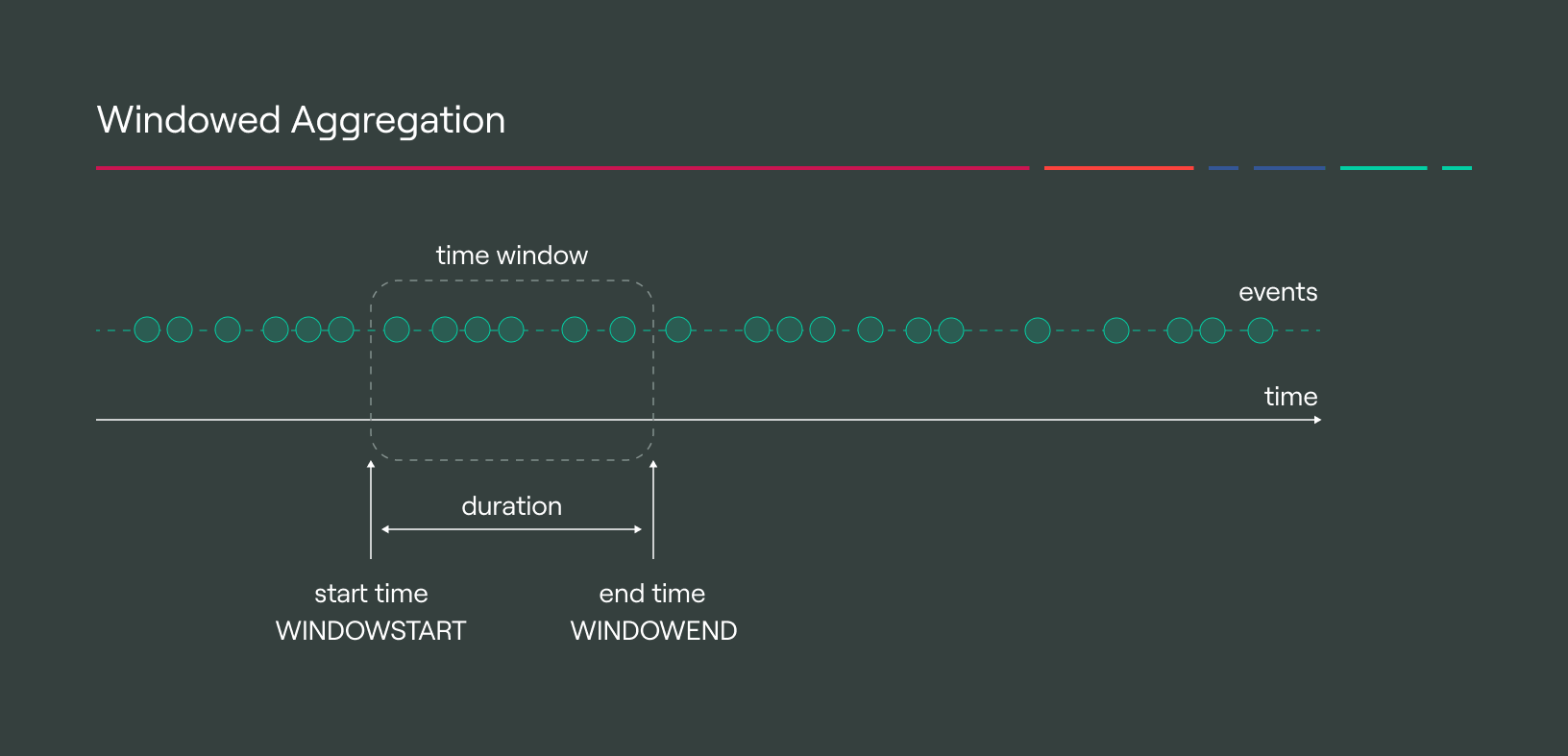

Usually, people add some inline processing to provide quick insights, such as “how many events were processed in the last hour” or “in the last 5 minutes”.
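
A minimal sketch of such an inline metric, assuming events arrive in timestamp order: keep the timestamps of recent events in a deque and evict those that fall out of the window before counting. The class name and parameters are illustrative only, not part of Kafka or any stream-processing library.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts events whose timestamp falls in the last `window_seconds`.

    Assumes events are added in timestamp order (a simplification).
    """

    def __init__(self, window_seconds: float):
        self.window_seconds = window_seconds
        self.timestamps = deque()  # event timestamps, oldest on the left

    def add(self, event_time: float) -> None:
        self.timestamps.append(event_time)

    def count(self, now: float) -> int:
        # Evict events that are older than the window, then count what is left.
        cutoff = now - self.window_seconds
        while self.timestamps and self.timestamps[0] <= cutoff:
            self.timestamps.popleft()
        return len(self.timestamps)


last_5_min = SlidingWindowCounter(window_seconds=300)
for t in (0, 60, 120, 299, 301):
    last_5_min.add(t)
print(last_5_min.count(now=301))  # 4: the event at t=0 has expired
print(last_5_min.count(now=400))  # 3: the events at t=0 and t=60 have expired
```

Using window_seconds=3600 gives the hourly figure instead. Note that the state here is every event inside the window, which is exactly what starts to hurt as volumes grow.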


Things get complex when you need to aggregate for more complex queries, like the count of unique clients that sent messages in the last X minutes. That sounds simple enough, but when the data grows to, let’s say, billions of customers, we need to keep tabs on each one for every window frame we define, and memory requirements start to get expensive. Getting a single large VM might sound like an easy quick win, but we get into trouble as soon as we need to update it or, god forbid, handle an unplanned outage.
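
The following sketch (plain Python, hypothetical function name) shows why the unique-clients query is harder: counting distinct clients exactly means remembering every client ID seen in every open window, so the state grows with the number of distinct clients rather than staying a single counter. It uses fixed, non-overlapping (tumbling) windows for simplicity.

```python
from collections import defaultdict


def unique_clients_per_window(events, window_seconds):
    """Exact number of distinct clients per tumbling window.

    `events` is an iterable of (timestamp_seconds, client_id) pairs. The state is
    one set of client IDs per window, so memory grows with the number of windows
    kept open times the number of distinct clients in each.
    """
    clients_by_window = defaultdict(set)  # window start -> client IDs seen in that window
    for ts, client_id in events:
        window_start = int(ts // window_seconds) * window_seconds
        clients_by_window[window_start].add(client_id)
    return {start: len(ids) for start, ids in sorted(clients_by_window.items())}


events = [(0, "a"), (10, "b"), (20, "a"), (65, "a"), (70, "c")]
print(unique_clients_per_window(events, window_seconds=60))  # {0: 2, 60: 2}
```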

This is where Spark entered the “Stream Processing” world.

Introducing Spark

Spark is designed for general-purpose data processing, including batch processing, stream processing, and machine learning.

Spark introduced stream processing around version 0.7 and went through major changes and improvements along the way, most notably the Structured Streaming API introduced in Spark 2.0, with further improvements in version 3.

The basic need behind Spark was to process large amounts of data using multiple machines. At the time of its creation, data usually stayed within a database, typically a single cluster. But the concept of “big data” and the Hadoop ecosystem, with its cheap storage and good overall throughput, opened the door to offloading analytical workloads from the databases that served applications’ day-to-day operations, so that complex analytics could run on separate machines.

Again, the concept of layers and separation repeats here, as with Kafka.

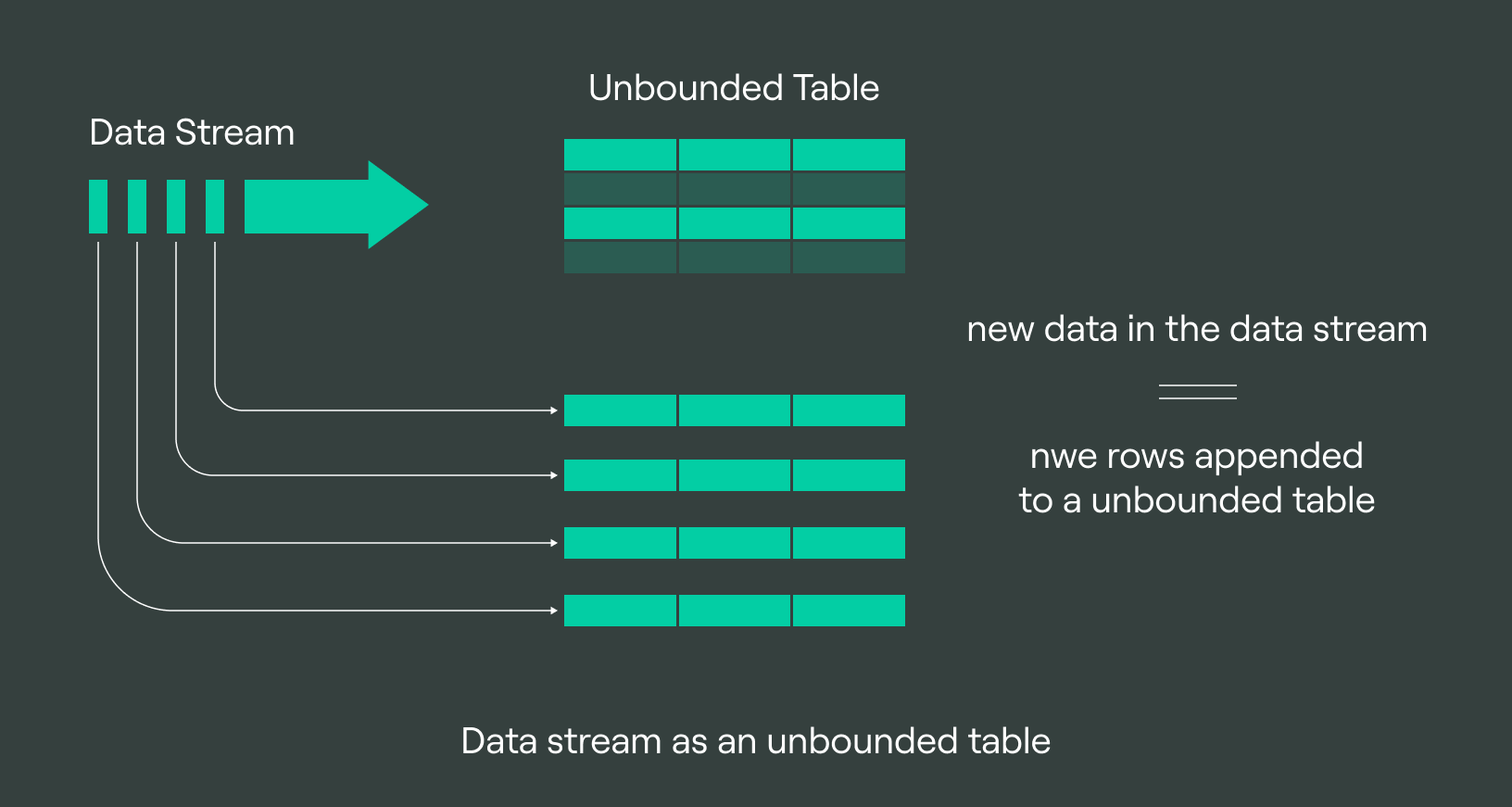

Spark Streaming essentially treated the stream as a batch that never ends, processed as a sequence of small micro-batches.
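
Here is a simplified sketch of that micro-batch model, assuming batches are cut by a fixed number of events (Spark Streaming actually cuts batches by a time interval): an endless stream is sliced into small batches, ordinary batch logic runs on each one, and running totals are carried from batch to batch. The function names are hypothetical and not Spark APIs.

```python
from itertools import islice
from typing import Dict, Iterable, Iterator, List


def micro_batches(stream: Iterable, batch_size: int) -> Iterator[List]:
    """Cut a (possibly endless) stream into consecutive batches of `batch_size` items."""
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:  # the source is exhausted
            return
        yield batch


def running_counts(stream: Iterable[str], batch_size: int) -> Iterator[Dict[str, int]]:
    """Run ordinary batch logic on each micro-batch and carry the totals forward."""
    totals: Dict[str, int] = {}
    for batch in micro_batches(stream, batch_size):
        for key in batch:  # plain batch processing of this slice of the stream
            totals[key] = totals.get(key, 0) + 1
        yield dict(totals)  # emit an updated result after every batch


for snapshot in running_counts(["a", "b", "a", "c", "a"], batch_size=2):
    print(snapshot)
# {'a': 1, 'b': 1}
# {'a': 2, 'b': 1, 'c': 1}
# {'a': 3, 'b': 1, 'c': 1}
```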

At that time, Spark, Kafka, and the rest of the “big data” ecosystem mainly grew out of the Apache Software Foundation, and things fit together nicely, especially for on-premises deployments.

As the cloud gained momentum, more tools and competitors entered the market and each went its own way, but these two remained dominant.

So what do we have now?

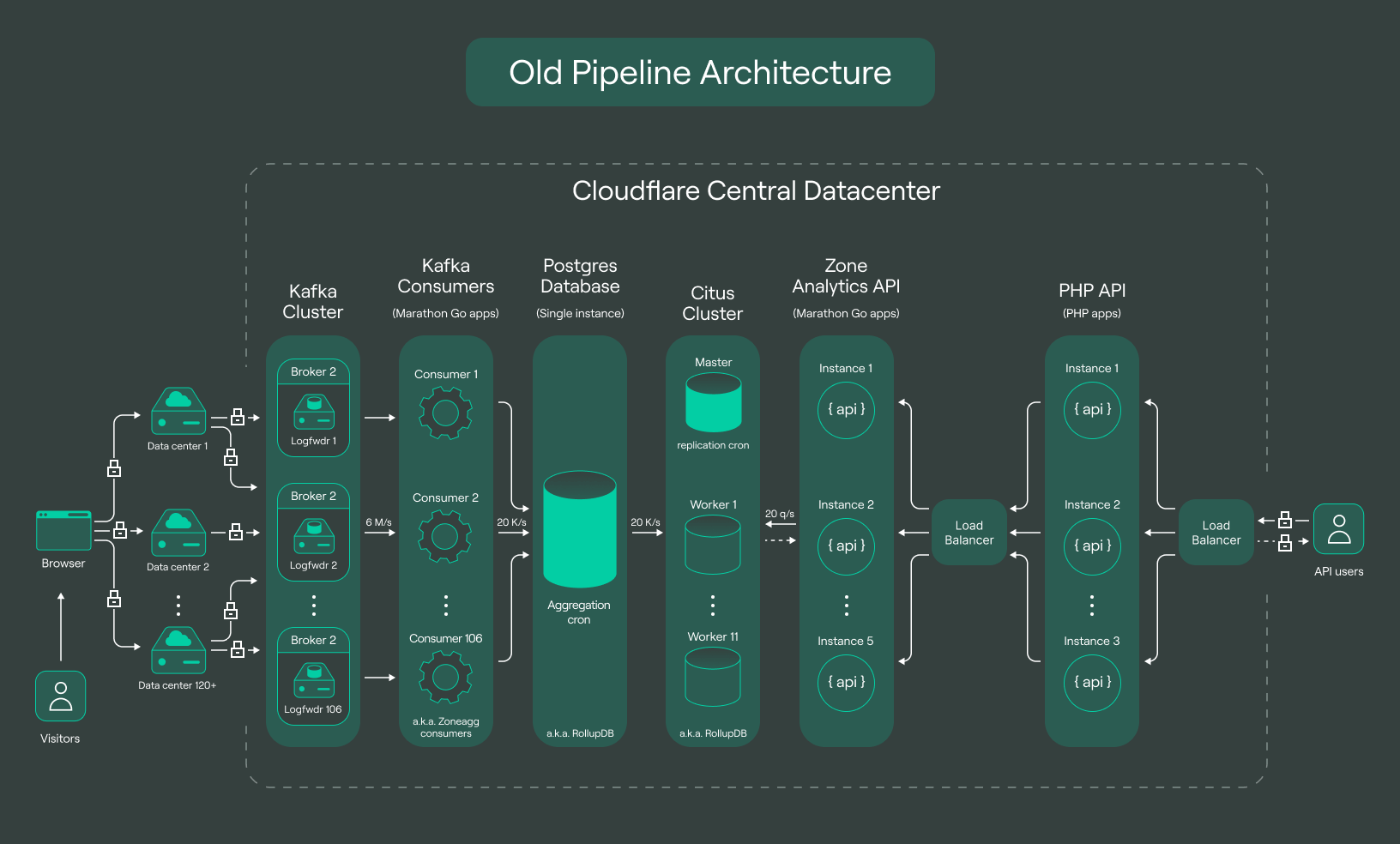

A bunch of machines used as a Kafka cluster to receive messages from different services, users, and devices, and a bunch of machines running Spark to sort and aggregate the data and write it in a new format that’s easier to query later.

Often, Spark is used to write to another database for analytical purposes, such as Snowflake, Redshift, or even Postgres.
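
In this architecture the aggregation happens in Spark, and the analytical database only stores finished results. The sketch below illustrates that hand-off with Python’s built-in sqlite3 as a stand-in for Snowflake, Redshift, or Postgres; the page_views table, its columns, and the write_window_counts helper are hypothetical, and a real Spark job would use its own connectors instead.

```python
import sqlite3


def write_window_counts(conn: sqlite3.Connection, window_counts: dict) -> None:
    """Add one micro-batch's per-window page-view counts to an analytical table.

    `window_counts` maps (window_start, page) -> views computed by the
    streaming job; the database only stores the finished numbers.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS page_views ("
        " window_start INTEGER, page TEXT, views INTEGER NOT NULL,"
        " PRIMARY KEY (window_start, page))"
    )
    rows = [(w, p, c) for (w, p), c in window_counts.items()]
    # Create missing rows first, then add this batch's counts to them.
    conn.executemany(
        "INSERT OR IGNORE INTO page_views (window_start, page, views) VALUES (?, ?, 0)",
        [(w, p) for w, p, _ in rows],
    )
    conn.executemany(
        "UPDATE page_views SET views = views + ? WHERE window_start = ? AND page = ?",
        [(c, w, p) for w, p, c in rows],
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
write_window_counts(conn, {(0, "/home"): 3, (0, "/pricing"): 1})  # first micro-batch
write_window_counts(conn, {(0, "/home"): 2})                      # a later micro-batch
print(conn.execute("SELECT page, views FROM page_views ORDER BY page").fetchall())
# [('/home', 5), ('/pricing', 1)]
```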

Keep in mind that Spark is what’s often called a stateless analytical engine: unlike, say, databases, or even Kafka (which is de facto an event log, a time-based database), it doesn’t really “remember” things on its own. This comes with some inherent design limitations, which over the years were mitigated to an extent by the improvements in the Spark versions we mentioned.

This is where we meet ClickHouse.

ClickHouse: The clickstream analytical warehouse

ClickHouse is an open-source columnar database management system optimized for real-time analytics.

ClickHouse was designed with a columnar storage engine that supports fast, low-latency ingestion and achieves good compression because data is stored column by column.
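
To illustrate why storing data by column helps compression, the sketch below pivots a few made-up rows into columns and run-length encodes them: values within a single column tend to repeat, so runs collapse well. This is only an illustration of the principle; ClickHouse’s actual codecs (LZ4 by default, with alternatives such as ZSTD and specialized codecs) work differently, and the helper names here are hypothetical.

```python
from itertools import groupby


def to_columns(rows, column_names):
    """Pivot row-oriented records into one list of values per column."""
    return {name: [row[i] for row in rows] for i, name in enumerate(column_names)}


def run_length_encode(values):
    """Collapse runs of identical adjacent values into (value, run_length) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


rows = [
    ("2024-05-01", "US", 3),
    ("2024-05-01", "US", 5),
    ("2024-05-01", "DE", 2),
    ("2024-05-02", "DE", 7),
]
columns = to_columns(rows, ["day", "country", "clicks"])
print(run_length_encode(columns["day"]))      # [('2024-05-01', 3), ('2024-05-02', 1)]
print(run_length_encode(columns["country"]))  # [('US', 2), ('DE', 2)]
```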

ClickHouse is built to be distributed, which allows for horizontal scalability. Its primary strength lies in its ability to provide ad-hoc analytics on large datasets in real time, making it a popular choice for analytical workloads.

ClickHouse was initially designed for the specific use case of reading data from a streaming source and maintaining the “state” of counts or sums like those in our earlier example, e.g. the number of unique visitors seen in the stream or the number of clicks on a link.
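
The sketch below illustrates the idea of maintaining such state incrementally: each chunk of the stream is folded into a small partial aggregate (a click count and a set of visitor IDs), and partial aggregates can later be merged without rereading the raw events. ClickHouse exposes a similar idea through aggregate function states (the -State and -Merge combinators, typically stored in an AggregatingMergeTree table); the ClickState class here is hypothetical and uses an exact set, whereas ClickHouse’s uniq function is approximate.

```python
from dataclasses import dataclass, field


@dataclass
class ClickState:
    """Partial aggregate for one link: click count plus the set of visitors seen."""
    clicks: int = 0
    visitors: set = field(default_factory=set)

    def add(self, visitor_id: str) -> None:
        """Fold one raw event into the state."""
        self.clicks += 1
        self.visitors.add(visitor_id)

    def merge(self, other: "ClickState") -> "ClickState":
        """Combine two partial states without touching the raw events again."""
        return ClickState(self.clicks + other.clicks, self.visitors | other.visitors)

    def unique_visitors(self) -> int:
        return len(self.visitors)


# Two chunks of the stream are aggregated independently...
part_1, part_2 = ClickState(), ClickState()
for visitor in ["u1", "u2", "u1"]:
    part_1.add(visitor)
for visitor in ["u2", "u3"]:
    part_2.add(visitor)

# ...and later merged into the final answer.
total = part_1.merge(part_2)
print(total.clicks, total.unique_visitors())  # 5 3
```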

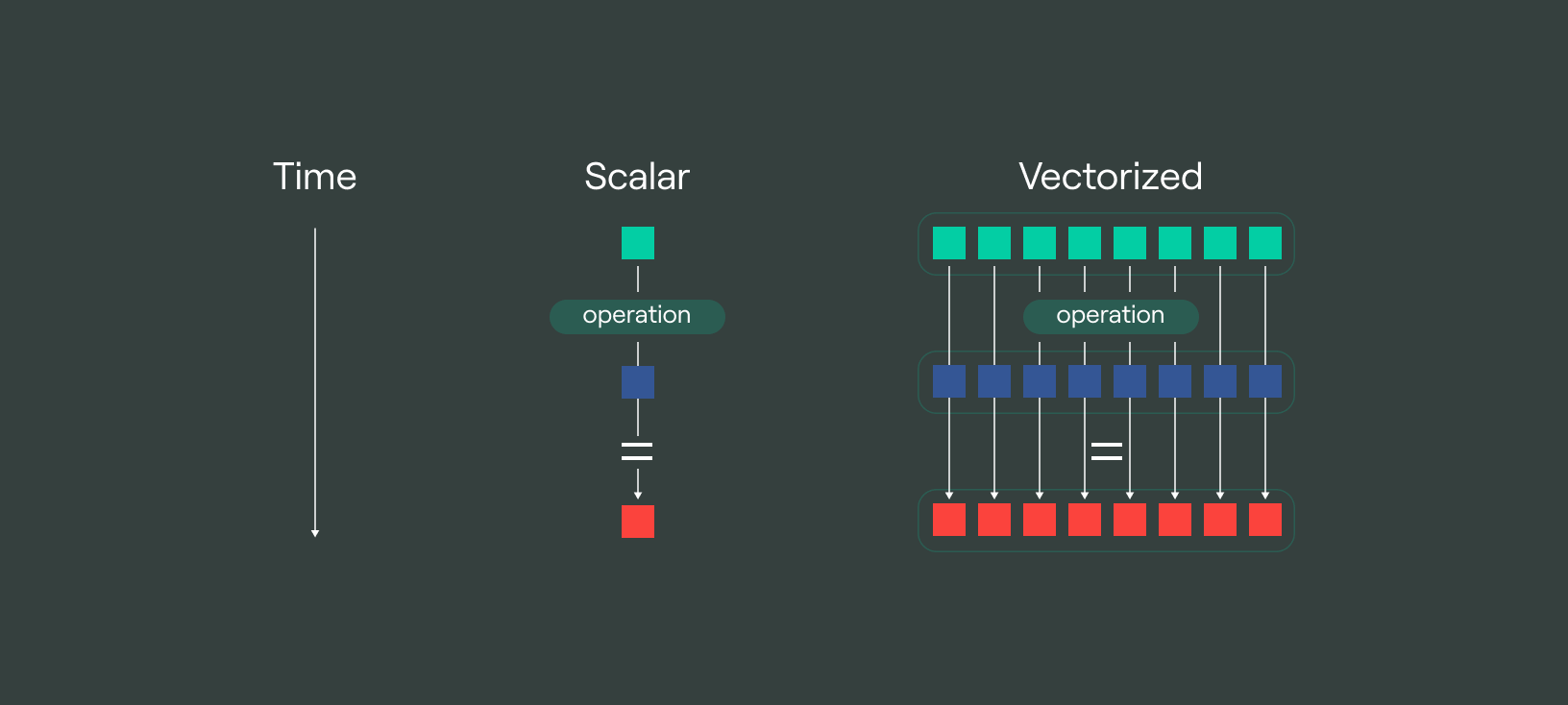

One of the first differences between Spark and ClickHouse is that ClickHouse is a database: it was designed to store state, i.e. to remember what happened X minutes ago. Another difference is the implementation language. ClickHouse is written in C++, an efficient, low-latency language that can readily use advanced low-level CPU instructions to gain large performance improvements, especially for aggregation-type processing, whereas Spark runs on the JVM.


As such, ClickHouse can “consume” data from Kafka at very low latency while keeping track of all the intermediate aggregations we need to answer our business questions. At the same time, it gives us an analytical database for running SQL queries over both the large-scale raw data and the aggregations we define, all within milliseconds of the moment the data is sent to our systems.

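
As a rough illustration of this flow: in ClickHouse it is usually built from a table using the Kafka table engine, a materialized view that fires on every inserted block, and a target table such as a SummingMergeTree or AggregatingMergeTree. Since that needs running Kafka and ClickHouse instances, the sketch below only emulates the pattern with Python’s built-in sqlite3, where a trigger stands in for the materialized view. All table and function names are hypothetical, and the details differ from ClickHouse (for example, SummingMergeTree collapses rows during background merges rather than on each insert).

```python
import sqlite3


def make_pipeline() -> sqlite3.Connection:
    """Raw table + insert trigger + aggregate table, mimicking a stream-fed materialized view."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Raw events exactly as they arrive from the stream.
        CREATE TABLE clicks_raw (ts INTEGER, link TEXT, visitor TEXT);

        -- Pre-aggregated table that dashboards and queries read from.
        CREATE TABLE clicks_per_link (link TEXT PRIMARY KEY, clicks INTEGER NOT NULL);

        -- Like an insert-triggered materialized view: each new raw row
        -- updates the aggregate as part of the same insert.
        CREATE TRIGGER clicks_mv AFTER INSERT ON clicks_raw
        BEGIN
            INSERT OR IGNORE INTO clicks_per_link (link, clicks) VALUES (NEW.link, 0);
            UPDATE clicks_per_link SET clicks = clicks + 1 WHERE link = NEW.link;
        END;
        """
    )
    return conn


def consume(conn: sqlite3.Connection, messages) -> None:
    """Insert a batch of (ts, link, visitor) messages polled from the stream."""
    conn.executemany("INSERT INTO clicks_raw (ts, link, visitor) VALUES (?, ?, ?)", messages)
    conn.commit()


conn = make_pipeline()
consume(conn, [(1, "/a", "u1"), (2, "/a", "u2"), (3, "/b", "u1")])
print(conn.execute("SELECT link, clicks FROM clicks_per_link ORDER BY link").fetchall())
# [('/a', 2), ('/b', 1)]
```

The point of the pattern is that the aggregate is maintained by the database at insert time, so a query reads an already-computed result instead of scanning the raw events.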

Summary

As a closing remark, what used to be the “magic pair” of Kafka and Spark might no longer be the undisputed king of the hill, and there may be alternative, more modern ways to handle the advanced business needs of today’s evolving data world. Sometimes we want, or even need, access to data as soon as it is created, as fast as possible, to outpace the competition and provide the “instant gratification” customers crave, like posting on a social media site and immediately seeing view statistics from your audience. I think we can all relate to how disappointing it would be to see those results only hours, days, or weeks after things happened.

DoubleCloud provides an easy and cost-effective way for customers to deploy Kafka and ClickHouse.

ClickHouse® is a trademark of ClickHouse, Inc. https://clickhouse.com
