What we do in the shadows: Scaling log management with managed services

Written by: Valery Denisov, DoubleCloud Senior Security Engineer

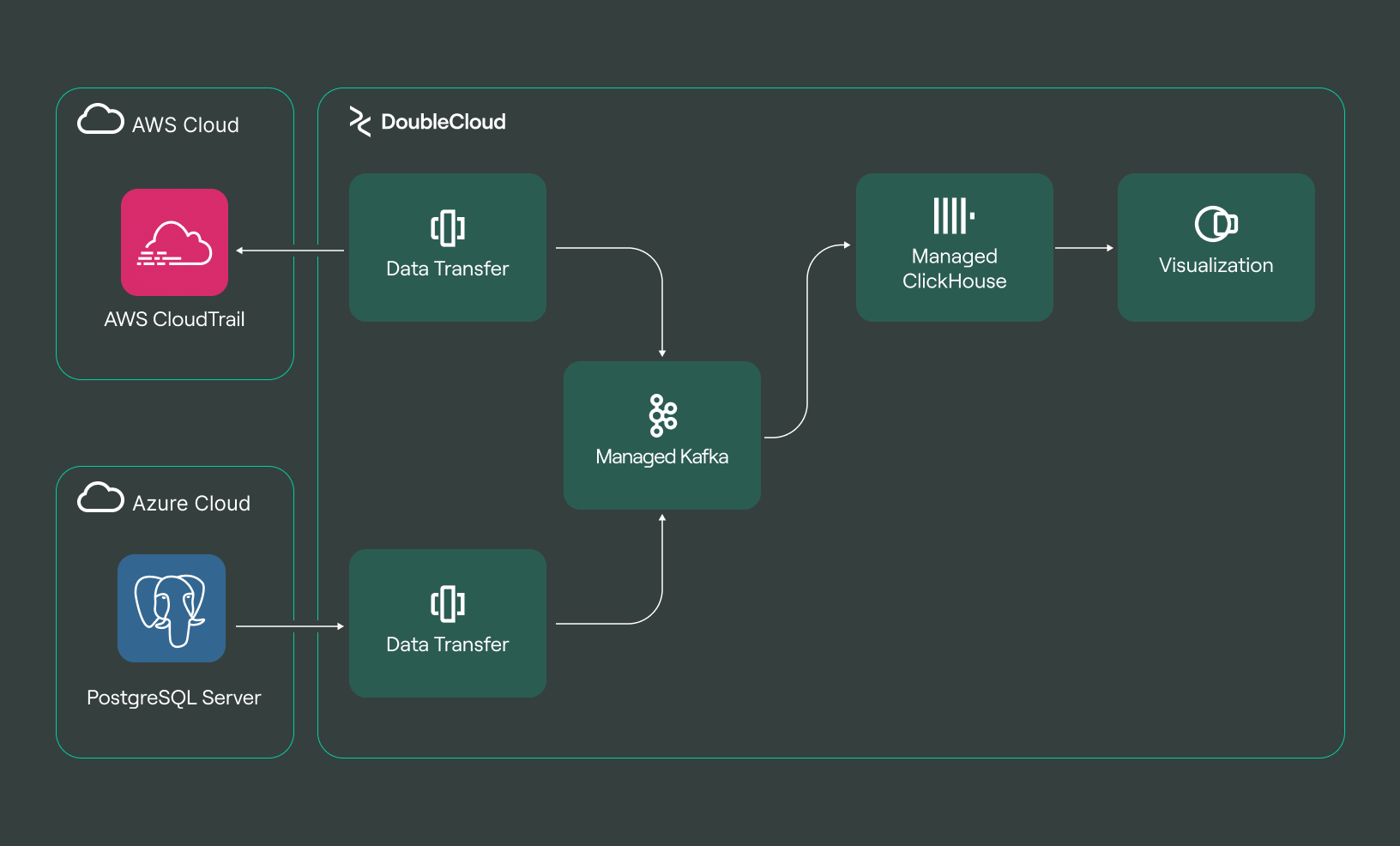

Consider a company whose core business is transferring, storing, processing, and visualizing customer data. For such a company, strict security controls are a necessity rather than a preference: they protect the integrity of the infrastructure and keep it compliant. One byproduct of these controls is a large and growing volume of logs. Searching that data, especially when looking back several weeks or more, can get expensive. That raises a practical question: is there a more cost-effective way to manage and analyze these logs?

Logs are the breadcrumbs security teams follow. They record events such as the creation of an EC2 instance, the unexpected appearance of a security group, or the disappearance of a seldom-used IAM role. During a security breach or a routine audit, the team needs to establish when each of these actions happened, why, and who performed it.

A common setup splits log management across two kinds of tools: one for short-term log analysis and incident response, and another for long-term aggregation, trend visualization, and search. Systems commonly used for these purposes excel at quickly analyzing many log sources with automatic parsing, and they offer a full feature set for incident response and for detecting and preventing malicious activity. Their weak point is cost: the price of processing and storing logs rises steeply as data volumes grow and logs age. Standard market pricing models reflect this, and retaining data for longer periods quickly becomes expensive.

Investigations often need logs that are several months old; six months can already count as ‘ancient’ in this context. Older logs usually have to be rehydrated or retrieved from archival storage such as cloud object storage, and by then the data is often measured in terabytes, which makes both processing power and cost a real challenge.

Even a modest setup generates a surprising amount of data: an empty Amazon EKS cluster alone can produce more than 2 GB of audit logs per day. Keeping those logs is a compliance requirement, not just a technical one.
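To put that figure in perspective, the arithmetic below projects how much raw audit data one such cluster accumulates over a six-month look-back window. The 2 GB/day rate comes from the text above; the 10x compression ratio is a hypothetical placeholder, since real ratios depend heavily on the data and the codec.

```python
def retained_volume_gb(gb_per_day: float, days: int) -> float:
    """Raw log volume accumulated over a retention window, in GB."""
    return gb_per_day * days


def stored_volume_gb(raw_gb: float, compression_ratio: float) -> float:
    """On-disk volume after compression; the ratio itself is an assumption."""
    if compression_ratio <= 0:
        raise ValueError("compression_ratio must be positive")
    return raw_gb / compression_ratio


# One empty EKS cluster at ~2 GB/day of audit logs, kept for six months (~180 days).
raw_gb = retained_volume_gb(2, 180)
print(f"raw: {raw_gb:.0f} GB")  # raw: 360 GB

# Hypothetical 10x compression ratio, for illustration only.
print(f"stored: {stored_volume_gb(raw_gb, 10):.0f} GB")  # stored: 36 GB
```

Multiplied across clusters and accounts, numbers like these quickly reach the terabyte scale described earlier.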

Feature-rich log management platforms such as Datadog are available, but their cost-effectiveness drops for heavier workloads. Building dashboards that track events over long periods, for example six months of AWS CloudTrail events, can become notably expensive on these widely used platforms. That is a reason to look for alternatives that balance functionality with cost efficiency.

Elasticsearch often comes up as one such alternative. It does meet many of these requirements, but scaling it is both a financial and a logistical burden: keeping a large cluster efficient, including managing shards and recovering from occasional shard failures, takes substantial resources. Teams without extensive engineering support cannot always afford that level of maintenance.

This leads to the question: “How can we cut costs while maintaining efficiency in log management?” One workable answer is to combine open-source tools with managed services. The combination addresses both scalability and cost while preserving the operational efficiency the work requires. Striking this balance between functionality, cost, and effort matters most for teams with tight budgets and limited engineering resources.

DoubleCloud’s own log management setup illustrates the approach. It stores and analyzes logs in managed ClickHouse, moves them there with its Data Transfer service, and charts them with its Visualization service, handling substantial log volumes on a modest budget. ClickHouse’s efficient data compression is a large part of why this works, and it shows how open-source tools, properly managed, can significantly improve log management. The rest of this article walks through that setup as a practical example of combining open-source technology with managed services.
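Why do log columns compress so well? In a columnar store each field is kept contiguously, so long runs of similar values sit next to each other, which is exactly what general-purpose compressors exploit. The sketch below demonstrates the effect on a synthetic column of CloudTrail eventSource values using Python’s zlib as a stand-in; ClickHouse itself uses LZ4 by default and offers ZSTD as an option. The column is deliberately repetitive, so real-world ratios will be lower.

```python
import zlib

# Hypothetical eventSource column: a few distinct values repeated many times,
# as is typical for log fields. Real data is far less regular.
sources = ["iam.amazonaws.com", "ec2.amazonaws.com", "s3.amazonaws.com", "sts.amazonaws.com"]
column = "\n".join(sources[i % len(sources)] for i in range(10_000)).encode("utf-8")

# zlib is only a stand-in for ClickHouse's codecs (LZ4 by default, ZSTD optional).
compressed = zlib.compress(column, 6)
ratio = len(column) / len(compressed)
print(f"{len(column)} bytes -> {len(compressed)} bytes ({ratio:.0f}x)")
```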

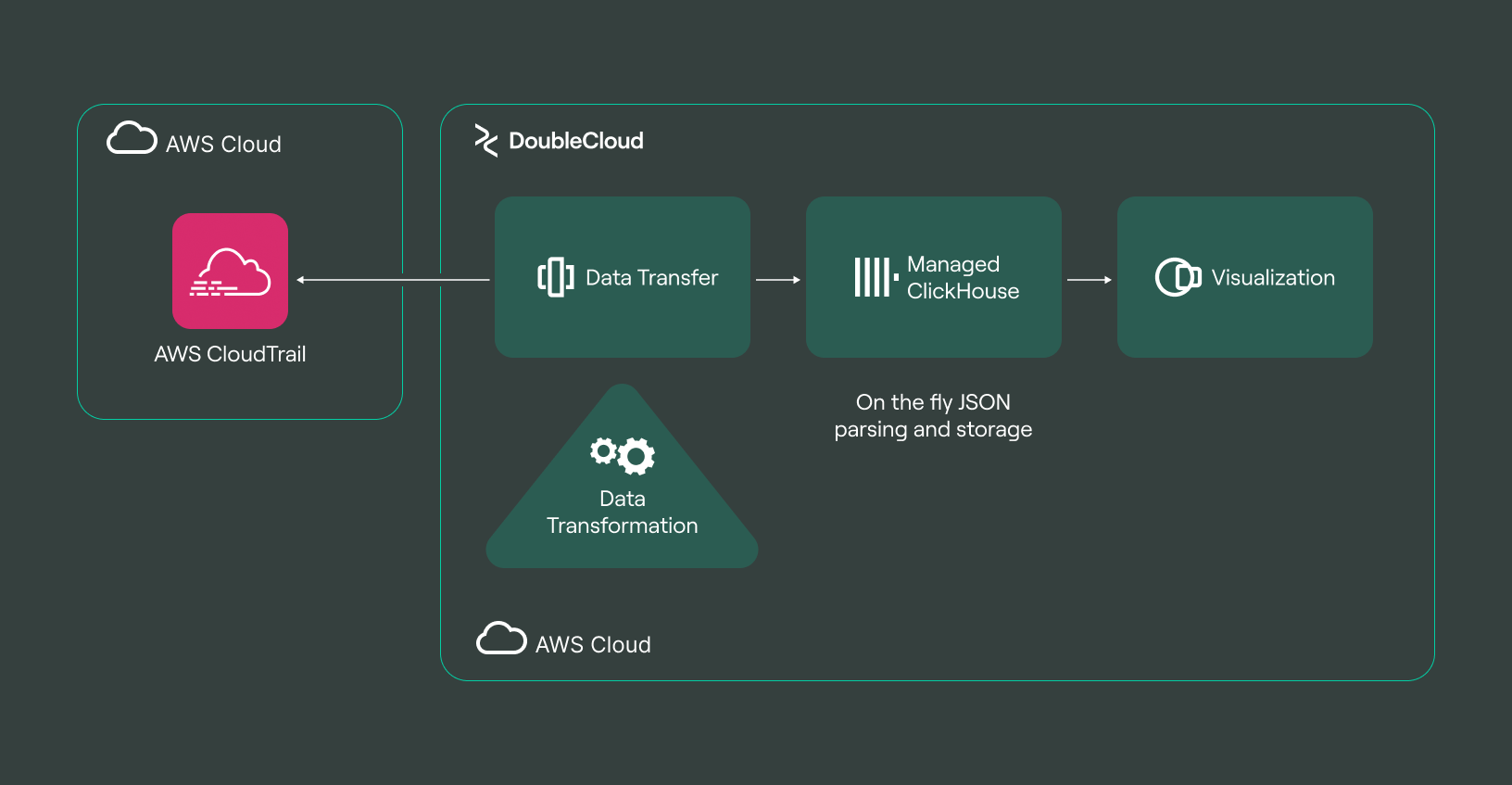

This section walks step by step through a cost-efficient way to aggregate and visualize organization-wide AWS CloudTrail logs, with the goal of keeping operational costs low.

<Diagram: AWS CloudTrail → Data Transfer → ClickHouse → Charts>

Step 1: Creating the ClickHouse cluster

The foundation of the log management system is a ClickHouse storage cluster. It is set up following infrastructure-as-code (IaC) principles, which keeps every step reviewable and repeatable, and each IaC element is reviewed from a security standpoint.

Terraform stands out as the tool of choice for implementation due to its reliability and versatility.

Authentication to DoubleCloud uses a service account and its authorized key, stored in the authorized_key.json file that the provider configuration reads; the AWS provider authenticates with a named profile.

To provide a clearer picture, here’s an excerpt from the provider.tf file, illustrating the initial setup:

    # Terraform configuration for DoubleCloud and AWS integration
    terraform {
      required_providers {
        doublecloud = {
          source = "registry.terraform.io/doublecloud/doublecloud"
        }
        aws = {
          source = "hashicorp/aws"
        }
      }
    }

    # DoubleCloud provider setup
    provider "doublecloud" {
      endpoint       = "api.double.cloud:443"
      authorized_key = file("authorized_key.json") # service account key; keep it out of version control
    }

    # AWS provider setup
    provider "aws" {
      profile = var.profile
    }

With the provider configuration in place, the next step is to define the ClickHouse cluster. A key decision in this process is determining the location of the cluster.

<Diagram: BYOC vs. DoubleCloud Managed Infrastructure>

The choice is between DoubleCloud’s managed infrastructure and deploying into one’s own cloud account (Bring Your Own Cloud, BYOC). A BYOC deployment requires defining a network and setting up peering with the managed infrastructure provider.
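AWS VPC peering does not work between networks whose CIDR blocks overlap, so before settling on the BYOC network range it is worth checking it against the VPC it will be peered with. The sketch below does that with Python’s standard ipaddress module; 10.10.0.0/16 is the range used in the module that follows, and the infrastructure CIDR is a hypothetical placeholder.

```python
import ipaddress


def cidrs_overlap(a: str, b: str) -> bool:
    """Return True if two CIDR blocks share any addresses."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


byoc_cidr = "10.10.0.0/16"  # the ipv4_cidr used for the BYOC module in this article
infra_cidr = "10.0.0.0/16"  # hypothetical CIDR of the existing VPC to be peered

if cidrs_overlap(byoc_cidr, infra_cidr):
    raise SystemExit(f"{byoc_cidr} overlaps {infra_cidr}; choose a different range")
print(f"{byoc_cidr} and {infra_cidr} do not overlap")
```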

The following is an illustration of how a BYOC module might be defined in Terraform:

    # Referenced by the module below: the AWS account the provider is authenticated to
    data "aws_caller_identity" "self" {}

    module "byoc" {
      source  = "doublecloud/doublecloud-byoc/aws"
      version = "1.0.3"

      doublecloud_controlplane_account_id = data.aws_caller_identity.self.account_id
      ipv4_cidr                           = "10.10.0.0/16"
    }

    resource "doublecloud_clickhouse_cluster" "dwh" {
      project_id  = var.project_id
      name        = "dwh"
      region_id   = "eu-central-1"
      cloud_type  = "aws"
      network_id  = doublecloud_network.network.id # doublecloud_network resource defined elsewhere (not shown)
      description = "Main DWH Cluster"

      resources {
        clickhouse {
          resource_preset_id = "s1-c2-m4"
          disk_size          = 51539607552         # 48 GiB, in bytes
          replica_count      = var.is_prod ? 3 : 1 # for prod it's better to have more than 1 replica
          shard_count        = 1
        }
      }

      config {
        log_level      = "LOG_LEVEL_INFO"
        text_log_level = "LOG_LEVEL_INFO"
      }

      access {
        data_services = ["transfer", "visualization"]
        ipv4_cidr_blocks = [{
          value       = data.aws_vpc.infra.cidr_block # peered VPC; aws_vpc data source not shown
          description = "peered-net"
        }]
      }
    }

    data "doublecloud_clickhouse" "dwh" {
      name       = doublecloud_clickhouse_cluster.dwh.name
      project_id = var.project_id
    }

This configuration creates the ClickHouse cluster with the s1-c2-m4 resource preset and a 48 GiB disk, runs three replicas in production (one otherwise) for high availability, and limits access to DoubleCloud’s Transfer and Visualization services plus the CIDR block of the peered VPC. Teams that prefer a graphical setup can configure the same cluster through the DoubleCloud web interface instead of Terraform.
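The disk_size value is expressed in bytes, which is hard to review at a glance. The small helper below (hypothetical, not part of the provider) converts in both directions and confirms that 51539607552 bytes is exactly 48 GiB.

```python
GIB = 1024 ** 3  # bytes in one gibibyte


def gib_to_bytes(gib: int) -> int:
    """Convert GiB to the byte count expected by disk_size."""
    return gib * GIB


def bytes_to_gib(size: int) -> float:
    """Convert a disk_size value back to GiB for review."""
    return size / GIB


print(bytes_to_gib(51539607552))  # 48.0
print(gib_to_bytes(100))          # 107374182400
```

Terraform can also compute the value itself: writing disk_size = 48 * 1024 * 1024 * 1024 in the resource yields the same number and makes the intended size obvious.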

Step 2: Loading logs with data transfer service

With the ClickHouse cluster running, the next step is to load organization-wide CloudTrail logs into it. This starts with a Data Transfer endpoint that targets the ClickHouse cluster.

The following is the Terraform configuration used to create the data transfer endpoint:

    resource "doublecloud_transfer_endpoint" "dwh_target" {
      name       = "dwh-target"
      project_id = var.project_id

      settings {
        clickhouse_target {
          connection {
            address {
              cluster_id = doublecloud_clickhouse_cluster.dwh.id
            }
            database = "default"
            user     = data.doublecloud_clickhouse.dwh.connection_info.user
            password = data.doublecloud_clickhouse.dwh.connection_info.password
          }
        }
      }
    }

The endpoint identifies the cluster by its ID, writes into the default database, and takes the user name and password from the doublecloud_clickhouse data source instead of hardcoding them. This gives the CloudTrail logs a secure path into the ClickHouse data warehouse, where analysis and visualization can start.

With the target in place, the next step is the source side: an endpoint that connects the Data Transfer service to the AWS CloudTrail API.

The following is the Terraform configuration used for setting up the AWS CloudTrail source endpoint:

    resource "doublecloud_transfer_endpoint" "aws_cloudtrail_source" {
      name       = "example-cloudtrail-source" # replace with your desired name
      project_id = var.project_id              # your DoubleCloud project ID variable

      settings {
        aws_cloudtrail_source {
          key_id      = var.aws_cloudtrail_key_id     # access key ID of an IAM user that can read CloudTrail
          secret_key  = var.aws_cloudtrail_secret_key # matching secret access key; never hardcode it
          region_name = var.aws_region                # AWS region of CloudTrail
          start_date  = var.cloudtrail_start_date     # start date from terraform.tfvars, format YYYY-MM-DD
        }
      }
    }

Security best practices matter here. Credentials such as the access key ID and secret key should never be hardcoded in Terraform files. Pass them in through variables, ideally declared with sensitive = true so Terraform redacts them from plan and apply output, or pull them from a secrets vault. Values passed this way are still written to the Terraform state, so the state backend must be protected as well.
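A common way to keep the keys out of files altogether is to supply them as environment variables, since Terraform reads any input variable X from an environment variable named TF_VAR_X. The hypothetical pre-flight check below, meant to run before terraform plan, confirms that both credential variables used by the CloudTrail endpoint are set and that the start date matches the YYYY-MM-DD format noted in its configuration. It is a sketch, not part of DoubleCloud’s or Terraform’s tooling.

```python
import os
from datetime import datetime

# Secret variables used by the CloudTrail source endpoint. Terraform reads a
# variable named <name> from an environment variable called TF_VAR_<name>.
SECRET_VARS = ["aws_cloudtrail_key_id", "aws_cloudtrail_secret_key"]


def missing_tf_vars(names, env=None):
    """Return the names that have no non-empty TF_VAR_<name> in the environment."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(f"TF_VAR_{name}")]


def is_valid_start_date(value: str) -> bool:
    """Check the YYYY-MM-DD format expected for cloudtrail_start_date."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True


# Example with a placeholder environment instead of real credentials.
example_env = {"TF_VAR_aws_cloudtrail_key_id": "placeholder"}
print(missing_tf_vars(SECRET_VARS, example_env))  # ['aws_cloudtrail_secret_key']
print(is_valid_start_date("2024-01-15"), is_valid_start_date("15/01/2024"))  # True False
```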

This endpoint gives the Data Transfer service a dedicated path for reading CloudTrail logs. Configured carefully, it moves the log data accurately and securely, ready for analysis and visualization.

The last piece links the source (AWS CloudTrail) to the target (the ClickHouse cluster), much like connecting the dots in a game. Creating this link is what starts the actual data transfer.

The following is the Terraform script used to link the source and target:

    resource "doublecloud_transfer" "cloudtrail2ch" {
      name       = "cloudtrail2ch"
      project_id = var.project_id
      source     = doublecloud_transfer_endpoint.aws_cloudtrail_source.id
      target     = doublecloud_transfer_endpoint.dwh_target.id
      type       = "SNAPSHOT_AND_INCREMENT"
      activated  = true
    }

This resource bridges the CloudTrail source and the ClickHouse target. As the SNAPSHOT_AND_INCREMENT type indicates, the transfer first copies the events already available and then keeps replicating new ones. Once it is activated, data flows from CloudTrail into the ClickHouse cluster and log analysis can begin.
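Conceptually, a snapshot-plus-increment transfer copies everything that already exists once, then repeatedly copies only what is newer than the last position it reached. The toy model below illustrates that pattern with an in-memory list and a timestamp cursor. It is an illustration of the general idea under simplifying assumptions, not DoubleCloud’s implementation: it ignores late-arriving events (an event stamped at or before the cursor would be skipped), retries, and deduplication.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: str
    event_time: int  # hypothetical epoch seconds


def sync(source, target, cursor=None):
    """Copy events newer than cursor from source to target; return the new cursor.

    cursor=None means "no position yet", so the first call is the full snapshot.
    """
    new = sorted((e for e in source if cursor is None or e.event_time > cursor),
                 key=lambda e: e.event_time)
    target.extend(new)
    return new[-1].event_time if new else cursor


source = [Event("a", 100), Event("b", 200)]
target = []

cursor = sync(source, target)          # snapshot: copies a and b
source.append(Event("c", 300))         # a new event arrives later
cursor = sync(source, target, cursor)  # increment: copies only c

print([e.event_id for e in target], cursor)  # ['a', 'b', 'c'] 300
```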

More Terraform examples covering other configurations are available in the DoubleCloud GitHub repository at DoubleCloud Terraform Provider Examples. Connecting a GitHub account is also recommended for easier access and updates.

Step 3: Unleashing the power of data visualization

With the CloudTrail data securely stored in ClickHouse, the next phase is visualization. Before building charts, though, it is important to understand how the data is organized.

ClickHouse stores data in columns, and the table written by the transfer (default.management_events) keeps each complete CloudTrail event as a JSON object in a single column, CloudTrailEvent. That layout is efficient for storage but awkward for direct analysis and visualization.

The first task is therefore to break these JSON objects into structured columns. Here that is done with a materialized view that acts as the parser: ClickHouse runs the view’s SELECT on every block of rows inserted into default.management_events and writes the extracted, typed fields into the view’s own MergeTree table.
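The idea behind the parser can be shown in a few lines: walk the nested event and turn each leaf path into a flat column name. The sketch below does this in Python with an underscore as the separator on an abbreviated, made-up event. The materialized view does the equivalent in SQL, although it chooses its column names by hand (for example sessionIssuer_type rather than the full path) and keeps some sub-objects as raw JSON.

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts into {"a_b_c": value}; lists are kept as JSON text."""
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}_{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)
        else:
            flat[name] = value
    return flat


# Abbreviated, illustrative CloudTrail-style event (values are made up).
raw = """{
  "eventTime": "2024-01-15T10:00:00Z",
  "eventName": "DeleteRole",
  "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
  "resources": [{"ARN": "arn:aws:iam::123456789012:role/old-role"}]
}"""

for column, value in flatten(json.loads(raw)).items():
    print(column, "=", value)
```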

Once the raw events are structured, they can be turned into clear, informative charts, and large volumes of raw data become insights that are easy to read and act on.

    -- Nested JSON values are addressed with one argument per level ('userIdentity', 'type'),
    -- not with a dotted path; array elements use 1-based integer indices.
    CREATE MATERIALIZED VIEW default.parsed_data_materialized_view
    (
        `http_useragent` Nullable(String),
        `error_message` Nullable(String),
        `error_kind` Nullable(String),
        `network_client_ip` Nullable(String),
        `eventVersion` Nullable(String),
        `eventTime` DateTime,
        `eventSource` Nullable(String),
        `eventName` Nullable(String),
        `awsRegion` Nullable(String),
        `sourceIPAddress` Nullable(IPv4),
        `userAgent` Nullable(String),
        `requestID` Nullable(String),
        `eventID` Nullable(String),
        `readOnly` Nullable(UInt8),
        `eventType` Nullable(String),
        `managementEvent` Nullable(UInt8),
        `recipientAccountId` Nullable(String),
        `account` Nullable(String),
        `userIdentity_type` Nullable(String),
        `userIdentity_principalId` Nullable(String),
        `userIdentity_arn` Nullable(String),
        `userIdentity_accountId` Nullable(String),
        `userIdentity_accessKeyId` Nullable(String),
        `sessionIssuer_type` Nullable(String),
        `sessionIssuer_principalId` Nullable(String),
        `sessionIssuer_arn` Nullable(String),
        `sessionIssuer_accountId` Nullable(String),
        `sessionIssuer_userName` Nullable(String),
        `federatedProvider` Nullable(String),
        `attributes_creationDate` Nullable(String),
        `attributes_mfaAuthenticated` Nullable(UInt8),
        `requestParameters_commandId` Nullable(String),
        `requestParameters_instanceId` Nullable(String),
        `tlsDetails_tlsVersion` Nullable(String),
        `tlsDetails_cipherSuite` Nullable(String),
        `tlsDetails_clientProvidedHostHeader` Nullable(String),
        `responseElements` Nullable(String),
        `webIdFederationData_attributes` Nullable(String),
        `attributes_mfaAuthenticated_string` Nullable(String),
        `requestParameters_durationSeconds` Nullable(Int64),
        `requestParameters_externalId` Nullable(String),
        `responseElements_credentials_accessKeyId` Nullable(String),
        `responseElements_credentials_sessionToken` Nullable(String),
        `responseElements_credentials_expiration` Nullable(String),
        `responseElements_assumedRoleUser_assumedRoleId` Nullable(String),
        `responseElements_assumedRoleUser_arn` Nullable(String),
        `resources` Nullable(String),
        `userIdentity_sessionContext` Nullable(String),
        `requestParameters_agentVersion` Nullable(String),
        `requestParameters_agentStatus` Nullable(String),
        `requestParameters_platformType` Nullable(String),
        `requestParameters_platformName` Nullable(String),
        `requestParameters_platformVersion` Nullable(String),
        `requestParameters_iPAddress` Nullable(String),
        `requestParameters_computerName` Nullable(String),
        `requestParameters_agentName` Nullable(String),
        `requestParameters_availabilityZone` Nullable(String),
        `requestParameters_availabilityZoneId` Nullable(String),
        `tlsDetails` Nullable(String),
        `requestParameters_bucketName` Nullable(String),
        `requestParameters_Host` Nullable(String),
        `requestParameters_acl` Nullable(String),
        `additionalEventData` Nullable(String),
        `userIdentity_invokedBy` Nullable(String),
        `requestParameters_encryptionAlgorithm` Nullable(String),
        `requestParameters_encryptionContext` Nullable(String),
        `resources_ARN` Nullable(String),
        `requestParameters_roleArn` Nullable(String),
        `requestParameters_roleSessionName` Nullable(String),
        `sharedEventID` Nullable(String),
        `resources_accountId` Nullable(String),
        `resources_type` Nullable(String),
        `eventCategory` Nullable(String),
        `requestParameters` Nullable(String),
        `userIdentity` Nullable(String)
    )
    ENGINE = MergeTree
    ORDER BY eventTime
    SETTINGS index_granularity = 8192 AS
    SELECT
        JSONExtractString(CloudTrailEvent, 'http_request', 'user_agent') AS http_useragent,
        JSONExtractString(CloudTrailEvent, 'errorMessage') AS error_message,
        JSONExtractString(CloudTrailEvent, 'errorCode') AS error_kind,
        JSONExtractString(CloudTrailEvent, 'sourceIPAddress') AS network_client_ip,
        JSONExtractString(CloudTrailEvent, 'eventVersion') AS eventVersion,
        parseDateTimeBestEffort(ifNull(JSONExtractString(CloudTrailEvent, 'eventTime'), '')) AS eventTime,
        JSONExtractString(CloudTrailEvent, 'eventSource') AS eventSource,
        JSONExtractString(CloudTrailEvent, 'eventName') AS eventName,
        JSONExtractString(CloudTrailEvent, 'awsRegion') AS awsRegion,
        -- service DNS names (e.g. ec2.amazonaws.com) and IPv6 addresses become NULL instead of failing the insert
        toIPv4OrNull(JSONExtractString(CloudTrailEvent, 'sourceIPAddress')) AS sourceIPAddress,
        JSONExtractString(CloudTrailEvent, 'userAgent') AS userAgent,
        JSONExtractString(CloudTrailEvent, 'requestID') AS requestID,
        JSONExtractString(CloudTrailEvent, 'eventID') AS eventID,
        JSONExtractBool(CloudTrailEvent, 'readOnly') AS readOnly,
        JSONExtractString(CloudTrailEvent, 'eventType') AS eventType,
        JSONExtractBool(CloudTrailEvent, 'managementEvent') AS managementEvent,
        JSONExtractString(CloudTrailEvent, 'recipientAccountId') AS recipientAccountId,
        JSONExtractString(CloudTrailEvent, 'aws_account') AS account,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'type') AS userIdentity_type,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'principalId') AS userIdentity_principalId,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'arn') AS userIdentity_arn,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'accountId') AS userIdentity_accountId,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'accessKeyId') AS userIdentity_accessKeyId,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'sessionIssuer', 'type') AS sessionIssuer_type,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'sessionIssuer', 'principalId') AS sessionIssuer_principalId,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'sessionIssuer', 'arn') AS sessionIssuer_arn,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'sessionIssuer', 'accountId') AS sessionIssuer_accountId,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'sessionIssuer', 'userName') AS sessionIssuer_userName,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'webIdFederationData', 'federatedProvider') AS federatedProvider,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'attributes', 'creationDate') AS attributes_creationDate,
        -- CloudTrail records mfaAuthenticated as the string "true"/"false", so compare it instead of using JSONExtractBool
        (JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'attributes', 'mfaAuthenticated') = 'true') AS attributes_mfaAuthenticated,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'commandId') AS requestParameters_commandId,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'instanceId') AS requestParameters_instanceId,
        JSONExtractString(CloudTrailEvent, 'tlsDetails', 'tlsVersion') AS tlsDetails_tlsVersion,
        JSONExtractString(CloudTrailEvent, 'tlsDetails', 'cipherSuite') AS tlsDetails_cipherSuite,
        JSONExtractString(CloudTrailEvent, 'tlsDetails', 'clientProvidedHostHeader') AS tlsDetails_clientProvidedHostHeader,
        -- objects are kept as raw JSON text; JSONExtractString returns '' for non-string values
        JSONExtractRaw(CloudTrailEvent, 'responseElements') AS responseElements,
        JSONExtractRaw(CloudTrailEvent, 'userIdentity', 'sessionContext', 'webIdFederationData', 'attributes') AS webIdFederationData_attributes,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'sessionContext', 'attributes', 'mfaAuthenticated') AS attributes_mfaAuthenticated_string,
        JSONExtractInt(CloudTrailEvent, 'requestParameters', 'durationSeconds') AS requestParameters_durationSeconds,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'externalId') AS requestParameters_externalId,
        JSONExtractString(CloudTrailEvent, 'responseElements', 'credentials', 'accessKeyId') AS responseElements_credentials_accessKeyId,
        JSONExtractString(CloudTrailEvent, 'responseElements', 'credentials', 'sessionToken') AS responseElements_credentials_sessionToken,
        JSONExtractString(CloudTrailEvent, 'responseElements', 'credentials', 'expiration') AS responseElements_credentials_expiration,
        JSONExtractString(CloudTrailEvent, 'responseElements', 'assumedRoleUser', 'assumedRoleId') AS responseElements_assumedRoleUser_assumedRoleId,
        JSONExtractString(CloudTrailEvent, 'responseElements', 'assumedRoleUser', 'arn') AS responseElements_assumedRoleUser_arn,
        JSONExtractRaw(CloudTrailEvent, 'resources') AS resources,
        JSONExtractRaw(CloudTrailEvent, 'userIdentity', 'sessionContext') AS userIdentity_sessionContext,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'agentVersion') AS requestParameters_agentVersion,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'agentStatus') AS requestParameters_agentStatus,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'platformType') AS requestParameters_platformType,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'platformName') AS requestParameters_platformName,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'platformVersion') AS requestParameters_platformVersion,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'iPAddress') AS requestParameters_iPAddress,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'computerName') AS requestParameters_computerName,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'agentName') AS requestParameters_agentName,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'availabilityZone') AS requestParameters_availabilityZone,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'availabilityZoneId') AS requestParameters_availabilityZoneId,
        JSONExtractRaw(CloudTrailEvent, 'tlsDetails') AS tlsDetails,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'bucketName') AS requestParameters_bucketName,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'Host') AS requestParameters_Host,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'acl') AS requestParameters_acl,
        JSONExtractRaw(CloudTrailEvent, 'additionalEventData') AS additionalEventData,
        JSONExtractString(CloudTrailEvent, 'userIdentity', 'invokedBy') AS userIdentity_invokedBy,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'encryptionAlgorithm') AS requestParameters_encryptionAlgorithm,
        JSONExtractRaw(CloudTrailEvent, 'requestParameters', 'encryptionContext') AS requestParameters_encryptionContext,
        -- first element of the resources array (index 1)
        JSONExtractString(CloudTrailEvent, 'resources', 1, 'ARN') AS resources_ARN,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'roleArn') AS requestParameters_roleArn,
        JSONExtractString(CloudTrailEvent, 'requestParameters', 'roleSessionName') AS requestParameters_roleSessionName,
        JSONExtractString(CloudTrailEvent, 'sharedEventID') AS sharedEventID,
        JSONExtractString(CloudTrailEvent, 'resources', 1, 'accountId') AS resources_accountId,
        JSONExtractString(CloudTrailEvent, 'resources', 1, 'type') AS resources_type,
        JSONExtractString(CloudTrailEvent, 'eventCategory') AS eventCategory,
        JSONExtractRaw(CloudTrailEvent, 'requestParameters') AS requestParameters,
        JSONExtractRaw(CloudTrailEvent, 'userIdentity') AS userIdentity
    FROM default.management_events
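When adapting this view, note that ClickHouse’s JSONExtract* functions do not interpret dotted paths such as 'userIdentity.type': each nesting level is a separate argument, strings select object keys, and positive integers select array elements counting from 1. JSONExtractString also returns an empty string when the value is missing or is not a string. The Python function below is a hypothetical local model of those rules, handy for checking a column mapping against sample events before creating the view; it is not a ClickHouse API and does not cover every case ClickHouse supports.

```python
import json


def json_extract_string(doc: str, *path):
    """Local model of ClickHouse JSONExtractString(doc, key_or_index, ...).

    Strings select object keys; positive ints select array elements, 1-based.
    Negative indices and integer access to object keys (both supported by
    ClickHouse) are not modeled. Returns "" if the path is missing or the
    final value is not a string.
    """
    node = json.loads(doc)
    for step in path:
        if isinstance(step, str) and isinstance(node, dict) and step in node:
            node = node[step]
        elif (isinstance(step, int) and not isinstance(step, bool)
              and isinstance(node, list) and 1 <= step <= len(node)):
            node = node[step - 1]
        else:
            return ""
    return node if isinstance(node, str) else ""


event = '{"userIdentity": {"type": "IAMUser"}, "resources": [{"ARN": "arn:aws:s3:::example-bucket"}]}'

print(json_extract_string(event, "userIdentity", "type"))     # IAMUser
print(repr(json_extract_string(event, "userIdentity.type")))  # ''
print(json_extract_string(event, "resources", 1, "ARN"))      # arn:aws:s3:::example-bucket
```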

After this transformation the data is far easier to work with: JSON objects buried in a single column become typed columns that can be searched, filtered, aggregated, and charted directly, turning a complex dataset into a practical resource for analysis and decision-making. Note that a materialized view only processes rows inserted after it is created, so events that were already loaded into default.management_events need a separate backfill.
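Once events are flat rows, the questions from the introduction (who removed that IAM role, when, and from where) become simple filters. The Python sketch below applies such a filter to a few made-up rows that use column names from the view above; the data and the fixed reference date are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

# Made-up rows shaped like the view's columns (only a few columns shown).
rows = [
    {"eventTime": datetime(2024, 1, 10, tzinfo=timezone.utc), "eventSource": "iam.amazonaws.com",
     "eventName": "DeleteRole", "userIdentity_arn": "arn:aws:iam::123456789012:user/alice",
     "sourceIPAddress": "203.0.113.7"},
    {"eventTime": datetime(2024, 1, 11, tzinfo=timezone.utc), "eventSource": "ec2.amazonaws.com",
     "eventName": "RunInstances", "userIdentity_arn": "arn:aws:iam::123456789012:user/bob",
     "sourceIPAddress": "198.51.100.4"},
]


def find_events(rows, source, name, since):
    """Rows matching eventSource/eventName at or after `since`, newest first."""
    hits = [r for r in rows
            if r["eventSource"] == source and r["eventName"] == name and r["eventTime"] >= since]
    return sorted(hits, key=lambda r: r["eventTime"], reverse=True)


# Six-month look-back from a fixed, illustrative "today".
since = datetime(2024, 3, 1, tzinfo=timezone.utc) - timedelta(days=180)
for r in find_events(rows, "iam.amazonaws.com", "DeleteRole", since):
    print(r["eventTime"].isoformat(), r["userIdentity_arn"], r["sourceIPAddress"])
```

In ClickHouse the same question is a WHERE clause on eventSource and eventName plus eventTime >= now() - INTERVAL 180 DAY, run against the view.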

Step 3 (continued): Exploring advanced visualization techniques

There are two main options for building visualizations of the parsed data.

The first is to deploy Grafana, a widely used dashboarding tool. Its ClickHouse data source plugin lets Grafana query ClickHouse-stored data directly, so its full range of visualizations is available.

The second is DoubleCloud’s native Visualization service, which offers a simple approach. The process starts with creating a workbook, which holds the visualizations, and then setting up a direct connection to the ClickHouse cluster. Data flows through this connection into the Visualization service, where raw numbers and logs become informative charts.

Both options have advantages, and the choice depends on the team’s needs and preferences: Grafana offers extensive customization, while DoubleCloud’s service integrates directly with the managed ClickHouse cluster. Either way, these tools surface trends, patterns, and insights that were previously obscured in raw data.

    resource "doublecloud_workbook" "aws_cloudtrail_visualisation" {
      project_id = var.project_id
      title      = "AWS CloudTrail Dashboards"

      connect {
        name = "main"
        config = jsonencode({
          kind          = "clickhouse"
          cache_ttl_sec = 600
          host          = data.doublecloud_clickhouse.dwh.connection_info.host
          port          = 8443
          username      = data.doublecloud_clickhouse.dwh.connection_info.user
          secure        = true
          raw_sql_level = "off"
        })
        secret = data.doublecloud_clickhouse.dwh.connection_info.password
      }
    }
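The workbook connection points at port 8443 with secure = true, which is ClickHouse’s conventional port for its HTTP interface over TLS. The same endpoint can be queried directly, for example from a script that exports chart data. The sketch below only builds such a request with Python’s standard library; the host, user, and password are hypothetical placeholders. It sends the SQL in the request body, passes credentials in the X-ClickHouse-User and X-ClickHouse-Key headers, and supplies a {name:Type} query parameter through a param_<name> URL argument, all of which ClickHouse’s HTTP interface supports.

```python
import urllib.parse
import urllib.request


def build_query_request(host, user, password, sql, params=None, port=8443):
    """Build (but do not send) a POST request to ClickHouse's HTTPS interface.

    Query parameters written as {name:Type} in the SQL are passed as
    param_<name> URL arguments.
    """
    query_string = urllib.parse.urlencode(
        {f"param_{key}": value for key, value in (params or {}).items()})
    url = f"https://{host}:{port}/" + (f"?{query_string}" if query_string else "")
    return urllib.request.Request(
        url,
        data=sql.encode("utf-8"),
        headers={"X-ClickHouse-User": user, "X-ClickHouse-Key": password},
        method="POST",
    )


req = build_query_request(
    host="rw.example-cluster.invalid",  # hypothetical host
    user="admin",                       # hypothetical user
    password="change-me",               # placeholder; load the real one from a secret store
    sql=("SELECT eventName, count() AS n "
         "FROM default.parsed_data_materialized_view "
         "WHERE eventName = {event_name:String} "
         "GROUP BY eventName FORMAT JSONEachRow"),
    params={"event_name": "DeleteRole"},
)
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req, timeout=30)
```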

The final part of Step 3 is working with the data interactively, starting with a first chart in the Visualization service. Chart creation can also be automated with Terraform, which makes visualizations repeatable and easier to deploy.

Whether charts are built in the visualization interface or with Terraform, the goal is the same: turn insights into visual representations. The result is an intuitive dashboard where every chart and graph comes together into a coherent, comprehensive view of the data.

The dashboard is more than a visual summary. It is a tool for extracting actionable insights and for spotting trends and patterns that would otherwise be lost in raw data, and this is where the value of the whole effort becomes evident.
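Most dashboard panels boil down to an aggregation over the parsed columns, for example events per day per eventName. The Python sketch below computes that shape of result from a few made-up rows to show what such a chart plots; the rows are illustrative, and the equivalent ClickHouse query is given in the comment.

```python
from collections import Counter
from datetime import datetime

# Made-up parsed rows; only the two columns the chart needs.
rows = [
    {"eventTime": datetime(2024, 1, 10, 9, 0), "eventName": "ConsoleLogin"},
    {"eventTime": datetime(2024, 1, 10, 17, 30), "eventName": "ConsoleLogin"},
    {"eventTime": datetime(2024, 1, 10, 18, 0), "eventName": "DeleteRole"},
    {"eventTime": datetime(2024, 1, 11, 8, 15), "eventName": "ConsoleLogin"},
]

# Equivalent of:
#   SELECT toDate(eventTime) AS day, eventName, count() AS n
#   FROM default.parsed_data_materialized_view GROUP BY day, eventName
daily = Counter((r["eventTime"].date(), r["eventName"]) for r in rows)

for (day, name), n in sorted(daily.items()):
    print(day, name, n)
```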

(Note: For privacy and security reasons, sensitive data and specific details have been omitted from the examples provided.)

Conclusion

Log management is changing, driven by the sheer volume of security logs that organizations now produce. The move toward managed solutions reflects a demand for systems that both cut costs and handle the complexity of large-scale data analysis well.

Managed services built on technologies like ClickHouse let organizations store and analyze large log volumes efficiently, improving both query speed and analysis capabilities.

Open-source managed services have already made significant progress in this area, and adding technologies such as Kafka could refine these solutions further. As the field evolves, AI and cloud-based analytics are likely to open up new approaches to log management.
