Apache Kafka vs Spark: Real-time data streaming showdown

Big data is the new oil that’s driving the Fourth Industrial Revolution. But we need infrastructure to refine and use this oil effectively. The big data market was valued at $70 billion in 2022 and is projected to reach $103 billion by 2027, growing at a compound annual growth rate (CAGR) of 8.03%.

Two of the most widely used open-source tools for big data processing are Apache Kafka and Apache Spark. Both are Apache Software Foundation projects that offer robust data processing capabilities across many industries.

However, choosing between these technologies can be challenging, especially for companies that have budget constraints and are still determining their needs. To make a well-informed decision, it’s essential to explore their unique features, strengths, and the specific use cases they are best suited for.

Apache Kafka

Apache Kafka is a distributed streaming platform designed to handle real-time data feeds. According to the Kafka website, 80% of Fortune 100 companies use it for event streaming. At its core, Kafka is a distributed messaging system that processes streams of records in real time and serves as a message broker built on the publish-subscribe model.

Consider an e-commerce store. If the company wants to keep track of page views and the items customers add to their carts, Apache Kafka’s distributed streaming platform can capture these events as they happen.

Moreover, organizations such as hospitals can use Kafka’s distributed messaging system to collect, process, and distribute data to different systems for real-time processing.

To understand Kafka’s publish-subscribe model, consider news media. News producers generate data and publish it to various topics, and consumers read the information from these topics. Kafka stores these real-time data streams securely and in a fault-tolerant way, while letting consumers process the data as it flows in.
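To make the publish-subscribe flow concrete, here is a minimal in-memory sketch in plain Python. It is not Kafka's client API: the `Topic` class, its `publish`/`poll` methods, the topic name `sports`, and the consumer names are all hypothetical. It models two ideas from the paragraph above: producers append records to a topic's log, and each consumer tracks its own read position (offset), so several consumers can read the same stream independently without removing anything from it.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical stand-in for a Kafka topic: an append-only log of records."""
    name: str
    log: list = field(default_factory=list)
    # Next offset each consumer will read, keyed by consumer name.
    offsets: dict = field(default_factory=dict)

    def publish(self, record: str) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.log.append(record)
        return len(self.log) - 1

    def poll(self, consumer: str, max_records: int = 10) -> list:
        """Return records this consumer has not read yet and advance its offset."""
        start = self.offsets.get(consumer, 0)
        batch = self.log[start:start + max_records]
        self.offsets[consumer] = start + len(batch)
        return batch


sports = Topic("sports")
sports.publish("Match report: 2-1")
sports.publish("Transfer news")

# Two independent consumers read the same records; reading does not delete them.
print(sports.poll("web-frontend"))   # ['Match report: 2-1', 'Transfer news']
print(sports.poll("mobile-app", 1))  # ['Match report: 2-1']
print(sports.poll("mobile-app"))     # ['Transfer news']
```

Keeping the offset on the consumer side, rather than deleting records once read, is what lets a new subscriber replay the same stream later.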

Apache Spark

Apache Spark is an open-source, distributed computing system for big data processing and analytics. It helps companies analyze big data in real time. Companies use Spark to run machine learning models for predictive intelligence and customer segmentation, as well as for network security.

Spark provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. Implicit data parallelism means Spark automatically splits the data into portions (partitions) and processes them simultaneously across multiple processors, without the programmer writing explicit parallel code. Fault tolerance is the system’s ability to keep processing data correctly even if some components fail.
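Spark provides implicit data parallelism and fault tolerance automatically; to make the two ideas concrete, this plain-Python sketch imitates them by hand. It splits a list into partitions, runs one task per partition on a thread pool, and, when a task fails, re-runs it on the same unchanged input partition, which is roughly how Spark recomputes a lost partition from its lineage. `parallel_map` and its `fail_first` hook (used only to simulate a crashed worker) are hypothetical, and Python threads illustrate task scheduling here rather than true multi-core speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into contiguous chunks, like a dataset split into partitions."""
    size = max(1, -(-len(data) // num_partitions))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_map(data, fn, num_partitions=4, max_attempts=3, fail_first=()):
    """Apply fn to every element with one task per partition, re-running failed tasks.

    fail_first lists partition indexes whose first attempt raises, to simulate
    a worker crash (a hypothetical hook for this demo only).
    """
    partitions = split_into_partitions(data, num_partitions)
    attempts = [0] * len(partitions)

    def task(index):
        attempts[index] += 1
        if index in fail_first and attempts[index] == 1:
            raise RuntimeError(f"worker for partition {index} crashed")
        return [fn(x) for x in partitions[index]]

    results = {}
    with ThreadPoolExecutor(max_workers=max(1, len(partitions))) as pool:
        for _ in range(max_attempts):
            pending = [i for i in range(len(partitions)) if i not in results]
            if not pending:
                break
            futures = {i: pool.submit(task, i) for i in pending}
            for i, future in futures.items():
                try:
                    results[i] = future.result()
                except RuntimeError:
                    pass  # the input partition is unchanged, so the task can simply be re-run
    if len(results) != len(partitions):
        raise RuntimeError("some partitions failed on every attempt")
    # Reassemble the output in the original partition order.
    return [y for i in range(len(partitions)) for y in results[i]]


squares = parallel_map(list(range(10)), lambda x: x * x, fail_first={1})
print(squares)  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

Because each partition's input never changes, a failed task can be recomputed in isolation without restarting the whole job.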

Unlike disk-based data processing systems, Spark has an in-memory data processing engine, which lets it perform many tasks much more quickly. Its micro-batch processing approach and Structured Streaming API enable it to handle both batch inputs and real-time data sources efficiently.
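The micro-batch idea can be illustrated with a small plain-Python sketch (not Spark's API): incoming events are grouped into fixed time intervals, each group is processed as a small batch, and a running aggregate is carried from one batch to the next. The `micro_batches` helper, the made-up click-stream events, and the two-second interval are assumptions for illustration; real Structured Streaming adds triggers, checkpointing, and late-data handling on top of this basic pattern.

```python
from collections import Counter
from itertools import groupby


def micro_batches(events, interval):
    """Group (arrival_time, value) events into batches covering `interval` seconds each.

    Intervals that receive no events simply produce no batch in this sketch.
    """
    ordered = sorted(events, key=lambda event: event[0])
    for batch_id, group in groupby(ordered, key=lambda event: int(event[0] // interval)):
        yield batch_id, [value for _, value in group]


# Made-up click stream: (arrival time in seconds, page viewed).
events = [(0.4, "home"), (1.1, "cart"), (2.3, "home"), (2.9, "checkout"), (5.0, "home")]

# State carried from one batch to the next, like a running streaming aggregation.
running_totals = Counter()
for batch_id, pages in micro_batches(events, interval=2):
    running_totals.update(pages)
    print(f"batch {batch_id}: {pages} -> totals {dict(running_totals)}")
```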

Apache Spark VS Apache Kafka

Apache Spark and Apache Kafka are critical components in the big data and stream processing domain, but they serve distinct roles. Before comparing Apache Spark and Apache Kafka, it is essential to note that they are not substitutes for each other.

Businesses can use both technologies together: one collects real-time data, and the other processes it. The critical differences between the two across various parameters are as follows:

Use cases

Kafka is used in industries such as finance and e-commerce, among many others, because it allows complex event processing.

Financial sector

In the financial industry, Kafka ingests real-time stock data, processes payments, detects fraudulent transactions, and more. According to the Kafka website, 7 out of 10 banks in the Fortune 100 use Kafka. These use cases show why Kafka is well suited to managing streaming data and transaction data.

In the financial sector, Spark is used for real-time analytics that can help detect anomalous activities. According to the official Spark website, 80% of Fortune 500 companies use Apache Spark.

E-commerce

E-commerce platforms are often built as microservices: small, independent services that each handle one part of the platform. For example, user login is one microservice, and adding items to a cart is another.

For orders to be executed smoothly, an e-commerce platform needs a system that enables communication between microservices, and Apache Kafka fills this role. Kafka is used for inventory management and order processing, and e-commerce platforms such as Shopify and BigCommerce use it to manage operations.

Other well-known companies that use Apache Kafka include Netflix and Airbnb.

In e-commerce, Spark is used for personalized recommendations based on data such as past purchases and items in the cart.

Popularity

In the first half of 2023 (through June), over 24,000 companies started using Apache Kafka, and over 14,000 started using Apache Spark. By monthly search volume, Apache Spark, with 14,800 searches, is more popular than Apache Kafka, with 12,100 searches.

On GitHub, Apache Spark has 1,947 contributors, while Apache Kafka has 1,019. However, since the number of businesses using a technology is the more central measure of popularity, Apache Kafka comes out ahead of Apache Spark.

Technology

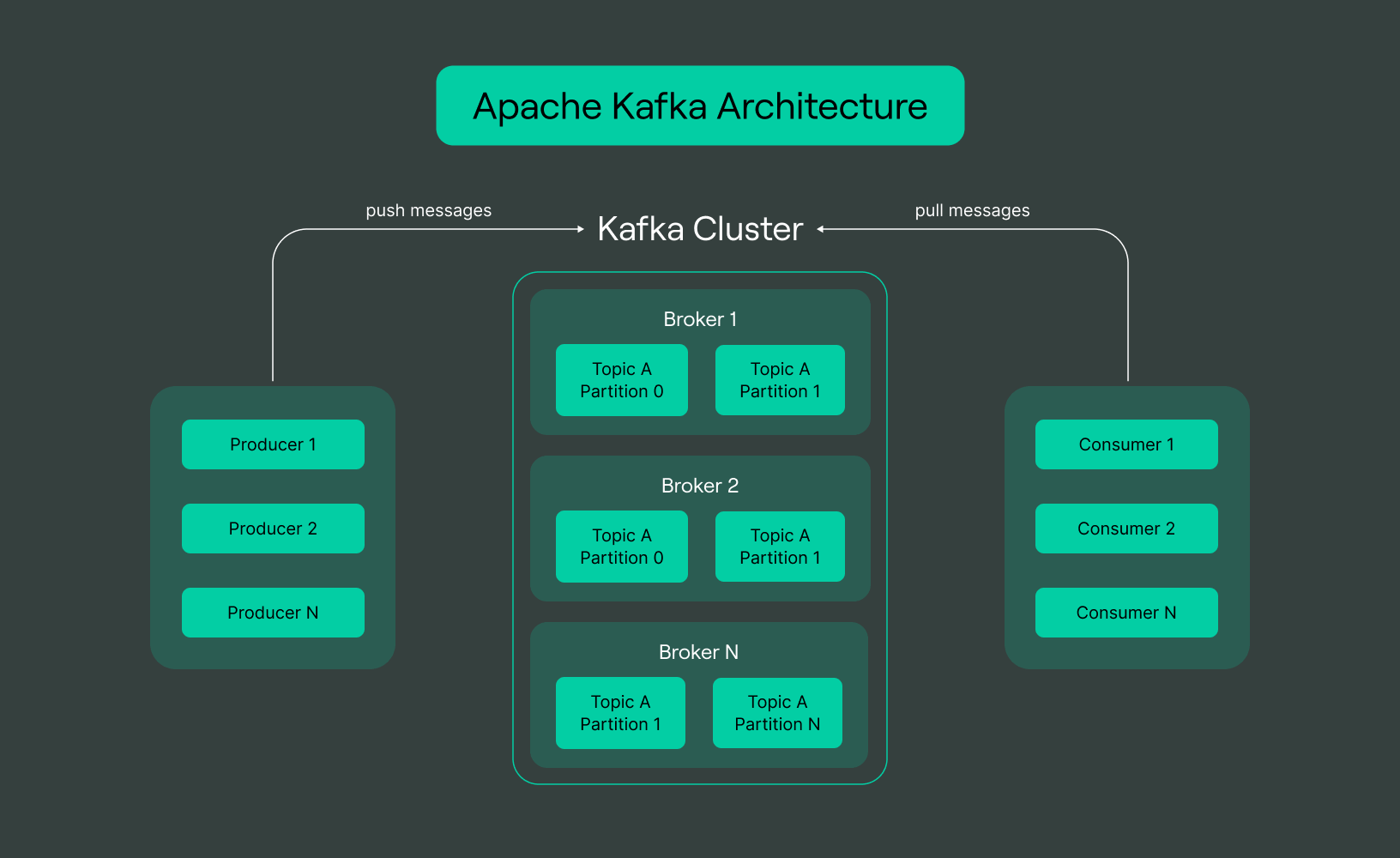

Apache Spark and Apache Kafka are both powerful technologies used in the world of big data processing. Let’s explore the technological features of Kafka:

- Kafka uses a topic-based publish-subscribe model to handle streaming data.

Visual representation of this process is as follows:

[Figure: The Many Use Cases Of Apache Kafka®: When To Use & Not Use It]

- It stores data streams across a distributed cluster and operates in a fault-tolerant manner.
- Kafka ensures data durability by replicating data across multiple nodes.
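The replication idea in the last point can be illustrated with a toy model in plain Python (not Kafka code). The `Broker` and `ReplicatedPartition` classes are hypothetical, and the rule that a write needs a minimum number of live replicas only loosely mirrors Kafka's `acks=all` producer setting combined with the `min.insync.replicas` setting. Real Kafka routes writes through a partition leader that followers copy from; this sketch writes to all live replicas at once to stay short.

```python
class Broker:
    """Hypothetical broker holding one copy (replica) of a partition's log."""

    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.alive = True
        self.log = []


class ReplicatedPartition:
    """Toy model of one partition copied to `replication_factor` brokers.

    A write is acknowledged only if at least `min_in_sync` live replicas store it.
    """

    def __init__(self, brokers, replication_factor=3, min_in_sync=2):
        self.replicas = brokers[:replication_factor]
        self.min_in_sync = min_in_sync

    def write(self, record):
        live = [b for b in self.replicas if b.alive]
        if len(live) < self.min_in_sync:
            return False  # refuse the write rather than risk losing it
        for broker in live:
            broker.log.append(record)
        return True

    def read_all(self):
        """Read from the first live replica (a stand-in for leader failover)."""
        for broker in self.replicas:
            if broker.alive:
                return list(broker.log)
        raise RuntimeError("no live replica")


brokers = [Broker(i) for i in range(3)]
partition = ReplicatedPartition(brokers)
partition.write("order-1")
brokers[0].alive = False           # the first broker fails
print(partition.write("order-2"))  # True: two replicas are still available
print(partition.read_all())        # ['order-1', 'order-2'], served by broker 1
brokers[1].alive = False
print(partition.write("order-3"))  # False: only one replica left
```

The trade-off shown here is deliberate: refusing writes when too few replicas are available sacrifices some availability in exchange for not losing acknowledged data.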

Now let’s discuss the technological features of Apache Spark:

- One of Spark’s core technical features is applying machine learning to big data. Spark’s MLlib machine learning library is used for scalable machine learning.
- A key to Spark’s speed is the Resilient Distributed Dataset (RDD). An RDD acts as a container that splits data into partitions and distributes them across the machines in a cluster, which allows the partitions to be processed in parallel.
- To process live data and perform analytics on it, Spark uses Spark Streaming, which processes the stream in small incremental batches (micro-batches) to speed up streaming.

Performance

The key performance indicators for both Apache Spark and Apache Kafka are speed, fault tolerance, and scalability.

Kafka is built to handle a very large number of messages per second, which makes it well suited to ingesting real-time data quickly. For example, PayPal handles 400 billion messages a day with Kafka. Kafka delivers messages with millisecond-level latency, which makes it suitable for real-time applications that require minimal delay.
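For a sense of scale, spreading the PayPal figure evenly over a day gives the average rate below. It is only an average; real traffic is uneven, so peak rates are higher.

$$
\frac{400 \times 10^{9}\ \text{messages/day}}{24 \times 3600\ \text{s/day}} = \frac{400 \times 10^{9}}{86\,400}\ \text{messages/s} \approx 4.63 \times 10^{6}\ \text{messages/s}
$$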

In Spark, scheduling tasks on worker nodes is the key performance factor. Each machine learning process consists of a series of transformations that Spark runs as tasks on those nodes. Spark also facilitates graph processing with GraphX.

For example, in a recommendation engine, nodes represent products and users. GraphX finds the relationships between nodes based on their interactions and then recommends the products each user is most likely to be interested in.
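The recommendation idea can be sketched in plain Python without GraphX: users and products form a bipartite graph, and a product is scored by how many two-hop paths connect the user to it through other users who share a purchase. This is a simple neighborhood-based heuristic chosen for illustration, not GraphX's API or a specific algorithm that ships with GraphX; the function names and interaction data are made up.

```python
from collections import Counter, defaultdict


def build_graph(interactions):
    """Bipartite graph as two adjacency maps: user -> products, product -> users."""
    user_items, item_users = defaultdict(set), defaultdict(set)
    for user, product in interactions:
        user_items[user].add(product)
        item_users[product].add(user)
    return user_items, item_users


def recommend(user, user_items, item_users, top_n=3):
    """Score products two hops away (user -> product -> other user -> product)."""
    owned = user_items[user]
    scores = Counter()
    for product in owned:
        for neighbor in item_users[product] - {user}:
            for candidate in user_items[neighbor] - owned:
                scores[candidate] += 1  # one more path leading to this product
    # Sort by score, then by name, so ties come out in a stable order.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [product for product, _ in ranked[:top_n]]


interactions = [
    ("ana", "laptop"), ("ana", "mouse"),
    ("ben", "laptop"), ("ben", "mouse"), ("ben", "keyboard"),
    ("cai", "laptop"), ("cai", "monitor"),
]
user_items, item_users = build_graph(interactions)
print(recommend("ana", user_items, item_users))  # ['keyboard', 'monitor']
```

Here "keyboard" ranks first for ana because two paths reach it (via the shared laptop and the shared mouse), while "monitor" is reachable only through the laptop.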

Scalability

With high-volume, high-velocity real-time data, scalability becomes essential. Kafka achieves scalability by distributing the load across multiple brokers in a cluster, with each broker responsible for a particular portion of the data. Kafka further divides each topic’s incoming data into partitions, which are spread across the brokers. This distributed architecture allows faster processing of incoming data while keeping data loss to a minimum.
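To show how keyed records are spread across partitions, here is a minimal Python sketch. `choose_partition` and `distribute` are hypothetical helpers. Kafka's default partitioner hashes a record's key with murmur2 and takes the result modulo the number of partitions; `zlib.crc32` stands in for murmur2 here, so the partition numbers will not match a real cluster. What carries over is the property that matters: records with the same key always land in the same partition, which preserves their relative order, while different keys spread the load.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index.

    crc32 is a stand-in deterministic hash; Kafka itself uses murmur2.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def distribute(records, num_partitions):
    """Append each (key, value) record to the log of the partition its key maps to."""
    partitions = [[] for _ in range(num_partitions)]
    for key, value in records:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return partitions


orders = [("customer-1", "add laptop"), ("customer-2", "add mouse"),
          ("customer-1", "checkout"), ("customer-3", "add keyboard")]
for index, log in enumerate(distribute(orders, num_partitions=3)):
    print(index, log)
```

Because ordering is only guaranteed within a partition, choosing a key such as a customer ID is what keeps one customer's events in sequence.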

Instead of relying on a single computer to transform data in Spark, we distribute the data processing tasks to multiple computers that form the cluster. Each computer in a cluster is called a worker or node. Following this distributed computing model, Spark can quickly apply data transformation on large volumes of data.

Pricing

On Amazon MSK (Managed Streaming for Apache Kafka), a kafka.m5.large broker instance, which provides two vCPUs and 8 GiB of memory, costs $0.21 per hour. As the requirements for vCPUs and memory increase, the price scales accordingly. For example, a broker instance with 96 vCPUs and 384 GiB of memory would cost approximately $10.08 per hour, assuming the price doubles with each doubling of vCPU and memory resources.

Apache Spark’s pricing structure combines software and EC2 instance costs. For example, the t2.small EC2 instance has a software cost of $0.05 per hour and an EC2 cost of $0.023 per hour, totaling $0.073 per hour. Similarly, the t2.2xlarge instance with software costs of $0.05 per hour and EC2 costs of $0.371 per hour totals $0.421 per hour.
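The arithmetic behind both price examples can be checked with a few lines of Python. The function and constant names are hypothetical, the prices are the ones quoted above (actual AWS prices vary by region and change over time), and `msk_broker_price` encodes the same linear-scaling assumption as the text: 96 vCPUs is 48 times the 2-vCPU instance, so $0.21 × 48 = $10.08 per hour.

```python
# Hourly prices quoted in the text (USD); treat them as example figures only.
MSK_M5_LARGE_PER_HOUR = 0.21  # kafka.m5.large: 2 vCPUs, 8 GiB
MSK_M5_LARGE_VCPUS = 2


def msk_broker_price(vcpus: int) -> float:
    """Estimate a broker's hourly price, assuming price scales linearly with vCPUs."""
    return MSK_M5_LARGE_PER_HOUR * vcpus / MSK_M5_LARGE_VCPUS


def spark_instance_price(software_per_hour: float, ec2_per_hour: float) -> float:
    """Hourly Spark cost as described above: software fee plus EC2 instance fee."""
    return software_per_hour + ec2_per_hour


print(f"{msk_broker_price(96):.2f}")               # 10.08
print(f"{spark_instance_price(0.05, 0.023):.3f}")  # 0.073 (t2.small)
print(f"{spark_instance_price(0.05, 0.371):.3f}")  # 0.421 (t2.2xlarge)
```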

Features

Let’s discuss the features of Apache Kafka first:

- The distributed messaging system is the most prominent feature of Apache Kafka. This is a centralized system where producers send messages to topics, and consumers can read those messages.

For example, on Twitter, a person can post a tweet with a hashtag. This tweet will reach the consumers who subscribed to that hashtag.

- Another essential feature of Apache Kafka is real-time streaming, which enables Kafka to process data with low latency. Gaming is a prime example of why real-time streaming matters.

Many things happen simultaneously in multiplayer games, such as player movements or interactions. These actions must be communicated to all players quickly. Kafka shines in such scenarios.

The Spark features are as follows:

- Spark performs in-memory computation, which allows it to run faster than traditional systems like MapReduce.
- One key differentiating feature of Spark is its ability to connect with multiple data sources, such as Hadoop’s HDFS, Apache Hive, and Cassandra.

Ease of use

Kafka provides APIs and client libraries in multiple programming languages, such as Java and Python. In Kafka, data producers send data to topics, and data consumers retrieve this data from the topics. This decoupled approach allows for scalable solutions. However, setting up Kafka clusters can be challenging for beginners.

Tuning Kafka for optimal performance also requires technical expertise. And although Kafka supports basic stream processing, it is typically paired with Spark for more complex tasks.

Spark’s built-in APIs for Java, Scala, and Python, along with Spark SQL, make it accessible to many users. Its simple building blocks make it easy to write user-defined functions, and its interactive mode gives immediate feedback. Extensive online resources for both technologies also make them easier to use.

Support and services

Apache Software Foundation has provided detailed documentation for Apache Spark and Apache Kafka. Users can read about concepts and get a practical head start. Moreover, various platforms provide support for setting up these technologies.

For example, DoubleCloud offers Kafka as a fully managed service. Both projects also have active contributors who provide continuous improvements.

Community

Apache Kafka and Apache Spark have rich communities that connect through multiple channels, including JIRA, an issue-tracking system developed by Atlassian that both projects use. Developers and programmers can interact through these communities to improve the platforms and the open-source projects.

Both Kafka and Spark also have mailing lists, where users can ask questions, report issues, and contribute to the project, as well as GitHub repositories. Lastly, both have subreddit communities (r/apachespark and r/apachekafka) where developers and users engage with each other.

Integration

Kafka and Spark offer various integrations through modules and frameworks. Details of integration are as follows:

-

Apache Spark provides native integration with Apache Kafka through its Spark Streaming module. It allows Spark Streaming applications to consume data directly from Kafka topics.

-

Spark provides various integration to read and write data from multiple sources, such as dataframe, HDFS, and Cassandra.

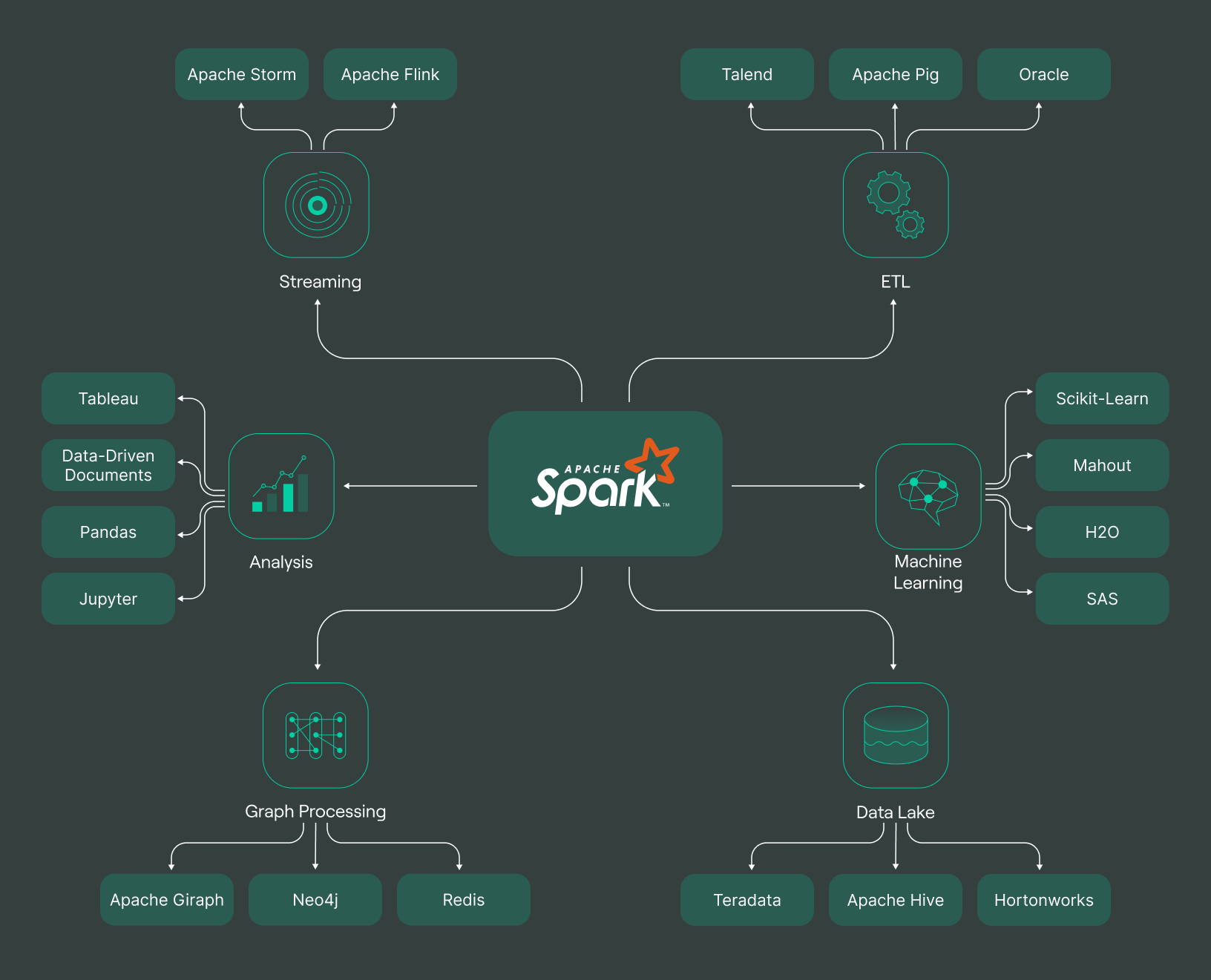

Spark allows multi-faceted integration, as shown in the following image:

-

Kafka can integrate with tools like Apache Flink to directly access Kafka data.

-

Kafka has a JDBC connector that allows users to connect with relational databases such as PostgreSQL.

-

HDFS Connector is used to get access to Hadoop HDFS.

-

Kafka Streams API allows you to create customized business apps

Security

Both Apache Kafka and Apache Spark provide several security features.

Kafka supports authentication via the Simple Authentication and Security Layer (SASL), which can be backed by mechanisms such as PLAIN, SCRAM-SHA-256, and SCRAM-SHA-512. For authorization, it provides Access Control Lists (ACLs) that set user-level permissions for reading, writing, and managing topics or consumer groups.
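The authorization rule described above can be sketched as a simplified ACL check in plain Python. `AclRule` and `is_authorized` are hypothetical names, not Kafka's authorizer API. The sketch keeps two behaviors of Kafka ACLs, namely that an explicit Deny overrides an Allow and that a request with no matching Allow is refused, but it ignores wildcards, prefixed resource patterns, host restrictions, and super users.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    principal: str   # e.g. "User:alice"
    operation: str   # e.g. "Read", "Write", "Describe"
    resource: str    # e.g. "Topic:orders"
    permission: str  # "Allow" or "Deny"


def is_authorized(acls, principal, operation, resource):
    """Simplified ACL check: a matching Deny wins; otherwise a matching Allow is required."""
    matching = [a for a in acls
                if a.principal == principal and a.operation == operation
                and a.resource == resource]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)


acls = [
    AclRule("User:alice", "Write", "Topic:orders", "Allow"),
    AclRule("User:bob", "Read", "Topic:orders", "Allow"),
    AclRule("User:bob", "Write", "Topic:orders", "Deny"),
]
print(is_authorized(acls, "User:alice", "Write", "Topic:orders"))  # True
print(is_authorized(acls, "User:bob", "Write", "Topic:orders"))    # False: explicit Deny
print(is_authorized(acls, "User:alice", "Read", "Topic:orders"))   # False: no matching rule
```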

Spark also offers several security features. First, it supports Kerberos authentication, along with shared-secret authentication for its client-server connections. For encryption, Spark supports SSL. Its web UIs have multiple levels of protection, including authentication filters and filters on HTTP methods.

Monitoring

Kafka offers several tools for monitoring and managing clusters. It reports metrics through Java Management Extensions (JMX). Metrics are organized in a hierarchy of MBeans and can be viewed with JMX client tools such as JConsole.

Moreover, Kowl is an open-source Kafka management interface that offers a user-friendly approach to managing Kafka topics and consumers. Grafana can visualize Kafka metrics.

Furthermore, the Kafka Connect API enables monitoring of the health and performance of its components.

Spark provides several utilities for monitoring and managing applications. Each SparkContext launches a web UI, by default on port 4040, that displays useful information about the application. Spark uses the Dropwizard/Codahale metrics library for detailed metrics.

These metrics can be reported via JMX, Console output, CSV files, and other pluggable reporters. It also provides a REST API for managing Spark jobs. Driver and executor logs can help troubleshoot application issues and are accessible through the Spark web UI.

Deployment

Kafka operates as a distributed system typically deployed across one or more servers, each referred to as a Kafka broker. These brokers manage and store data while controlling the data flows between producers and consumers.

Historically, deploying Kafka required ZooKeeper, a service used to maintain and coordinate the Kafka brokers. Newer versions remove this dependency by running in KRaft mode, in which Kafka manages its own metadata.

Spark can be deployed in several ways: in standalone mode, on a cloud, or with cluster managers like Hadoop YARN or Apache Mesos. In a standalone deployment, Spark’s built-in cluster manager allocates resources. Managed services like AWS EMR, Google Cloud Dataproc, or Microsoft Azure HDInsight are options in a cloud-based environment.

When deployed with Hadoop YARN or Apache Mesos, Spark can share resources with other applications and benefit from centralized, fine-grained resource management.

Ecosystem

Kafka has many tools in its ecosystem, the details of which are as follows:

- Kafka Connect: A framework for managing connectors to integrate Kafka with external systems.
- Kafka Streams: A client library for building real-time stream processing applications on top of Kafka.
- Kafka MirrorMaker: Allows replicating data between Kafka clusters for backup purposes.
- Kafka REST Proxy: Provides a RESTful interface for interacting with Kafka over HTTP.

Spark also has a diverse ecosystem which is as follows:

- Spark SQL: Allows SQL queries using DataFrame APIs.
- Spark Streaming: Enables analyzing real-time streaming data.
- MLlib: A machine learning library for modeling.
- GraphX: A graph processing library for analyzing graph data.

Connectors

Apache Spark and Apache Kafka have connectors that enable integration and data exchange between the two technologies. Apache Spark provides the Kafka Direct Stream API, which allows Spark Streaming applications to consume data directly from Kafka topics. It provides an interface for reading Kafka data and performing various transformations and analytics using Spark.

On the other hand, Apache Kafka offers Kafka Connect, a framework for building and managing connectors that facilitate data integration between Kafka and other systems. DoubleCloud provides custom-built connectors for Apache Kafka. This enhances the connectivity and data transfer options.

Kafka vs Spark comparison table

| Comparison parameter | Apache Spark | Apache Kafka |
|---|---|---|
| Use cases | Complex data processing, batch processing, real-time stream processing | Real-time data pipelines, distributed messaging, stream processing |
| Features | High-speed processing, language support (Java, Scala, Python, R), Resilient Distributed Datasets (RDDs) | Publish/subscribe model, distributed and fault-tolerant, data durability and replication |
| Performance | Supports complex operations, efficient resource management, batch and stream processing | High throughput, low latency, scalable design |
| Community & support | Rich community, JIRA, mailing list, and GitHub repositories for support and collaboration | Strong community support, JIRA, mailing list, and GitHub repositories for collaborative development |
| Deployment & management | Can be deployed in standalone mode, on a cloud, or with cluster managers. Provides multiple utilities for monitoring and managing applications | Typically deployed across one or more servers. Comes with several tools for monitoring and managing clusters |
| Security | Supports Kerberos authentication and SSL encryption for data transfer | Supports SASL authentication (e.g., PLAIN, SCRAM) and Access Control Lists for user-level permissions |
| Ecosystem | Spark SQL, Spark Streaming, MLlib, GraphX | Kafka Connect, Kafka Streams, Kafka MirrorMaker, Kafka REST Proxy |
| Pricing | t2.small EC2 instance: $0.05 per hour software cost plus $0.023 per hour EC2 cost, totaling $0.073 per hour | kafka.m5.large broker instance on Amazon MSK (2 vCPUs, 8 GiB of memory): $0.21 per hour |
| Key performance indicators | Speed, fault tolerance, and scalability | Speed, fault tolerance, and scalability |
| Integration | Spark Streaming module for data consumption | Kafka Connect for data integration |

Pros and cons of Apache Kafka

Pros of Kafka

- Real-time streaming is the most prominent benefit of Kafka.
- Kafka is highly scalable because it distributes data across message brokers and partitions within those brokers.
- Kafka replicates data, which makes it fault-tolerant and durable.

Cons of Kafka

- Configuring clusters can take a lot of work for beginners; the required technical expertise comes only with learning and experience.
- Fault tolerance through replication increases storage costs.
- Kafka requires many monitoring tools for management, which adds to the system’s complexity.

Pros and cons of Apache Spark

Pros of Spark

- Speed is the number one benefit of Spark. It can run up to 100 times faster than MapReduce for in-memory workloads.
- Spark provides APIs for multiple languages, plus libraries such as MLlib for machine learning.
- It is highly scalable because it distributes data across a cluster for computation.

Cons of Spark

- Spark works in memory, so it requires significant memory when working on large datasets.
- It does not have its own file management system and depends on other platforms like Hadoop.
- Configuring Spark clusters can be complex.
- Developing technical expertise in Spark involves a learning curve.

How does DoubleCloud help you with Apache Kafka?

While Apache Kafka is flexible, deploying it comes with various challenges:

- Setting up Kafka requires establishing at least one data center, configuring security protocols, and managing servers.
- It requires adequate physical machines to ensure durability.
- A dedicated staff is required for regular system maintenance.

Fulfilling these requirements takes time and effort. Hence, opting for a fully managed service like DoubleCloud can be a game-changer.

The DoubleCloud Managed Service for Apache Kafka streamlines your Apache Kafka management, improving efficiency and security. It handles Kafka cluster configuration, relieving you of routine tasks. Built on the original Apache Kafka distribution, it’s user-friendly and runs in your AWS account, keeping computations and data under your control.

Additionally, DoubleCloud ensures high security via TLS-encrypted connections and SASL-authenticated access control lists (ACL) to topics. It’s fault-tolerant, distributing brokers across availability zones and setting up a replication factor for high availability.

With DoubleCloud, you can also run real-time analytics by delivering large amounts of data directly to ClickHouse without additional development.

Final words. Spark VS Kafka: What to choose?

Apache Spark and Apache Kafka complement each other in big data processing.

Kafka’s key strength is managing real-time data flows. Once the data is available, Apache Spark is used for complex data processing; its key strength is processing and interpreting data quickly.

Although they are distinct technologies, Spark and Kafka can be combined to leverage their strengths: Kafka efficiently captures and stores streaming data, and Spark processes it. Together, they enable comprehensive big data solutions.


Frequently asked questions (FAQ)

Can Kafka and Spark be used together?


Yes, Kafka and Spark can be used together to build big data processing systems. Kafka collects real-time data and stores it in a distributed manner, and Spark processes that data and provides analytics based on it.

How do Kafka and Spark handle real-time streaming data?


How does the architecture of Kafka and Spark differ?


What are the considerations for choosing between Kafka and Spark?


What are the potential challenges or limitations of Kafka and Spark?

